Lossless Image Compression - PowerPoint PPT Presentation


1

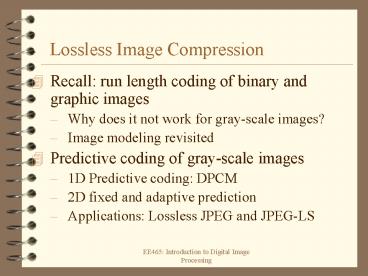

Lossless Image Compression

- Recall: run-length coding of binary and graphic images
  - Why does it not work for gray-scale images?
- Image modeling revisited
- Predictive coding of gray-scale images
  - 1D predictive coding: DPCM
  - 2D fixed and adaptive prediction
- Applications: Lossless JPEG and JPEG-LS

2

Lossless Image Compression

- No information loss, i.e., the decoded image is mathematically identical to the original image
- For some sensitive data, such as document or medical images, information loss is simply unbearable
- For others, such as photographic images, we only care about the subjective quality of the decoded images (not their fidelity to the original)

3

Data Compression Paradigm

Diagram: discrete source X → source modeling → Y → entropy coding → binary bit stream; probability estimation supplies P(Y) to the entropy coder.

Probabilities can be estimated by counting relative frequencies, either online or offline.

The art of data compression is the art of source modeling.
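The difference between offline and online estimation can be shown with a small sketch. It assumes hashable symbols and, for the online case, a Laplace-style initialization where every count starts at 1. That initialization is a common choice, not something the slide specifies. The online estimator only uses symbols that have already been coded, so a decoder can repeat the same updates.

```python
from collections import Counter
from math import log2


def offline_probabilities(symbols):
    """Offline estimation: count relative frequencies over the whole sequence."""
    counts = Counter(symbols)
    total = len(symbols)
    return {s: c / total for s, c in counts.items()}


def online_code_length(symbols, alphabet_size):
    """Online estimation: ideal code length (bits) when each probability is
    estimated only from the symbols already seen.

    Counts start at 1 (an assumed Laplace-style initialization), so an unseen
    symbol never gets probability zero. A decoder can repeat exactly the same
    updates, so no probability table has to be transmitted.
    """
    counts = Counter()
    seen = 0
    bits = 0.0
    for s in symbols:
        p = (counts[s] + 1) / (seen + alphabet_size)
        bits -= log2(p)   # ideal code length of s under the current estimate
        counts[s] += 1    # update the model only after s has been "coded"
        seen += 1
    return bits
```

The offline estimate has to be sent to the decoder, or agreed on in advance. The online estimate adapts as it goes, but it starts out poorly.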

4

Recall Run Length Coding

Diagram: discrete source X → transformation by run-length counting → Y → Huffman coding → binary bit stream; probability estimation supplies P(Y) to the Huffman coder.

Y is the sequence of run-lengths, from which X can be recovered losslessly.
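The run-length transformation and its inverse can be sketched as follows. The sketch assumes a binary source with symbols 0 and 1. It also assumes the first symbol is transmitted together with the run lengths, which is one possible convention among several. The Huffman coding of Y is not shown.

```python
from itertools import groupby


def run_lengths(bits):
    """Transform a binary sequence X into (first symbol, run-lengths Y)."""
    if not bits:
        return None, []
    runs = [len(list(group)) for _, group in groupby(bits)]
    return bits[0], runs


def from_run_lengths(first, runs):
    """Recover X exactly: consecutive runs alternate between 0 and 1."""
    out = []
    symbol = first
    for length in runs:
        out.extend([symbol] * length)
        symbol = 1 - symbol  # binary source: toggle 0 <-> 1
    return out


x = [0, 0, 0, 1, 1, 0, 1, 1, 1, 1]
first, y = run_lengths(x)
print(first, y)  # 0 [3, 2, 1, 4]
assert from_run_lengths(first, y) == x
```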

5

Image Example

8×8 block of pixel values (rows top to bottom, columns left to right):

156 159 158 155 158 156 159 158
160 154 157 158 157 159 158 158
156 159 158 155 158 156 159 158
160 154 157 158 157 159 158 158
156 153 155 159 159 155 156 155
155 155 155 157 156 159 152 158
156 153 157 156 153 155 154 155
159 159 156 158 156 159 157 161

Run max = 4
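The longest run can be checked with a short sketch. It assumes the block is scanned in raster order, row by row, with runs allowed to continue across row boundaries. Under that assumption it reproduces Run max = 4: the last pixel of row 5 and the first three pixels of row 6 are all 155. Within any single row, the longest run is only 3.

```python
block = [
    [156, 159, 158, 155, 158, 156, 159, 158],
    [160, 154, 157, 158, 157, 159, 158, 158],
    [156, 159, 158, 155, 158, 156, 159, 158],
    [160, 154, 157, 158, 157, 159, 158, 158],
    [156, 153, 155, 159, 159, 155, 156, 155],
    [155, 155, 155, 157, 156, 159, 152, 158],
    [156, 153, 157, 156, 153, 155, 154, 155],
    [159, 159, 156, 158, 156, 159, 157, 161],
]


def longest_run(values):
    """Length of the longest run of identical consecutive values."""
    best = current = 1
    for prev, cur in zip(values, values[1:]):
        current = current + 1 if cur == prev else 1
        best = max(best, current)
    return best


raster = [v for row in block for v in row]  # row-by-row (raster) scan
print(longest_run(raster))  # 4
```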

6

Why Short Runs?

7

Why is RLC bad for gray-scale images?

- Gray-scale (also called photographic) images have hundreds of different gray levels
- Since gray-scale images are acquired from the real world, noise contamination is inevitable

You simply cannot freely RUN in a gray-scale image.

8

Source Modeling Techniques

- Prediction
  - Predict the future based on the causal past
- Transformation
  - Transform the source into an equivalent yet more convenient representation
- Pattern matching
  - Identify and represent repeated patterns

9

The Idea of Prediction

- Remarkably simple: just follow the trend
  - Example I: X is a normal person's temperature variation through the day
  - Example II: X is the sequence of intensity values in the first row of the cameraman image
- Markovian school (short memory)
  - Prediction does not rely on data from long ago but on the most recent samples (e.g., your temperature in the evening is more correlated with that in the afternoon than with that in the morning)

10

1D Predictive Coding

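1st-order linear prediction: the original samples $x_1, x_2, \dots, x_N$ are mapped to prediction residues $y_1, y_2, \dots, y_N$.

- Encoding ($x_1, \dots, x_N \rightarrow y_1, \dots, y_N$)
  - Initialize: $y_1 = x_1$
  - Prediction: $y_n = x_n - x_{n-1}$, $n = 2, \dots, N$
- Decoding ($y_1, \dots, y_N \rightarrow x_1, \dots, x_N$)
  - Initialize: $x_1 = y_1$
  - Prediction: $x_n = y_n + x_{n-1}$, $n = 2, \dots, N$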
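The encoding and decoding rules map directly to code. The sketch below uses 0-based Python lists, so x[0] plays the role of $x_1$. The decoder only uses samples it has already reconstructed, which is why the round trip is exact. The sample values come from the numerical example that follows.

```python
def dpcm_encode(x):
    """1st-order DPCM: y_1 = x_1 and y_n = x_n - x_{n-1} for n >= 2."""
    y = []
    for n, sample in enumerate(x):
        y.append(sample if n == 0 else sample - x[n - 1])
    return y


def dpcm_decode(y):
    """Inverse mapping: x_1 = y_1 and x_n = y_n + x_{n-1} for n >= 2."""
    x = []
    for n, residue in enumerate(y):
        x.append(residue if n == 0 else residue + x[n - 1])
    return x


samples = [90, 92, 91, 93, 93, 95]
residues = dpcm_encode(samples)
print(residues)                          # [90, 2, -1, 2, 0, 2]
assert dpcm_decode(residues) == samples  # lossless round trip
```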

11

Numerical Example

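Original samples: 90 92 91 93 93 95

Prediction residues (a - b, where a is the current sample and b the previous one): 90 2 -1 2 0 2

Decoded samples (a + b, where a is the current residue and b the previously decoded sample): 90 92 91 93 93 95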

12

Image Example (take one row)

Figure: original row signal x (left) and its histogram (right); H(X) = 6.56 bpp

13

Source Entropy Revisited

- How do we calculate the entropy of a given sequence (or image)?
  - Obtain the histogram by relative-frequency counting
  - Normalize the histogram to obtain the probabilities $P_k = \mathrm{Prob}(X = k)$, $k = 0, \dots, 255$
  - Plug the probabilities into the entropy formula $H(X) = -\sum_{k=0}^{255} P_k \log_2 P_k$

You will be asked to implement it in the assignment.
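The three steps can be followed in a short Python sketch (the assignment itself may use a different language). Only gray levels that actually occur are counted. That is equivalent to using a full 0 to 255 histogram, because empty bins contribute $0 \log_2 0 = 0$. An image has to be flattened to a list of samples first.

```python
from collections import Counter
from math import log2


def entropy_bpp(samples):
    """First-order entropy in bits per sample, treating samples as i.i.d."""
    total = len(samples)
    histogram = Counter(samples)                              # relative-frequency counting
    probs = [count / total for count in histogram.values()]  # normalize
    # Empty bins never appear in the Counter, matching 0 * log2(0) = 0.
    return -sum(p * log2(p) for p in probs)


# For an image stored as a list of rows:
# entropy_bpp([v for row in image for v in row])
```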

14

Cautionary Notes

- The entropy value calculated on the previous slide should be understood as the result obtained if we choose to model the image as an independent, identically distributed (i.i.d.) random variable.
- It does not take the spatially correlated and varying characteristics of the image into account.
- The true entropy is smaller!

15

Image Example (cont)

Figure: prediction residue signal y (left) and its histogram (right); H(Y) = 4.80 bpp

16

Interpretation

- H(Y) < H(X) justifies the role of prediction (intuitively, it decorrelates the signal).
- Similarly, H(Y) is the result obtained if we choose to model the residue image as an independent, identically distributed (i.i.d.) random variable.
- It is an improved model compared with X, thanks to the prediction.
- The true entropy is smaller!

17

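High-order 1D Predictive Coding

k-th order linear prediction: the original samples $x_1, \dots, x_N$ are mapped to prediction residues $y_1, \dots, y_N$ using the $k$ most recent samples, weighted by prediction coefficients $a_1, \dots, a_k$.

- Encoding
  - Initialize: $y_1 = x_1, y_2 = x_2, \dots, y_k = x_k$
  - Prediction: $y_n = x_n - \sum_{i=1}^{k} a_i x_{n-i}$, $n = k+1, \dots, N$
- Decoding
  - Initialize: $x_1 = y_1, x_2 = y_2, \dots, x_k = y_k$
  - Prediction: $x_n = y_n + \sum_{i=1}^{k} a_i x_{n-i}$, $n = k+1, \dots, N$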
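Here is a sketch of k-th order prediction. The coefficients are hypothetical inputs, since how to choose them is not covered here. The weighted sum is rounded half up so that the residues stay integers. That rounding rule is also an assumption. What matters for losslessness is that the encoder and the decoder apply the same rule to the same past samples. With coeffs = [1], this reduces to the 1st-order DPCM above.

```python
import math


def predict(seq, n, coeffs):
    """Integer prediction of seq[n] from sum_i a_i * seq[n - i], i = 1..k.

    Rounding half up keeps residues integral; the rule is an assumption and
    only has to be identical in the encoder and the decoder.
    """
    value = sum(a * seq[n - i] for i, a in enumerate(coeffs, start=1))
    return math.floor(value + 0.5)


def lp_encode(x, coeffs):
    k = len(coeffs)
    y = list(x[:k])                   # initialize: y_i = x_i for the first k samples
    for n in range(k, len(x)):        # 0-based index n corresponds to x_{n+1}
        y.append(x[n] - predict(x, n, coeffs))
    return y


def lp_decode(y, coeffs):
    k = len(coeffs)
    x = list(y[:k])                   # initialize: x_i = y_i for the first k samples
    for n in range(k, len(y)):
        # x already holds decoded samples 0..n-1, exactly what the encoder used
        x.append(y[n] + predict(x, n, coeffs))
    return x


samples = [90, 92, 91, 93, 93, 95]
print(lp_encode(samples, [2, -1]))  # [90, 92, -3, 3, -2, 2]
```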

18

Why High-order?

- By looking at more past samples, we can make a better prediction of the current one
  - Compare guessing the next letter after c_, ic_, dic_ and predic_
- It is a tradeoff between performance and complexity
  - The performance gain quickly diminishes as the order increases
  - The optimal order is often signal-dependent

19

1D Predictive Coding Summary

Diagram: discrete source X → linear prediction → Y → entropy coding → binary bit stream; probability estimation supplies P(Y) to the entropy coder.

The prediction residue sequence Y usually contains less uncertainty (entropy) than the original sequence X.

20

From 1D to 2D

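Figure: in 1D, the samples of X(n) before the current position n form the causal past and those after it the future; in 2D, the pixels must first be ordered, e.g., by raster scanning or zigzag scanning.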
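The two scanning orders can be generated as lists of (row, column) positions. The sketch assumes "zigzag" means the JPEG-style diagonal zigzag, which alternates direction on each anti-diagonal. The slide may intend a different pattern. The scanning order determines which pixels count as the causal past when a pixel is predicted.

```python
def raster_order(rows, cols):
    """Row by row, left to right."""
    return [(r, c) for r in range(rows) for c in range(cols)]


def zigzag_order(rows, cols):
    """JPEG-style diagonal zigzag (an assumed reading of 'zigzag scanning')."""
    order = []
    for s in range(rows + cols - 1):
        # all cells on the anti-diagonal r + c == s, top-right to bottom-left
        cells = [(r, s - r) for r in range(max(0, s - cols + 1), min(rows, s + 1))]
        if s % 2 == 0:
            cells.reverse()  # even diagonals run bottom-left to top-right
        order.extend(cells)
    return order


print(zigzag_order(3, 3))
# [(0, 0), (0, 1), (1, 0), (2, 0), (1, 1), (0, 2), (1, 2), (2, 1), (2, 2)]
```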

21

2D Predictive Coding

Figure: with the raster scanning order, the pixels visited before $X_{m,n}$ form its causal half-plane.

22

Ordering Causal Neighbors

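Figure: the causal neighbors of $X_{m,n}$, numbered 1 to 6 in order of increasing distance.

$\hat{X}_{m,n} = \sum_{k=1}^{K} a_k X_k$, with prediction residue $Y_{m,n} = X_{m,n} - \hat{X}_{m,n}$,

where $X_k$ is the $k$-th nearest causal neighbor of $X_{m,n}$ in terms of Euclidean distance.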
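The ordering of causal neighbors can be computed by sorting candidate offsets by Euclidean distance. Here "causal" is taken to mean already visited in raster order. Pixels at equal distance need a tie-break, and the sketch uses the (dm, dn) tuple order. That choice is arbitrary, so the figure may number tied neighbors (for example north and west) the other way round.

```python
def causal_neighbors(count):
    """Offsets (dm, dn) of the `count` nearest causal neighbors of X[m][n].

    Causal means already visited in raster order: any pixel in an earlier row
    (dm < 0), or a pixel to the left in the current row (dm == 0, dn < 0).
    Ties in Euclidean distance are broken by (dm, dn), an arbitrary choice.
    """
    # The current row alone holds `count` causal pixels within distance `count`,
    # so a search box of that radius always contains the answer.
    r = count
    offsets = [
        (dm, dn)
        for dm in range(-r, 1)
        for dn in range(-r, r + 1)
        if dm < 0 or dn < 0
    ]
    offsets.sort(key=lambda d: (d[0] ** 2 + d[1] ** 2, d))
    return offsets[:count]


print(causal_neighbors(6))
# [(-1, 0), (0, -1), (-1, -1), (-1, 1), (-2, 0), (0, -2)]
```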

23

Lossless JPEG

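The predicted pixel x has its north neighbor n and north-west neighbor nw in the row above, and its west neighbor w to its left:

nw  n
w   x

| Selector | Predictor | Notes |
|---|---|---|
| 1 | w | horizontal |
| 2 | n | vertical |
| 3 | nw | diagonal |
| 4 | n + w - nw | 3rd-order |
| 5 | w + (n - nw)/2 | 3rd-order |
| 6 | n + (w - nw)/2 | 3rd-order |
| 7 | (n + w)/2 | 2nd-order |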
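The seven predictors in the table can be written as a single function. The sketch takes "/2" to be an arithmetic right shift (>> 1), which floors, including for negative values. That rounding rule is an assumption made for this sketch. The usage line applies all seven predictors to a horizontal edge (nw = 100, n = 100, w = 50, x = 50).

```python
def ljpeg_predict(w, n, nw, selector):
    """Lossless JPEG prediction of pixel x from its neighbors (selector 1-7).

    The /2 of the table is taken here as an arithmetic right shift (>> 1),
    which floors; this rounding rule is an assumption of the sketch.
    """
    if not 1 <= selector <= 7:
        raise ValueError("selector must be in 1..7")
    candidates = (
        w,                       # 1: horizontal
        n,                       # 2: vertical
        nw,                      # 3: diagonal
        n + w - nw,              # 4: 3rd-order
        w + ((n - nw) >> 1),     # 5: 3rd-order
        n + ((w - nw) >> 1),     # 6: 3rd-order
        (n + w) >> 1,            # 7: 2nd-order
    )
    return candidates[selector - 1]


# Residue x - x_hat for every selector on a horizontal edge
print([50 - ljpeg_predict(50, 100, 100, s) for s in range(1, 8)])
# [0, -50, -50, 0, 0, -25, -25]
```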

24

Numerical Examples

1D (residue a - b: current sample minus previous sample)

X = 156 159 158 155
Y = 156 3 -1 -3

2D, horizontal predictor (initialization: no prediction is applied to the first column)

X =
156 159 158 155
160 154 157 158
156 159 158 155
160 154 157 158

Y =
156 3 -1 -3
160 -6 3 1
156 3 -1 -3
160 -6 3 1

Note: 2D horizontal prediction can be viewed as the vector case of 1D prediction applied to each row.

25

Numerical Examples (Cont)

Vertical predictor (initialization: no prediction is applied to the first row)

X =
156 159 158 155
160 154 157 158
156 159 158 155
160 154 157 158

Y =
156 159 158 155
4 -5 -1 3
-4 5 1 -3
4 -5 -1 3

Note: 2D vertical prediction can be viewed as the vector case of 1D prediction applied to each column.

Q: Given a function for horizontal prediction, can you use it to implement vertical prediction?
A: Apply horizontal prediction to the transpose of the image, then transpose the prediction residue back.
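The transpose trick from the answer above can be written out directly. The sketch assumes images are stored as lists of rows, and it keeps the first column unchanged, matching the initialization in the horizontal example. The 4×4 block is the X from the two numerical examples.

```python
def horizontal_residue(image):
    """Horizontal prediction: each pixel minus its west neighbor.
    The first column is kept unchanged (no prediction applied)."""
    return [[row[0]] + [row[j] - row[j - 1] for j in range(1, len(row))]
            for row in image]


def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def vertical_residue(image):
    """Vertical prediction via the transpose trick: transpose, predict
    horizontally, then transpose the residue back."""
    return transpose(horizontal_residue(transpose(image)))


X = [
    [156, 159, 158, 155],
    [160, 154, 157, 158],
    [156, 159, 158, 155],
    [160, 154, 157, 158],
]
print(horizontal_residue(X)[1])  # [160, -6, 3, 1]
print(vertical_residue(X)[1])    # [4, -5, -1, 3]
```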

26

Image Examples

Comparison of residue images generated by different predictors:

- Horizontal predictor: H(Y) = 5.05 bpp
- Vertical predictor: H(Y) = 4.67 bpp

Q: Why does the vertical predictor outperform the horizontal predictor?

27

Analysis with a Simplified Edge Model

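Each 2×2 neighborhood is laid out as

nw  n
w   x

H_edge (horizontal edge):
100 100
50 50

V_edge (vertical edge):
100 50
100 50

(The patterns 50 50 / 100 100 and 50 100 / 50 100 are the same edges with opposite polarity.)

- H_predictor ($\hat{x} = w$): Y = 0 on H_edge, Y = ±50 on V_edge
- V_predictor ($\hat{x} = n$): Y = ±50 on H_edge, Y = 0 on V_edge

Conclusion: when the direction of the predictor matches the direction of the edges, the prediction residues are small.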
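The edge model can be checked by computing both fixed predictors on the two 2×2 patterns. The sketch assumes the nw n / w x layout and stores each pattern as (nw, n, w, x). The opposite-polarity patterns flip the sign of the nonzero residues, which is where the ±50 comes from.

```python
# Each 2x2 neighborhood is written as (nw, n, w, x), i.e.
#   nw  n
#   w   x
H_EDGE = (100, 100, 50, 50)   # bright row above, dark row below
V_EDGE = (100, 50, 100, 50)   # bright column on the left, dark column on the right


def edge_residues(block):
    """Residues of the fixed horizontal (x_hat = w) and vertical (x_hat = n) predictors."""
    _nw, n, w, x = block
    return {"H_predictor": x - w, "V_predictor": x - n}


print(edge_residues(H_EDGE))  # {'H_predictor': 0, 'V_predictor': -50}
print(edge_residues(V_EDGE))  # {'H_predictor': -50, 'V_predictor': 0}
```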

28

Horizontal vs. Vertical

Do we see more vertical edges than horizontal edges in natural images? Maybe yes, but why?

29

Importance of Adaptation

- Wouldn't it be nice if we could switch the direction of the predictor to locally match the edge direction?
- The concept of adaptation was conceived several thousand years ago, in an ancient Chinese story about how to win a horse race

Figure: the emperor's good, fair and poor horses (rated 90, 70 and 50) race against the general's (rated 80, 60 and 40). How can the general win?

30

Median Edge Detection (MED) Prediction

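Figure: the causal neighbors nw and n (row above) and w (left) of the current pixel x.

$\hat{x} = \mathrm{median}(n, w, n + w - nw)$

Key: MED uses the median operator to adaptively select one of three candidates (Predictors 1, 2 and 4 of the Lossless JPEG predictor table) as the predicted value.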
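The MED rule and a residue image built from it can be sketched as follows. Images are lists of rows. The first row and first column lack some causal neighbors, and they are kept unchanged here. That boundary convention is an assumption borrowed from the "no prediction applied" initialization used earlier, and it is not the JPEG-LS rule.

```python
def med_predict(n, w, nw):
    """MED prediction: the median of the three candidates n, w and n + w - nw."""
    return sorted((n, w, n + w - nw))[1]


def med_residues(image):
    """Residue image for MED. Pixels without all three causal neighbors
    (first row and first column) are kept as-is, an assumed convention."""
    out = [row[:] for row in image]
    for m in range(1, len(image)):
        for col in range(1, len(image[m])):
            pred = med_predict(image[m - 1][col],      # n
                               image[m][col - 1],      # w
                               image[m - 1][col - 1])  # nw
            out[m][col] = image[m][col] - pred
    return out


print(med_residues([[100, 100], [50, 50]]))  # [[100, 100], [50, 0]]
print(med_residues([[100, 50], [100, 50]]))  # [[100, 50], [100, 0]]
```

Both edge patterns leave a zero residue at x, whichever way the edge runs.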

31

Another Way of Implementation

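With the same neighbors nw, n and w of the current pixel x:

If $nw \ge \max(n, w)$: $\hat{x} = \min(n, w)$
else if $nw \le \min(n, w)$: $\hat{x} = \max(n, w)$
else: $\hat{x} = n + w - nw$

Q: Which one is faster? You need to find out yourself using MATLAB.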

32

Proof by Enumeration

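Case 1: $nw > \max(n, w)$

- If $nw > n > w$, then $n - nw < 0$ and $w - nw < 0$, so $n + w - nw < w < n$ and $\mathrm{median}(n, w, n + w - nw) = \min(n, w) = w$
- If $nw > w > n$, then $n - nw < 0$ and $w - nw < 0$, so $n + w - nw < n < w$ and $\mathrm{median}(n, w, n + w - nw) = \min(n, w) = n$

Case 2: $nw < \min(n, w)$

- If $nw < n < w$, then $n - nw > 0$ and $w - nw > 0$, so $n + w - nw > w > n$ and $\mathrm{median}(n, w, n + w - nw) = \max(n, w) = w$
- If $nw < w < n$, then $n - nw > 0$ and $w - nw > 0$, so $n + w - nw > n > w$ and $\mathrm{median}(n, w, n + w - nw) = \max(n, w) = n$

Case 3: $n < nw < w$ or $w < nw < n$

- $n + w - nw$ also lies between $n$ and $w$, so $\mathrm{median}(n, w, n + w - nw) = n + w - nw$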
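The proof can be complemented by an exhaustive check on a small range of integers. The sketch below compares the median form with the if/else form. The range also covers the tie cases, where nw equals n or w, or n equals w, which the three strict cases above do not spell out. This checks a finite range only and is not a replacement for the proof.

```python
from itertools import product


def med_median(n, w, nw):
    """Median form of MED."""
    return sorted((n, w, n + w - nw))[1]


def med_branch(n, w, nw):
    """If/else form of MED."""
    if nw >= max(n, w):
        return min(n, w)
    if nw <= min(n, w):
        return max(n, w)
    return n + w - nw


# Enumerate every (n, w, nw) triple in a small range, ties included.
values = range(-4, 5)
mismatches = [t for t in product(values, repeat=3) if med_median(*t) != med_branch(*t)]
print(len(mismatches))  # 0
```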

33

Numerical Examples

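H_edge (nw n / w x = 100 100 / 50 50): n = 100, w = 50, nw = 100, so n + w - nw = 50 and median(100, 50, 50) = 50 = x

V_edge (nw n / w x = 100 50 / 100 50): n = 50, w = 100, nw = 100, so n + w - nw = 50 and median(50, 100, 50) = 50 = x

Note how we get zero prediction residues regardless of the edge direction.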

34

Image Example

Fixed vertical predictor: H = 4.67 bpp

Adaptive (MED) predictor: H = 4.55 bpp

35

JPEG-LS (the new standard for lossless image compression)

36

Summary of Lossless Image Compression

- Importance of modeling the image source
  - Different classes of images need to be handled by different modeling techniques, e.g., RLC for binary/graphic images and prediction for photographic images
- Importance of geometry
  - Images are two-dimensional signals
  - In the 2D world, issues such as scanning order and orientation are critical to modeling