Chapter 11: Analytical Learning - PowerPoint PPT Presentation

1

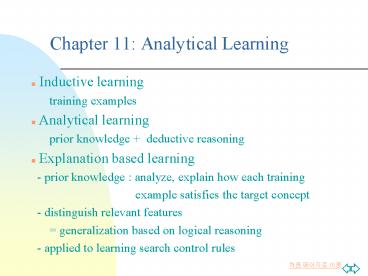

Chapter 11: Analytical Learning

- Inductive learning
  - training examples
- Analytical learning
  - prior knowledge + deductive reasoning
- Explanation-based learning
  - uses prior knowledge to analyze, or explain, how each training example satisfies the target concept
  - distinguishes the relevant features of each example
  - generalizes based on logical reasoning
  - has been applied to learning search control rules

2

Introduction

- Inductive learning
  - performs poorly when the training data are insufficient
- Explanation-based learning
  - (1) accepts explicit prior knowledge
  - (2) generalizes more accurately than inductive systems
  - (3) uses prior knowledge to reduce the complexity of the hypothesis space
    - reduces sample complexity
    - improves generalization accuracy

3

- Task: learning to play chess
  - target concept
    - chessboard positions in which black will lose its queen within two moves
  - human learners
    - explain/analyze the training examples using prior knowledge
  - prior knowledge: the legal rules of chess

4

- Chapter summary
  - learning algorithms that automatically construct explanations and learn from them
  - analytical learning problem definition
  - PROLOG-EBG algorithm
  - general properties and relationship to inductive learning algorithms
  - application to improving performance on large state-space search problems

5

Inductive Generalization Problem

- Given
  - Instances
  - Hypotheses
  - Target concept
  - Training examples of the target concept
- Determine
  - Hypotheses consistent with the training examples

6

Analytical Generalization Problem

- Given
  - Instances
  - Hypotheses
  - Target concept
  - Training examples
  - Domain theory
- Determine
  - Hypotheses consistent with the training examples and the domain theory

7

Example of an analytical learning problem

- Instance space: each instance describes a pair of objects
  - predicates: Color, Volume, Owner, Material, Density, On
- Hypothesis space H: Horn clause rules such as
  - SafeToStack(x,y) ← Volume(x,vx) ∧ Volume(y,vy) ∧ LessThan(vx,vy)
- Target concept: SafeToStack(x,y)
  - pairs of physical objects such that one can be stacked safely on the other

8

- Training example (a positive example of SafeToStack(Obj1, Obj2))
  - On(Obj1, Obj2)
  - Owner(Obj1, Fred)
  - Type(Obj1, Box)
  - Owner(Obj2, Louise)
  - Type(Obj2, Endtable)
  - Density(Obj1, 0.3)
  - Color(Obj1, Red)
  - Material(Obj1, Cardboard)
  - Color(Obj2, Blue)
  - Material(Obj2, Wood)
  - Volume(Obj1, 2)
- Domain theory B
  - SafeToStack(x,y) ← ¬Fragile(y)
  - SafeToStack(x,y) ← Lighter(x,y)
  - Lighter(x,y) ← Weight(x,wx) ∧ Weight(y,wy) ∧ LessThan(wx,wy)
  - Weight(x,w) ← Volume(x,v) ∧ Density(x,d) ∧ Equal(w,times(v,d))
  - Weight(x,5) ← Type(x,Endtable)
  - Fragile(x) ← Material(x,Glass)
  - ...
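
The domain theory above can be exercised on the training example with a small Python sketch. The dictionary layout and the function names (`weight`, `fragile`, `lighter`, `safe_to_stack`) are assumptions made for illustration; only the predicates and values come from the slide. The first rule is read here as SafeToStack(x,y) ← ¬Fragile(y), with negation treated as "not provable from the facts". This is a hand-coded reading of the listed clauses, not a general Horn clause prover.

```python
# Facts for the training example; attribute names follow the slide's predicates.
FACTS = {
    "Obj1": {"Type": "Box", "Owner": "Fred", "Color": "Red",
             "Material": "Cardboard", "Volume": 2, "Density": 0.3},
    "Obj2": {"Type": "Endtable", "Owner": "Louise", "Color": "Blue",
             "Material": "Wood"},
}


def weight(obj):
    """Weight(x,w) <- Volume(x,v), Density(x,d), Equal(w,times(v,d));
    Weight(x,5) <- Type(x,Endtable). Returns None if neither rule applies."""
    attrs = FACTS[obj]
    if "Volume" in attrs and "Density" in attrs:
        return attrs["Volume"] * attrs["Density"]
    if attrs.get("Type") == "Endtable":
        return 5
    return None


def fragile(obj):
    """Fragile(x) <- Material(x,Glass)."""
    return FACTS[obj].get("Material") == "Glass"


def lighter(x, y):
    """Lighter(x,y) <- Weight(x,wx), Weight(y,wy), LessThan(wx,wy)."""
    wx, wy = weight(x), weight(y)
    return wx is not None and wy is not None and wx < wy


def safe_to_stack(x, y):
    """SafeToStack(x,y) <- not Fragile(y);  SafeToStack(x,y) <- Lighter(x,y)."""
    return (not fragile(y)) or lighter(x, y)


print(lighter("Obj1", "Obj2"), safe_to_stack("Obj1", "Obj2"))
```

The `lighter` call follows the chain Volume and Density to Weight for Obj1 and Type to Weight for Obj2; Color and Owner are never consulted, so the explanation treats them as irrelevant.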

9

- Domain theory B
  - explains why certain pairs of objects can be safely stacked on one another
  - is described by a collection of Horn clauses
    - enables the system to incorporate any learned hypotheses into subsequent domain theories

10

Learning with Perfect Domain Theories: PROLOG-EBG

- Correct: each assertion in the domain theory is a truthful statement
- Complete: the domain theory covers every positive example
- Is a perfect domain theory available?
  - Yes
- Why does the learner need to learn when a perfect domain theory is given?

11

PROLOG-EBG

- Operation
  - (1) Learning a single Horn clause rule
  - (2) Removing the positive examples covered by this rule
  - (3) Iterating this process on the remaining positive examples
- Given a complete and correct domain theory
  - → outputs a hypothesis that is correct and covers the observed positive training examples

12

PROLOG-EBG Algorithm

- PROLOG-EBG(TargetConcept, TrainingExamples, DomainTheory)
  - LearnedRules ← {}
  - Pos ← the positive examples from TrainingExamples
  - for each PositiveExample in Pos that is not covered by LearnedRules, do
    - 1. Explain
      - Explanation ← an explanation in terms of the DomainTheory that PositiveExample satisfies the TargetConcept
    - 2. Analyze
      - SufficientConditions ← the most general set of features of PositiveExample sufficient to satisfy the TargetConcept according to the Explanation
    - 3. Refine
      - LearnedRules ← LearnedRules + NewHornClause, where NewHornClause is of the form TargetConcept ← SufficientConditions
  - Return LearnedRules
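
The Explain, Analyze, Refine loop above can be sketched in Python as follows. This is a minimal skeleton under stated assumptions: `explain`, `analyze`, and `covers` are hypothetical callables supplied by the caller, and the toy versions shown here only pick out a fixed set of relevant attributes rather than building a real proof or computing a weakest preimage.

```python
def prolog_ebg(positive_examples, explain, analyze, covers):
    """Sequential covering loop: learn one rule per uncovered positive example."""
    learned_rules = []
    for example in positive_examples:
        # Skip examples already covered by a previously learned rule.
        if any(covers(rule, example) for rule in learned_rules):
            continue
        explanation = explain(example)         # 1. Explain
        rule = analyze(example, explanation)   # 2. Analyze
        learned_rules.append(rule)             # 3. Refine
    return learned_rules


# Toy stand-ins (hypothetical): the "explanation" is just the set of
# attributes a domain theory would mention; a real system builds a proof.
RELEVANT = {"type_y"}


def explain(example):
    return RELEVANT


def analyze(example, explanation):
    # Keep only the attribute values mentioned by the explanation.
    return frozenset((attr, example[attr]) for attr in explanation)


def covers(rule, example):
    return all(example.get(attr) == value for attr, value in rule)


examples = [
    {"color_x": "Red", "type_y": "Endtable"},
    {"color_x": "Blue", "type_y": "Endtable"},  # covered by the first rule
    {"color_x": "Red", "type_y": "Shelf"},
]
rules = prolog_ebg(examples, explain, analyze, covers)
print(rules)
```

The second example produces no new rule because the rule learned from the first already covers it, which is the sense in which the algorithm removes covered positive examples.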

13

(No Transcript)

14

- Weakest preimage
  - The weakest preimage of a conclusion C with respect to a proof P is the most general set of initial assertions A such that A entails C according to P
- The most general rule
  - SafeToStack(x,y) ← Volume(x,vx) ∧ Density(x,dx) ∧ Equal(wx,times(vx,dx)) ∧ LessThan(wx,5) ∧ Type(y,Endtable)
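
To see what the general rule above buys, it can be written as a Python predicate and applied to instances other than the training example. The dictionary representation and the name `learned_safe_to_stack` are assumptions for illustration; the conditions themselves are the ones in the rule.

```python
def learned_safe_to_stack(x, y):
    """SafeToStack(x,y) <- Volume(x,vx), Density(x,dx), Equal(wx,times(vx,dx)),
    LessThan(wx,5), Type(y,Endtable)."""
    if "Volume" not in x or "Density" not in x:
        return False
    wx = x["Volume"] * x["Density"]
    return wx < 5 and y.get("Type") == "Endtable"


endtable = {"Type": "Endtable", "Color": "Blue", "Owner": "Louise"}

# The original training example satisfies the rule.
print(learned_safe_to_stack({"Volume": 2, "Density": 0.3, "Color": "Red"}, endtable))

# So does an unseen object with a different color, owner, volume and density.
print(learned_safe_to_stack(
    {"Volume": 4, "Density": 1.0, "Color": "Green", "Owner": "Ann"}, endtable))

# A heavier object, or a second object that is not an end table, does not.
print(learned_safe_to_stack({"Volume": 10, "Density": 1.0}, endtable))
print(learned_safe_to_stack({"Volume": 2, "Density": 0.3}, {"Type": "Box"}))
```

The rule mentions neither Color nor Owner, and it replaces the specific values 2 and 0.3 with the constraint that their product be less than 5.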

15

(No Transcript)

16

(No Transcript)

17

Remarks on Explanation-Based Learning

- Properties
  - (1) produces justified general hypotheses by using prior knowledge
  - (2) the explanation determines which attributes are relevant
  - (3) regressing the target concept allows deriving more general constraints
  - (4) each learned Horn clause is a sufficient condition for satisfying the target concept
  - (5) implicitly assumes a complete and correct domain theory
  - (6) the generality of the learned Horn clauses depends on the formulation of the domain theory

18

- Perspectives on explanation-based learning (EBL)
  - (1) EBL as theory-guided generalization of examples
  - (2) EBL as example-guided reformulation of theories
  - (3) EBL as just restating what the learner already knows
- Knowledge compilation
  - reformulates the domain theory to produce general rules that classify examples in a single inference step
  - the transformation is an efficiency-improving task that does not alter the correctness of the system's knowledge

19

Characteristics

- Discovering new features
  - a learned feature is similar to the features represented by the hidden units of neural networks
- Deductive learning
  - the background knowledge of ILP enlarges the set of hypotheses
  - the domain theory reduces the set of acceptable hypotheses
- Inductive bias
  - inductive bias of PROLOG-EBG: the domain theory B
  - approximate inductive bias of PROLOG-EBG
    - the domain theory B
    - plus a preference for small sets of maximally general Horn clauses

20

- LEMMA-ENUMERATOR algorithm
  - enumerates all proof trees
  - for each proof tree, calculates the weakest preimage and constructs a Horn clause
  - ignores the training data
  - outputs a superset of the Horn clauses output by PROLOG-EBG
- Role of training data
  - focuses the algorithm on generating rules that cover the distribution of instances that occur in practice
- An observed positive example allows generalizing deductively to other unseen instances
  - IF ((PlayTennis = Yes) ← (Humidity = x)) THEN ((PlayTennis = Yes) ← (Humidity ≤ x))

21

- Knowledge-level learning
  - the learned hypothesis entails predictions that go beyond those entailed by the domain theory
  - deductive closure: the set of all predictions entailed by a set of assertions
- Determinations
  - some attribute of the instance is fully determined by certain other attributes, without specifying the exact nature of the dependency
  - example
    - target concept: people who speak Portuguese
    - domain theory: the language spoken by a person is determined by their nationality
    - training example: Joe, a 23-year-old Brazilian, speaks Portuguese
    - conclusion: all Brazilians speak Portuguese
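
The Portuguese example can be sketched in Python to show how a determination plus one training example yields a rule that the domain theory alone does not entail. The function name `learn_from_determination` and the record fields are hypothetical, chosen only for this illustration.

```python
def learn_from_determination(examples, determining_attr, target_attr):
    """Given that determining_attr determines target_attr, each example fixes
    the target value for every instance sharing its determining value."""
    mapping = {}
    for ex in examples:
        key, value = ex[determining_attr], ex[target_attr]
        if key in mapping and mapping[key] != value:
            # Two examples disagree, so the assumed determination cannot hold.
            raise ValueError(f"determination violated for {key!r}")
        mapping[key] = value
    return mapping


examples = [
    {"name": "Joe", "age": 23, "nationality": "Brazilian", "language": "Portuguese"},
]
rules = learn_from_determination(examples, "nationality", "language")
print(rules)
```

The determination says nothing about which language goes with which nationality; the single example about Joe supplies that, and age and name play no part in the resulting rule.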

22

Explanation-Based Learning of Search Control Knowledge

- Speeds up complex search programs
- Complete and correct domain theory for learning search control knowledge
  - definition of the legal search operators
  - definition of the search objective
- Problem
  - find a sequence of operators that will transform an arbitrary initial state S into some final state F that satisfies the goal predicate G
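
The search problem stated above can be made concrete with a small breadth-first search in Python. This is only a sketch of the uninformed baseline that learned control rules are meant to speed up; the toy integer domain, the operator names, and the `max_expansions` cutoff are assumptions introduced for illustration.

```python
from collections import deque


def find_plan(initial_state, operators, goal, max_expansions=10_000):
    """Return a list of operator names leading from initial_state to a state
    satisfying goal, or None if none is found within max_expansions."""
    frontier = deque([(initial_state, [])])
    seen = {initial_state}
    expansions = 0
    while frontier and expansions < max_expansions:
        state, plan = frontier.popleft()
        if goal(state):
            return plan
        expansions += 1
        for name, apply_op in operators:
            successor = apply_op(state)
            if successor not in seen:
                seen.add(successor)
                frontier.append((successor, plan + [name]))
    return None


# Toy domain: states are integers, operators add one or double.
OPERATORS = [("add1", lambda s: s + 1), ("double", lambda s: s * 2)]
plan = find_plan(1, OPERATORS, goal=lambda s: s == 10)
print(plan)
```

Every operator is tried in every state here. Search control knowledge would instead prefer or reject operators in particular states, reducing the number of expansions.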

23

PRODIGY

- Domain-independent planning system
  - finds a sequence of operators that leads from the initial state S to a state that satisfies the goal
- Means-ends planner
  - decomposes problems into subgoals
  - solves them
  - combines their solutions into a solution for the full problem

24

SOAR System

- Supports a broad variety of problem-solving strategies
- Learns by explaining situations in which its current strategy leads to inefficiencies

25

Practical Problems in Applying EBL to Learning Search Control

- The number of control rules that must be learned is very large
  - (1) efficient algorithms for matching rules
  - (2) utility analysis: estimating the computational cost and benefit of each rule
  - (3) identifying types of rules that will be costly to match
    - re-expressing such rules in more efficient forms
    - optimizing the rule-matching algorithm
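
One plausible way to make the cost and benefit comparison in item (2) concrete is to subtract a rule's average match cost from its expected savings. The formula, the `RuleStats` fields, and the numbers below are assumptions for illustration and are not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class RuleStats:
    name: str
    avg_match_cost: float   # average time spent testing the rule's preconditions
    avg_savings: float      # average search time saved when the rule applies
    apply_frequency: float  # fraction of match attempts in which the rule applies


def utility(stats):
    """Estimated net benefit per match attempt (assumed formula)."""
    return stats.avg_savings * stats.apply_frequency - stats.avg_match_cost


def keep_useful(rules):
    """Discard rules whose estimated match cost outweighs their benefit."""
    return [r for r in rules if utility(r) > 0]


rules = [
    RuleStats("prefer-op-A", avg_match_cost=1.0, avg_savings=10.0, apply_frequency=0.5),
    RuleStats("reject-op-B", avg_match_cost=3.0, avg_savings=4.0, apply_frequency=0.5),
]
print([r.name for r in keep_useful(rules)])
```

Under these made-up statistics the second rule costs more to match than it saves on average, so it would be dropped even though it is logically correct.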

26

- Constructing the explanations for the desired target concept is intractable
  - (1) example
    - states for which operator A leads toward the optimal solution
  - (2) lazy or incremental explanation
    - heuristics are used to produce partial or approximate, but tractable, explanations
    - learned rules may be imperfect
    - the performance of each rule is monitored on subsequent cases
    - when an error occurs, the original explanation is elaborated to cover the new case
    - a more refined rule is extracted