Pentium Architecture - PowerPoint PPT Presentation


1

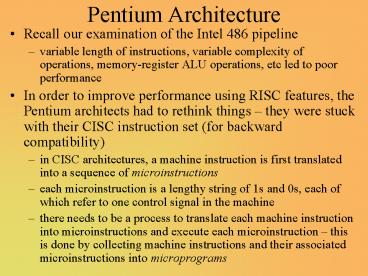

Pentium Architecture

- Recall our examination of the Intel 486 pipeline
  - variable-length instructions, variable complexity of operations, memory-register ALU operations, etc. led to poor performance
- To improve performance using RISC features, the Pentium architects had to rethink things, because they were stuck with their CISC instruction set (for backward compatibility)
  - in CISC architectures, a machine instruction is first translated into a sequence of microinstructions
  - each microinstruction is a lengthy string of 1s and 0s, each of which refers to one control signal in the machine
  - there needs to be a process to translate each machine instruction into microinstructions and execute each microinstruction; this is done by collecting machine instructions and their associated microinstructions into microprograms

2

Why Microinstructions?

- First, since the Pentium architecture uses a microprogrammed control unit, there is already a necessary step of decoding a machine instruction into microcode
- Now, consider each microinstruction
  - each is of equal length
  - each executes in the same amount of time
    - unless a stall arises, for example from a cache miss
  - branches are at the microinstruction level and are more predictable than branches at the machine language level
- In a RISC architecture, each machine instruction is carried out directly in hardware because each instruction is simple and takes roughly 1 cycle to execute
  - to pipeline a CISC architecture more efficiently, we can pipeline the microinstructions (instead of the machine instructions) to keep the pipeline running efficiently

3

Control and Micro-Operations

- An example architecture is shown to the right
  - each of the various connections is controlled by a particular control signal
  - for instance, to send the MBR value to the AC, we would signal C11
  - note that this figure is incomplete
- A microprogram is a sequence of micro-operations
  - each micro-operation is one or more control signals sent out in a clock cycle to move information from one location to another

Note: this is not an x86 architecture!

4

Example

- Consider a CISC instruction such as Add R1, X
  - this requires that X be moved into the MAR and a read signaled
  - the datum returned will be placed into the MBR
  - the adder is then sent the values in R1 and the MBR, adding the two and storing the result back into R1
  - this sequence can be written in terms of micro-operations as
    - t1: MAR ← (IR(address))
    - t2: MBR ← Memory
    - t3: R1 ← (R1) + (MBR)
- Other sequences may be needed as well; for instance, if register results are stored temporarily in an accumulator, then we must change the above to include
  - t3: Acc ← (R1) + (MBR)
  - t4: R1 ← (Acc)
  - we can then convert these into the actual control signals (for instance, MBR ← Memory is C5 in the previous figure)

The values t1, t2, etc. denote separate clock cycles.
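The micro-operation sequence for Add R1, X can be traced with a toy register-transfer model. This is only a sketch: the dictionary-based `state`, the `memory` layout, and the function name `add_r1_x` are illustrative assumptions, not part of the slide's architecture.

```python
def add_r1_x(state, memory):
    """Apply the three micro-operations of Add R1, X, one per clock cycle."""
    # t1: MAR <- (IR(address))
    state["MAR"] = state["IR_address"]
    # t2: MBR <- Memory (read the word addressed by the MAR)
    state["MBR"] = memory[state["MAR"]]
    # t3: R1 <- (R1) + (MBR)
    state["R1"] = state["R1"] + state["MBR"]
    return state


# Hypothetical starting values: X lives at address 0x10 and holds 7.
memory = {0x10: 7}
state = {"IR_address": 0x10, "MAR": 0, "MBR": 0, "R1": 5}
result = add_r1_x(state, memory)
print(result["R1"])  # 12
```

Each assignment stands for one clock cycle; a real control unit would raise control signals rather than copy values in software.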

5

Control Memory

Each micro-program consists of one or more micro-instructions, each stored in a separate entry of the control memory. The control memory itself is firmware, a program stored in ROM, that is placed inside the control unit.

Layout of the control memory (from the figure):

- Fetch cycle routine: ... Jump to Indirect or Execute
- Indirect cycle routine: ... Jump to Execute
- Interrupt cycle routine: ... Jump to Fetch
- Execute cycle begin: Jump to Op code routine
- AND routine: ... Jump to Fetch or Interrupt
- ADD routine: ... Jump to Fetch or Interrupt

Note: each micro-program ends with a branch to the Fetch, Interrupt, Indirect or Execute micro-program.

6

Example of Three Micro-Programs

- Fetch
  - t1: MAR ← (PC), signals C2
  - t2: MBR ← Memory, signals C0, C5, CR; PC ← (PC) + 1, signal C
  - t3: IR ← (MBR), signal C4
- Indirect
  - t1: MAR ← (IR(address)), signal C8
  - t2: MBR ← Memory, signals C0, C5, CR
  - t3: IR(address) ← (MBR(address)), signal C4
- Interrupt
  - t1: MBR ← (PC), signal C1
  - t2: MAR ← save address, signal C; PC ← routine address, signal C
  - t3: Memory ← (MBR), signals C12, CW
- Notation
  - CR: read control to system bus
  - CW: write control to system bus
  - C0-C12 refer to the previous figure
  - C with no number denotes signals not shown in the figure

7

Horizontal vs. Vertical Micro-Instructions

Horizontal micro-instructions contain 1 bit for every control signal controlled by the control unit. Fields:

- Internal CPU Control Signals
- System Bus Control Signals
- Jump Condition
- Micro-instruction Address

Because the horizontal micro-instruction requires 1 bit for every control line, it is longer than the vertical micro-instruction and therefore takes more space to store, but it does not require additional time to decode by the control unit.

Vertical micro-instructions use function codes that need additional decoding. Fields:

- Function Codes
- Jump Condition
- Micro-instruction Address

In both formats, the micro-instruction address points to a branch target in the control memory, and the branch is taken if the condition bit is true.
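The trade-off between the two formats can be sketched in a few lines. The five signal names come from the fetch micro-program, but the bit positions and the function-code table below are hypothetical choices made only for illustration.

```python
# Assumed bit order for a tiny horizontal control word: bit i drives SIGNALS[i].
SIGNALS = ["C0", "C2", "C4", "C5", "CR"]


def decode_horizontal(word):
    """Horizontal format: every set bit drives its control line directly."""
    return [name for i, name in enumerate(SIGNALS) if word & (1 << i)]


# Vertical format: a short function code needs a lookup (the extra decoding step).
FUNCTION_CODES = {
    0: [],
    1: ["C2"],
    2: ["C0", "C5", "CR"],
    3: ["C4"],
}


def decode_vertical(code):
    """Vertical format: translate the function code into control signals."""
    return FUNCTION_CODES[code]


horizontal_word = 0b11001  # bits 0, 3 and 4 set -> C0, C5, CR
print(decode_horizontal(horizontal_word))  # ['C0', 'C5', 'CR']
print(decode_vertical(2))                  # ['C0', 'C5', 'CR']
```

Here the horizontal word needs 5 bits while the vertical code needs only 2, at the cost of the table lookup.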

8

Micro-programmed Control Unit

- Decoder analyzes the IR
  - delivers the starting address of the op code's micro-program in the control store
  - the address is placed into a micro-program counter (here called a Control Address Register)
- Loop on the following
  - sequencer signals a read of control memory using the address in the microPC
  - the item in control memory is moved to the control buffer register
  - the contents of the control buffer register generate control signals and next-address information
    - if the micro-instructions are vertical, decoding is required here
  - sequencer moves the next address to the control address register, which is one of
    - next instruction (add 1 to current)
    - jump to new part of this microprogram
    - jump to new machine routine
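The sequencing loop can be sketched as a small interpreter over a control store. The addresses, the `("next" | "jump" | "halt")` encoding, and the stand-in routine at address 10 are assumptions for illustration; the signal names follow the fetch micro-program.

```python
# Hypothetical control store: address -> (control signals, next-address action).
CONTROL_STORE = {
    0: (["C2"], ("next", None)),              # t1: MAR <- (PC)
    1: (["C0", "C5", "CR"], ("next", None)),  # t2: MBR <- Memory
    2: (["C4"], ("jump", 10)),                # t3: IR <- (MBR), then jump
    10: ([], ("halt", None)),                 # stand-in for an op code routine
}


def run(start):
    """Step the sequencer until a halt entry; return (address, signals) pairs."""
    car = start  # control address register (the micro-program counter)
    trace = []
    while True:
        # Read control memory into the control buffer register.
        signals, (action, target) = CONTROL_STORE[car]
        trace.append((car, signals))
        if action == "next":    # next instruction: add 1 to current address
            car += 1
        elif action == "jump":  # jump to another routine
            car = target
        else:                   # "halt" only ends this sketch
            return trace


for address, signals in run(0):
    print(address, signals)
```

A real sequencer never halts; every routine ends with a jump back to fetch or to another cycle's routine.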

9

Pentium IV RISC features

- All RISC features are implemented on the execution of microinstructions instead of machine instructions
  - microinstruction-level pipeline with dynamically scheduled microoperations
    - fetch machine instruction (3 stages)
    - decode machine instruction into microinstructions (2 stages)
    - superscalar issue of multiple microinstructions (2 stages; register renaming occurs here; up to 3 microinstructions can be issued per cycle)
    - execution of microinstructions (1 stage; units are pipelined and can take from 1 to many cycles (up to 32?) to execute)
    - write back (3 stages)
    - commit (3 stages; up to 3 microinstructions can commit in any cycle)
  - reservation stations (128 registers available) and multiple functional units (7 of them)
  - branch speculation is used (control of speculation is given to the reservation stations rather than a reorder buffer; commit still occurs, controlled by the reservation stations)
  - a trace cache is used

10

Pentium IV Architecture

11

Specifications

- There are 7 functional units
  - 2 simple ALUs (for simple integer operations like add and compare)
  - 1 complex ALU (for integer multiplication and integer division)
  - 1 load unit
  - 1 store unit
  - 1 floating point move unit (register-to-register move and convert)
  - 1 floating point unit (addition, subtraction, multiplication, division)
  - the simple ALUs execute in half a clock cycle, so each can accommodate up to two microoperations per cycle, reducing latency
  - the load and store units have their own address calculation components so that the memory address can be computed first and the memory access then performed, along with an aggressive data cache to lower load latencies
  - the floating point units and the complex ALU take more than 1 cycle, so they are pipelined
  - the floating point units can handle up to 2 FP operations at a time, allowing for some SIMD execution and improving overall FP performance
- There are 128 registers for renaming
  - reservation stations are used rather than a re-order buffer (which was used in older versions of the Pentium pipeline)
  - this means that instructions must wait in reservation stations longer than in Tomasulo's version, waiting for speculation results

12

Pentium IV Pipeline

- The Pentium III (Pentium Pro) pipeline was 10 stages deep
  - taking a minimum of 10 clock cycles to complete the shortest instructions, with a clock rate of 1.1 GHz or less
  - the figure below shows the Pentium III pipeline
- For the Pentium IV
  - pipeline depth was lengthened to 21 stages (minimum) in order to accommodate a faster clock rate of 1.5 GHz
  - by 2004, the pipeline had been lengthened to 31 stages (minimum) and the clock rate raised to 3.2 GHz
- The lengthening of the pipeline allowed for the faster clock rates
  - the clock rate is now so fast that it takes 2 complete cycles for an instruction or datum to cross the chip, so at least 2 pipeline stages are needed for certain operations like data movement!
- With the 128 reservation stations, 128 instructions could be in some state of operation simultaneously (as opposed to 40 in the Pentium III)
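To see what the deeper pipelines mean in wall-clock terms, the minimum latency of the shortest instruction can be estimated as stages divided by clock rate. This is a rough sketch that assumes one clock cycle per stage and no stalls; the function name and the dictionary labels are illustrative.

```python
def min_latency_ns(stages, clock_ghz):
    """Minimum time for one instruction to traverse the pipeline, in ns.

    Assumes one cycle per stage and no stalls (a simplification).
    """
    return stages / clock_ghz


# (stages, clock rate in GHz) as quoted on the slide.
designs = {
    "Pentium III": (10, 1.1),
    "Pentium IV (initial)": (21, 1.5),
    "Pentium IV (2004)": (31, 3.2),
}

for name, (stages, ghz) in designs.items():
    print(f"{name}: {min_latency_ns(stages, ghz):.2f} ns")
```

Under these assumptions the per-instruction latency does not shrink with the deeper pipeline; the gain comes from completing more instructions per second once the pipeline is full.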

13

Trace Cache and Branch Prediction

- We talk about the trace cache in chapter 5
  - for now, consider it to be an instruction cache that stores instructions not by address but in the order in which they are executed
  - in this way, a branch does not necessarily cost us a cache miss just because the instruction being branched to is not in the same cache block
- The trace cache stores microinstructions (not machine instructions)
  - repeated decoding is avoided: once a machine instruction has been decoded, the decoded version is placed in the trace cache, which greatly reduces the time needed for instruction decoding
- A branch target buffer is used to store microinstruction branches (not machine instruction branches) within the trace cache
  - the target buffer uses a 2-level predictor to select between local and global histories
  - the target buffer is 8 times the size of the target buffer used in the Pentium III
  - the misprediction rate for the target buffer is below 0.15%!
- The trace cache and branch target buffer combined mean that
  - microinstruction fetch and microinstruction decoding are rarely needed because, once fetched and decoded, the items are often found in the cache, and because predictions rarely cause the wrong instructions to be fetched

14

Source of Stalls

- This architecture is very complex and relies on being able to fetch and decode instructions quickly
- The process breaks down when
  - fewer than 3 instructions can be fetched in 1 cycle
    - the trace cache misses, or branches are mispredicted
  - fewer than 3 instructions can be issued because instructions have different numbers of microoperations
    - e.g., one instruction has 4 and another has 1, staggering when each instruction issues and executes
  - the number of reservation stations is a limit
  - data dependencies cause a functional unit to stall
  - a data cache access results in a miss
  - in some of these cases the issue stage must stall; in others the commit stage must stall
- Misprediction rates are very low, about 0.8% for integer benchmarks and 0.1% for floating point benchmarks (these are misprediction rates at the level of machine instructions, not microinstructions)
- The trace cache has a miss rate of nearly 0%, and the L1 and L2 data caches have miss rates of around 6% and 0.5% respectively
- The machine's effective CPI is around 2.2

15

Pentium IV Comparison

- Comparing the Pentium IV to the Pentium III
  - the P4 has over twice the performance in many SPEC benchmarks in spite of a clock speed that isn't twice as fast (this info is not in this text edition)
- The text provides a comparison between the P4 and the AMD Opteron
  - the Opteron uses dynamic scheduling, speculation, a shallower pipeline, issue and commit of up to 3 instructions per cycle, and a 2-level cache; the chip has a similar transistor count although it runs at only 2.8 GHz
  - the Opteron also implements the x86 instruction set and, like the P4, translates machine instructions into simpler RISC-like internal operations
  - the P4 has a higher CPI on all benchmarks except mcf (in which the AMD's CPI is more than twice the P4's)
  - so in most cases, instructions take fewer clock cycles on the AMD than on the P4, but the P4 has a slightly faster clock
- The text provides a briefer comparison between the P4 and the IBM Power5
  - the Power5 runs at only 1.9 GHz
  - the Power5 is significantly better on most floating point benchmarks and slightly worse on most integer benchmarks, with a clock speed about half that of the P4
  - see figures 2.28-2.34 for specific comparisons

16

A Balancing Act

- Improving one aspect of our processor does not necessarily improve performance
  - in fact, it might harm performance
  - consider lengthening the pipeline and increasing the clock speed (as with the P4) but without adding reservation stations or using the trace cache
- Modern processor design takes a lot of effort to balance out the factors
  - without accurate branch prediction and speculation hardware, stalls from mispredicted branches will drop performance greatly
  - as clock speeds increase, stalls from cache misses have a bigger impact on CPI, so larger caches and cache optimization techniques are needed (we cover the latter in chapter 5)
  - to support multiple issue of instructions, we need a larger cache-to-processor bandwidth, which can take up valuable space
  - as we increase the number of instructions that can be issued, we need to increase the number of reservation stations and the reorder buffer size
- For even greater improvement, we might need to turn to software approaches instead of or in addition to hardware enhancements; in appendix G, we will visit several compiler-based ideas

17

Sample Problem 1

- We see how complex an architecture can become in the case of the Pentium IV
  - assume that we have additional space on the CPU and want to enhance some element(s); what should we pick and why?
- Choices are to
  - add more reservation stations
  - add more ALU functional units
  - add another FP functional unit
  - add more load/store units
  - add a larger branch target buffer (either more entries or more prediction bits)
  - attempt to speed up the system clock and lengthen the pipeline (the additional space will be used for pipeline latches, control logic, etc.)
  - add more memory to the trace cache
  - add more memory to the L1 cache
  - increase the microoperation queue size to store more microoperations at any time

18

Solution

- Let's consider each not from the perspective of how useful it might be but of how much that particular hardware is limiting instruction issue and CPI
  - add more reservation stations: because we can issue no more than 3 microoperations per cycle, and assuming that the average microoperation executes for under 10 cycles, the 128 registers should be sufficient
  - add more ALU/FP functional units: since these are pipelined, additional units are not necessary
  - add more load/store units: the limited number of load units may be a source of stalls on data dependencies, so an additional load unit might help; an additional store unit is probably not necessary
  - add a larger branch target buffer (either more entries or more prediction bits): prediction accuracy is extremely high, so more entries or bits are not needed

19

Solution Continued

- attempt to speed up the system clock and lengthen the pipeline (the additional space will be used for pipeline latches, control logic, etc.): there is little that we can do to further lengthen the pipeline, so this may not be feasible
- add more memory to the trace cache: similar to the branch target buffer, this will probably have very little impact because of the low miss rate of the current trace cache
- add more L1 cache: this can make a significant impact since the miss rate is currently fairly high; this would be my top choice
- increase the microoperation queue size to store more microoperations at any time: although it is unclear how many stalls arise from running out of microoperations, because of the trace cache's performance this is probably not necessary
- Top choices: increase the L1 cache and add another load unit

20

Sample Problem 2

- Two fallacies cited in the chapter are
  - "Processors with lower CPI will always be faster"
  - "Processors with faster clock rates will always be faster"
- Why are these not necessarily true?
  - recall our CPU time formula: CPU Time = IC × CPI × CCT
  - if CPI is lower and all else is equal, the CPU Time is lower and thus the processor is faster
  - if the clock rate is higher and all else is equal, then CCT is lower and CPU Time is lower, thus the processor is faster
- BUT, we see from our examination of various processors that
  - deeper pipelines can have a larger impact than faster clock rates
  - multiple-issue superscalars have a significant impact on CPI, but only if supported by reservation stations, reorder buffers, and accurate branch speculation
  - in the Pentium IV, the CPI might be lower than on other machines but its IC can be higher because, in this case, IC is at the microinstruction level
  - additionally, a very low CPI with a slow clock rate may not outperform a higher CPI with a faster clock rate
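A quick numeric check makes both fallacies concrete. The sketch below applies the CPU time formula (instruction count times CPI times clock cycle time) to hypothetical machines; every instruction count, CPI, and clock rate here is invented for illustration.

```python
def cpu_time(ic, cpi, clock_ghz):
    """CPU time in seconds: IC x CPI x CCT, where CCT = 1 / clock rate."""
    cct = 1.0 / (clock_ghz * 1e9)
    return ic * cpi * cct


# Hypothetical machines running the same 1e9-instruction program.
low_cpi_slow_clock = cpu_time(1e9, cpi=0.8, clock_ghz=1.0)   # about 0.8 s
high_cpi_fast_clock = cpu_time(1e9, cpi=2.0, clock_ghz=3.0)  # about 0.67 s
# A faster clock does not help if CPI grows more than the clock rate does.
very_fast_clock = cpu_time(1e9, cpi=4.0, clock_ghz=4.0)      # about 1.0 s

print(low_cpi_slow_clock, high_cpi_fast_clock, very_fast_clock)
```

The lowest-CPI machine loses to the 3 GHz machine, and the 4 GHz machine is the slowest of the three, so neither CPI nor clock rate alone decides the outcome.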

21

Limitations on ILP (Chapter 3)

- From the mid 80s through 2000, architects focused on promoting ILP
  - deeper pipelines
  - multiple instruction issue
  - dynamic scheduling
  - speculation
- Hardware needs increased
  - multiple functional units
    - cost grows linearly with the number of units
  - increase (possibly very large) in memory bandwidth
  - more register-file bandwidth
    - which might take up significant space on the chip and may require larger system bus sizes, which turns into more pins
  - more complex memory system
    - possibly independent memory banks

22

Limitations

- By 2000, architects found limitations in just how much ILP there is to exploit
  - the inherent limitation on multiple issue is the limited amount of ILP in a program
    - how many instructions are independent of each other?
    - how much distance is available between loading an operand and using it? between using it and saving it?
  - multi-cycle latencies for certain types of operations cause inconsistencies in how many instructions can be issued simultaneously
- Architects more recently have concentrated
  - on further optimizations of current architectures
  - and on achieving higher clock rates without increasing issue rates

23

Limitations on Issue Size

- Ideally, we would like to issue as many independent instructions simultaneously as possible, but this is not practical because we would have to
  - look arbitrarily far ahead to find an instruction to issue
  - rename all registers when needed to avoid WAR/WAW hazards
  - determine all register and memory dependences
  - predict all branches (conditional, unconditional, returns)
  - provide enough functional units to ensure all ready instructions can be issued
- What is a possible maximum window size?
  - determining register dependences over n instructions requires n² - n comparisons
    - 2000 instructions → about 4,000,000 comparisons
    - 50 instructions → 2450 comparisons
  - window sizes have ranged between 4 and 32, with some recent machines having sizes of 2-8
  - a machine with a window size of 32 achieves about 1/5 of the ideal speedup for most benchmarks (see figure on next slide)
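The quadratic growth in dependence checks is easy to tabulate. The helper name below is illustrative; the formula is the n squared minus n count quoted on the slide.

```python
def register_comparisons(n):
    """Comparisons needed to check register dependences among n instructions."""
    return n * n - n


for window in (8, 32, 50, 2000):
    print(window, register_comparisons(window))
# 50 gives 2450; 2000 gives 3,998,000, i.e. roughly 4,000,000
```

Going from a 50-instruction window to a 2000-instruction window multiplies the window by 40 but the comparison count by more than 1600.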

24

Window Size Impact on Instruction Issue

25

Realistic Branch Prediction

- Types of predictions
  - Perfect branch prediction
    - impossible to achieve, so we won't bother with this
  - Selective history prediction using
    - correlating two-bit predictor
    - non-correlating two-bit predictor
    - selector between them
  - Standard two-bit predictor with 512 two-bit entries
  - Static predictor
    - uses program profile history
  - None
- Experimental results (see the figures on the next slide for details)

| Predictor | Misprediction rate | Issue rate |
|-----------|--------------------|------------|
| Selective | 3%                 | 24         |
| Standard  | 17%                | 20         |
| Static    | 10%                | 21         |

- Notice that the issue rate is not significantly different and that the static predictor is the easiest, so it might be a reasonable approach
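For reference, the standard two-bit predictor can be sketched as a table of saturating counters. This is a minimal model under stated assumptions: indexing by the branch address modulo the table size, an initial state of "weakly not taken", and a made-up loop trace. It does not reproduce the measurements quoted on the slide.

```python
class TwoBitPredictor:
    """Table of 2-bit saturating counters; values 2 and 3 predict taken."""

    def __init__(self, entries=512):
        self.entries = entries
        self.counters = [1] * entries  # assumed start: weakly not taken

    def _index(self, pc):
        return pc % self.entries  # assumed indexing scheme

    def predict(self, pc):
        return self.counters[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def misprediction_rate(predictor, trace):
    """Fraction of branches in the trace that the predictor gets wrong."""
    wrong = 0
    for pc, taken in trace:
        if predictor.predict(pc) != taken:
            wrong += 1
        predictor.update(pc, taken)
    return wrong / len(trace)


# Hypothetical trace: one loop branch, taken 9 times then not taken, 10 rounds.
trace = [(0x40, i % 10 != 9) for i in range(100)]
rate = misprediction_rate(TwoBitPredictor(), trace)
print(rate)  # 0.11: one cold miss plus one miss per loop exit
```

The two-bit hysteresis is what keeps a single loop exit from flipping the prediction for the next round.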

26

Branch Predictor Performance

27

Effects of Finite Registers

- With infinite registers, register renaming can eliminate all WAW and WAR hazards
  - with Tomasulo's approach, the reservation stations offer virtual registers
  - the Power5 has 88 additional FP and 88 additional integer registers for reservation stations
  - surprisingly, though, the number of registers does not have a dramatic impact as long as there are at least 64 + 64 registers available

28

Alias Analysis

- Aside from register renaming, we have name dependencies on memory references
- Three models are
  - global/stack perfect (perfect analysis of all global variables and all stack references)
  - inspection (examine accesses for interference at compile time)
  - none (assume all references conflict)

29

A Realizable Processor

- The authors describe an ambitious but realistic processor that could be available with today's technology
  - issue up to 64 instructions per cycle with no restrictions on what instructions can be issued in the same cycle
  - tournament branch predictor with 1K entries and a 16-entry return predictor
  - perfect memory reference disambiguation performed dynamically
  - register renaming with 64 integer and 64 FP registers
- With a 64 instruction per cycle issue capability, the average number of instructions issued per cycle is estimated to be around 20
  - if there are no stalls for limited hardware, cache misses and misspeculation, this would result in a CPI of 1 / 20 = 0.05!
  - we might question whether a 64-instruction window is reasonable given the complexity needed in comparing up to 64 instructions together in each cycle; today we find most computers limit window sizes to 8 at most

30

Example

- Let's compare three hypothetical processors and determine their MIPS rating for the gcc benchmark
  - processor 1: simple MIPS 2-issue superscalar pipeline with a clock rate of 4 GHz, a CPI of 0.8, and a cache system with 0.005 misses per instruction
  - processor 2: deeply pipelined MIPS with a clock rate of 5 GHz, a CPI of 1.0, and a smaller cache yielding 0.0055 misses per instruction
  - processor 3: speculative superscalar with a 64-entry window that achieves 50% of its ideal issue rate (see figure 3.7), a clock rate of 2.5 GHz, and a small cache yielding 0.01 misses per instruction (although 25% of the miss penalty is not visible due to dynamic scheduling)
  - assume memory access time (miss penalty) is 50 ns
  - to solve this problem, we have to determine each processor's overall CPI, which is a combination of processor CPI and the impact of memory (cache misses)

31

Solution

- Processor 1
  - 4 GHz clock = 0.25 ns per clock cycle
  - memory access of 50 ns, so miss penalty = 50 / 0.25 = 200 cycles
  - cache penalty = 0.005 × 200 = 1.0 cycles per instruction
  - overall CPI = 0.8 + 1.0 = 1.8
  - MIPS = 4 GHz / 1.8 = 2222 MIPS
- Processor 2
  - 5 GHz clock = 0.2 ns per clock cycle
  - miss penalty = 50 / 0.2 = 250 cycles
  - cache penalty = 0.0055 × 250 ≈ 1.4 cycles per instruction
  - overall CPI = 1.0 + 1.4 = 2.4
  - MIPS = 5 GHz / 2.4 = 2083 MIPS
- Processor 3
  - 2.5 GHz clock = 0.4 ns per clock cycle
  - miss penalty takes effect only 75% of the time, so miss penalty = 0.75 × 50 / 0.4 ≈ 94 cycles
  - cache penalty = 0.01 × 94 = 0.94 cycles per instruction
  - CPU portion of the CPI is based on half the ideal issue rate of a 64-entry window, which is 1 / (9 / 2) = 0.22
  - overall CPI = 0.94 + 0.22 = 1.16
  - MIPS = 2.5 GHz / 1.16 = 2155 MIPS
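The three calculations follow one pattern, which can be checked with a short script. This is a sketch: the function name and parameters are illustrative, the ideal issue rate of 9 for the 64-entry window is taken from the slide, and no intermediate rounding is applied, so processor 2 comes out near 2105 rather than the slide's rounded 2083.

```python
def mips(clock_ghz, base_cpi, misses_per_instr, mem_ns=50.0, visible=1.0):
    """MIPS rating from clock rate, processor CPI, and cache behaviour.

    visible is the fraction of the miss penalty the pipeline actually sees.
    """
    cycle_ns = 1.0 / clock_ghz
    miss_penalty_cycles = visible * mem_ns / cycle_ns
    cpi = base_cpi + misses_per_instr * miss_penalty_cycles
    return clock_ghz * 1000.0 / cpi


p1 = mips(4.0, 0.8, 0.005)
p2 = mips(5.0, 1.0, 0.0055)
# Processor 3: half of an ideal issue rate of 9, and 75% of the penalty visible.
p3 = mips(2.5, 1.0 / (9 * 0.5), 0.01, visible=0.75)

print(round(p1), round(p2), round(p3))  # 2222 2105 2156
```

The ranking matches the slide either way: processor 1 is fastest, then processor 3, then processor 2, despite processor 2 having the fastest clock.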

32

Sample Problem 1

- For the li benchmark
  - compare a perfect processor with one that has a window size of 128, a tournament branch predictor, 64 integer and 64 FP renaming registers, and inspection alias analysis
- The perfect processor can issue 18 instructions per cycle
  - but the branch prediction only permits up to 16 instructions per cycle, and an infinite number of registers and perfect alias analysis can only accommodate 12 instructions per cycle
  - so the perfect processor can achieve an issue rate of 12 instructions per cycle, or a CPI = 1 / 12 = 0.083
- The more realistic processor is most limited by alias analysis (4 instructions per cycle), so its CPI = 0.25
  - the perfect machine is then 0.25 / 0.083 = 3 times faster on this benchmark
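The reasoning is that the sustained issue rate is capped by the most restrictive factor, and CPI is its reciprocal. A small sketch with an illustrative helper name, using only the issue rates quoted on the slide:

```python
def cpi_from_issue_limits(limits):
    """CPI when the issue rate is capped by the tightest of the given limits."""
    return 1.0 / min(limits)


# Perfect processor: 18 overall, 16 from branch prediction, 12 from registers/aliasing.
perfect_cpi = cpi_from_issue_limits([18, 16, 12])
# Realistic processor: only its tightest limit (alias analysis, 4) is given.
realistic_cpi = cpi_from_issue_limits([4])

speedup = realistic_cpi / perfect_cpi
print(round(perfect_cpi, 3), realistic_cpi, round(speedup, 2))  # 0.083 0.25 3.0
```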

33

Sample Problem 2

- Architects are considering one of three enhancements to the next generation of computer
  - more on-chip cache to reduce the impact of memory access
  - faster memories
  - faster clock rates
- Explain, using the example on pages 167-169, how each of these would impact the three hypothetical processors
  - more on-chip cache lowers cache CPI: depending on the current miss rate, this might be useful, but for processors 1 and 2 the miss rates are already < 0.1
  - faster memory reduces cache CPI (it decreases the number of cycles needed for any cache miss): since roughly half of each of the three processors' CPI comes from cache misses and half from processor performance, this could have a significant impact
  - faster clock rates increase cache CPI and possibly have no effect on execution CPI: by merely increasing the clock rate, the stalls for memory accesses will increase; however, if this increase is coupled with a longer pipeline, then execution CPI might decrease and so overall performance might improve

34

Sample Problem 3

- Consider a speculative superscalar with a window size of 32
  - with proper hardware support, the superscalar can issue 70% of the expected issue rate (see figure 3.2)
  - the processor has a 3.33 GHz clock rate
  - the processor stalls when all functional units are busy (which arises once in every 12 cycles)
  - when there is a misprediction, the processor requires 6 complete cycles to flush the reorder buffer and begin again (profile-based prediction is used)
  - memory accesses take 40 ns, 40% of the instructions are loads or stores, the instruction cache has a miss rate of 0.5%, and the data cache has a miss rate of 0.03%
  - determine this machine's MIPS rating for the doduc benchmark
- Solution
  - cache miss penalty = 40 ns × 3.33 GHz ≈ 133 cycles
  - memory CPI = 0.005 × 133 + 0.40 × 0.0003 × 133 ≈ 0.681
  - CPU CPI = 1 / 6.3 + 1 / 12 + 6 × 0.05 = 0.542
  - CPI = 0.681 + 0.542 = 1.223
  - MIPS rating = 3.33 GHz / 1.223 ≈ 2723 MIPS