Our status and plans in CMS

1

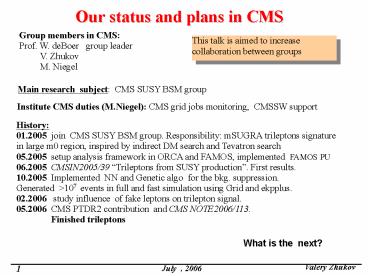

Our status and plans in CMS

Group members in CMS: Prof. W. de Boer (group leader), V. Zhukov, M. Niegel

This talk aims to increase collaboration between groups.

Main research subject: CMS SUSY BSM group

Institute CMS duties (M. Niegel): CMS grid job monitoring, CMSSW support

History:

- 01.2005: Joined the CMS SUSY BSM group. Responsibility: mSUGRA trilepton signature in the large m0 region, inspired by the indirect DM search and the Tevatron search.
- 05.2005: Set up the analysis framework in ORCA and FAMOS; implemented FAMOS PU.
- 06.2005: CMS IN 2005/39, "Trileptons from SUSY production. First results."
- 10.2005: Implemented NN and genetic algorithm for bkg. suppression. Generated >10^7 events in full and fast simulation using the Grid and ekpplus.
- 02.2006: Studied the influence of fake leptons on the trilepton signal.
- 05.2006: CMS PTDR2 contribution and CMS NOTE 2006/113. Finished trileptons.

What is next?

2

1. Fake leptons

Why fake leptons?

1. Important for SUSY searches, especially in low MET regions.
2. Relevant for all leptonic channels (SM, Higgs).

Fakes:
- increase the bkg.
- introduce uncertainties

Tevatron experience: more fakes than expected...

Figure: mSUGRA trileptons, with fakes (50% increase in bkg.) and without fakes.

Our experience with standard lepton isolation cuts: tracker ΣPT < 1.5 GeV in a cone of R < 0.3; for muons, ECAL < 5 GeV in R < 0.3.
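The isolation cuts quoted here (tracker sum of PT below 1.5 GeV in a cone of R < 0.3, ECAL sum below 5 GeV in the same cone for muons) can be sketched as follows. This is only an illustration, not the ORCA/FAMOS implementation: the function names and the `(value, eta, phi)` tuple layout are hypothetical, the lepton's own track is assumed to be excluded from the input, and applying the ECAL cut to muons on top of the tracker cut is an assumption.

```python
import math

def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance sqrt(deta^2 + dphi^2), with dphi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)

def cone_sum(lep_eta, lep_phi, deposits, cone=0.3):
    """Sum the value (pT or ET in GeV) of every (value, eta, phi) deposit inside the cone."""
    return sum(value for value, eta, phi in deposits
               if delta_r(lep_eta, lep_phi, eta, phi) < cone)

def is_isolated(lep_eta, lep_phi, tracks, ecal=None, is_muon=False,
                max_trk_sum=1.5, max_ecal_sum=5.0, cone=0.3):
    """Tracker cut for every lepton; ECAL cut applied to muons only (assumption)."""
    if cone_sum(lep_eta, lep_phi, tracks, cone) >= max_trk_sum:
        return False
    if is_muon and cone_sum(lep_eta, lep_phi, ecal or [], cone) >= max_ecal_sum:
        return False
    return True
```

Keeping the thresholds as keyword arguments makes it easy to scan them when studying how the fake rate depends on the isolation definition.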

Rate per event (FAMOS)

CMS IN 2005/28, W+jets, ORCA: Fe ~ 10^-4, Fμ ~ 10^-5

Large uncertainties in fake rates, up to 50%.

The fake rate depends on the bkg. channel, the event MC generator (PS, ME, I/FSR) and the detector simulation (FAMOS, ORCA).

There is no clear understanding in CMS of how to estimate fake rates in simulation and with real data...

3

Fake leptons classification

In simulation:
- trace back all leptons and calculate fake rates for each type of object (jets, hadrons, leptons)
- improve selection algorithms (use NN), spot important detector parameters

With real data: calibrate with standard candles (Z+jets, W+jets, ...), but PU and ISR/FSR effects remain.

We need an algorithm for continuous monitoring!

Plans: this is a 'long'-term activity related to different CMS working groups.

1. FakeFinder package, first for FAMOS, later for CMSSW (10/2006)
2. Optimization of lepton ID (in collaboration with ...) (12/2006)
3. Calibration with real data (algorithms) (5/2007)

4

Fakes Finder

For example (preliminary class layout):

- FakesFinder: Fake getfake(RecMuon), Fake getfake(ElectronCandidate), ...
- Fake, with FakeElectron, FakeMuon and FakeJet: bool fake, double fakeness, ...
- FakesConfig: void read(), double dRmatch, ...
- Tracer: containers of related pointers for easy navigation (SimParticle, SimHit, CaloClusters, RecMuon, ...); HepParticle getMC(), SimHit getSim(), ...

How to match the MC, Sim and Rec objects?
- use the existing reconstruction history
- independently, by the isolation cone
- ...

As a result: optimization of the lepton identification.
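A cone-based match between reconstructed and generated leptons could look roughly like the sketch below. It is not the FakesFinder code: the `Particle` class, the `get_fake` signature and the default `dr_match = 0.1` are hypothetical, chosen only to echo the `getfake` and `dRmatch` names on the slide. A reconstructed lepton is flagged as fake when no generated lepton of the same flavour lies within the matching cone.

```python
import math
from dataclasses import dataclass

@dataclass
class Particle:
    pdg_id: int   # PDG code: 11 = electron, 13 = muon (sign encodes the charge)
    eta: float
    phi: float

def delta_r(a, b):
    """Angular distance between two particles, with dphi wrapped into [-pi, pi)."""
    dphi = (a.phi - b.phi + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)

def get_fake(rec, mc_particles, dr_match=0.1):
    """Return (is_fake, matched_mc) for one reconstructed lepton.

    The lepton counts as real only if a generated particle of the same
    flavour (same |pdg_id|) is found within dr_match.
    """
    same_flavour = [p for p in mc_particles if abs(p.pdg_id) == abs(rec.pdg_id)]
    best = min(same_flavour, key=lambda p: delta_r(rec, p), default=None)
    if best is None or delta_r(rec, best) > dr_match:
        return True, None
    return False, best
```

Returning the matched generator particle along with the flag keeps the trace-back information available for computing fake rates per object type.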

5

2. SUSY topology selector

The free SUSY (mSUGRA) parameters (m0, m1/2, A0, tanβ, sgn μ) define the mass spectrum and couplings of the new particles (q̃, g̃, χ̃) and therefore the signal signature: MET + jets + leptons.

Figure: signatures with different mSUGRA parameters.

6

Inclusive and exclusive SUSY search

1. Inclusive search. Check for any deviations from SM predictions already at low statistics (small σ, low Lint). First-days physics! Counting-like experiments. Uncertainties are very important. Can we constrain SUSY parameters already in the inclusive search?

2. Exclusive search. With enough statistics, reconstruct kinematic variables and endpoints in invariant mass distributions. Constrains the mass spectrum and SUSY parameters. Works in limited regions, at low m0. Requires large statistics.

Figures (ATLAS): reconstructed mass spectrum from kinematic endpoints at 100 fb^-1 for the low mass region; region accessible for kinematic endpoints.

7

mSUGRA cross sections

Main production channels

Fractions of different production channels

Total mSUGRA cross section

σ_tot [pb], tanβ = 50, A0 = 0

Different channels -> different topologies, which can be used to separate regions.

8

mSUGRA observables: jets

Reconstructed (FAMOS) averaged observables (figure panels):
- ⟨Nj⟩, ET > 30 GeV
- ⟨NBj⟩, b-jets
- highest jet ⟨ET⟩
- second jet ⟨ET⟩
- third jet ⟨ET⟩
- cos φ between first and second jet
- cos φ between first jet and MET
- cos φ between second jet and MET

Plot annotations: mg, mq: long cascades; χ02 -> H -> b bbar; hardest jet in q̃ decays.

9

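More observables: MET

Figure panels:
- ΣET from CaloTowers
- MET from CaloTowers
- $M_{inv} = (m_{inv}^{H1} + m_{inv}^{H2})/2$, invariant mass per hemisphere
- $H_{eff} = \mathrm{MET} + \sum E_T^{jets} + \sum E_T^{leptons}$

Plot annotations: 2 body; low MET region.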

10

And more: leptons

Figure panels:
- ⟨N⟩ electrons
- ⟨N⟩ muons
- ⟨N⟩ SameSignSameFlavor
- ⟨N⟩ OppositeSignSameFlavor
- highest muon ⟨PT⟩ (m1/2)
- cos φ between OSSF and MET
- Nss+/Nss-
- ⟨Minv⟩ OSSF

Plot annotations: back-to-back; expect asymmetry; Z peak.

11

Event selection in inclusive search

Inclusive analysis in CMS

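Diagram: signal and backgrounds (+/- uncert.) enter the Selector, which yields ns and nb; the bkg hypothesis Hb (nb) is compared with the signal hypothesis Hs (nb + ns), characterised by σb, α and the significance S.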

The selector is optimised for one particular test point to get the maximum significance S. In the low-statistics regime at S = 5 (discovery reach), with ns ≈ 5 and nb ≈ 1, the shape of the Hb distribution is very important (and not well known)!

α: probability of wrongly accepting Hs.

Significance: $S = n_s/\sigma_b$, where $\sigma_b = \sqrt{n_b + \delta n_b^2}$; $n_b$ is the Poisson term and $\delta n_b$ the systematic uncertainty.

- Only one parameter (S) to characterize a hypothesis
- Systematic uncertainties of the background are very important
- Cannot say much about the model parameters
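As a small numerical illustration of the significance defined on this slide (signal events divided by the background width, where the width combines the Poisson term nb and the systematic uncertainty dnb in quadrature), here is a minimal sketch. The function name is hypothetical, and dnb is assumed to be an absolute uncertainty in events.

```python
import math

def significance(n_s, n_b, dn_b=0.0):
    """S = n_s / sigma_b, with sigma_b = sqrt(n_b + dn_b**2).

    n_b enters as the Poisson variance of the background count,
    dn_b as its absolute systematic uncertainty (in events).
    """
    sigma_b = math.sqrt(n_b + dn_b ** 2)
    return n_s / sigma_b

# Discovery-reach example from the slide: ns = 5, nb = 1, no systematics.
print(significance(5, 1))            # 5.0
# A 50% systematic uncertainty on nb = 1 already lowers S below 5.
print(significance(5, 1, dn_b=0.5))
```

The second call shows why the background systematics matter so much at low statistics: with nb of order one, a modest dnb changes the background width noticeably.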

12

Region-dependent event selection

- The mSUGRA topologies depend on the parameters, so one can define n region-dependent selectors Sel_i.
- There is only one solution, i.e. Sel_k; the other selectors should give no signal at the defined threshold (5σ). Probability to observe a signal in region k: $P = p_k \sum_{i \neq k} (1 - p_i)$

Multichannel inclusive analysis

Diagram: signal and backgrounds (+/- uncert.) pass through a common Preselector and then through the selectors, each selector i optimized for topology i and yielding its own ns, nb and significance Si.

The Preselector suppresses most of the SM background. The Selectors are tuned to different mSUGRA topologies.

Problem: the bkg suppression efficiency should be mSUGRA-model independent, i.e. the selection efficiency $E_i(D_{bkg}) = \mathrm{const}$.

+ Identifies the mSUGRA region and increases the model significance. Can do a BLIND analysis, i.e. exclude the signal topology and test the signal and bkg hypotheses in neighbouring regions.
- Very model dependent. How to control the bkg?

13

Region separation.

Use a Neural Network (NN) for region separation. Train the NN for region i against the other regions j ≠ i.

Preselection: MET > 50, Nj > 2 (> 30 GeV)

NN parameters: MET, Heff, ETj1, ETj2, ETj3, Ptl1, Nj, Njb, cos φ(j1,j2), cos φ(j1,MET), cos φ(j2,MET)

3 test points (m0, m1/2; tanβ = 50): Sel1 (500, 450), Sel2 (1100, 300), Sel3 (2500, 500)

Figures: preselection efficiency; example teacher output (Sig2/bkg1+3); selection efficiencies of the 3 NNs trained for Sig1/bkg2+3, Sig2/bkg1+3 and Sig3/bkg1+2.

Can constrain mSUGRA regions!

14

Constrain MSUGRA parameters

Can we separate the channels and identify the fractions (e.g. g̃g̃, q̃q̃, etc.)? Train NNs for individual channels against the others for one test point (m0 = 500, m1/2 = 200).

Figures: MC and Rec fractions (g̃g̃, χ̃χ̃); NN selection efficiencies (m0 = 500, m1/2 = 200).

The selection efficiency E is a convolution of the NN and preselection efficiencies, $E_i = \varepsilon^{presel}_{ki}\,\varepsilon^{NN}_{k}$. Find the fractions $F_i$ from the observed $D_i$, $D_i = E_i \times F_i$, and compare with the expectation from MC (normalized).
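One way to read the relation between the observed counts D, the efficiencies E and the fractions F is as a small linear system: each selector accepts every production channel with some efficiency, and the channel yields are recovered by inverting that system. The sketch below assumes exactly that reading (an efficiency matrix `eff[i][k]` for selector i and channel k, which the slide does not spell out), and all numbers are made up for illustration.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("efficiency matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        partial = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - partial) / m[i][i]
    return x

def channel_fractions(eff, observed):
    """Unfold channel yields from D = E F and normalise them to fractions."""
    yields = solve_linear(eff, observed)
    total = sum(yields)
    return [y / total for y in yields]

# Illustrative efficiencies (rows: selectors, columns: channels), not from the talk.
eff = [[0.60, 0.10, 0.05],
       [0.20, 0.50, 0.10],
       [0.05, 0.10, 0.40]]
observed = [66.25, 47.5, 20.0]   # counts produced by true yields 100, 50, 25
print(channel_fractions(eff, observed))
```

The closer the efficiency matrix is to diagonal, the smaller the error amplification in the unfolded fractions, which is why well-separated NN selectors help.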

Another point (m0 = 2500, m1/2 = 600) with the same NN (m0 = 500, m1/2 = 200):

Larger errors, but the ratios can still be constrained. The σ(g̃g̃) : σ(q̃q̃) : σ(χ̃χ̃) ratios constrain the mSUGRA region.

15

Bkg suppression

Preselect data samples and use NNs to train ttbar against different mSUGRA regions; produce many NNs and check the efficiency for the bkg and the signal.

Preselection: MET > 50, Nj > 2 (ET > 30), 2μ (PT > 10)

Figures: NN (preselection) efficiency for the bkg (ttbar), where the best case is constant, i.e. region independent; NN preselection efficiency for two mSUGRA points (m0 = 500, m1/2 = 200 and m0 = 2500, m1/2 = 600) and for bkg = ttbar.

The preselection efficiency dominates, with a similar pattern. Is there an improvement in discovery?

Up to 10% spread; is it acceptable?

Still work to be done...

16

Combining results

ATLAS experience: kinematic edges + cross sections. Use of Markov chains for likelihood maximization in a multiparameter space.

Can do:

1. Combine the outputs of different selectors in one likelihood L(p), where p are the model parameters.
2. Find the most probable set of parameters p for the observed data using the Markov chain: $p(m|d) \propto p(d|m)\,p(m)$, with $p(d|m) = \prod_i L_i$.

Powerful method of hypothesis testing in a multiparameter space. Looks promising...
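To make the idea of combining selector likelihoods and exploring the parameter space with a Markov chain concrete, here is a toy sketch. The slides do not say which Markov chain algorithm is meant; a random-walk Metropolis sampler is used here as an assumption. The Gaussian likelihood terms, the flat prior, the parameter values and all function names are illustrative only.

```python
import math
import random

def log_likelihood(p, selectors):
    """log of prod_i L_i(p); each selector is (predict, observed, sigma), a Gaussian toy term."""
    return sum(-0.5 * ((obs - predict(p)) / sigma) ** 2
               for predict, obs, sigma in selectors)

def metropolis(log_post, start, step, n_steps, seed=1):
    """Random-walk Metropolis sampling of exp(log_post); returns the visited points."""
    rng = random.Random(seed)
    current = list(start)
    current_lp = log_post(current)
    chain = []
    for _ in range(n_steps):
        proposal = [x + rng.gauss(0.0, s) for x, s in zip(current, step)]
        lp = log_post(proposal)
        # Accept with probability min(1, posterior ratio).
        if lp >= current_lp or rng.random() < math.exp(lp - current_lp):
            current, current_lp = proposal, lp
        chain.append(list(current))
    return chain

# Toy selectors: two constrain one parameter each, the third constrains their sum.
selectors = [
    (lambda p: p[0], 500.0, 50.0),
    (lambda p: p[1], 200.0, 20.0),
    (lambda p: p[0] + p[1], 700.0, 40.0),
]
# Flat prior assumed, so the log-posterior equals the log-likelihood.
chain = metropolis(lambda p: log_likelihood(p, selectors),
                   start=[400.0, 300.0], step=[20.0, 10.0], n_steps=5000)
tail = chain[len(chain) // 2:]   # drop the first half as burn-in
mean = [sum(p[k] for p in tail) / len(tail) for k in range(2)]
print(mean)
```

The chain gives more than a best-fit point: the spread of the retained samples is a direct estimate of how well the combined selectors constrain each parameter.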

17

Summary

General CMS interest activity: fakes. Work has started. We will produce large data samples (W+jets, Z+jets, QCD) during the summer, and implement and debug the FakesFinder.

Hot topic: how to identify SUSY regions? (SUSY BSM) The topology selector based on NNs in the inclusive search works in principle. It needs optimization of the bkg suppression for different topologies and a selection of observables less prone to uncertainties. Study of the bkg uncertainties (PYTHIA-ALPGEN) has started.

Combining results and hypothesis testing with the Markov chain: an interesting statistical aspect that can be used in many studies. Just started...

This is just enough...

Anybody interested can join our efforts... Fun and important results are guaranteed.

18

Selection efficiency for the PTDR analysis

Use the selection cuts defined in the PTDR analysis. Most of them are tuned to the bulk region (m0 = 60, m1/2 = 250, tanβ = 10).

Figure: tanβ = 50, A0 = 0

19

Discovery reaches (10 fb^-1)

Taking Nbkg from the analysis, the results are very close... The PTDR is tuned to tanβ = 10; here it is 50.