Visible Markov Models


1

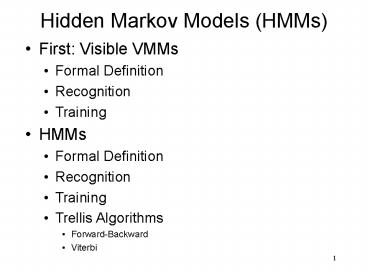

Hidden Markov Models (HMMs)

- First: Visible Markov Models (VMMs)

  - Formal Definition

  - Recognition

  - Training

- HMMs

  - Formal Definition

  - Recognition

  - Training

- Trellis Algorithms

  - Forward-Backward

  - Viterbi

2

Visible Markov Models

- Probabilistic Automaton

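- $N$ distinct states $S = \{s_1, \ldots, s_N\}$

- $M$-element output alphabet $K = \{k_1, \ldots, k_M\}$

- Initial state probabilities $\Pi = \{\pi_i\}$, $i \in S$

- State transitions at $t = 1, 2, \ldots$

- State transition probabilities $A = \{a_{ij}\}$, $i, j \in S$

- State sequence $X = (X_1, \ldots, X_T)$, $X_t \in S$

- Output sequence $O = (o_1, \ldots, o_T)$, $o_t \in K$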

3

VMM Weather Example

4

Generative HMM

- We choose the state sequence probabilistically

- Let's try this using

  - the numbers 1-10

  - drawing from a hat

  - an ad-hoc assignment scheme
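The hat-drawing exercise can be mimicked in code. The following is a minimal sketch, assuming a hypothetical two-state weather model; the state names and all probabilities are made up for illustration and do not come from the slides. The helper `sample_sequence` is likewise a hypothetical name.

```python
import random

# Hypothetical two-state model; names and numbers are illustrative assumptions.
pi = {"sunny": 0.6, "rainy": 0.4}            # initial state probabilities pi_i
A = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},   # transition probabilities a_ij
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}

def sample_sequence(pi, A, T, rng):
    """Draw X_1 from pi, then each later X_t from the row of A for X_{t-1}."""
    names = list(pi)
    x = rng.choices(names, weights=[pi[s] for s in names])[0]
    seq = [x]
    for _ in range(T - 1):
        row = A[x]
        x = rng.choices(list(row), weights=list(row.values()))[0]
        seq.append(x)
    return seq

rng = random.Random(0)   # fixed seed so the draw is repeatable
sequence = sample_sequence(pi, A, 10, rng)
print(sequence)
```

Drawing numbers 1-10 from a hat corresponds to the weighted draw here: for example, assigning 1-8 to "sunny" and 9-10 to "rainy" gives the 0.8 / 0.2 row.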

5

2 Questions

- Training problem

  - Given an observation sequence $O$ and a space of possible models spanning the possible values of the model parameters $w = \{A, \Pi\}$, how do we find the model that best explains the observed data?

- Recognition (decoding) problem

  - Given a model $w_i = \{A, \Pi\}$, how do we compute how likely a certain observation is, i.e. $P(O \mid w_i)$?

6

Training VMMs

- Given observation sequences $O_s$, we want to find the model parameters $w = \{A, \Pi\}$ which best explain the observations

- I.e. we want to find the values of $w = \{A, \Pi\}$ that maximise $P(O \mid w)$

- $\{A, \Pi\}_{\text{chosen}} = \arg\max_{\{A, \Pi\}} P(O \mid A, \Pi)$

7

Training VMMs

- Straightforward for VMMs

- $\pi_i$ = frequency in state $i$ at time $t = 1$

- $a_{ij} = \dfrac{\text{number of transitions from state } i \text{ to state } j}{\text{number of transitions from state } i}$

- Equivalently, $a_{ij} = \dfrac{\text{number of transitions from state } i \text{ to state } j}{\text{number of times in state } i}$, counting only the time steps that have a successor
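The counting estimates above can be sketched in a few lines. This is an illustrative sketch only: the function name `train_vmm` and the toy sequences are assumptions, and it expects every training sequence to be non-empty.

```python
from collections import Counter, defaultdict

def train_vmm(sequences):
    """Estimate pi and A by relative frequency from fully observed state sequences."""
    # pi_i: how often state i appears at time t = 1
    start_counts = Counter(seq[0] for seq in sequences)
    # a_ij: transitions i -> j divided by all transitions leaving i
    trans_counts = defaultdict(Counter)
    for seq in sequences:
        for i, j in zip(seq, seq[1:]):
            trans_counts[i][j] += 1
    pi = {s: c / len(sequences) for s, c in start_counts.items()}
    A = {
        i: {j: c / sum(row.values()) for j, c in row.items()}
        for i, row in trans_counts.items()
    }
    return pi, A

# Toy data, made up for illustration.
data = [["sunny", "sunny", "rainy"], ["rainy", "sunny", "sunny"]]
pi, A = train_vmm(data)
print(pi)
print(A)
```

States or transitions that never occur in the data are simply absent from the returned dictionaries, which amounts to an estimated probability of zero.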

8

Recognition

- We need to calculate $P(O \mid w_i)$

- $P(O \mid w_i)$ is handy for calculating $P(w_i \mid O)$

- If we have a set of models $L = \{w_1, w_2, \ldots, w_V\}$, then if we can calculate $P(w_i \mid O)$ we can choose the model which returns the highest probability, i.e.

- $w_{\text{chosen}} = \arg\max_{w_i \in L} P(w_i \mid O)$
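For a visible Markov model the observations are the states themselves, so $P(O \mid w)$ is the initial probability of the first state times the transition probabilities along the sequence. A minimal sketch, assuming a hypothetical two-state model with made-up numbers and a hypothetical helper name `log_likelihood`; it works in log space so long sequences do not underflow.

```python
import math

def log_likelihood(obs, pi, A):
    """log P(O | w) for a visible Markov model: log pi[o_1] + sum of log a[o_{t-1}][o_t]."""
    p = pi.get(obs[0], 0.0)
    if p == 0.0:
        return float("-inf")   # impossible start state under this model
    total = math.log(p)
    for i, j in zip(obs, obs[1:]):
        p = A.get(i, {}).get(j, 0.0)
        if p == 0.0:
            return float("-inf")   # impossible transition under this model
        total += math.log(p)
    return total

# Illustrative parameters (assumptions, not taken from the slides).
pi = {"sunny": 0.6, "rainy": 0.4}
A = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}
ll = log_likelihood(["sunny", "sunny", "rainy"], pi, A)
print(math.exp(ll))   # 0.6 * 0.8 * 0.2, up to floating-point rounding
```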

9

Recognition

- Why is $P(O \mid w_i)$ of use?

- Let's revisit speech for a moment.

- In speech we are given a sequence of observations, e.g. a series of LPC vectors

- E.g. LP coefficients taken from frames of length 20-40 ms, every 10-20 ms

- If we have a set of models $L = \{w_1, w_2, \ldots, w_V\}$, then if we can calculate $P(w_i \mid O)$ we can choose the model which returns the highest probability, i.e.

- $w_{\text{chosen}} = \arg\max_{w_i \in L} P(w_i \mid O)$

10

$w_{\text{chosen}} = \arg\max_{w_i \in L} P(w_i \mid O)$

- $P(w_i \mid O)$ is difficult to calculate, as we would have to have a model for every possible observation sequence $O$

- Use Bayes' rule

- $P(x \mid y) = P(y \mid x) \, P(x) / P(y)$

- So now we have

- $w_{\text{chosen}} = \arg\max_{w_i \in L} P(O \mid w_i) \, P(w_i) / P(O)$

- $P(w_i)$ can be easily calculated

- $P(O)$ is the same for each calculation and so can be ignored

- So $P(O \mid w_i)$ is the key!
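The decision rule can be sketched as follows: score each model by $\log P(O \mid w_i) + \log P(w_i)$ and take the largest, dropping $P(O)$ because it is common to all models. The two models, their parameters, the priors, and the function names are all hypothetical, chosen only to illustrate the rule for visible Markov models.

```python
import math

def log_likelihood(obs, pi, A):
    """log P(O | w) for a visible Markov model (observations are the states)."""
    probs = [pi.get(obs[0], 0.0)]
    probs += [A.get(i, {}).get(j, 0.0) for i, j in zip(obs, obs[1:])]
    if any(p == 0.0 for p in probs):
        return float("-inf")
    return sum(math.log(p) for p in probs)

# Two illustrative models w_1, w_2 as (pi, A) pairs; all numbers are assumptions.
models = {
    "mostly_sunny": (
        {"sunny": 0.8, "rainy": 0.2},
        {"sunny": {"sunny": 0.9, "rainy": 0.1}, "rainy": {"sunny": 0.5, "rainy": 0.5}},
    ),
    "mostly_rainy": (
        {"sunny": 0.3, "rainy": 0.7},
        {"sunny": {"sunny": 0.4, "rainy": 0.6}, "rainy": {"sunny": 0.2, "rainy": 0.8}},
    ),
}
priors = {"mostly_sunny": 0.5, "mostly_rainy": 0.5}   # P(w_i), assumed equal here

def choose_model(obs, models, priors):
    """Return the argmax over models of log P(O | w_i) + log P(w_i), plus all scores."""
    scores = {
        name: log_likelihood(obs, pi, A) + math.log(priors[name])
        for name, (pi, A) in models.items()
    }
    return max(scores, key=scores.get), scores

chosen, scores = choose_model(["rainy", "rainy", "sunny"], models, priors)
print(chosen, scores)
```

With equal priors the comparison reduces to the likelihoods alone: 0.2 × 0.5 × 0.5 = 0.05 for the first model against 0.7 × 0.8 × 0.2 = 0.112 for the second.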