SIAM Parallel Processing


1

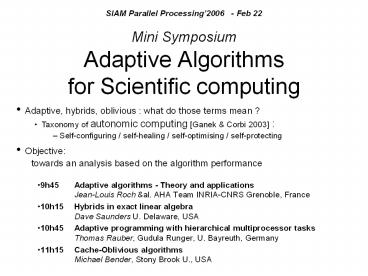

SIAM Parallel Processing 2006 - Feb 22
Mini-Symposium: Adaptive Algorithms for Scientific Computing

- Adaptive, hybrid, oblivious: what do those terms mean?
  - Taxonomy of autonomic computing [Ganek & Corbi 2003]: self-configuring / self-healing / self-optimising / self-protecting
  - Objective: towards an analysis based on the algorithm performance
- 9h45 Adaptive algorithms - Theory and applications. Jean-Louis Roch et al., AHA Team, INRIA-CNRS Grenoble, France
- 10h15 Hybrids in exact linear algebra. Dave Saunders, U. Delaware, USA
- 10h45 Adaptive programming with hierarchical multiprocessor tasks. Thomas Rauber, Gudula Rünger, U. Bayreuth, Germany
- 11h15 Cache-Oblivious algorithms. Michael Bender, Stony Brook U., USA

2

Adaptive algorithms: Theory and applications

- Van Dat Cung, Jean-Guillaume Dumas, Thierry Gautier, Guillaume Huard, Bruno Raffin, Jean-Louis Roch, Denis Trystram
- IMAG-INRIA Workgroup on Adaptive and Hybrid Algorithms, Grenoble, France

Contents
I. Some criteria to analyze adaptive algorithms
II. Work-stealing and adaptive parallel algorithms
III. Adaptive parallel prefix computation

3

Why adaptive algorithms and how?

- Resource availability is versatile: measures on resources
- Input data vary: measures on data
- Adaptation to improve performance:
  - Scheduling: partitioning, load-balancing, work-stealing
  - Calibration: tuning parameters (block size / cache, choice of instructions, ...), priority management

4

Modeling a hybrid algorithm

- Several algorithms to solve the same problem f
  - e.g. algo_f1, algo_f2(block size), ..., algo_fk
  - each algo_fk being recursive:
    algo_fi(n, ...) { ...; f(n - 1, ...); ...; f(n / 2, ...); ... }
- E.g. practical hybrids:
  - Atlas, Goto, FFPack
  - FFTW
  - cache-oblivious B-tree
  - any parallel program with scheduling support: Cilk, Athapascan/Kaapi, Nesl, TLib
  - ...
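The recursive schema above can be illustrated with a small simple hybrid. This is a sketch under assumptions that do not come from the slides: the problem f is taken to be sorting, algo_f1 is an insertion sort, algo_f2 is a merge sort, and THRESHOLD = 16 stands in for a tuned block size. What it shows is that algo_f2 recurses through f rather than through itself, so the choice of algorithm is made again at every call, exactly like the f(n / 2, ...) calls in the schema.

```python
THRESHOLD = 16  # hypothetical tuned block size: the single O(1) choice


def f(xs):
    """Problem f (here: sorting). Every call re-chooses an algorithm."""
    if len(xs) <= THRESHOLD:
        return algo_f1(xs)
    return algo_f2(xs)


def algo_f1(xs):
    """Insertion sort: low overhead on small inputs."""
    out = list(xs)
    for i in range(1, len(out)):
        key = out[i]
        j = i - 1
        while j >= 0 and out[j] > key:
            out[j + 1] = out[j]
            j -= 1
        out[j + 1] = key
    return out


def algo_f2(xs):
    """Merge sort whose recursive calls go through f, i.e. f(n / 2)."""
    mid = len(xs) // 2
    left, right = f(xs[:mid]), f(xs[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

For example, f([5, 3, 9, 1]) goes straight to algo_f1 and returns [1, 3, 5, 9]; a list of 500 elements is split by algo_f2 until the pieces fall below THRESHOLD.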

5

- How to manage the overhead due to choices?
- Classification 1/2:
  - Simple hybrid iff O(1) choices, e.g. block size in Atlas
  - Baroque hybrid iff an unbounded number of choices, e.g. recursive splitting factors in FFTW
  - Choices are either dynamic or pre-computed based on input properties.
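To make the simple/baroque distinction concrete, here is a toy planner loosely in the style of FFTW (it is not FFTW's algorithm or API). For a divide-and-conquer computation of size n, it picks one splitting factor per recursion level by minimising a made-up cost model, and stops at a fixed base size BASE. The stopping size is a single tuned constant, which makes it a simple choice. The tuple of splitting factors has one entry per level, which makes it baroque, and it is pre-computed from the input size n. The cost model, BASE and all function names are assumptions for illustration.

```python
from functools import lru_cache
from math import prod

BASE = 4  # hypothetical stopping size: a simple (single) tuned choice


def split_factors(n):
    """Candidate splitting factors r > 1 that divide n."""
    return [r for r in range(2, n + 1) if n % r == 0]


@lru_cache(maxsize=None)
def plan(n):
    """Toy planner with a hypothetical cost model.

    Returns (cost, factors): one splitting factor per recursion level,
    so the number of choices grows with n instead of being O(1).
    """
    if n <= BASE:
        return n * n, ()  # toy cost of a direct base-case kernel
    best = None
    for r in split_factors(n):
        sub_cost, sub_factors = plan(n // r)
        cost = r * sub_cost + n * r  # r sub-problems plus a toy combine cost
        if best is None or cost < best[0]:
            best = (cost, (r,) + sub_factors)
    return best


def base_size(n, factors):
    """Size of the base-case kernels once every split in the plan is applied."""
    return n // prod(factors)
```

The planner is memoised with lru_cache, so each distinct sub-size is planned once. This mirrors the idea of computing the choices ahead of time rather than at every call.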

6

- Choices may or may not be based on architecture parameters.
- Classification 2/2: a hybrid is
  - Oblivious: the control flow depends neither on static properties of the resources nor on the input, e.g. cache-oblivious algorithm [Bender]
  - Tuned: strategic choices are based on static parameters, e.g. block size w.r.t. cache, granularity
    - Engineered tuned or self-tuned, e.g. ATLAS and GOTO libraries, FFTW, LinBox/FFLAS [Saunders et al.]
  - Adaptive: self-configuration of the algorithm, dynamic
    - Based on input properties or resource circumstances discovered at run-time, e.g. idle processors, data properties, TLib [Rauber & Rünger]
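The tuned/oblivious distinction can be sketched on dense matrix multiplication. This sketch rests on several assumptions: pure Python lists, a cache modelled only by its size in bytes, and the rule of thumb that three b-by-b tiles should fit in cache. The tuned variant derives its block size from that static architecture parameter. The oblivious variant follows the classic recursive scheme that halves the largest dimension and never reads any cache parameter. The function names are hypothetical, and pure Python will not exhibit the cache effects themselves; only the control structure is illustrated.

```python
import math


def tuned_block_size(cache_bytes, elem_bytes=8):
    """Tuned choice from a static architecture parameter: pick b so that
    three b-by-b tiles (of A, B and C) fit in a cache of cache_bytes."""
    return max(1, math.isqrt(cache_bytes // (3 * elem_bytes)))


def matmul_tiled(A, B, b):
    """Tuned hybrid: loop tiling with an explicit block size b."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    for i0 in range(0, n, b):
        for p0 in range(0, k, b):
            for j0 in range(0, m, b):
                for i in range(i0, min(i0 + b, n)):
                    row_c = C[i]
                    for p in range(p0, min(p0 + b, k)):
                        a, row_b = A[i][p], B[p]
                        for j in range(j0, min(j0 + b, m)):
                            row_c[j] += a * row_b[j]
    return C


def matmul_oblivious(A, B):
    """Oblivious: halve the largest dimension recursively; no cache size."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]

    def rec(i0, i1, p0, p1, j0, j1):
        di, dp, dj = i1 - i0, p1 - p0, j1 - j0
        if di * dp * dj <= 64:  # fixed small base case, independent of the cache
            for i in range(i0, i1):
                for p in range(p0, p1):
                    a = A[i][p]
                    for j in range(j0, j1):
                        C[i][j] += a * B[p][j]
        elif di >= dp and di >= dj:  # split the rows of A and C
            mid = (i0 + i1) // 2
            rec(i0, mid, p0, p1, j0, j1)
            rec(mid, i1, p0, p1, j0, j1)
        elif dj >= dp:  # split the columns of B and C
            mid = (j0 + j1) // 2
            rec(i0, i1, p0, p1, j0, mid)
            rec(i0, i1, p0, p1, mid, j1)
        else:  # split the inner dimension; both halves accumulate into C
            mid = (p0 + p1) // 2
            rec(i0, i1, p0, mid, j0, j1)
            rec(i0, i1, mid, p1, j0, j1)

    rec(0, n, 0, k, 0, m)
    return C
```

For a 32 KiB cache and 8-byte elements, tuned_block_size(32 * 1024) gives 36. The oblivious version needs no such value.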

7

Examples

- BLAS libraries
  - Atlas: simple tuned (self-tuned)
  - Goto: simple engineered (engineered tuned)
  - LinBox / FFLAS: simple self-tuned, adaptive [Saunders et al.]
- FFTW
  - Halving factor: baroque tuned
  - Stopping criterion: simple tuned
- Parallel algorithm and scheduling
  - Choice of parallel degree, e.g. TLib [Rauber & Rünger]
  - Work-stealing schedule: baroque hybrid

8

Adaptive algorithms: Theory and applications

- Van Dat Cung, Jean-Guillaume Dumas, Thierry Gautier, Guillaume Huard, Bruno Raffin, Jean-Louis Roch, Denis Trystram
- INRIA-CNRS Project on Adaptive and Hybrid Algorithms, Grenoble, France

Contents
I. Some criteria to analyze adaptive algorithms
II. Work-stealing and adaptive parallel algorithms
III. Adaptive parallel prefix computation

9

Work-stealing (1/2)

- Work $W_1$: total number of operations performed
- Depth $W_\infty$: number of operations on a critical path (parallel time on $\infty$ resources)
- Work-stealing: a greedy schedule, but distributed and randomized
  - Each processor manages locally the tasks it creates
  - When idle, a processor steals the oldest ready task on a remote, non-idle victim processor (randomly chosen)
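A minimal round-based simulation makes the stealing rule concrete. It assumes unit-time tasks forming a complete binary fork tree and synchronous rounds. It also assumes a thief picks uniformly among processors whose deque is non-empty; this is a simplification, since real implementations pick a random victim and the attempt may fail. simulate_work_stealing is a hypothetical name. As on the slide, each processor pops its newest task locally and, when idle, steals the oldest task from a victim.

```python
import random
from collections import deque


def simulate_work_stealing(depth, p, seed=0):
    """Round-based simulation of randomized work-stealing on a complete
    binary fork tree: a task at level < depth spawns two child tasks.

    Work W1 = 2**(depth + 1) - 1 tasks; depth W_inf = depth + 1 tasks.
    Returns (rounds, executed, steals).
    """
    rng = random.Random(seed)
    deques = [deque() for _ in range(p)]
    deques[0].append(0)  # the root task, represented by its tree level
    total = 2 ** (depth + 1) - 1
    executed = steals = rounds = 0
    while executed < total:
        rounds += 1
        for q in range(p):
            if deques[q]:
                # Local work: pop the newest task (depth-first, like a call).
                level = deques[q].pop()
                executed += 1
                if level < depth:
                    deques[q].append(level + 1)
                    deques[q].append(level + 1)
            else:
                # Idle: steal the oldest task of a random non-idle victim.
                victims = [v for v in range(p) if deques[v]]
                if victims:
                    victim = rng.choice(victims)
                    deques[q].append(deques[victim].popleft())
                    steals += 1
    return rounds, executed, steals
```

With p = 1 the simulation reproduces the sequential execution ($W_1$ rounds, no steals). For any p, the round count stays at or above both $W_1/p$ and $W_\infty$, since each round executes at most p tasks and a task at level L cannot run before round L + 1.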

10

Work-stealing (2/2)


- Interests:
  - -> suited to heterogeneous architectures with slight modification [Bender-Rabin 02]
  - -> with good probability, near-optimal schedule on p processors with average speeds $\Pi_{ave}$: $T_p < \frac{W_1}{p\,\Pi_{ave}} + O\left(\frac{W_\infty}{\Pi_{ave}}\right)$
  - NB: number of successful steals (task migrations) $< p\,W_\infty$ [Blumofe 98, Narlikar 01, Bender 02]
- Implementation: work-first principle [Cilk, Kaapi]
  - Local parallelism is implemented by sequential function call
  - Restrictions to ensure validity of the default sequential schedule:
    - series-parallel / Cilk
    - reference order / Kaapi

11

Work-stealing and adaptability

- Work-stealing ensures allocation of processors to tasks transparently to the application, with provable performance
- Support for addition of new resources
- Support for resilience of resources and fault-tolerance (crash faults, network, ...)
  - Checkpoint/restart mechanisms with provable performance [Porch, Kaapi, ...]
- "Baroque hybrid" adaptation: there is an -implicit- dynamic choice between two algorithms
  - a sequential (local) algorithm: depth-first (default choice)
  - a parallel algorithm: breadth-first
  - Choice is performed at runtime, depending on resource idleness
- Well suited to applications where a fine-grain parallel algorithm is also a good sequential algorithm [Cilk]:
  - Parallel Divide&Conquer computations
  - Tree searching, Branch&X
  - -> suited when both sequential and parallel algorithms perform (almost) the same number of operations

12

But often parallelism has a cost!

- Solution: mix both a sequential and a parallel algorithm
- Basic technique:
  - Parallel algorithm until a certain grain, then use the sequential one
  - Problem: $W_\infty$ increases; so do the number of migrations and the inefficiency :o(
- Work-preserving speed-up [Bini-Pan 94], cascading [JaJa 92]: careful interplay of both algorithms to build one with both $W_\infty$ small and $W_1 = O(W_{seq})$
  - Divide the sequential algorithm into blocks
  - Each block is computed with the (non-optimal) parallel algorithm
  - Drawback: sequential at coarse grain and parallel at fine grain :o(
- Adaptive granularity: dual approach
  - Parallelism is extracted at run-time from any sequential task

13

Self-adaptive grain algorithm

- Based on the work-first principle: always executes a sequential algorithm to reduce parallelism overhead
  - -> use the parallel algorithm only if a processor becomes idle, by extracting parallelism from a sequential computation
- Hypothesis: two algorithms
  - 1 sequential: SeqCompute
  - 1 parallel: LastPartComputation: at any time, it is possible to extract parallelism from the remaining computations of the sequential algorithm
- Examples:
  - iterated product [Vernizzi 05]
  - gzip / compression [Kerfali 04]
  - MPEG-4 / H264 [Bernard 06]
  - prefix computation [Traore 06]
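The SeqCompute / LastPartComputation pair can be sketched as a shared range of iterations, assuming the computation is a simple reduction (a sum of f(i)). The owner repeatedly takes the next chunk from the front and runs it sequentially. An idle thief takes the second half of what remains and starts its own sequential loop on it, and that loop can in turn be stolen from. AdaptiveRange, adaptive_sum and the chunk size are hypothetical names and parameters. Python threads under the GIL illustrate the control flow, not a speed-up.

```python
import random
import threading


class AdaptiveRange:
    """Hypothetical work container holding the iterations [begin, end).

    The owner takes work from the front (SeqCompute); a thief takes the
    last half of what remains (LastPartComputation).
    """

    def __init__(self, begin, end):
        self.begin, self.end = begin, end
        self.lock = threading.Lock()

    def extract_seq(self, k):
        # Owner: take up to k iterations from the front.
        with self.lock:
            lo, hi = self.begin, min(self.begin + k, self.end)
            self.begin = hi
            return lo, hi

    def extract_par(self):
        # Thief: take the second half of the remaining iterations, if any.
        with self.lock:
            if self.end - self.begin < 2:
                return None
            mid = (self.begin + self.end) // 2
            stolen = (mid, self.end)
            self.end = mid
            return stolen


def adaptive_sum(f, n, n_thieves=3, chunk=64):
    """Sum of f(i) for i in range(n); work is split only when a thief asks."""
    root = AdaptiveRange(0, n)
    ranges, registry_lock, partials = [root], threading.Lock(), []

    def run(rng):
        # The sequential algorithm, applied to whatever remains of rng.
        acc = 0
        while True:
            lo, hi = rng.extract_seq(chunk)
            if lo >= hi:
                return acc
            for i in range(lo, hi):
                acc += f(i)

    def thief():
        acc = 0
        while True:
            with registry_lock:
                victims = list(ranges)
            random.shuffle(victims)  # random victim order
            stolen = None
            for victim in victims:
                stolen = victim.extract_par()
                if stolen is not None:
                    break
            if stolen is None:
                break  # nothing stealable found in this scan: stop
            rng = AdaptiveRange(*stolen)
            with registry_lock:
                ranges.append(rng)  # the stolen part can be stolen from again
            acc += run(rng)
        with registry_lock:
            partials.append(acc)

    workers = [threading.Thread(target=thief) for _ in range(n_thieves)]
    for w in workers:
        w.start()
    total = run(root)  # the main process runs the sequential algorithm
    for w in workers:
        w.join()
    return total + sum(partials)
```

Each iteration is handed out exactly once, because both extraction methods shrink the same range under its lock. With n_thieves=0 the function degenerates to the plain sequential loop, which matches the default choice of the work-first principle.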

14

Adaptive algorithms: Theory and applications

- Van Dat Cung, Jean-Guillaume Dumas, Thierry Gautier, Guillaume Huard, Bruno Raffin, Jean-Louis Roch, Denis Trystram
- INRIA-CNRS Project on Adaptive and Hybrid Algorithms, Grenoble, France

Contents
I. Some criteria to analyze adaptive algorithms
II. Work-stealing and adaptive parallel algorithms
III. Adaptive parallel prefix computation

15

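Prefix computation: an example where parallelism always costs

$\pi_1 = a_0 * a_1$, $\pi_2 = a_0 * a_1 * a_2$, ..., $\pi_n = a_0 * a_1 * \dots * a_n$

- Sequential algorithm: $\pi_0 = a_0$, then for $i = 1, \dots, n$: $\pi_i = \pi_{i-1} * a_i$; hence $W_1 = W_\infty = n$
- Parallel algorithm [Ladner-Fischer]: $W_\infty = 2 \log n$ but $W_1 = 2n$, i.e. twice as expensive as the sequential algorithm

[Figure: parallel prefix circuit on inputs $a_0, a_1, \dots, a_n$]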
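The work/depth trade-off can be checked by counting operations. The sketch uses the classic recursive pairwise parallel prefix: combine disjoint pairs, recurse on the half-size sequence, then fix up the even positions. It is chosen because it is simple and has the same profile as the algorithm cited on the slide; it is not the exact Ladner-Fischer construction. Work $W_1$ is counted as the number of applications of the associative operator, and depth $W_\infty$ as the longest chain of dependent applications. For $m = 2^k$ input elements, its work is $2m - 2 - \log_2 m$, against $m - 1$ for the sequential loop. pairwise_prefix is a hypothetical name.

```python
import operator


def pairwise_prefix(a, op=operator.add):
    """Recursive pairwise parallel prefix for an associative op.

    Returns (prefix, work, depth): work counts op applications (W_1),
    depth is the longest chain of dependent applications (W_inf).
    """
    work = 0

    def apply(u, v):
        # u, v are (value, time at which the value is available).
        nonlocal work
        work += 1
        return op(u[0], v[0]), max(u[1], v[1]) + 1

    def rec(xs):
        n = len(xs)
        if n <= 1:
            return list(xs)
        # 1) combine disjoint pairs: n // 2 independent ops, one parallel step
        pairs = [apply(xs[2 * i], xs[2 * i + 1]) for i in range(n // 2)]
        # 2) prefix of the pairs: c[i] = xs[0] * ... * xs[2i + 1]
        c = rec(pairs)
        out = [None] * n
        out[0] = xs[0]
        for i, ci in enumerate(c):
            out[2 * i + 1] = ci
        # 3) fix up the remaining even positions, again all independent
        for i in range(1, (n + 1) // 2):
            out[2 * i] = apply(c[i - 1], xs[2 * i])
        return out

    result = rec([(x, 0) for x in a])
    return [v for v, _ in result], work, max((t for _, t in result), default=0)
```

For 1024 elements this gives 2036 operations, roughly twice the 1023 of the sequential loop, with depth at most 20. Because the operator is always applied left operand first, non-commutative operators such as string concatenation also work.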

16

Adaptive prefix computation

- Any (parallel) prefix performs at least $W_1 \geq 2n - W_\infty$ ops
- Strict lower bound on p identical processors: $T_p \geq \frac{2n}{p+1}$ (block algorithm + pipeline [Nicolau et al. 2000])
- Application of the adaptive scheme:
  - One process performs the main sequential computation
  - Other work-stealer processes compute a parallel segmented prefix
  - Near-optimal performance on processors with changing speeds: $T_p < \frac{2n}{(p+1)\,\Pi_{ave}} + O\left(\frac{\log n}{\Pi_{ave}}\right)$, i.e. the lower bound plus a logarithmic term
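The segmented prefix computed by the work-stealers can be illustrated, under simplifying assumptions, by the classic static block algorithm. Each block computes a local prefix. A short scan then computes the carry entering each block, and every block but the first is fixed up with its carry. Here the partition into p blocks is fixed in advance (no stealing, no pipelining), so its parallel time is about 2n/p plus p for the carry scan, rather than the 2n/(p+1) bound. block_prefix is a hypothetical name, and the sketch only shows where the extra operations come from.

```python
import operator


def block_prefix(a, p, op=operator.add):
    """Static segmented prefix on p blocks (1 <= p <= len(a)).

    Returns (prefix, work) where work counts applications of op.
    Phases 1 and 3 are parallel over blocks; phase 2 is a short scan.
    """
    n = len(a)
    if not 1 <= p <= n:
        raise ValueError("need 1 <= p <= len(a)")
    bounds = [(k * n // p, (k + 1) * n // p) for k in range(p)]
    out, work = list(a), 0
    # Phase 1: local prefix inside each block (the per-segment work).
    for lo, hi in bounds:
        for i in range(lo + 1, hi):
            out[i] = op(out[i - 1], out[i])
            work += 1
    # Phase 2: carry entering block k = prefix of all elements before it.
    carries = [None] * p
    for k in range(1, p):
        block_total = out[bounds[k - 1][1] - 1]
        if k == 1:
            carries[k] = block_total  # block 0 is already final
        else:
            carries[k] = op(carries[k - 1], block_total)
            work += 1
    # Phase 3: fix up every block except the first with its carry.
    for k in range(1, p):
        lo, hi = bounds[k]
        for i in range(lo, hi):
            out[i] = op(carries[k], out[i])
            work += 1
    return out, work
```

With 12 elements and p = 3 it performs 18 operations instead of the 11 of the sequential loop. As p grows the count approaches 2n, consistent with the bound $W_1 \geq 2n - W_\infty$.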

17

Adaptive Prefix on 3 processors

[Figure (animated over several slides): the sequential process and two work-stealing processes progressively compute the prefix values $\pi_1, \dots, \pi_{12}$]

Implicit critical path on the sequential process

23

Adaptive prefix: some experiments

Joint work with Daouda Traore

Prefix of 10000 elements on an SMP with 8 processors (IA64 / Linux)

[Plots: time (s) vs. number of processors, with and without external charge]

- Multi-user context (external charge): adaptive is the fastest, with a 15% benefit over a static-grain algorithm
- Single-user context: adaptive is equivalent to
  - sequential on 1 processor
  - optimal parallel-2 proc. on 2 processors
  - ...
  - optimal parallel-8 proc. on 8 processors

24

The Prefix race: sequential / parallel fixed / adaptive

On each of the 10 executions, adaptive completes first.

25

Conclusion

- Adaptive: what choices and how to choose?
- Illustration: adaptive parallel prefix based on work-stealing
  - self-tuned baroque hybrid: O(p log n) choices
  - achieves near-optimal performance
  - processor oblivious
- Generic adaptive scheme to implement parallel algorithms with provable performance

26

Mini-Symposium: Adaptive Algorithms for Scientific Computing

- Adaptive, hybrid, oblivious: what do those terms mean?
  - Taxonomy of autonomic computing [Ganek & Corbi 2003]: self-configuring / self-healing / self-optimising / self-protecting
  - Objective: towards an analysis based on the algorithm performance
- 9h45 Adaptive algorithms - Theory and applications. Jean-Louis Roch et al., AHA Team, INRIA-CNRS Grenoble, France
- 10h15 Hybrids in exact linear algebra. Dave Saunders, U. Delaware, USA
- 10h45 Adaptive programming with hierarchical multiprocessor tasks. Thomas Rauber, U. Bayreuth, Germany
- 11h15 Cache-Oblivious algorithms. Michael Bender, Stony Brook U., USA

27

Questions ?