
1

Introduction to Vision and Graphics

2

Course Information

- Course structure

- Lecture Wednesday

- 1pm - C60

- 2pm - LT1

- Practical Friday

- 12pm A32 (start week 3)

- Assessment

- Assignment (25%)

- 2 hour exam (75%)

- Website

- http://cs.nott.ac.uk/bai/IVG/index.html

- Text books

- Digital Image Processing

- Rafael Gonzalez and Richard Woods

- Digital Image Processing Using Java

- Nick Efford

3

Contents

- Introduction to vision and graphics

- Image processing

- Edge detection

- Image segmentation

- Image registration

- Image transforms

- Motion detection and tracking

- Introduction to graphics

4

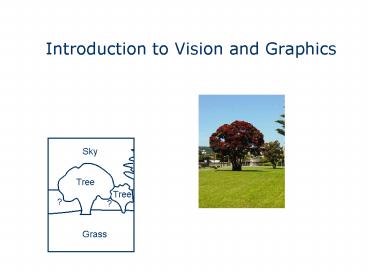

Image Processing, Vision, Graphics

- In image processing we do things like

- Removing noise from images

- Finding edges and features in images

- In computer vision we do things like

- Finding moving objects in a scene

- Recognising objects from a database

- Building abstract models of the world from images

- In computer graphics we do things like

- Creating a 3D model with realistic shape, colour, and texture, and projecting it to 2D for viewing

- Animating the 3D model

- Creating a virtual world and animating the objects in it

- The link between imaging and graphics

- Image based modelling

5

Vision Example - Face Recognition

What Vision does

6

Graphics examples

7

Convergence of Vision and Graphics

Courtesy of Chinese Academy of Sciences

8

Week One

- Image Acquisition

- Colour Models

- Image Formats/Compression

- A simple Java class JPEGImage

- Image Noise Filtering

9

Image Acquisition

- A simple camera model is the pinhole camera model (conventional cameras do not have pinholes)

- Light passes through a small hole and falls on an image plane

- The image is inverted and scaled

- Digital cameras use CCDs (charge-coupled devices)

- A CCD is a circuit consisting of an electrode attached to an insulator, behind which is a block of semiconducting silicon

- The electrode is connected to the positive terminal of a power supply, and the silicon to the negative terminal

- When a photon (light) hits a CCD, it strips electrons from the silicon, and the electrons are attracted to the electrode

- Counting the electrons under each electrode is where charge coupling comes in: varying the charges on the electrodes moves electrons from electrode to electrode, to be collected and counted by additional circuits

- The outputs of CCDs are numbers representing the amount of light that reached the devices when the image was formed

10

Digital Images

- So a digital image contains a 2D array of pixels

- Each pixel may have either a single grey value or several (colour) values, typically RGB (red, green, blue)

11

Colour

- We need a way to represent the colour of the pixels of an image

- RGB is a common colour model used for colour displays such as computer monitors, televisions, etc.

- The colours we perceive in an object are determined by the nature of the light reflected from the object

- When light hits objects, some of the wavelengths are absorbed and some are reflected, depending on the materials in the object. The reflected wavelengths are what we perceive as the object's colour

- Visible light is composed of a narrow band of frequencies in the electromagnetic spectrum, e.g., green objects reflect light with wavelengths in the 500 to 570 nm range

Figure: the visible spectrum, with a wavelength axis running from 400 to 700 nanometers

12

RGB Colour Model

- Three primary colours: red, green, blue. These are colours of light rather than pigments

- RGB space is linked to the way our eyes work: there are 3 sorts of cones, each responding differently to different wavelengths of light (red, green, and blue)

- RGB colours can be seen as a 3D space, with axes of red, green, and blue. All the colours made by mixing these lie in a cube extending from the origin (black)

Figure: the RGB cube, with axes R, G, and B each running from 0 to 255

Colour values as (red, green, blue):

- Black (0, 0, 0)

- Red (255, 0, 0)

- Green (0, 255, 0)

- Yellow (255, 255, 0)

- Blue (0, 0, 255)

- Magenta (255, 0, 255)

- Cyan (0, 255, 255)

- White (255, 255, 255)

13

Primary and Secondary Colours

Red + Blue = Magenta

Blue + Green = Cyan

Green + Red = Yellow

Primary: Red, Green, Blue

Secondary: Magenta, Cyan, Yellow

14

RGB Example

Figure: a colour image and its red, green, and blue channels

15

Greyscale

- Sometimes we want a single value at each pixel to make processing easier

- This value is usually the intensity or grey value

- We can convert an RGB image to greyscale using

- i = 0.30r + 0.59g + 0.11b

- i is the grey value

- r, g, and b are the red, green, and blue values

Figure: the original image, greyscale by simple average, and greyscale by weighted sum
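The two greyscale conversions named on this slide can be sketched as follows. This is a minimal illustration, assuming 8-bit channel values in the range 0 to 255; the class and method names are hypothetical and are not part of the course's JPEGImage class.

```java
class GreyscaleSketch {
    /** Weighted grey value, using the weights 0.30, 0.59, 0.11 from the slide. */
    static int weightedGrey(int r, int g, int b) {
        return (int) Math.round(0.30 * r + 0.59 * g + 0.11 * b);
    }

    /** Plain average of the three channels, for comparison. */
    static int averageGrey(int r, int g, int b) {
        return (r + g + b) / 3;
    }

    public static void main(String[] args) {
        // Pure green looks much brighter under the weighted formula than under the average.
        System.out.println("weighted: " + weightedGrey(0, 255, 0));
        System.out.println("average:  " + averageGrey(0, 255, 0));
    }
}
```

The weighted version gives green the largest share, so a pure green pixel comes out brighter than it does with the plain average.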

16

HSV Colour Model

- Primary colours are separated by 120 degrees

- Secondary colours are 60 degrees from primaries

17

HSV ↔ RGB

- We can convert HSV to/from RGB

- Scaling is important, as different ranges are used: e.g., RGB values are often in the range [0,1] or [0,255], and hue angles can be in degrees or radians

- We will assume

- RGB values are in the range [0,1]

- H values are in degrees and in the range [0,360]

- S and V are in the range [0,1]

- In RGB, if we move a plane perpendicular to the axis from black (0,0,0) to the white point (255,255,255), only the strength of the colour will change

- In HSV the position along the neutral axis is called value, and the strength (pureness) of the colour is called saturation

18

RGB → HSV

- V is the largest of the RGB values

- S is the range of the RGB values compared to V

- The hue depends on R, G, B in a more complex manner

- If R = G = B then we have a grey colour, so H is undefined

- Otherwise the highest of R, G, B tells us which part of the colour wheel we're in

19

RGB → HSV

- V = max(R,G,B)

- delta = max(R,G,B) - min(R,G,B)

- if (V = 0 OR delta = 0)

- H is undefined

- else if (R = V)

- H = 60(G-B)/delta

- else if (G = V)

- H = 120 + 60(B-R)/delta

- else if (B = V)

- H = 240 + 60(R-G)/delta
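The RGB to HSV steps can be sketched in Java as below, assuming R, G, and B are in the range [0,1]. Three details are assumptions rather than something the slides state: saturation is computed as delta / V (following the description of S as the range of the RGB values compared to V), an undefined hue is returned as NaN, and a negative hue is wrapped by adding 360. The class and method names are hypothetical.

```java
class RgbToHsvSketch {
    /**
     * Converts r, g, b in [0,1] to {h, s, v}.
     * h is in degrees in [0,360), or NaN when the colour is grey (hue undefined).
     */
    static double[] rgbToHsv(double r, double g, double b) {
        double v = Math.max(r, Math.max(g, b));
        double delta = v - Math.min(r, Math.min(g, b));
        // Assumption: S = delta / V, with S = 0 for black.
        double s = (v == 0.0) ? 0.0 : delta / v;

        double h;
        if (delta == 0.0) {
            h = Double.NaN;                       // grey (including black): hue undefined
        } else if (r == v) {
            h = 60.0 * (g - b) / delta;
        } else if (g == v) {
            h = 120.0 + 60.0 * (b - r) / delta;
        } else {
            h = 240.0 + 60.0 * (r - g) / delta;
        }
        if (h < 0.0) {
            h += 360.0;                           // assumption: wrap negative hues into [0,360)
        }
        return new double[] {h, s, v};
    }
}
```

For example, magenta (1, 0, 1) first gives H = -60 from the red branch, which wraps to 300 degrees.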

20

HSV → RGB

- if (S = 0) R = G = B = V

- else

- C = H \ 60 (integer division)

- F = (H - 60C)/60

- P = V(1-S)

- Q = V(1-SF)

- T = V(1-S(1-F))

- if (C = 0) R = V, G = T, B = P

- else if (C = 1) R = Q, G = V, B = P

- else if (C = 2) R = P, G = V, B = T

- else if (C = 3) R = P, G = Q, B = V

- else if (C = 4) R = T, G = P, B = V

- else if (C = 5) R = V, G = P, B = Q
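The HSV to RGB steps translate fairly directly into Java. The sketch below assumes H in degrees and S, V in [0,1]; wrapping H into [0,360) before dividing is an added assumption so that H = 360 is treated like H = 0. The class and method names are hypothetical.

```java
class HsvToRgbSketch {
    /** Converts h (degrees), s and v (both in [0,1]) to {r, g, b} in [0,1]. */
    static double[] hsvToRgb(double h, double s, double v) {
        if (s == 0.0) {
            return new double[] {v, v, v};        // grey: hue is irrelevant
        }
        // Assumption: wrap the hue so that 360 (or a negative angle) is handled.
        double hue = ((h % 360.0) + 360.0) % 360.0;
        int c = (int) (hue / 60.0);               // sector of the colour wheel, 0..5
        double f = (hue - 60.0 * c) / 60.0;       // position within the sector, 0..1
        double p = v * (1.0 - s);
        double q = v * (1.0 - s * f);
        double t = v * (1.0 - s * (1.0 - f));
        switch (c) {
            case 0:  return new double[] {v, t, p};
            case 1:  return new double[] {q, v, p};
            case 2:  return new double[] {p, v, t};
            case 3:  return new double[] {p, q, v};
            case 4:  return new double[] {t, p, v};
            default: return new double[] {v, p, q}; // c == 5
        }
    }
}
```

With S = V = 1, hues of 0, 60, 120, 240, and 300 degrees give red, yellow, green, blue, and magenta respectively.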

21

HSV Example

Figure: a colour image and its hue, saturation, and value channels

22

Image Formats/Compression

- Digital images take up a large amount of space, e.g., an image of 2048x1536 pixels, with 1 byte for each of red, green, and blue, requires 9 MB

- Many image formats have been proposed

- JPEG (Joint Photographic Experts Group)

- GIF (Graphics Interchange Format)

- BMP, TIFF, etc.

- Most formats aim to reduce the size of the file, e.g., for a 225x300 image, GIF is 40 kilobytes, while JPEGs range from 41 to 6 kilobytes depending on quality

- GIF images use a colour palette, e.g., each pixel is assigned one of up to 256 chosen colours, reducing the size to one third of the original

- JPEG converts images using a discrete cosine transform, and quantises the results to discard frequencies that contribute little to the image

23

Image Formats/Compression

Figure: the original image, the GIF version, and the JPEG (high quality) version

24

A simple Java class JPEGImage

- Inside JPEGImage

- A BufferedImage is used to store the actual image information

- Java provides a JPEGCodec (coder and decoder) which is used to read and write JPEG files

- public JPEGImage()

- Default constructor, makes an instance of JPEGImage which doesn't have an image

- public JPEGImage(int width, int height)

- Creates an image of the given size and colours all the pixels black

- public void read(String filename)

- Reads a JPEG image file

- public void write(String filename)

- Writes the image as a JPEG file

- public int getHeight()

- Returns the dimensions of an image. Also getWidth()

- public int getRed(int x, int y)

- Returns the red value at a given coordinate. Also getGreen(), getBlue()

- public void setRed(int x, int y, int value)

- Changes the red value at the coordinates to the given value. Also setGreen() and setBlue()

- public void setRGB(int x, int y, int r, int g, int b)
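The course's own JPEGImage source is not reproduced in these slides, so the following is only a hypothetical sketch of how a class with a similar interface could be built on a BufferedImage. It is named JPEGImageSketch to make clear it is not the course class, and it uses javax.imageio.ImageIO instead of JPEGCodec, since the com.sun.image.codec.jpeg package is not available in recent JDKs. Only a subset of the methods is shown.

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import javax.imageio.ImageIO;

class JPEGImageSketch {
    private BufferedImage image;   // null until an image is created or read

    JPEGImageSketch() { }

    JPEGImageSketch(int width, int height) {
        // TYPE_INT_RGB pixels are initialised to 0, which is black.
        image = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
    }

    void read(String filename) throws IOException {
        BufferedImage loaded = ImageIO.read(new File(filename));
        if (loaded == null) {
            throw new IOException("Not a readable image: " + filename);
        }
        image = loaded;
    }

    void write(String filename) throws IOException {
        if (!ImageIO.write(image, "jpg", new File(filename))) {
            throw new IOException("No JPEG writer available for: " + filename);
        }
    }

    int getWidth()  { return image.getWidth(); }
    int getHeight() { return image.getHeight(); }

    // getRGB packs a pixel as 0xAARRGGBB, so each channel is one byte.
    int getRed(int x, int y)   { return (image.getRGB(x, y) >> 16) & 0xFF; }
    int getGreen(int x, int y) { return (image.getRGB(x, y) >> 8) & 0xFF; }
    int getBlue(int x, int y)  { return image.getRGB(x, y) & 0xFF; }

    void setRGB(int x, int y, int r, int g, int b) {
        image.setRGB(x, y, (r << 16) | (g << 8) | b);
    }
}
```

Keeping the pixel packing inside the accessors means calling code can work with plain 0 to 255 channel values and never touch the packed integer.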

25

Example - Reading in an Image

- JPEGImage imageOne = new JPEGImage();

- try {

-     imageOne.read(args[0]);

- } catch (Exception e) {

-     System.out.println(

-         "ERROR reading file " + args[0]);

-     System.exit(1);

- }

26

Example - Copying to a New Image

- JPEGImage imageTwo = new JPEGImage(imageOne.getWidth(),

-     imageOne.getHeight());

- for (int x = 0; x < imageOne.getWidth(); x++) {

-     for (int y = 0; y < imageOne.getHeight(); y++) {

-         int red = imageOne.getRed(x, y);

-         int green = imageOne.getGreen(x, y);

-         int blue = imageOne.getBlue(x, y);

-         imageTwo.setRGB(x, y, red, green, blue);

-     }

- }

27

Example - Writing Image to a File

- try {

-     imageTwo.write(args[1]);

- } catch (Exception e) {

-     System.out.println(

-         "ERROR writing file " + args[1]);

-     System.exit(1);

- }

28

A Typical Construct

- for (int x = 0; x < width; x++) {

-     for (int y = 0; y < height; y++) {

-         // Do stuff

-     }

- }

- This is a very common construct in computer image processing programs

- It passes over the image and visits each pixel

29

Image Denoising

- Digital images are quantised; typically we only record 256 different light levels

- When we use lossy compression we introduce errors

- Imperfect sensors also introduce noise

- Noise can be modelled as a random value with a probability density function (PDF), e.g.,

- Uniform

- Gaussian

- Adding noise to an image

- A random number seed must be specified

- Each pixel in the noisy image is the sum of the true pixel value and a random, uniformly or Gaussian distributed noise value
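Adding seeded noise to a greyscale image can be sketched as below. The image is assumed to be an int[rows][columns] array of grey values in 0 to 255, and clamping the result back into that range is an assumption the slides do not mention. The class and method names are hypothetical.

```java
import java.util.Random;

class NoiseSketch {
    /** Keeps a grey value inside the displayable range (an added assumption). */
    static int clamp(int value) {
        return Math.max(0, Math.min(255, value));
    }

    /** Adds noise drawn uniformly from [-a, a) to every pixel. */
    static int[][] addUniformNoise(int[][] image, double a, long seed) {
        Random rng = new Random(seed);            // fixed seed gives repeatable noise
        int[][] noisy = new int[image.length][image[0].length];
        for (int y = 0; y < image.length; y++) {
            for (int x = 0; x < image[0].length; x++) {
                double noise = (rng.nextDouble() * 2.0 - 1.0) * a;
                noisy[y][x] = clamp((int) Math.round(image[y][x] + noise));
            }
        }
        return noisy;
    }

    /** Adds zero-mean Gaussian noise with standard deviation sigma to every pixel. */
    static int[][] addGaussianNoise(int[][] image, double sigma, long seed) {
        Random rng = new Random(seed);
        int[][] noisy = new int[image.length][image[0].length];
        for (int y = 0; y < image.length; y++) {
            for (int x = 0; x < image[0].length; x++) {
                double noise = rng.nextGaussian() * sigma;
                noisy[y][x] = clamp((int) Math.round(image[y][x] + noise));
            }
        }
        return noisy;
    }
}
```

Passing the same seed twice produces the same noisy image, which makes experiments with different filters comparable.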

30

Uniform Noise

Figure: image with varying degrees of uniform noise added (a = 5, a = 10, a = 25)

- Pixel values are usually close to their true value

- Errors lie in the range -a to a, and each value is equally likely

- The noise PDF is a uniform distribution

Figure: plot of the uniform PDF P(x), constant between x = -a and x = a

31

Gaussian Noise

Figure: images with varying degrees of Gaussian noise added (σ = 1, σ = 10, σ = 20)

- The noise PDF is a Gaussian distribution

- Mean (µ) = 0 (normally); the variance (σ²) indicates how much noise there is

- Each pixel in the noisy image is the sum of the pixel value and a random, Gaussian distributed noise value

Figure: plot of the Gaussian PDF, centred on µ

32

Removing Noise

- Some sorts of processing are sensitive to noise

- We want to remove the effects of noise before going further

- However, we may lose information in doing so

- Most noise removal processes are called filters

- They are applied to each point in an image through convolution

- They use information in a small local window of pixels

33

Image Filters

Figure: source image and target image

34

Mean Filter

- A simple noise removal filter is the mean filter

- Each pixel is set to the mean (average) over a local window

- This blurs the image, making it smoother and removing fine details

The 3x3 mean filter:

1/9 1/9 1/9

1/9 1/9 1/9

1/9 1/9 1/9

Convolution with the image area covered: p1 x 1/9 + p2 x 1/9 + ... + p9 x 1/9
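A 3x3 mean filter can be sketched as follows, assuming a greyscale image stored as int[rows][columns]. Border pixels are simply copied unchanged here, which is one possible choice and not something this slide specifies. The class and method names are hypothetical.

```java
class MeanFilterSketch {
    /** Returns a new image in which each interior pixel is the mean of its 3x3 window. */
    static int[][] meanFilter3x3(int[][] src) {
        int height = src.length;
        int width = src[0].length;
        int[][] dst = new int[height][width];
        for (int y = 0; y < height; y++) {
            dst[y] = src[y].clone();              // border pixels keep their original value
        }
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int sum = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        sum += src[y + dy][x + dx];
                    }
                }
                dst[y][x] = Math.round(sum / 9.0f);   // each weight is 1/9
            }
        }
        return dst;
    }
}
```

The filter reads from the source and writes to a separate target, so already-filtered values never feed into later windows.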

35

Mean Filter

Figure: mean filter applied to the original image, the image with uniform noise, and the image with Gaussian noise

36

Median Filter

- An alternative to the mean filter is the median filter

- Statistically the median is the middle value in a set

- Each pixel is set to the median value in a local window

Example window values: 123, 124, 125, 129, 127, 9, 126, 123, 131
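The median of a window can be found by sorting its values and taking the middle one. Sorted, the nine values from this slide are 9, 123, 123, 124, 125, 126, 127, 129, 131, so the median is 125, while the mean is pulled down to 113 by the outlier 9. The sketch below uses hypothetical class and method names.

```java
import java.util.Arrays;

class MedianSketch {
    /** Returns the middle value of the window after sorting a copy of it. */
    static int median(int[] window) {
        int[] sorted = window.clone();            // leave the caller's array untouched
        Arrays.sort(sorted);
        return sorted[sorted.length / 2];
    }

    public static void main(String[] args) {
        int[] window = {123, 124, 125, 129, 127, 9, 126, 123, 131};
        System.out.println(median(window));
    }
}
```

This is why the median filter copes well with isolated extreme values: a single outlier ends up at one end of the sorted list and never reaches the middle.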

37

Median Filter

Figure: median filter applied to the original image, the image with uniform noise, and the image with Gaussian noise

38

Code for filters

- With a filter the processing at (x,y) depends on a neighbourhood, typically a square with radius r (3x3 has radius 1, 5x5 has radius 2)

- int x, y, dx, dy, r;

- for (x = 0; x < image.getWidth(); x++)

-     for (y = 0; y < image.getHeight(); y++)

-         for (dx = -r; dx <= r; dx++)

-             for (dy = -r; dy <= r; dy++)

-                 // Do something with (x+dx, y+dy)

39

Gaussian Filter

- Gaussian filters are based on the same function used for Gaussian noise

- To generate a filter it needs to be 2D

- If we take the mean to be 0 then we get
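For reference, the standard zero-mean Gaussian can be written as follows, with σ as the standard deviation. This is the textbook form, given here on the assumption that it matches what the slide showed:

$$
G(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{x^2}{2\sigma^2}},
\qquad
G(x, y) = \frac{1}{2\pi\sigma^2}\, e^{-\frac{x^2 + y^2}{2\sigma^2}}
$$

The 2D form is the product of two 1D Gaussians, G(x, y) = G(x) G(y).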

40

Gaussian Filter

- The Gaussian filter has a 2D bell shape

- It is symmetric around the centre

- The centre value gets the highest weight

- Values further from the centre get lower weights

Figure: 2D Gaussian distribution with mean (0,0) and standard deviation 1

41

Discrete Gaussian Filters

- The Gaussian

- Extends infinitely in all directions, but we want to process just a local window

- Has a volume underneath it of 1, which we want to maintain

- Is a continuous function

- We can approximate the Gaussian with a discrete filter

- We restrict ourselves to a square window and sample the Gaussian function

- We normalise the result so that the filter entries add to 1

42

Example

- Suppose we want to use a 5x5 window to apply a Gaussian filter with σ² = 1

- The centre of the window has x = y = 0

- We sample the Gaussian at each point

- We then normalise it

Figure: the 5x5 sampling grid, with x and y each running from -2 to 2
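Sampling and normalising can be sketched as below. The window is described by a radius (2 for a 5x5 window), and the constant factor in front of the Gaussian is left out because it cancels when the entries are normalised to sum to 1. The class and method names are hypothetical.

```java
class GaussianKernelSketch {
    /** Builds a (2*radius+1) x (2*radius+1) Gaussian filter whose entries sum to 1. */
    static double[][] gaussianKernel(int radius, double sigma) {
        int size = 2 * radius + 1;
        double[][] kernel = new double[size][size];
        double sum = 0.0;
        // Sample the zero-mean Gaussian at integer offsets from the window centre.
        for (int y = -radius; y <= radius; y++) {
            for (int x = -radius; x <= radius; x++) {
                double value = Math.exp(-(x * x + y * y) / (2.0 * sigma * sigma));
                kernel[y + radius][x + radius] = value;
                sum += value;
            }
        }
        // Normalise so that the filter does not change the overall brightness.
        for (int y = 0; y < size; y++) {
            for (int x = 0; x < size; x++) {
                kernel[y][x] /= sum;
            }
        }
        return kernel;
    }
}
```

Calling gaussianKernel(2, 1.0) gives the 5x5 filter for the example on this slide, with the largest weight at the centre.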

43

The Gaussian Filter

Figure: Gaussian filter applied to the original image, the image with uniform noise, and the image with Gaussian noise

44

Separable Filters

- The Gaussian filter is separable

- This means you can do a 2D Gaussian as two 1D Gaussians

- First you filter with a horizontal Gaussian

- Then with a vertical Gaussian

45

Separable Filters

- This gives a more efficient way to do a Gaussian filter

- Generate a 1D filter with the required variance

- Apply this horizontally

- Then apply it to the result vertically

Figure: the 1D filter (0.06, 0.24, 0.40, 0.24, 0.06), shown once horizontally and once vertically
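The two-pass procedure can be sketched as follows, assuming a greyscale image stored as double[rows][columns]. Pixels beyond the border are taken from the nearest valid pixel, which is an assumption made only to keep the example short. The class and method names are hypothetical.

```java
class SeparableGaussianSketch {
    /** Builds a normalised 1D Gaussian filter of length 2*radius+1. */
    static double[] kernel1D(int radius, double sigma) {
        double[] k = new double[2 * radius + 1];
        double sum = 0.0;
        for (int i = -radius; i <= radius; i++) {
            k[i + radius] = Math.exp(-(i * i) / (2.0 * sigma * sigma));
            sum += k[i + radius];
        }
        for (int i = 0; i < k.length; i++) {
            k[i] /= sum;
        }
        return k;
    }

    /** Applies the 1D filter along rows (horizontal) or along columns (vertical). */
    static double[][] pass(double[][] img, double[] k, boolean horizontal) {
        int h = img.length, w = img[0].length, r = k.length / 2;
        double[][] out = new double[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double acc = 0.0;
                for (int i = -r; i <= r; i++) {
                    // Assumption: indices outside the image are clamped to the border.
                    int yy = horizontal ? y : Math.max(0, Math.min(h - 1, y + i));
                    int xx = horizontal ? Math.max(0, Math.min(w - 1, x + i)) : x;
                    acc += k[i + r] * img[yy][xx];
                }
                out[y][x] = acc;
            }
        }
        return out;
    }

    /** Horizontal pass followed by a vertical pass over the result. */
    static double[][] filter(double[][] img, double[] k) {
        return pass(pass(img, k, true), k, false);
    }
}
```

Filtering a single bright pixel this way reproduces the 2D filter, since each output weight is the product of one horizontal and one vertical 1D weight.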

46

Separable Filters

- The separated filter is more efficient

- Given an N×N image and an n×n filter we need to do O(N²n²) operations

- Applying two n×1 filters to an N×N image takes O(2N²n) operations

- Example

- A 600×400 image and a 5×5 filter

- Applying it directly takes around 6,000,000 operations (600×400×5×5)

- Using a separable filter takes around 2,400,000 operations (600×400×5 + 600×400×5), less than half as many

47

Gaussian Filters

- How big should the filter window be?

- With Gaussian filters this depends on the variance (σ²)

- Under a Gaussian curve we know that about 98% of the area lies within 2.5σ of the mean

- If we take the filter width to be at least 5σ we get more than 98% of the values we want

Figure: Gaussian curve with 98.8% of the area inside a window of width 5σ
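The rule of thumb can be turned into a small helper. Rounding up to the next odd integer is an added assumption, made so that the window has a single centre pixel. The class and method names are hypothetical.

```java
class GaussianWindowSketch {
    /** Smallest odd window width that is at least 5 * sigma. */
    static int windowWidth(double sigma) {
        int width = (int) Math.ceil(5.0 * sigma);
        if (width % 2 == 0) {
            width++;                              // assumption: force an odd width
        }
        return Math.max(width, 1);
    }

    public static void main(String[] args) {
        System.out.println(windowWidth(1.0));     // sigma = 1 needs a 5x5 window
        System.out.println(windowWidth(2.0));     // 10 is even, so this gives 11
    }
}
```

For σ = 1 this gives the 5x5 window used in the earlier example.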

48

The Problem with Edges

- We have seen two ways to filter images

- Mean filter - take the average value over a local window

- Median filter - take the middle value over a local window

- Filters operate on a local window

- We need to know the value of all the neighbours of each pixel

- Pixels on the edges or corners of the image don't have all their neighbours

49

Dealing with Edges

- There are a number of ways we can deal with edges

- Ignore them - don't use filters near edges

- Modify our filter to account for smaller neighbourhoods

- Extend the image to predict the unknowns

- Ignoring the edges

- This is simple

- It means that we lose the border pixels each time we apply a filter

- Might be OK for one or two small filters, but your image can shrink rapidly

50

Modifying the Filter

- Many filters can be modified to give a reasonable value near the edge

- We may need different filters for top, bottom, left, and right edges, and for each corner

- Example: mean filter

Figure: modified 3x3 mean filters, with weights of 1/6 over the six available pixels along each edge and 1/4 over the four available pixels at each corner
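Instead of storing a separate filter for each edge and corner, a mean filter can average over whichever neighbours actually exist. That yields the same 1/9, 1/6, and 1/4 weights for interior, edge, and corner pixels. The sketch below assumes a greyscale int[rows][columns] image and uses hypothetical names.

```java
class EdgeAwareMeanSketch {
    /** 3x3 mean filter that averages only over the neighbours inside the image. */
    static double[][] meanFilter(int[][] src) {
        int height = src.length;
        int width = src[0].length;
        double[][] dst = new double[height][width];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int sum = 0;
                int count = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        int ny = y + dy;
                        int nx = x + dx;
                        if (ny >= 0 && ny < height && nx >= 0 && nx < width) {
                            sum += src[ny][nx];
                            count++;              // 9 inside, 6 on an edge, 4 in a corner
                        }
                    }
                }
                dst[y][x] = (double) sum / count;
            }
        }
        return dst;
    }
}
```

The output image has the same size as the input, so nothing is lost at the border when the filter is applied repeatedly.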

51

Extending the Image

- We need to predict what lies beyond the edges of the image

- We can use the value of the nearest known pixel

- We can apply a more detailed model to the image

- Nearest neighbour

52

Linear Extrapolation

- A model can be fitted to an image

- We use known pixels to establish a model of the pixel value as a function of x and y

- We use this model to find missing values

- Models are usually fitted locally

- A simple example is a linear model through a pair of pixels

Figure: known pixels A and B in a line, followed by the unknown pixel X

X = B + (B - A)
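Both ways of extending an image can be sketched in a few lines. The first method returns the nearest known pixel for any coordinate, and the second applies X = B + (B - A) to a pair of known values. The class and method names are hypothetical, and the image is assumed to be a greyscale int[rows][columns] array.

```java
class ImageExtensionSketch {
    /** Nearest neighbour: coordinates outside the image are clamped to the border. */
    static int nearestPixel(int[][] image, int x, int y) {
        int cy = Math.max(0, Math.min(image.length - 1, y));
        int cx = Math.max(0, Math.min(image[0].length - 1, x));
        return image[cy][cx];
    }

    /** Linear model through two known pixels: a is further from the edge, b is on it. */
    static int extrapolate(int a, int b) {
        return b + (b - a);
    }

    public static void main(String[] args) {
        int[][] image = { {10, 20}, {30, 40} };
        System.out.println(nearestPixel(image, -1, 0));   // left of the image: uses 10
        System.out.println(extrapolate(10, 20));          // continues the trend 10, 20
    }
}
```

Linear extrapolation can produce values outside the valid grey range when the last two pixels differ sharply, so a real implementation may need to clamp the result.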

53

Linear Extrapolation

Figure: worked linear extrapolation example on a grid of pixel values (139, 142, 143, 136, 138, 134, 130, 133, 127)

54

- function gaussianfilter()

- % Parameters of the Gaussian filter

- n1=10; sigma1=3; n2=10; sigma2=3; theta=0;

- % The amplitude of the noise

- noise=10;

- [w,map]=imread('lena.gif', 'gif');

- x=ind2gray(w,map);

- x_rand=noise*randn(size(x));

- [m,n]=size(x);

- for i=1:m

-     for j=1:n

-         y(i,j)=x(i,j)+x_rand(i,j);

-         j=j+1;

-     end

-     i=i+1;

- end

- % Function "d2gauss.m"

- % This function returns a 2D Gaussian filter with size n1*n2; theta is

- % the angle that the filter is rotated counter clockwise; and sigma1 and sigma2

- % are the standard deviations of the Gaussian functions.

- function h = d2gauss(n1,std1,n2,std2,theta)

- r=[cos(theta) -sin(theta);

-    sin(theta) cos(theta)];

- for i = 1 : n2

-     for j = 1 : n1

-         u = r * [j-(n1+1)/2 i-(n2+1)/2]';

-         h(i,j)=gauss(u(1),std1)*gauss(u(2),std2);

-     end

- end

- h = h / sqrt(sum(sum(h.*h)));

- % Function "gauss.m"

- function y = gauss(x,std)