Learning to Win - PowerPoint PPT Presentation

1 / 49

Title:

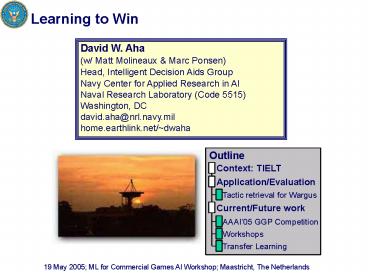

Learning to Win

Description:

Sokaban, Tetris. Puzzles. AI Roles. Sub-Genres. User Focus. Examples. Genre. Dimensions: ... A free, supported tool for integrating decision systems with simulators ... – PowerPoint PPT presentation

Number of Views:37

Avg rating:3.0/5.0

Title: Learning to Win

1

Learning to Win

David W. Aha (w/ Matt Molineaux & Marc Ponsen)

Head, Intelligent Decision Aids Group

Navy Center for Applied Research in AI

Naval Research Laboratory (Code 5515)

Washington, DC

david.aha_at_nrl.navy.mil

home.earthlink.net/dwaha

Outline

19 May 2005 ML for Commercial Games AI Workshop

Maastricht, The Netherlands

2

Testbed for Integrating and Evaluating Learning Techniques (TIELT)

3

Objective

Encourage the study of rapid, enduring, and embedded learning techniques in cognitive systems.

Learning

4

Learning in Cognitive Systems

Status

- Few deployed cognitive systems integrate techniques that exhibit rapid, enduring learning behavior on complex tasks

- It's costly to integrate and evaluate embedded learning techniques

5

A Vision for Supporting Cognitive Learning

1986

6

Key Benefit: Reduce Integration Costs

Problem: Simulator/system integrations are expensive! (time, )

1 integration

Proposed Solution: Middleware

mn integrations

[Figure: Simulator_1 ... Simulator_m and Decision System_1 ... Decision System_n, connected through the Testbed]
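The cost argument for middleware can be made concrete with a small count. This is a sketch under an assumption the slide does not spell out: without a testbed, each of the n decision systems is wired separately to each of the m simulators, whereas with a testbed each simulator and each decision system is integrated once. The function names are hypothetical.

```python
def direct_integrations(m: int, n: int) -> int:
    """Pairwise wiring: every simulator to every decision system (assumed)."""
    return m * n


def middleware_integrations(m: int, n: int) -> int:
    """Each simulator and each decision system connects once to the testbed (assumed)."""
    return m + n


if __name__ == "__main__":
    # Illustrative sizes only; they are not taken from the slides.
    for m, n in [(2, 2), (5, 5), (10, 20)]:
        print(m, n, direct_integrations(m, n), middleware_integrations(m, n))
```

Under these assumptions the gap widens as either library grows, which is the motivation the slide gives for a shared testbed.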

7

Simulator Focus: Multi-Agent Virtual Games

- Several AI research challenges

- e.g., huge search spaces, adversarial, collaborative, real-time (some), partial observability, multiple reasoning levels, relational (e.g., temporal, spatial) data

- Feasible: Breakthroughs are expected to occur (w/ funding)

- Inexpensive

- Military analog (i.e., training simulators involving CGF)

- Popular (with public, industry, academia, military)

8

Gaming Genres of Interest (modified from (Laird & van Lent, 2001; Fairclough et al., 2001; Spronck, 2005))

- Dimensions

- Interaction: Turn-based, Real-time

- Interface: Textual, Visual

- Perspective: 1st, 3rd, God

- Users: Single player, multiplayer, massively multiplayer

9

TIELT

- A free, supported tool for integrating decision systems with simulators

- Users define their own APIs (message format and content)

- As-is license

- Initial focus: Evaluating learning techniques in complex games

- Learning foci: Task, Player/Opponent, or Game Model

- Targeted technique types: Broad coverage

- Supervised/unsupervised, immediate/delayed feedback, analytic, active/passive, online/offline, direct/indirect, automated/interactive

10

Functional Architecture

[Figure: TIELT functional architecture. Components shown: TIELT's User Interface (Evaluation Interface, Prediction Interface, Coordination Interface, Advice Interface); TIELT's Internal Communication Modules; Selected Game Engine; Selected Decision System; Learned Knowledge (inspectable); Game Player(s); TIELT's KB Editors; Selected/Developed Knowledge Bases (Game Model, Agent Description, Game Interface Model, Decision System Interface Model, Experiment Methodology); Knowledge Base Libraries (GM, AD, EM, GIM, DSIM); TIELT User]

11

Knowledge Bases

Game Interface Model

Defines communication processes with the game engine

Decision System Interface Model

Defines communication processes with the decision system

Game Model

- Defines interpretation of the game

- e.g., initial state, classes, operators, behaviors (rules)

- Behaviors could be used to provide constraints on learning

Agent Description

- Defines what decision tasks (if any) TIELT must support

- TMK representation

Experiment Methodology

Defines selected performance tasks (taken from Game Model Description) and the experiment to conduct

12

Use: Controlling a Game Character

[Figure: TIELT functional architecture diagram, with the same components as on the Functional Architecture slide]

13

Use: Prediction

[Figure: TIELT functional architecture diagram, with the same components as on the Functional Architecture slide]

14

Use: Game Model Revision

[Figure: TIELT functional architecture diagram, with the same components as on the Functional Architecture slide]

15

A Researcher Use Case

- Define/store decision system interface model

- Select game simulator interface

- Select game model

- Select/define performance task(s)

- Define/select expt. methodology

- Run experiments

- Analyze displayed results

[Figure: Selected/Developed Knowledge Bases drawn from the Knowledge Base Libraries (GM, AD, EM, GIM, DSIM)]

16

TIELT Status (May 2005)

Features (v0.7)

- Pure Java implementation

- Message protocols

- Java Objects, Console I/O, TCP/IP, UDP

- Supports external shared memory

- Message content: User-configurable

- Instantiated templates tell it how to communicate with other modules

- Messages: API-specific

- Start, Stop, Load Scenario, Set Speed, ...

- Agent description representations

- Task-Method-Knowledge (TMK) process models

- Hierarchical Task Networks (HTNs)

- Experimental results can be stored in databases supporting JDBC or ODBC standards (e.g., Oracle, MySQL, MS Excel)

- 119 downloads from 61 people (25 are collaborators)

17

TIELT: http://nrlsat.ittid.com

TIELT User's Manual, Tutorial, publications

e.g., for uploading/obtaining TIELT KBs

Bug tracking and feature requests

Download

18

FY05 Collaboration Projects

[Figure: FY05 collaboration projects mapped onto the TIELT test bed. Components shown: User Interfaces (Prediction, Evaluation, Coordination, Advice), Game Library, Decision System Library, KB Editors, Knowledge Base Library (Game Model, Agent Descriptions, Game Interface Model, Decision System Interface Model, Experiment Methodology), TIELT User. Collaborator labels: U.Minn-D., USC/ICT, U.Mich., NWU, ISLE, LU, USC, Mich/ISLE, Many. Decision systems: ISLE ICARUS, Lehigh case-based planner, U.Michigan SOAR, UTA DCA, UT Austin Neuroevolution. Games and developers: ToEE, EE2, Mad Doc, Troika, FreeCiv.]

19

TIELT Integrations

20

Stratagus

- Free real-time strategy (RTS) game engine

- Player objective: Achieve some game-winning condition in real time in a simulated world

- Data sets (i.e., games): 8 exist of varying maturity

- Multiple unit types

- Combat follows rock-paper-scissors principle

- Partial observability

- Adversarial

21

Wargus: A Warcraft II Clone

Goal: Defeat opponents, build Wonder, etc.

Measure: Score = f(time, achievements)

Map: Size (e.g., 128x128), location, terrain, climate

Time Stamp: Real-time advancement

- State

- Map (partially observed)

- Resources

- Units

- Attributes: Location, Cost, Speed, Weapon, Health, ...

- Actions: Move, attack, gather, Build generator, ...

- Generators (e.g., buildings, farms)

- Attributes: Location, cost, maintenance, ...

- Actions: Build units, Conduct research, Achieve next era

- Consumables (used to build): Gold, wood, iron

- Research achievements: Determines what can be built

- Actions pertain to

- Generators

- Units

- Diplomacy

- Adversaries: State for each (partially observed)

22

Wargus: Decision Space Analysis

Variables

- W: Number of workers

- A: Number of possible actions

- P: Average number of workplaces

- U: Number of units

- D: Average number of directions a unit can move

- S: Choice of unit's stance

- B: Number of buildings

- R: Average number of research objectives

- C: Average number of buildable units

Decision complexity per turn (for a simple game state)

- $O(2^W (A+P) + 2^U (D+S) + B (R+C))$; this is a simplification

- This example: W=4, A=5, P=3, U=8 (offscreen), D=2, S=3, B=4, R=1, C=1

- Decision space: $1.4 \times 10^3$

- Exponential in the number of units

- Motivates the use of domain knowledge (to constrain this space)

- Comparison: Average decision space per chess move is 30
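The decision-space estimate can be checked numerically. The sketch below is not the authors' code: the operators inside the parentheses of the complexity expression did not survive in the transcript, so it assumes addition within each group and a sum across the three groups, a reading that lands on the stated order of magnitude for the example values. The function name is hypothetical.

```python
def decision_complexity(W, A, P, U, D, S, B, R, C):
    """Per-turn decision count, assuming 2^W(A+P) + 2^U(D+S) + B(R+C)."""
    worker_choices = 2 ** W * (A + P)    # subsets of workers times action/workplace options
    unit_choices = 2 ** U * (D + S)      # subsets of units times direction/stance options
    building_choices = B * (R + C)       # buildings times research/build options
    return worker_choices + unit_choices + building_choices


total = decision_complexity(W=4, A=5, P=3, U=8, D=2, S=3, B=4, R=1, C=1)
print(total)            # 1416
print(f"{total:.1e}")   # 1.4e+03
```

The unit term (256 × 5 = 1280) dominates the total, which illustrates the slide's point that the space is exponential in the number of units.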

23

Learning to Win in Wargus

Ponsen, M.J.V., Muñoz-Avila, H., Spronck, P., & Aha, D.W. (IAAI05). Automatically acquiring adaptive real-time strategy game opponents using evolutionary learning.

[Figure: learning pipeline for Wargus. Labels: Game Opponent Scripts; Genetic alg.; Evolved Counter-Strategies; Tactics_1 ... Tactics_20; Action Language; Dynamic Scripting; Initial Policy (i.e., equal weights); Opponent-Specific Policy f(State_i) → Tactic_ij; Wargus]

Goal: Eliminate all enemy units and buildings

24

Opponent AI in Wargus

- Low-level actions are hard-coded in the engine

- An action language

- Game AI is defined in a script (action sequence)

- in Lua

AiNeed(AiCityCenter)

AiWait(AiCityCenter)

AiSet(AiWorker, 4)

AiForce(1, AiSoldier, 1)

AiWaitForce(1)

AiAttackWithForce(1)

...

25

A State Space Abstraction for Wargus

A lattice of 20 building states

- Manually predefined

- Node: building state

- Corresponds to the set of buildings a player possesses, which determines the units and buildings that can be created, and the technology that can be pursued

- Arc: New building (state transition)

- Tactic: Sequence of actions within a state

- A sub-plan

Evolved_SC5

26

Genetic Chromosomes (one per script): The Source for Tactics

Chromosome (a complete plan)

Action Sequence Tactic (for 1 Building State)

27

A Decision Space Abstraction for Wargus

Space of Primitive Actions

28

Dynamic Scripting (Spronck, 2005)

Learns to assign weights to tactics for a single opponent

- Consequences

- Separate policies must be learned per opponent

- The opponent must be known (a priori)

Our Goal: Relax the assumption of a fixed adversary

29

Case-Based Reasoning (CBR) Applied to Games (partial summary)

30

Learning to Win in Wargus (versus random opponents)

Aha, D.W., Molineaux, M., & Ponsen, M. (ICCBR05). Learning to win: Case-based plan selection in a real-time strategy game.

Sources of domain knowledge

- State space abstraction (building state lattice)

- Decision space abstraction (tactics)

- Cases: f(State_i, Tactic_ij) → Performance

[Figure: CaT (Case-based Tactician) connected to Wargus through TIELT. Labels: Game Opponent Scripts; Evolved Counter-Strategies; Tactics_1 ... Tactics_20; Evaluation Interface; TIELT's Internal Communication Modules; Editors; Knowledge Bases; Cases]

31

CaT Case Representation

C = <BuildingState, Description, Tactic, Performance>

32

CaT Retrieval

- Tactic retrieval occurs when a new building state b is entered

- retrieve(b, k, e)   // k = number of cases retrieved, e = exploration parameter

- d = description()   // 8 features

- IF (|cases_b| < e × |tactics_b|)

- THEN t = least_used(tactics_b)   // then explore

- ELSE C = max_performer(max_k {Sim(C, d) | C ∈ cases_b})

- t = C.Tactic

- Return t

Sim(C, d) = (C.Performance / dist(C.Description, d)) - dist(C.Description, d)
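The retrieval procedure above can be sketched in a few lines of Python. This is an illustrative reading, not CaT's implementation. Several details are assumptions the slide leaves open: `dist` is taken to be plain Euclidean distance, a small `eps` guards against division by zero on an exact match, and "least used" is counted over the stored cases for the current building state. The `Case` class and all function names are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Case:
    building_state: int
    description: Sequence[float]   # feature vector (the slide mentions 8 features)
    tactic: str
    performance: float


def dist(a: Sequence[float], b: Sequence[float]) -> float:
    # Assumption: Euclidean distance; the slide does not define dist().
    return math.dist(a, b)


def sim(case: Case, d: Sequence[float], eps: float = 1e-6) -> float:
    # Sim(C, d) = C.Performance / dist - dist; eps is an added guard (assumption).
    gap = max(dist(case.description, d), eps)
    return case.performance / gap - gap


def retrieve(cases_b: List[Case], tactics_b: List[str],
             d: Sequence[float], k: int = 3, e: int = 3) -> str:
    if len(cases_b) < e * len(tactics_b):
        # Explore: choose the tactic that appears in the fewest stored cases.
        used = Counter(c.tactic for c in cases_b)
        return min(tactics_b, key=lambda t: used[t])
    # Exploit: among the k most similar cases, return the best performer's tactic.
    nearest = sorted(cases_b, key=lambda c: sim(c, d), reverse=True)[:k]
    return max(nearest, key=lambda c: c.performance).tactic
```

With few stored cases the function cycles through untried tactics; once the case count reaches `e` times the number of tactics, it switches to similarity-based selection.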

33

CaT Reuse

- Adaptation is not controlled by CaT

- Instead, it's performed by the built-in primitives of Wargus

- e.g., if an action is to create a building, the game engine determines its location and which workers will build it

34

CaT Retention

- CaT(C)   // C: Stored cases

- b_1   // Initial building state

- game = Wargus()   // Start game

- UNTIL game_ends   // i.e., a win occurs or 10 minutes elapse

- Record for current state b

- Description d_b

- Tactic t_b

- Scores s_b for player and opponent

- b = Wargus(t_b)   // Transitions to a new state b

- For each building state b

- p_b = compute_performance(s_b, Final_scores)

- IF (a case C ∈ C_b matches <d_b, t_b>)

- THEN update_performance(C_b, p_b)   // Averaging

- ELSE C_b = C_b ∪ {<b, d_b, t_b, p_b>}   // Store new case

CaT acquires at most 1 case per building state per game
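The store-or-update step at the end of a game can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes "averaging" means a running mean over all games in which the same description and tactic were recorded, and it adds a hypothetical `uses` counter to make that possible. How `compute_performance` derives a value from scores is not given on the slide, so the performance value is taken as an input.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class StoredCase:
    building_state: int
    description: Tuple[float, ...]
    tactic: str
    performance: float
    uses: int = 1   # hypothetical counter, needed for the running average


def retain(case_base: Dict[int, List[StoredCase]], b: int,
           d: Tuple[float, ...], t: str, p: float) -> StoredCase:
    """Update the matching case for building state b, or store a new one."""
    bucket = case_base.setdefault(b, [])
    for case in bucket:
        if case.description == d and case.tactic == t:
            case.uses += 1
            # Incremental mean of all performances seen for this case.
            case.performance += (p - case.performance) / case.uses
            return case
    new_case = StoredCase(b, d, t, p)
    bucket.append(new_case)
    return new_case
```

Calling `retain` once per visited building state after each game matches the slide's note that at most one case is acquired per building state per game.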

35

CaT Evaluation

- Competitors

- Uniform: Select a tactic randomly (uniform distribution)

- Best Evolved Counter-Strategy

- Test each of 8 counter-strategies (i.e., the best ones evolved vs. each opponent strategy)

- Record the one that performs the best on randomly selected opponents

- CaT: Tested on randomly selected opponents (uniform distr.)

Hypothesis: CaT can significantly outperform (i.e., attain a higher winning percentage and higher average relative scores) Uniform and the evolved counter-strategies

36

Empirical Methodology

- Variables

- Fixed: k=3, e=3   // e = Exploration parameter

- Independent: Amount of training

- Dependent: Win percentage, relative score

- Strategy: LOOCV (leave-one-out cross validation)

- Train on 7 opponents, test on eighth (10x after every 25 games)

- Trial: 140 games (100 for training, 40 for testing)
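The game counts in a trial follow from the testing schedule: 100 training games with 10 test games after every 25 gives 4 checkpoints and 40 test games, 140 in total. The sketch below lays out that schedule and the leave-one-out folds. It is an illustration only: function names are hypothetical, and how training games are distributed among the 7 training opponents is not stated on the slide, so it is not modeled.

```python
def trial_schedule(train_games=100, test_every=25, tests_per_checkpoint=10):
    """Return the ordered list of (phase, training_games_so_far) for one trial."""
    schedule = []
    for game in range(1, train_games + 1):
        schedule.append(("train", game))
        if game % test_every == 0:
            # Checkpoint: play a batch of test games against the held-out opponent.
            schedule.extend(("test", game) for _ in range(tests_per_checkpoint))
    return schedule


def loocv_folds(opponents):
    """Yield (training_opponents, held_out_opponent) for each opponent."""
    for held_out in opponents:
        yield [o for o in opponents if o != held_out], held_out


schedule = trial_schedule()
print(len(schedule))                                 # 140
print(sum(1 for phase, _ in schedule if phase == "test"))   # 40
```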

37

Results I: Win Performance

[Figure: win performance plot. y-axis: games won vs. test set (LOOCV), 0 to 0.9; x-axis: Training Trials, 25 to 100]

Result: CaT outperforms the best counter-strategy, but not significantly

- Significance test

- One-tail paired two-sample t-test gives p = .18 (82% probability that CaT is better than the best counter-strategy). However, performance is significantly better at the .005 level against the second-best counter-strategy.

- A paired-sample t-test is appropriate when two algorithms are compared on an identical problem; we use it to compare performance against the same opponent

38

Results II: Score Performance

[Figure: score performance plot. x-axis: Training Trials]

- Significance test

- One-tail paired two-sample t-test gives p = .0002 (99.98% probability that CaT is better than the best counter-strategy)

39

Potential Future Work Foci

- Reduce/eliminate non-determinism

- Optimize state descriptions (e.g., test for correlations)

- Stop cheating!

- Tune CaT's parameters (i.e., k and e)

- Plan adaptation

- Compare vs. an extension of dynamic scripting

- Permit random initial states

- Multiple simultaneous adversaries

- On-line learning (i.e., learn/apply during a single game)

40

Other TIELT Collaborations

University of Michigan (PI: John Laird)

- SOAR decision system integration

USC/CTRAC (PI: Michael van Lent)

- Full Spectrum Command/Research (FSC/R) simulator integration. FSC 1.5 is a PC-based training aid that models the command and control of a U.S. Army Light Infantry Company in a MOUT environment.

41

AAAI05 General Game Playing (GGP) Competition (M. Genesereth, Stanford U.)

Goal: Win any game in a pre-defined category

- Initial category: Chess-like (analytical) games

- Games are produced by a game generator

- Input: Rules on how to play the game

- Move grammar is used to communicate actions

- GDL: Language based on relational nets

- Output (desired): A winning playing strategy

Annual AAAI Competition

- WWW: games.stanford.edu

- AAAI05 Prize: $10K

42

Upcoming TIELT-Related Events

General Game Playing Competition

- AAAI05 (24-28 July 2005, Pittsburgh)

- Chair: Michael Genesereth (Stanford U.)

- We are integrating TIELT with GGP, attracting additional competitors

TIELT-Related Workshops

- At IJCAI05 (31 July 2005, Edinburgh)

- Reasoning, Representation, and Learning in Gaming

- Co-chairs: Héctor Muñoz-Avila, Michael van Lent

- 20 submissions; talks by Ian Davis (MDS), Michael Genesereth

- At ICCBR05 (24 August 2005, Chicago)

- Computer Gaming and Simulation Environments

- Co-chair: David C. Wilson

- 8 submissions; talks by John Laird, Ken Forbus, Pádraig Cunningham

TIELT Challenge Problems

Design: 15 April; Code: 15 May

43

City Control Challenge Problem (MDS)

Messages received from the decision system

Pressing the T button calls on the TIELT-connected decision system to identify the enemies closest to the TIELT-controlled units and calculate the appropriate force to send into battle.

Real-time strategy game, where the goal is to gain control over city blocks that vary in utility and require different levels of control.

44

Initial Challenge Problem (CP) Components

- State S

- Map M = {B_11, ..., B_mn}: A map consists of a set of city blocks

- City Block B_ij

- features(B_ij) = {f_1(), ..., f_l()}: A block has a set of features (e.g., location, utility)

- objects(B_ij) ⊆ O

- Objects O: objectType(), location(), status(), ...

- Units U: player(), type(), actions(), weapon(), armor(), ...

- Resources R: type(), amount(), ...

- Categories: Controllable (e.g., petrol, electricity), natural (e.g., uranium, water)

- Actions A

- apply(U_i, A_j, S) = S': Units can apply actions to modify the state

- Tasks T: type(), preconditions(), subtasks(), subtaskOrdering(), ...

- Categories: Strategic, tactical (where primitive tasks invoke actions)

- CP = <M, S_0, S_G, F, R, E>

- M: Map

- S_G: Goal is to achieve a state (i.e., control of specified city blocks)

- e.g., {(B_ij, G_ij) | B_ij ∈ M, G_ij ∈ G} (1 ≤ i ≤ m, 1 ≤ j ≤ n)

- Goals can be achieved by tasks or actions

- F: Friendly units (controllable units)

- R: Resources (objects that can be used in actions)

- E: Enemy units (non-controllable adversary units)
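The challenge-problem tuple can be pictured as a small data structure. The sketch below is one possible encoding and not TIELT's or MDS's representation. Every class and field name is hypothetical, and states are simplified to a mapping from city block to the controlling side.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

BlockId = Tuple[int, int]   # (i, j) index of city block B_ij


@dataclass
class Unit:
    player: str             # "F" (friendly) or "E" (enemy) in this sketch
    kind: str               # unit type, e.g. "infantry" (illustrative)
    location: BlockId


@dataclass
class ChallengeProblem:
    blocks: List[BlockId]                 # M: the map as a set of city blocks
    initial_state: Dict[BlockId, str]     # S_0: controlling side per block (simplified)
    goal: Dict[BlockId, str]              # S_G: required controller for the goal blocks
    friendly: List[Unit]                  # F: controllable units
    resources: Dict[str, int]             # R: resource type -> amount
    enemy: List[Unit]                     # E: non-controllable adversary units

    def goal_reached(self, state: Dict[BlockId, str]) -> bool:
        """True when every goal block is controlled by the required side."""
        return all(state.get(block) == owner for block, owner in self.goal.items())
```

For example, a two-block problem whose goal is friendly control of block (1, 2) is unsatisfied in a state where the enemy holds that block and satisfied once the friendly side does.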

45

City Control Challenge Problem (Sketch)

- Goal: Control of a set of city blocks B_G currently under control of E

- Starting State

- F all located in one block B_ij (of varying significance)

- 3 concentrations of E_i

- Notes

- Success may involve controlling some strategic/tactical blocks B_ST ? B_G

- Securing blocks nearby B_G may assist with the goal

- Controlling a block B_ij involves leaving F' ⊆ F on B_ij

- Several issues involved with force distribution decisions

Block structure of the challenge problem map

46

An Evaluation Framework for Transfer Learning

Goal

Evaluate Transfer Learning systems for their ability to learn knowledge from interactions with a source task (concerning a complex adversarial simulation game) to increase initial performance, performance rate, and/or asymptotic performance on a target task.

Cognitive Transfer Learning System for Decision-Making

Learning Module

Transferring learned knowledge

Learned Knowledge

Experiences, plans, user preferences, etc.

Differing

Train on a turn-based game, test on a real-time game

Train on one real-time game, test on another

Reformulating

Train when using deception only for unit locations, and test when deception is also used for weapon identities

Generalizing

Vary weapons and armor available

Abstracting

Train with only dismounted or only mounted soldiers, test with them both available

Composing

Challenge Problems

Restyling

Vary map M

Difficulty

Vary number of friendly (F) and/or enemy (E) units

Extending

Restructuring

Vary non-combatants on map M

Change composition of F and/or E

Extrapolating

Transfer Learning Levels

Change initial locations for units in F and/or E

Parameterization

Same initial and goal states

Memorization

47

Our Transfer Learning Proposal (2005-2008)


49

Summary: Learning to Win

- TIELT (nrlsat.ittid.com)

- May reduce system-simulator integration effort

- Free, as-is license, late Alpha stage, supported, tested

- Of possible interest for research and class projects

- Not restricted to gaming simulators, nor learning systems

- CaT (Aha et al., ICCBR05)

- First significant application of TIELT

- First case-based reasoning system designed to learn to win a real-time strategy game

- Outperformed baseline competitors

- Leverages three knowledge sources

- State space abstraction (building state lattice)

- Decision space abstraction (tactics)

- Cases: f(state, tactic) → performance

- Builds on work by (Ponsen & Spronck, Game-On04)

- Many future research directions
