Parallel Quicksort - PowerPoint PPT Presentation

1 / 48

Title:

Parallel Quicksort

Description:

the master pivots define the buckets used by each process. continue as in ... way so that each process needs to store only one block of A and one block of B. ... – PowerPoint PPT presentation

Number of Views:318

Avg rating:3.0/5.0

Title: Parallel Quicksort

1

Parallel Quicksort

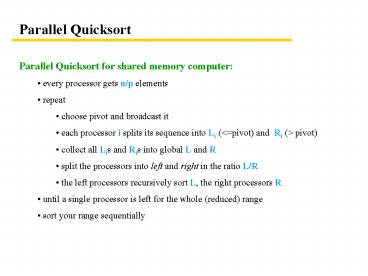

- Parallel Quicksort for shared memory computer

- every processor gets n/p elements

- repeat

- choose pivot and broadcast it

- each processor i splits its sequence into Li

(ltpivot) and Ri (gt pivot) - collect all Lis and Ris into global L and R

- split the processors into left and right in the

ratio L/R - the left processors recursively sort L, the

right processors R - until a single processor is left for the whole

(reduced) range - sort your range sequentially

2

Sample Sort

- Goal get rid of the uniformly distributed data

assumption in Bucket sort - Idea sample the input data and compute a good

set of bucket intervals - High-level description

- sort local data and choose p-1 evenly spaced

pivots - send these pivots to the master process

- master collects all p(p-1) pivots, sorts them

and chooses p-1 evenly spaced (among the p(p-1)

pivots) master pivots - master broadcasts the master pivots

- the master pivots define the buckets used by

each process - continue as in the bucket sort

- distribute local data into the buckets (e.g by

inserting the master pivots using binary search

into the sorted local sequence) - send buckets to appropriate processes

- sort the received data

3

Sorting Summary

Odd-even transposition sort O(n) time with n

processors, for linear array, simple,

inefficient, the best we can hope for in linear

array if only communication with direct

neighbours is possible Shear sort O(?n (log n

1)) time with n processors, for 2D mesh, simple,

there is an O(?n) version, which is optimal in 2D

mesh if only communication with direct neighbours

is possible Bitonic sort O(log2 n) time with n

processors (in hypercube), simple, still not cost

optimal, hypercube and 2D mesh implementations Qui

ck Sort O(log2 n) time with n processors (with

good pivots), shared memory and message-passing

implementations Sample Sort for ngtp2, alleviates

partially the problem of uneven data

distribution There exists O(log n) sorting

algorithm with n processors, but it is very

complicated and the constant factors are too high

to be practical.

4

Matrix Algorithms

- Matrix-Vector Multiplication

- with 1D partitioning

- with 2D partitioning

- Matrix-Matrix Multiplication

- simple parallel algorithm

- Cannons algorithm

- the DNS algorithm

- Solving Systems of Linear Equations

- simple Gaussian elimination

- with pipelining

- with partial pivoting

- back substitution

5

Matrix-Vector Multiplication

Sequential code

Vector MAT_VECT(A,x,y) for(i0

iltn i) yi 0

for(j0 jltn j)

yiyiAi,jxj

return y

6

Matrix-Vector Multiplication II

- Analysis

- all-to-all broadcast tslog p tw(n/p)(p-1) ?

tslog p twn - local computation n x n/p n2/p

- Total Tp ? n2/p tslog p twn

7

Matrix-Vector Mult., 2D Partitioning

8

Matrix-Vector Mult., 2D Partitioning

- Analysis

- distributing vector x to the diagonal ts

tw(n/?p) - broadcasting component of vector x in the

columns (ts tw(n/?p))log(?p) - local computation n2/p

- reduction in rows (ts tw(n/?p))log(?p)

- Total

- Tp ? n2/p tslog p tw(n/?p)log p

- 1D vs 2D

- difference only in the last term

- 1D twn vs 2D tw(n/?p)log p

- conclusion 2D partitioning can use up to n2

processors and has smaller bandwidth

requirements, i.e. it can scale better

9

Matrix Algorithms

- Matrix-Vector Multiplication

- with 1D partitioning

- with 2D partitioning

- Matrix-Matrix Multiplication

- simple parallel algorithm

- Cannons algorithm

- the DNS algorithm

- Solving Systems of Linear Equations

- simple Gaussian elimination

- with pipelining

- with partial pivoting

- back substitution

Matrix algorithms based on Kumars book.

10

Matrix-Matrix Multiplication

- Sequential code

- Sequential complexity O(n3)

- slightly better algorithms possible (e.g.

Strassens algorithm), but the constant factors

render them non-practical for most realistic

values of n

Vector MAT_MULT(A,B,C) for(i0

iltn i) for(j0 jltn j

Ci,j 0

for(k0 kltk j)

Ci,jiAi,kX Bk,j

11

Simple Matrix-Matrix Multiplication

12

Simple Matrix-Matrix Multiplication II

- Complexity

- initial row-broadcast of blocks of A and

column-broadcast of blocks of B 2(tslog(?p)

tw(n2/p)(?p-1)) - local computation ?p X (n/?p)3 n3/p

- Total Tp ? n3/p tslog p 2tw(n2/?p)

- Memory usage

- a block of rows of A and a block of columns of B

- 2(n X n/?p) per processor, 2n2?p in total

- wasteful with respect to the sequential

algorithm 2n2

13

Cannons Matrix-Matrix Multiplication

- Main idea

- pipeline the simple algorithm by shifting the

blocks after each block multiplication in a smart

way so that each process needs to store only one

block of A and one block of B. - rotate row/column i of A/B i steps to the left/up

Initial alignment of A

Initial alignment of B

A and B after initial alignment

A0,2 B2,2

A0,0

A0,1

A0,2

A0,3

B0,0

B0,1

B0,2

B0,3

A0,0 B0,0

A0,1 B1,1

A0,3 B3,3

A1,3 B3,2

A1,0

A1,1

A1,2

A1,3

B1,0

B1,1

B1,2

B1,3

A1,1 B1,0

A1,2 B2,1

A1,0 B0,3

A2,0 B0,2

A2,0

A2,1

A2,2

A2,3

B2,0

B2,1

B2,2

B2,3

A2,2 B2,0

A2,3 B3,1

A2,1 B1,3

A3,1 B1,2

A3,0

A3,1

A3,2

A3,3

B3,0

B3,1

B3,2

B3,3

A3,3 B3,0

A3,0 B0,1

A3,2 B2,3

14

Cannons Matrix-Matrix Multiplication II

After first shift

After second shift

After third shift

A0,1 B1,0

A0,2 B2,1

A0,3 B3,2

A0,0 B0,3

A0,2 B,2,0

A0,3 B3,1

A0,0 B0,2

A0,1 B1,3

A0,3 B,3,0

A0,0 B0,1

A0,1 B1,2

A0,2 B2,3

A1,2 B2,0

A1,3 B3,1

A1,0 B0,2

A1,1 B1,3

A1,3 B3,0

A1,0 B0,1

A1,1 B1,2

A1,2 B2,3

A1,0 B0,0

A1,1 B1,1

A1,2 B2,2

A1,3 B3,3

A2,3 B3,0

A2,0 B0,1

A2,1 B1,2

A2,2 B2,3

A2,0 B0,0

A2,1 B1,1

A2,2 B2,2

A2,3 B3,3

A2,1 B1,0

A2,2 B2,1

A2,3 B3,2

A2,0 B0,3

A3,0 B0,0

A3,1 B3,1

A3,2 B2,2

A3,3 B3,3

A3,1 B1,0

A3,2 B2,1

A3,3 B3,2

A3,0 B0,3

A3,2 B2,0

A3,3 B3,1

A3,0 B0,2

A3,1 B1,3

- Analysis

- initial shift 2(ts

tw(n2/p)) - communication in one step 2(ts tw(n2/p))

- local computation in one step (n/?p)3

- number of steps ?p

- Total Tp n3/p 2ts?p 2tw(n2/?p)

15

The DNS Algorithm (still M3)

- Cannons algorithm with n2 processors has time

O(n). - Can we have faster Matrix-Matrix Multiplication

algorithm? - Yes, but we need more processors.

- Algorithm DNS

- take n3 processors Pi,j,k for i,j,k 0n-1

- Ai,j and Bi,j initially stored at processors

Pi,j,0 - A and B are broadcasted so that processor

Pi,j,k gets Ai,k and Bk,j - processor Pi,j,k computes Ai,k x Bk,j

- processors Pi,j, perform Reduce() of the

results - processor Pi,j,0 stores the result Ci,j

- The only nontrivial part How to efficiently

broadcast A and B so that everybody gets what it

needs?

16

The DNS Algorithm II

Initial distribution of A and B

k3

A,B

k2

k1

k

j

k0

i

17

The DNS Algorithm III

After broadcasting Ai,j along j axis

0,3

1,3

2,3

3,3

A

0,2

1,2

2,2

3,2

k

0,1

1,1

2,1

3,1

j

0,0

1,0

2,0

3,0

i

18

The DNS Algorithm IV

- Analysis

- moving Ai,j(Bi,j) from Pi,j,0 to Pi,j,j

(Pi,j,i) ts tw - broadcasting within a row/column (ts tw)log n

- local computation 1

- reduction (ts tw)log n

- Total Tp 1ts(2log n 1) tw(2log n 1)

- Note Not cost-optimal, as processor-time product

is O(n3 log n). Can be adapted for pq3 lt n3

processors. If pltn3/log n then the DNS algorithm

is cost-optimal.

19

Matrix Algorithms

- Matrix-Vector Multiplication

- with 1D partitioning

- with 2D partitioning

- Matrix-Matrix Multiplication

- simple parallel algorithm

- Cannons algorithm

- the DNS algorithm

- Solving Systems of Linear Equations

- simple Gaussian elimination

- with pipelining

- with partial pivoting

- back substitution

20

Solving a set of linear equations

We want to solve this set of equations x1

x2 - 2x3 - 3x4 0 3x1 4x2 - 4x3 - x4

0 3x1 x2 - 2x3 - x4 0 4x1 - x2 2x3

5x4 3 Can be represented by the following

matrix

21

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(0)

22

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(0)

EliminateRow(0,1) row1 row1 - 3x row0

23

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(0)

EliminateRow(0,1) row1 row1 - 3x row0

EliminateRow(0,2) row2 row2 - 3x row0

24

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(0)

EliminateRow(0,1) row1 row1 - 3x row0

EliminateRow(0,2) row2 row2 - 3x row0

EliminateRow(0,2) row3 row3 - 4x row0

25

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(1)

26

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(1)

EliminateRow(1,2) row2 row2 2x row1

27

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(1)

EliminateRow(1,2) row2 row2 2x row1

EliminateRow(1,3) row3 row3 5x row1

28

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(2)

29

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(2)

EliminateRow(2,3) row3 row3 - 20x row2

30

Gaussian Elimination - Sequential

System of equations is first reduced to upper

triangular form, the solution is then computed

using back substitution. We focus on the first

step

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

DivideRow(3)

31

Gaussian Elimination

- Analysis of sequential algorithm

- dividing rows n(n-1) /2 n2 /2

- elimination 2n3/3

- total cost O(n3)

- Quite a lot, lets parallelize it!

- Hmm, where to start?

SequentialGE( ) for(k0 kltn

k) DivideRow(k)

for(ik1 iltn i)

EliminateRow(i,k)

32

Gaussian Elimination

- Idea

- lets assign each row to one processor

- we can then do all the elimination steps in

parallel, as they would be done by different

processors - that is good, as the elimination is the costly

step

33

Simple Parallel Gaussian Elimination

p0

DivideRow(0)

P1

p3

p4

34

Simple Parallel Gaussian Elimination

p0

P1

DivideRow(1)

p3

p4

35

Simple Parallel Gaussian Elimination

36

Simple Parallel Gaussian Elimination II

- Analysis (simple parallel)

- dividing rows O(n2)

- broadcast O(n(ts twn)log n) O(tsn log(n)

twn2 log(n)) - elimination O(n2)

- Total time Tp O(tsn log(n) twn2 log(n))

- Cost (with n processors) O(n3 log n)

37

Pipelined Parallel Gaussian Elimination (1D)

- Problem

- simple parallel Gaussian Elimination is not

cost-optimal because of the cost of broadcasting - Solution

- pipeline the broadcasting of rows

- when a process gets data, it forwards them down

the pipeline and only then proceeds to process

the data

38

Pipelined Parallel Gaussian Elimination (1D)

- Analysis

- dividing rows O(n2)

- forwarding (n-1)(ts twn) tsn twn2

- start-up (n-1)(ts twn)

- elimination O(n2)

- Total time O(n2 tsn twn2 )

- Total cost O(n3)

- Less then n processes

- block vs striped partitioning

39

2D partitioning

- Why?

- make use of more then n processors

- Issues

- pipelining, block vs striped partitioning

- Analysis (n2 processors)

- each computation step O(1)

- each communication step ts tw

- of communication/computation steps per

process O(n) - Total time O(n tsn twn )

- Total cost O(n3)

- Code for processor Ai,j

- for iltj

- for ij

- for igtj

40

Partial Pivoting

- Problem

- if Ak,k is 0 or close to 0, numerical errors

are greatly magnified - Solution Partial Pivoting

- examine row k and choose the pivot column j such

that Ak,j is biggest in the row k - swap columns j and k (either explicitly or

implicitly) - Issues

- other rows need to learn the pivot column

- if row-wise 1D partitioning is used, the pivot

can be identified locally and passed along with

the data - column-wise 1D partitioning requires

communication to identify the pivot (and swapping

columns can unbalance the load) - 2D partitioning cannot be well pipelined with

partial pivoting identifying pivot for iteration

k1 cannot commence immediately after iteration k

41

Back Substitution

Sequential solution

SeqBackSubstitution(U,x,y) for(kn-1

kgt0 k--) xk yk

for(ik-1 igt 0 i--) yi -

xkUi,k

y

U

x0 x1 x2 x3

42

Back Substitution

Sequential solution

SeqBackSubstitution(U,i,k) for(kn-1

kgt0 k--) xk yk

for(ik-1 igt 0 i--) yi -

xkUi,k

y

x3 -1 y2 0-(-1)3 3 y1 0-(-1)8

8 y0 0-(-1)(-3) -3

U

x0 x1 x2 x3

43

Back Substitution

Sequential solution

SeqBackSubstitution(U,i,k) for(kn-1

kgt0 k--) xk yk

for(ik-1 igt 0 i--) yi -

xkUi,k

y

x2 3 y1 8-32 2 y0 -3-3(-2) 3

U

x0 x1 x2 x3

44

Back Substitution

Sequential solution

SeqBackSubstitution(U,i,k) for(kn-1

kgt0 k--) xk yk

for(ik-1 igt 0 i--) yi -

xkUi,k

y

x1 2 y0 3-21 1

U

x0 x1 x2 x3

45

Back Substitution

Sequential solution

SeqBackSubstitution(U,i,k) for(kn-1

kgt0 k--) xk yk

for(ik-1 igt 0 i--) yi -

xkUi,k

y

x0 1

U

Sequential cost O(n2)

x0 x1 x2 x3

46

Back Substitution

How to parallelize it? Simple 1D solution - use

row partitioning

1DParBackSubs(U,i,k) for(kn-1 kgt0 k--)

xk yk Broadcast(xk)

for(ik-1 igt 0 i--) in parallel

yi - xkUi,k

Algorithm for processor i

for(kn-1 kgti k--) RecvBroadcast(xk)

yi - xkUi,k xi yi

Broadcast(xi)

47

Back substitution

- Analysis

- local computation O(1) times n

- broadcast O(log n) times n

- overall time O(n log n)

- overall parallel cost O(n2 log n)

- not cost optimal

- but the overall cost of solving equations is

determined by gaussian elimination. - back substitution can be made optimal using

pipelining. - Homework

- write pipelined version of back substitution

- how to do 2D version of back substitution?

48

Matrix Algorithms Summary

- Matrix-Vector Multiplication

- with 1D/2D partitioning

- Matrix-Matrix Multiplication

- simple parallel algorithm

- Cannons algorithm

- the DNS algorithm

- Solving Systems of Linear Equations

- simple Gaussian elimination

- with pipelining/partial pivoting

- back substitution