Multiple Regression - PowerPoint PPT Presentation


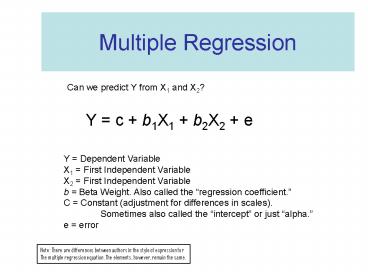


1

Multiple Regression

Can we predict Y from X1 and X2?

- $Y = c + b_1X_1 + b_2X_2 + e$

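where:

- Y = dependent variable
- X1 = first independent variable
- X2 = second independent variable
- b = beta weight, also called the regression coefficient (b1 for X1, b2 for X2)
- c = constant: the predicted value of Y when X1 and X2 are both zero, which adjusts for the differing means of the variables. Sometimes also called the intercept or just alpha.
- e = error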

Note: Authors differ in how they write the multiple regression equation; the elements, however, remain the same.
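
To make the equation concrete, here is a minimal sketch that estimates c, b1 and b2 by ordinary least squares in plain Python 3 (standard library only). The helper name `ols` and the six data points are invented for illustration; in practice a statistics package would do the fitting.

```python
# Sketch: ordinary least squares for Y = c + b1*X1 + b2*X2 + e (standard library only).
# `ols` is a hypothetical helper; the data are made up for illustration.

def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]  # leading 1.0 -> constant c
    k = len(rows[0])
    # Augmented normal equations [X'X | X'y]
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [9.3, 7.8, 19.4, 17.6, 29.1, 27.8]

c, b1, b2 = ols([x1, x2], y)
e = [yi - (c + b1 * u + b2 * v) for yi, u, v in zip(y, x1, x2)]  # residuals (the error term)
print(f"Y = {c:.3f} + {b1:.3f}*X1 + {b2:.3f}*X2 + e")
```

Because the fit solves the normal equations, the residuals e sum to zero and are uncorrelated with X1 and X2.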

2

Notes for multiple regression

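1. If all variables are standardized (converted to z scores), b is expressed as β. β is a standardized partial regression coefficient: the change in Y, in standard deviations, for a one-standard-deviation change in that independent variable, holding the other independent variables constant. (It is closely related to, but not the same as, the partial correlation coefficient.) The constant disappears, because every standardized variable has a mean of zero. Most multiple regression programs report the standardized β weights alongside the unstandardized b weights.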
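
Point 1 can be checked numerically. This minimal sketch, assuming plain Python 3, refits a small invented data set on z scores and compares the result with the rescaling β = b · s_X / s_Y, where s is the sample standard deviation. The helpers `ols` (least squares via the normal equations) and `z_scores` are hypothetical names used only for illustration.

```python
# Sketch: standardized coefficients (beta weights) via z scores (standard library only).
from statistics import mean, stdev


def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def z_scores(v):
    """Convert a list to z scores using the sample mean and sample standard deviation."""
    m, s = mean(v), stdev(v)
    return [(vi - m) / s for vi in v]


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [9.3, 7.8, 19.4, 17.6, 29.1, 27.8]

c, b1, b2 = ols([x1, x2], y)                                          # raw-score weights
c_z, beta1, beta2 = ols([z_scores(x1), z_scores(x2)], z_scores(y))  # standardized weights
print(f"constant after standardizing: {c_z:.2e}")  # zero apart from floating-point noise
print(f"beta1 = {beta1:.3f}, b1 * s_X1 / s_Y = {b1 * stdev(x1) / stdev(y):.3f}")
print(f"beta2 = {beta2:.3f}, b2 * s_X2 / s_Y = {b2 * stdev(x2) / stdev(y):.3f}")
```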

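2. β is typically tested for significance. The null hypothesis is that the independent variable explains none of the variation in Y beyond what is already explained by the other independent variables; the p-value is the probability of obtaining a coefficient at least this far from zero if that hypothesis were true.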
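
One way to carry out this test, sketched below in plain Python 3 under the usual least-squares assumptions, is a partial F test: compare the error sum of squares of the model with and without the predictor. With one predictor dropped, F has (1, n - k - 1) degrees of freedom, where k is the number of predictors, and equals the square of that coefficient's t statistic. The helper names and data are hypothetical. The p-value step is left out because it needs an F-distribution routine (for example `scipy.stats.f.sf(F, 1, n - k - 1)` if SciPy is available).

```python
# Sketch: partial F test for one predictor (standard library only).
# Helper names and data are hypothetical, for illustration.

def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def sse(xs, y):
    """Error (residual) sum of squares after regressing y on xs."""
    coefs = ols(xs, y)
    fitted = [coefs[0] + sum(b * x[i] for b, x in zip(coefs[1:], xs)) for i in range(len(y))]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))


def partial_f(xs, y, j):
    """F for predictor j: extra variation it explains beyond the other predictors in xs."""
    n, k = len(y), len(xs)
    full = sse(xs, y)
    reduced = sse(xs[:j] + xs[j + 1:], y)  # same model without predictor j
    return (reduced - full) / (full / (n - k - 1))


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [9.3, 7.8, 19.4, 17.6, 29.1, 27.8]

for j, name in enumerate(["X1", "X2"]):
    print(f"{name}: partial F = {partial_f([x1, x2], y, j):.3f} with df = (1, {len(y) - 3})")
```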

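3. Multicollinearity occurs when two (or more) independent variables are strongly related, i.e., they measure essentially the same thing. It makes their individual weights unstable and their standard errors large. Remedies: remove one, combine them, or increase the sample size.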
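
The slide does not name a diagnostic, but one common choice (swapped in here, not from the original) is the variance inflation factor, VIF_j = 1 / (1 - R_j²), where R_j² comes from regressing X_j on the other independent variables. With only two predictors, R_j² is just their squared correlation. A minimal plain-Python sketch for that two-predictor case follows; the height data are invented and deliberately record the same thing in two units. A frequently quoted rule of thumb treats a VIF above about 10 as a warning sign, though cutoffs vary between authors.

```python
# Sketch: variance inflation factor (VIF) for two predictors (standard library only).
# With k predictors, VIF_j = 1 / (1 - R_j^2); with two, R_j^2 is the squared correlation.

def pearson_r(a, b):
    """Pearson correlation between two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    sab = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    saa = sum((u - ma) ** 2 for u in a)
    sbb = sum((v - mb) ** 2 for v in b)
    return sab / (saa * sbb) ** 0.5


def vif_two(a, b):
    """VIF shared by two predictors: 1 / (1 - r^2)."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r * r)


height_cm = [150.0, 160.0, 170.0, 180.0, 190.0]  # hypothetical predictor
height_in = [59.1, 63.0, 66.9, 70.9, 74.8]       # the same quantity in inches (rounded)
print(f"VIF = {vif_two(height_cm, height_in):.1f}")
```

Combining the two columns into one measure, or dropping one, removes the inflation.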

3

(No Transcript)

4

Can you visualize having 6 or more independent

variables?

5

Additional Notes

R²: the squared multiple correlation coefficient (R, the multiple correlation, is the correlation between Y and the values predicted by the regression). R² is the proportion of variance in Y explained by the set of independent variables.
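
A minimal plain-Python sketch of R² as 1 - SSE/SST, where SSE is the residual sum of squares and SST is the total sum of squares of Y around its mean. The helpers `ols` and `r_squared` and the data are hypothetical, for illustration only.

```python
# Sketch: R^2 as the proportion of variance in Y explained by the predictors.

def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def r_squared(xs, y):
    """R^2 = 1 - SSE/SST for y regressed on the predictor lists in xs (with a constant)."""
    coefs = ols(xs, y)
    fitted = [coefs[0] + sum(b * x[i] for b, x in zip(coefs[1:], xs)) for i in range(len(y))]
    my = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # unexplained variation
    sst = sum((yi - my) ** 2 for yi in y)                    # total variation in Y
    return 1.0 - sse / sst


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [9.3, 7.8, 19.4, 17.6, 29.1, 27.8]
print(f"R^2 = {r_squared([x1, x2], y):.4f}")
```

Equivalently, R² is the squared correlation between Y and the fitted values, which is why it is called the squared multiple correlation.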

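Order of Entry. Since βi is a partial coefficient, representing the relationship after removing the effects of the other independent variables already entered, βi changes depending on which variables are already in the equation, and therefore on the order of entry. Once all the variables have been entered, the final coefficients are the same whatever the order; what stays order-dependent is the increase in R² credited to each variable.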
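
A minimal plain-Python sketch of entry order with two invented predictors: the coefficient for X1 changes once X2 is in the equation, and the increase in R² credited to each variable depends on which is entered first, while the coefficients of the complete two-predictor model are identical in either order. Helper names and data are hypothetical.

```python
# Sketch: how entry order affects coefficients and credited R^2 (standard library only).

def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def r_squared(xs, y):
    """R^2 = 1 - SSE/SST for y regressed on xs."""
    coefs = ols(xs, y)
    fitted = [coefs[0] + sum(b * x[i] for b, x in zip(coefs[1:], xs)) for i in range(len(y))]
    my = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    return 1.0 - sse / sum((yi - my) ** 2 for yi in y)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]  # correlated with x1
y = [9.3, 7.8, 19.4, 17.6, 29.1, 27.8]

b1_alone = ols([x1], y)[1]          # X1 is the only predictor entered so far
b1_after_x2 = ols([x1, x2], y)[1]   # X1 once X2 is also in the equation
print(f"b1 alone: {b1_alone:.3f}; b1 with X2 in the equation: {b1_after_x2:.3f}")

# Complete model: entering X2 before X1 gives the same coefficients, listed in another order.
c_a, b1_a, b2_a = ols([x1, x2], y)
c_b, b2_b, b1_b = ols([x2, x1], y)

# The R^2 increase credited to each variable depends on which one enters first.
r2_1, r2_2, r2_12 = r_squared([x1], y), r_squared([x2], y), r_squared([x1, x2], y)
print(f"X1 first: X1 adds {r2_1:.4f}, then X2 adds {r2_12 - r2_1:.4f}")
print(f"X2 first: X2 adds {r2_2:.4f}, then X1 adds {r2_12 - r2_2:.4f}")
```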

Stepwise Regression. When you want to know which subsets of independent variables best predict the dependent variable, you can use a stepwise selection process:

1) Forward Selection: Enter independent variables one at a time, choosing at each step the one with the highest correlation with the dependent variable after taking into account the independent variables already included (i.e., the highest partial correlation). Each addition requires recalculating the regression coefficients. Stop when adding another variable fails to "significantly" explain further residual variation in Y.

2) Backward Selection: Start with all the variables in the model and successively drop the least "significant" one until only "significant" predictors remain.
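
Both procedures can be sketched in plain Python 3, assuming partial F statistics as the "significance" criterion and an F-to-enter / F-to-remove cutoff of 4.0 chosen purely for illustration (software packages differ in their defaults and often use p-value cutoffs instead). All helper names and the data are hypothetical. Picking the largest partial F at each forward step is equivalent to picking the largest partial correlation with Y, because the residual degrees of freedom are the same for every candidate at that step.

```python
# Sketch: forward selection and backward elimination driven by partial F statistics.
# The cutoff of 4.0 is an illustrative assumption, not a standard.

def ols(xs, y):
    """Return [c, b1, b2, ...] for y regressed on the predictor lists in xs."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    a = [[sum(r[p] * r[q] for r in rows) for q in range(k)]
         + [sum(r[p] * yi for r, yi in zip(rows, y))]
         for p in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def sse(xs, y):
    """Residual sum of squares after regressing y on xs (a full refit each time)."""
    coefs = ols(xs, y)
    fitted = [coefs[0] + sum(b * x[i] for b, x in zip(coefs[1:], xs)) for i in range(len(y))]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))


def partial_f(base, j, X, y):
    """F for adding predictor j to the predictors whose indices are listed in `base`."""
    without_j = sse([X[i] for i in base], y)
    with_j = sse([X[i] for i in base + [j]], y)
    df = len(y) - len(base) - 2  # n - (number of predictors including j) - 1
    return (without_j - with_j) / (with_j / df)


def forward_select(X, y, f_in=4.0):
    chosen = []
    while len(chosen) < len(X):
        scores = {j: partial_f(chosen, j, X, y) for j in range(len(X)) if j not in chosen}
        best = max(scores, key=scores.get)  # largest partial F = largest partial correlation
        if scores[best] < f_in:
            break  # no remaining variable "significantly" explains residual variation
        chosen.append(best)
    return chosen


def backward_eliminate(X, y, f_out=4.0):
    kept = list(range(len(X)))
    while kept:
        # Partial F of each kept variable, given all the other kept variables
        scores = {j: partial_f([i for i in kept if i != j], j, X, y) for j in kept}
        worst = min(scores, key=scores.get)
        if scores[worst] >= f_out:
            break  # every remaining predictor is "significant"
        kept.remove(worst)
    return kept


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
x3 = [2.0, 7.0, 1.0, 8.0, 2.0, 8.0, 1.0, 8.0]
y = [3.8, 4.3, 8.1, 8.1, 12.7, 16.6, 14.7, 19.2]
X = [x1, x2, x3]

print("forward:", [f"X{j + 1}" for j in forward_select(X, y)])
print("backward:", [f"X{j + 1}" for j in backward_eliminate(X, y)])
```

Forward and backward selection can end with different subsets, so the two are not interchangeable.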