Class Wrap-up - PowerPoint PPT Presentation


1

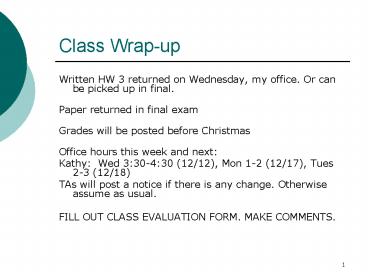

Class Wrap-up

- Written HW 3 returned on Wednesday in my office, or can be picked up at the final

- Paper returned at the final exam

- Grades will be posted before Christmas

- Office hours this week and next

- Kathy: Wed 3:30-4:30 (12/12), Mon 1-2 (12/17), Tues 2-3 (12/18)

- TAs will post a notice if there is any change; otherwise assume the usual hours

- FILL OUT CLASS EVALUATION FORM. MAKE COMMENTS.

2

Life After AI

3

Research Projects

- Send email to project@cs.columbia.edu

- http://www.cs.columbia.edu/project

4

Research Projects

- Natural Language Processing

- Kathy McKeown

- Julia Hirschberg

- Owen Rambow (CCLS)

- Becky Passonneau (CCLS)

- Mona Diab (CCLS)

- Nizar Habash (CCLS)

- Text Classification

- Given a question, is a sentence in a relevant document an answer?

- Given a translated/transcribed sentence, which English sentence is it closest in meaning to?

- NL/UI/Medical collaboration

5

Research Projects

- Vision

- Shree Nayar

- Peter Belhumeur

- John Kender

- Data mining

- Sal Stolfo, but on sabbatical this year

- Machine Learning

- Tony Jebara

- Rocco Servedio (theoretical)

6

Stop by and visit

- CS Advising

- Recommendation letters

- Research project

- Advice on applying to graduate school

7

Final Exam

- Wednesday, December 19th, 833 Mudd

- Cumulative exam

- Slightly more focus on second half of class

- Knowledge Representation

- Logic and inference

- First order logic

- Probabilistic Reasoning and Bayes Nets

- Machine learning

- Vision

- Natural Language processing

- But you can expect search, game playing, and constraint satisfaction to be covered as well

8

Exam Format

- Multiple Choice

- Short answer

- Problem solving

- Essay

9

Problems

- Proof by Refutation

- FOL

- Bayesian Nets

- Machine learning

- Vision/NLP

- Essay

10

Refutation/Resolution

- Add the negated goal sentence to the KB

- Convert to CNF (a conjunction of clauses, each a disjunction of literals)

- Apply resolution search to determine satisfiability (SAT) or unsatisfiability (UNSAT)

- SAT, then not entailed

- Semi-decidability (in first-order logic) means that when the clauses are SAT, the search may never terminate, so we may never find out

- UNSAT, then entailed
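The refutation loop above can be sketched for the propositional case in a few lines. This is a minimal, unoptimized saturation procedure, assuming the KB and negated goal are already in CNF and encoded as sets of string literals with `~` marking negation (an encoding chosen here, not taken from the course). Propositional clause sets are finite, so this loop always halts; the semi-decidability caveat applies to first-order logic.

```python
from itertools import combinations

# Encoding (our assumption): a literal is "A" or "~A"; a clause is a frozenset of literals.

def negate(lit):
    """Complementary literal: "A" <-> "~A"."""
    return lit[1:] if lit.startswith("~") else "~" + lit


def resolvents(c1, c2):
    """Every clause obtainable by resolving c1 and c2 on one complementary pair."""
    return [(c1 - {lit}) | (c2 - {negate(lit)}) for lit in c1 if negate(lit) in c2]


def entails(kb_clauses, negated_goal_clauses):
    """KB |= goal iff KB plus the negated goal is UNSAT (derives the empty clause)."""
    clauses = set(kb_clauses) | set(negated_goal_clauses)
    while True:
        new = set()
        for c1, c2 in combinations(clauses, 2):
            for r in resolvents(c1, c2):
                if not r:              # empty clause: contradiction, so UNSAT
                    return True
                new.add(r)
        if new <= clauses:             # saturated with no contradiction: SAT
            return False
        clauses |= new


# Usage: KB = {A => B, A}, i.e. clauses (~A v B) and (A); goal B, negated as (~B).
kb = [frozenset({"~A", "B"}), frozenset({"A"})]
print(entails(kb, [frozenset({"~B"})]))      # True
print(entails(kb[:1], [frozenset({"~B"})]))  # False: A => B alone does not entail B
```

Tautological resolvents are kept rather than filtered; that wastes some work but does not affect correctness.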

11

Inference Properties

- Inference method A is sound (or truth-preserving) if it derives only entailed sentences

- Inference method A is complete if it can derive any sentence that is entailed

- A proof is a record of the progress of a sound inference algorithm.

12

Resolution

- Prove $D \lor E$

- KB: $(A \Rightarrow C \lor D)$, $(A \lor D \lor E)$, $(A \Rightarrow \neg C)$

13

Negate Goal

14

Negate goal

- $\neg(D \lor E)$

- $\neg D \land \neg E$

15

Convert to Conjunctive Normal Form

16

(No Transcript)

17

Convert to Conjunctive Normal Form

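- Equivalence: $A \Rightarrow B \equiv \neg A \lor B$

- 1. $(\neg A \lor C \lor D) \land$

- 2. $(A \lor D \lor E) \land$

- 3. $(\neg A \lor \neg C) \land$

- 4. $\neg D \land$

- 5. $\neg E$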

18

Apply resolution rule

19

Apply resolution rule

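- 1. $(\neg A \lor C \lor D)$ with 2. $(A \lor D \lor E)$ gives R1: $(C \lor D \lor E)$

- 2. $(A \lor D \lor E)$ with 3. $(\neg A \lor \neg C)$ gives R2: $(D \lor E \lor \neg C)$

- R1 $(C \lor D \lor E)$ with R2 $(D \lor E \lor \neg C)$ gives R3: $(D \lor E)$

- R3 $(D \lor E)$ with 4. $\neg D$ gives R4: $E$

- R4 $E$ with 5. $\neg E$ gives the empty clause: contradiction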
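As a cross-check on this resolution proof, a brute-force truth table (model checking, a different technique from resolution) confirms the entailment. It reads the KB's third sentence as $A \Rightarrow \neg C$, which is the reading the resolution steps require; the function names are ours.

```python
from itertools import product

def kb(A, C, D, E):
    # The KB sentences rewritten as clauses: (~A v C v D), (A v D v E), (~A v ~C)
    return ((not A) or C or D) and (A or D or E) and ((not A) or (not C))

def goal(D, E):
    return D or E

models = list(product([False, True], repeat=4))   # all assignments to A, C, D, E
# Entailment: the goal holds in every model of the KB.
entailed = all(goal(D, E) for A, C, D, E in models if kb(A, C, D, E))
# Sanity check that the KB has models at all (otherwise entailment is vacuous).
consistent = any(kb(*m) for m in models)
print(entailed, consistent)  # True True
```

Truth tables grow as $2^n$ in the number of symbols, which is one reason resolution is preferred for larger knowledge bases.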

20

FOL

- (4 points) Using first-order logic, write axioms describing the predicates Grandmother and Cousin. Use only the predicates Mother, Father, and Sibling in your definitions.

21

- $\forall x\, \forall y\ [grandmother(x,y) \Leftrightarrow \exists z\ (mother(x,z) \land (father(z,y) \lor mother(z,y)))]$

- $\forall x\, \forall y\ [cousin(x,y) \Leftrightarrow \exists z\, \exists w\ ((mother(z,x) \lor father(z,x)) \land sibling(z,w) \land (mother(w,y) \lor father(w,y)))]$
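Over a finite domain, each biconditional can be read as a definition and evaluated directly. The sketch below checks both axioms against a small invented family (all names and facts are hypothetical); each existential quantifier becomes an `any` over the domain, and `parent` is a hypothetical shorthand for the disjunction of Mother and Father.

```python
# Hypothetical family (invented for illustration).
# mother(x, z) reads "x is the mother of z"; father and sibling likewise.
people = {"ann", "bob", "cal", "eve", "fay"}
mother = {("ann", "bob"), ("ann", "cal"), ("cal", "fay")}
father = {("bob", "eve")}
sibling = {("bob", "cal"), ("cal", "bob")}


def parent(p, c):
    """Shorthand for mother(p, c) v father(p, c); a helper, not a new predicate."""
    return (p, c) in mother or (p, c) in father


def grandmother(x, y):
    # exists z: mother(x, z) ^ (father(z, y) v mother(z, y))
    return any((x, z) in mother and parent(z, y) for z in people)


def cousin(x, y):
    # exists z, w: (mother(z, x) v father(z, x)) ^ sibling(z, w) ^ (mother(w, y) v father(w, y))
    return any(parent(z, x) and (z, w) in sibling and parent(w, y)
               for z in people for w in people)


print(sorted((x, y) for x in people for y in people if grandmother(x, y)))
# [('ann', 'eve'), ('ann', 'fay')]
print(sorted((x, y) for x in people for y in people if cousin(x, y)))
# [('eve', 'fay'), ('fay', 'eve')]
```

Note that siblings bob and cal are not reported as cousins: their shared parent ann has no sibling in this database.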

22

- (4 points) Translate each of the following English sentences into the language of standard first-order logic, including quantifiers. Use the predicates French(x), Australian(x), Wine(x), >, and the functions Price(x) and Quality(x).

- All French wines cost more than all Australian wines.

- The best Australian wine is better than some French wines.

23

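- $\forall w\, \forall y\ [Wine(w) \land French(w) \land Wine(y) \land Australian(y) \Rightarrow Price(w) > Price(y)]$

- $\exists w\, \exists y\ [Wine(w) \land Australian(w) \land Wine(y) \land French(y) \land Quality(w) > Quality(y) \land \forall z\ (Wine(z) \land Australian(z) \Rightarrow Quality(w) \ge Quality(z))]$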
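One way to check a translation is to evaluate it over a small finite model. The sketch below does that for both sentences using invented wines (names, prices, and qualities are hypothetical). Every entry in the dictionary is a wine, so `Wine(x)` holds implicitly, and the quantifiers become `all` and `any`.

```python
# Hypothetical wines (all data invented): name -> (country, price, quality).
# Every key is a wine, so Wine(x) is true for every x in this model.
wines = {
    "bordeaux": ("French", 40, 7),
    "chablis": ("French", 30, 9),
    "shiraz": ("Australian", 20, 8),
    "riesling": ("Australian", 15, 6),
}

def price(w): return wines[w][1]
def quality(w): return wines[w][2]

french = [w for w in wines if wines[w][0] == "French"]
australian = [w for w in wines if wines[w][0] == "Australian"]

# Sentence 1: for all w, y: French(w) ^ Australian(y) => Price(w) > Price(y)
s1 = all(price(w) > price(y) for w in french for y in australian)

# Sentence 2: exists w, y: Australian(w) ^ French(y) ^ Quality(w) > Quality(y)
#             ^ for all z: Australian(z) => Quality(w) >= Quality(z)
s2 = any(quality(w) > quality(y)
         and all(quality(w) >= quality(z) for z in australian)
         for w in australian for y in french)

print(s1, s2)  # True True
```

The inner `all` is what makes `w` the best Australian wine; dropping it would only say that some Australian wine beats some French wine.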

24

Bayesian Networks: What do you need to know?

- Why are independence and conditional independence important?

- What is Bayes Rule used for? How?

- How to construct a Bayesian net for a given problem

- What are the independence assumptions?

- How to do inference using a Bayesian net (i.e., what does it predict?)

25

An Example

- Smoking causes cancer

- Graph?

- Conditional Probability Tables?

26

(No Transcript)

27

How do we compute inferences?

- What is the probability that a person has a malignant tumor and is not a smoker?

- What is the probability that a person has a benign tumor given that she is a light smoker?
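With CPTs in hand, both questions follow from the chain rule. The slide's tables are not in this transcript, so the sketch below uses invented numbers for a two-node net Smoking → Cancer, assuming Smoking takes values none/light/heavy and Cancer takes none/benign/malignant (value names and probabilities are our assumptions).

```python
# Hypothetical CPTs for Smoking -> Cancer (numbers invented for illustration).
P_smoking = {"none": 0.80, "light": 0.15, "heavy": 0.05}
P_cancer_given_smoking = {
    "none":  {"none": 0.96, "benign": 0.03, "malignant": 0.01},
    "light": {"none": 0.88, "benign": 0.08, "malignant": 0.04},
    "heavy": {"none": 0.60, "benign": 0.25, "malignant": 0.15},
}

def joint(s, c):
    """Chain rule for this net: P(S=s, C=c) = P(s) * P(c | s)."""
    return P_smoking[s] * P_cancer_given_smoking[s][c]

def conditional_cancer(c, s):
    """P(C=c | S=s) = P(s, c) / sum over c' of P(s, c')."""
    return joint(s, c) / sum(joint(s, c2) for c2 in P_cancer_given_smoking[s])

p1 = joint("none", "malignant")          # malignant tumor AND not a smoker
p2 = conditional_cancer("benign", "light")  # benign tumor GIVEN light smoker
print(f"{p1:.3f} {p2:.3f}")  # 0.008 0.080
```

Because the query runs in the direction of the arrow, the second answer is just a CPT entry; the normalization is shown only to make the general route explicit.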

28

(No Transcript)

29

Can we compute inferences in the opposite direction?

- What is the probability that a person is not a smoker if I know that he has a benign tumor?

- What is the probability that a person is a smoker if I know that he has a malignant tumor?

- Under what circumstances is it helpful to compute inferences in the reverse direction?
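Queries like these run against the arrow (from an observed effect back to a cause), so they need Bayes rule. The sketch below reuses a two-node net Smoking → Cancer with invented CPTs (not the slide's numbers) and treats "is a smoker" as Smoking being light or heavy, which is our assumption.

```python
# Hypothetical CPTs for Smoking -> Cancer (numbers invented for illustration).
P_smoking = {"none": 0.80, "light": 0.15, "heavy": 0.05}
P_cancer_given_smoking = {
    "none":  {"none": 0.96, "benign": 0.03, "malignant": 0.01},
    "light": {"none": 0.88, "benign": 0.08, "malignant": 0.04},
    "heavy": {"none": 0.60, "benign": 0.25, "malignant": 0.15},
}

def posterior_smoking(c):
    """Bayes rule: P(S=s | C=c) = P(c | s) P(s) / sum over s' of P(c | s') P(s')."""
    unnormalized = {s: P_cancer_given_smoking[s][c] * p for s, p in P_smoking.items()}
    evidence = sum(unnormalized.values())   # P(C=c), marginalizing out Smoking
    return {s: v / evidence for s, v in unnormalized.items()}

post_benign = posterior_smoking("benign")
post_malignant = posterior_smoking("malignant")
p_nonsmoker_benign = post_benign["none"]
p_smoker_malignant = post_malignant["light"] + post_malignant["heavy"]
print(f"{p_nonsmoker_benign:.3f} {p_smoker_malignant:.3f}")  # 0.495 0.628
```

With these made-up numbers, a malignant tumor raises the probability of being a smoker from the prior 0.20 to about 0.63.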

30

(No Transcript)

31

[Figure: three ways a middle node b can connect nodes a and c: Linear, Diverging, Converging]

32

D-separation (opposite of d-connecting)

- A path from q to r is d-connecting with respect to the evidence nodes E if every interior node n in the path has the property that either

- It is linear or diverging and is not a member of E

- It is converging and either n or one of its descendants is in E

- If no path from q to r is d-connecting (q and r are d-separated by E), then q and r are conditionally independent given E
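The definition above can be checked mechanically. This sketch enumerates every undirected simple path between two nodes (exponential in general, but fine for small textbook nets) and applies the interior-node test; the dict-of-children graph encoding and the function names are our own. Independence requires every path to be blocked, which is why `d_separated` checks all of them.

```python
def descendants(graph, n):
    """Nodes reachable from n by following edges forward (parent -> child)."""
    seen, stack = set(), list(graph.get(n, []))
    while stack:
        m = stack.pop()
        if m not in seen:
            seen.add(m)
            stack.extend(graph.get(m, []))
    return seen


def undirected_paths(graph, q, r):
    """Yield every simple path from q to r, ignoring edge direction."""
    nbrs = {}
    for u, children in graph.items():
        for v in children:
            nbrs.setdefault(u, set()).add(v)
            nbrs.setdefault(v, set()).add(u)

    def extend(path):
        if path[-1] == r:
            yield path
            return
        for m in nbrs.get(path[-1], ()):
            if m not in path:
                yield from extend(path + [m])

    yield from extend([q])


def d_connecting(graph, path, evidence):
    """Apply the interior-node test to every interior node n of the path."""
    for prev, n, nxt in zip(path, path[1:], path[2:]):
        converging = n in graph.get(prev, []) and n in graph.get(nxt, [])
        if converging:
            # Converging: needs n or one of its descendants in the evidence.
            if n not in evidence and not (descendants(graph, n) & evidence):
                return False
        elif n in evidence:
            # Linear or diverging: must NOT be in the evidence.
            return False
    return True


def d_separated(graph, q, r, evidence=()):
    """q and r are d-separated by the evidence if no path between them is d-connecting."""
    ev = set(evidence)
    return not any(d_connecting(graph, p, ev) for p in undirected_paths(graph, q, r))


# The three connection types, encoded as parent -> list of children.
linear = {"a": ["b"], "b": ["c"]}          # a -> b -> c
diverging = {"b": ["a", "c"]}              # a <- b -> c
converging = {"a": ["b"], "c": ["b"]}      # a -> b <- c
for name, g in [("linear", linear), ("diverging", diverging), ("converging", converging)]:
    print(name, d_separated(g, "a", "c"), d_separated(g, "a", "c", {"b"}))
# linear False True
# diverging False True
# converging True False
```

The converging case behaves opposite to the other two: observing b (or any descendant of b) opens the path instead of blocking it.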

33

(No Transcript)

34

- Are age and gender independent?

- Is cancer independent of age and gender given smoking?

- Is serum calcium independent of lung tumor?

- Is serum calcium independent of lung tumor given cancer?

35

(No Transcript)

36

(No Transcript)

37

(No Transcript)

38

Advanced approaches: NLP and vision

- How much do you need to know?

- Some idea of the applications

- Some idea of the approaches

- Reduction to simpler problems, e.g., edge detection

- NLP: syntax, semantics, pragmatics, statistics

- How do these applications fit into our view of artificial intelligence?
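As one concrete instance of reducing vision to a simpler problem, edge detection can be sketched as thresholding the intensity gradient. The version below is a minimal illustration on a tiny invented image, not the method taught in the course; it uses central differences as a simplified stand-in for operators such as Sobel, and the threshold value is arbitrary.

```python
def edge_map(img, threshold):
    """Mark interior pixels whose gradient magnitude exceeds threshold.

    Gradients use central differences; border pixels are left unmarked.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2   # horizontal change
            gy = (img[y + 1][x] - img[y - 1][x]) / 2   # vertical change
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                out[y][x] = 1
    return out


# Invented 5x5 grayscale image: dark left, bright right (a vertical step edge).
img = [[0, 0, 9, 9, 9] for _ in range(5)]
for row in edge_map(img, threshold=2):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 0, 0, 0, 0]
```

The marked band is two pixels wide because central differences straddle the step; real detectors usually thin edges afterwards.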

39

- (2 points) Answer this question for either the natural language problem or the vision problem (not both).

- Vision: You are writing a supervised learning program to identify the handwritten digits 1-9. What would your training material be? What features might your program use?

- Natural language: You are writing a supervised learning program to translate from French to English. What would your training material be? What features might your program use?

40

- 3. Learning from Observations (10 points)

- Ms. Apple I. Podd is a Columbia University Computer Science major who listens to music almost everywhere she goes. She often has homework due, and much of it involves programming. We've sampled her choice of music genre over several points in time.

- Naive Bayes is another machine learning method, but it is based on probabilities. The formula below can be used to compute the most probable target category given a new instance. Suppose a friend of yours observes Ms. Podd in the morning. She has homework due and it does not involve programming. Use the formula to show what type of music Naïve Bayes will predict she is listening to.

$$v_{NB} = \arg\max_{v_j \in V} P(v_j) \prod_i P(a_i \mid v_j)$$

41

$$v_{NB} = \arg\max_{v_j \in V} P(v_j) \prod_i P(a_i \mid v_j)$$
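The formula can be applied mechanically once each probability is estimated by counting. The slide's observation table is not in this transcript, so the sketch below uses an invented table with three attributes (time of day, homework due, involves programming) and invented genres; all of these are assumptions. It uses plain relative frequencies with no smoothing, so an attribute value never seen with a class (jazz in the morning here) drives that class's score to zero.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical observations (invented; the slide's table is not available):
# (time of day, homework due?, involves programming?) -> music genre
data = [
    (("morning",   "yes", "yes"), "classical"),
    (("morning",   "yes", "no"),  "rock"),
    (("evening",   "no",  "no"),  "rock"),
    (("afternoon", "yes", "yes"), "classical"),
    (("afternoon", "no",  "no"),  "rock"),
    (("evening",   "yes", "yes"), "jazz"),
    (("afternoon", "yes", "no"),  "jazz"),
    (("morning",   "yes", "no"),  "classical"),
]

def naive_bayes(data, instance):
    """v_NB = argmax_v P(v) * prod_i P(a_i | v), using relative-frequency estimates."""
    class_counts = Counter(v for _, v in data)
    scores = {}
    for v, n_v in class_counts.items():
        score = Fraction(n_v, len(data))                      # P(v_j)
        for i, a in enumerate(instance):
            n_match = sum(1 for x, label in data if label == v and x[i] == a)
            score *= Fraction(n_match, n_v)                   # P(a_i | v_j)
        scores[v] = score
    return max(scores, key=scores.get), scores

prediction, scores = naive_bayes(data, ("morning", "yes", "no"))
print(prediction)                                  # classical
print({v: str(s) for v, s in scores.items()})      # {'classical': '1/12', 'rock': '1/24', 'jazz': '0'}
```

Exact fractions keep the arithmetic easy to check by hand, e.g. classical scores $\frac{3}{8} \cdot \frac{2}{3} \cdot \frac{3}{3} \cdot \frac{1}{3} = \frac{1}{12}$.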

42

Knowledge Representation

- Given an entry in WordNet, show the pieces of the ontology that are implied

- Given a machine learning problem, show how the ontology might be used to improve accuracy of learning

- Given a statement in English, show how to represent it in a semantic network
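A semantic network can be sketched as nodes joined by is-a links, each carrying local properties; following the is-a chain upward is what makes an entry's ancestor categories "implied". The fragment below is a hypothetical toy (node names and properties are ours, not actual WordNet data), and it resolves conflicts by letting the most specific node win.

```python
# Toy semantic network (hypothetical; not real WordNet data).
# Each node has an is_a link (its hypernym) and a dict of local properties.
network = {
    "animal":  {"is_a": None,     "props": {"alive": True}},
    "bird":    {"is_a": "animal", "props": {"can_fly": True, "has_feathers": True}},
    "penguin": {"is_a": "bird",   "props": {"can_fly": False}},
    "tweety":  {"is_a": "bird",   "props": {}},
}

def ancestors(node):
    """Follow is_a links upward; these are the categories an entry implies."""
    chain = []
    parent = network[node]["is_a"]
    while parent is not None:
        chain.append(parent)
        parent = network[parent]["is_a"]
    return chain

def lookup(node, prop):
    """Inherit a property: check the node itself first, then each ancestor in order."""
    for n in [node] + ancestors(node):
        if prop in network[n]["props"]:
            return network[n]["props"][prop]
    return None

print(ancestors("penguin"))          # ['bird', 'animal']
print(lookup("penguin", "can_fly"))  # False (the local value overrides bird's)
print(lookup("tweety", "can_fly"))   # True (inherited from bird)
```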

43

- Thank you, good luck on the final exam, and have a great winter break!

- See you on the 19th!