Linear and Nonlinear Data Dimensionality Reduction

1

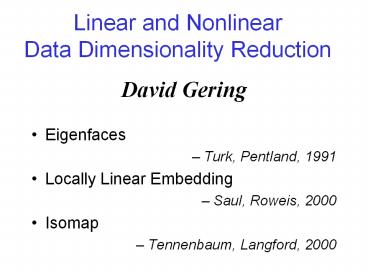

Linear and Nonlinear Data Dimensionality Reduction

David Gering

- Eigenfaces

- Turk, Pentland, 1991

- Locally Linear Embedding

- Saul, Roweis, 2000

- Isomap

- Tenenbaum, de Silva, Langford, 2000

2

Agenda

- Introduction to the Problem

- Background on PCA, MDS

- Eigenfaces

- LLE

- Isomap

- Summary

3

Data Dimensionality Reduction for Images

$D \gg d$

5

Principal Component Analysis

- 3 Approaches

- Least Squares Dist.

- Change of Variables

- Matrix Factorization

6

PCA Approach 1: Least Squares Dist.

Pearson, 1901

0-Dimensional: single point

$D = 2$: $x_k = [x_{k1} \; x_{k2}]^T$

7

PCA Approach 1: Least Squares Dist.

1-Dimensional: single line

[Figure: standard axes I1 and I2, mean m, and line direction u]

$D = 2$: $x_k = [x_{k1} \; x_{k2}]^T$

$d = 1$: $y_k = [y_{k1}]^T$
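A minimal sketch of the 1-dimensional case, assuming NumPy and synthetic 2-D data (the variable names and the data are illustrative, not from the slides). It takes the line through the mean m along the leading eigenvector u of the scatter matrix and checks that this line gives a smaller sum of squared perpendicular distances than an arbitrary alternative direction.

```python
import numpy as np

# Synthetic D = 2 data scattered around a line (illustrative only).
rng = np.random.default_rng(1)
t = rng.normal(size=200)
X = np.column_stack([2.0 * t, t]) + 0.1 * rng.normal(size=(200, 2))

m = X.mean(axis=0)                    # the best 0-dimensional fit is the mean
S = (X - m).T @ (X - m)               # scatter matrix (D x D)
evals, evecs = np.linalg.eigh(S)      # eigenvalues in ascending order
u = evecs[:, -1]                      # unit direction with the largest scatter
y = (X - m) @ u                       # d = 1 components y_k1

def squared_distance_to_line(X, m, u):
    """Sum of squared perpendicular distances from the rows of X to the line m + a*u."""
    centered = X - m
    residual = centered - np.outer(centered @ u, u)
    return np.sum(residual ** 2)

best = squared_distance_to_line(X, m, u)
other = squared_distance_to_line(X, m, np.array([1.0, 0.0]))
```

With D = 2, the residual of the best line equals the smaller eigenvalue of the scatter matrix, which is one way to sanity-check the fit.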

8

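PCA Approach 1: Least Squares Dist.

d-Dimensional: d lines

[Figure: standard axes I1 and I2, mean m, and principal axes u1 and u2]

$I_i$ = standard basis (axes), $x_{ij}$ = standard components

$u_i$ = principal axes, $y_{ij}$ = principal components

$D = 2$: $x_k = [x_{k1} \; x_{k2}]^T$

$d = 2$: $y_k = [y_{k1} \; y_{k2}]^T$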

9

PCA Approach 1: Least Squares Dist.

d-Dimensional: d lines

[Figure: two panels, each showing standard axes I1 and I2, mean m, and principal axes u1 and u2]

10

PCA Approach 2: Change of Variables

Hotelling, 1933

The $k$th principal component is the linear combination $u_k^T x$ that maximizes $\mathrm{Var}(u_k^T x)$ subject to $u_k^T u_k = 1$ and $\mathrm{Cov}(u_k^T x, u_j^T x) = 0$ for $j < k$.
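The variance-maximizing directions under these constraints are the eigenvectors of the sample covariance matrix, ordered by decreasing eigenvalue. The sketch below assumes NumPy and synthetic data; the function name `pca_eig` and the test data are illustrative.

```python
import numpy as np

def pca_eig(X, d):
    """PCA by eigendecomposition of the sample covariance.

    X is N x D (one sample per row). Returns the mean, the D x d matrix of
    principal axes, the variance along each axis, and the N x d components.
    """
    m = X.mean(axis=0)
    Xc = X - m
    S = Xc.T @ Xc / (X.shape[0] - 1)        # sample covariance (D x D)
    evals, evecs = np.linalg.eigh(S)        # ascending eigenvalues
    order = np.argsort(evals)[::-1][:d]     # keep the d largest
    U = evecs[:, order]                     # columns u_1 ... u_d, unit length
    Y = Xc @ U                              # y_k = U^T (x_k - m)
    return m, U, evals[order], Y

# Synthetic correlated 2-D data (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2)) @ np.array([[3.0, 0.0], [1.0, 0.5]])
m, U, var, Y = pca_eig(X, d=2)
```

The resulting components are uncorrelated, and their variances are the eigenvalues, so `np.cov(Y.T)` is diagonal up to rounding.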

11

PCA Approach 3: Matrix Factorization

12

PCA Approach 3: Matrix Factorization
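A minimal sketch of the matrix-factorization view, assuming the factorization in question is the singular value decomposition of the mean-centered data matrix (a common formulation, though the slide does not spell it out). The function name `pca_svd` and the random data are illustrative.

```python
import numpy as np

def pca_svd(X, d):
    """PCA through the SVD of the centered N x D data matrix."""
    m = X.mean(axis=0)
    Xc = X - m
    # Xc = U_left @ diag(s) @ Vt, with singular values s in descending order.
    U_left, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    axes = Vt[:d].T                 # D x d principal axes
    Y = Xc @ axes                   # N x d principal components
    X_hat = m + Y @ axes.T          # rank-d reconstruction of X
    return axes, Y, X_hat, s

# Random data standing in for real measurements (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
d = 1
axes, Y, X_hat, s = pca_svd(X, d)
reconstruction_error = np.sum((X - X_hat) ** 2)
```

The squared reconstruction error equals the sum of the squared discarded singular values, which ties this view back to the least-squares formulation.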

13

MDS Classical Multidimensional Scaling
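A minimal sketch of classical MDS, assuming NumPy: the squared distance matrix is double-centered to obtain a Gram matrix, and the top eigenvectors scaled by the square roots of their eigenvalues give the coordinates. The function name `classical_mds` and the test points are illustrative.

```python
import numpy as np

def classical_mds(D, d):
    """Embed N points in d dimensions from an N x N matrix of pairwise distances."""
    N = D.shape[0]
    J = np.eye(N) - np.ones((N, N)) / N        # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                # Gram matrix of centered points
    evals, evecs = np.linalg.eigh(B)           # ascending eigenvalues
    order = np.argsort(evals)[::-1][:d]        # keep the d largest
    lam = np.clip(evals[order], 0.0, None)     # guard against tiny negatives
    return evecs[:, order] * np.sqrt(lam)      # N x d coordinates

# Illustrative check: recover 2-D points from their Euclidean distances.
rng = np.random.default_rng(0)
P = rng.normal(size=(20, 2))
DX = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)
Y = classical_mds(DX, d=2)
DY = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
```

When the distances are Euclidean, the embedding reproduces them exactly (up to rotation and reflection of the coordinates), which is why MDS on Euclidean distances gives the same result as PCA.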

14

PCA Summary

Compression

16

Eigenfaces

- PCA

- Project faces onto a face space that spans the significant variations of known faces

- Projected face = weighted sum of eigenfaces

- Eigenfaces are the eigenvectors (principal axes) of the scatter matrix that span face space

17

Eigenfaces Algorithm

18

Eigenfaces Experimental Results

19

Eigenfaces Applications

- (Training)

  - Calculate the basis from the training set images.

  - Project the training images into FaceSpace

- Compression

  - Project the test image into FaceSpace

- Detection

  - Determine if the image is a face by measuring its distance from FaceSpace

- Recognition

  - If it is a face, compare it to the training images (using FaceSpace coordinates)

- Knobification
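The training, compression, detection, and recognition steps above can be sketched as follows. This is a minimal illustration assuming NumPy, flattened images stored as rows, and the usual trick of eigendecomposing the small N x N matrix when N is much smaller than D. All function names are hypothetical, and random vectors stand in for real face images.

```python
import numpy as np

def train_eigenfaces(faces, d):
    """faces: N x D matrix, one flattened image per row. Returns mean and D x d basis."""
    mean_face = faces.mean(axis=0)
    A = faces - mean_face                          # N x D centered images
    evals, evecs = np.linalg.eigh(A @ A.T)         # small N x N problem (assumes N << D)
    order = np.argsort(evals)[::-1][:d]            # d largest eigenvalues
    U = A.T @ evecs[:, order]                      # lift to D-dimensional eigenfaces
    U /= np.linalg.norm(U, axis=0)                 # normalize each eigenface
    return mean_face, U

def project(image, mean_face, U):
    """Compression: FaceSpace coordinates of one image."""
    return U.T @ (image - mean_face)

def distance_from_face_space(image, mean_face, U):
    """Detection: norm of the part of the image that FaceSpace cannot represent."""
    centered = image - mean_face
    return np.linalg.norm(centered - U @ (U.T @ centered))

def recognize(image, mean_face, U, train_coords):
    """Recognition: index of the nearest training image in FaceSpace."""
    y = project(image, mean_face, U)
    return int(np.argmin(np.linalg.norm(train_coords - y, axis=1)))

# Random vectors standing in for 10 training images of 64 pixels each.
rng = np.random.default_rng(0)
faces = rng.normal(size=(10, 64))
mean_face, U = train_eigenfaces(faces, d=9)
train_coords = (faces - mean_face) @ U
probe = faces[3] + 0.01 * rng.normal(size=64)      # noisy copy of training image 3
match = recognize(probe, mean_face, U, train_coords)
dist_face = distance_from_face_space(faces[3], mean_face, U)
dist_other = distance_from_face_space(rng.normal(size=64), mean_face, U)
```

In practice a threshold on the distance from FaceSpace would separate faces from non-faces; choosing that threshold is application specific and is not shown here.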

20

Eigenfaces Advantages

- Discovers structure of data lying near a linear subspace of the input space

- Unsupervised learning

- Linear nature easily visualized

- Simple implementation

- No assumptions regarding statistical distribution of data

- Non-iterative, globally optimal solution

- Polynomial time complexity

  - Training $O(N^3)$

  - Test O(DND)

21

Eigenfaces Disadvantages

- Not capable of discovering nonlinear degrees of freedom

- Optimal only when $x_i$ form a hyperellipsoid cloud

  - Multi-dimensional Gaussian distribution

  - Consequence of least-squares derivation

- Unable to answer how well new data are fit probabilistically

- Registration and scaling issues

- Compression >> Detection >> Recognition

- Eigenfaces are not logical face components

23

Locally Linear Embedding (LLE)

Main Idea: Overlapping local structure, collectively analyzed, can provide information about global geometry

24

LLE Algorithm

25

LLE Algorithm

| Step | Complexity | Name | Description |
| --- | --- | --- | --- |
| 1 | $O(DN^2)$ | K neighbors | Compute the neighbors of each data point $x_i$ |
| 2 | $O(DNK^3)$ | $W_{ij}$ | Compute the weights $W_{ij}$ that best reconstruct each data point $x_i$ from its neighbors |
| 3 | $O(dN^2)$ | $y_i$ | Compute the vectors $y_i$ that are best reconstructed by the weights $W_{ij}$ |
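The three steps can be sketched in NumPy as below. This is a minimal dense implementation for illustration, not an efficient one: it does not exploit sparsity, and the small regularization term `reg` added to each local Gram matrix is an assumption introduced to keep the linear solve stable. The function name `lle` and the test curve are illustrative.

```python
import numpy as np

def lle(X, n_neighbors, d, reg=1e-3):
    """Locally Linear Embedding of the N x D matrix X into d dimensions."""
    N = X.shape[0]

    # Step 1: K nearest neighbors of each point by Euclidean distance.
    sq = np.sum(X ** 2, axis=1)
    D2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(D2, np.inf)                     # a point is not its own neighbor
    nbrs = np.argsort(D2, axis=1)[:, :n_neighbors]

    # Step 2: weights that best reconstruct x_i from its neighbors, rows sum to 1.
    W = np.zeros((N, N))
    for i in range(N):
        Z = X[nbrs[i]] - X[i]                        # neighbors relative to x_i
        C = Z @ Z.T                                  # local Gram matrix (K x K)
        C += (reg * np.trace(C) + 1e-12) * np.eye(n_neighbors)
        w = np.linalg.solve(C, np.ones(n_neighbors))
        W[i, nbrs[i]] = w / w.sum()

    # Step 3: coordinates y_i best reconstructed by W, from the bottom
    # eigenvectors of M = (I - W)^T (I - W), skipping the constant one.
    I = np.eye(N)
    M = (I - W).T @ (I - W)
    evals, evecs = np.linalg.eigh(M)                 # ascending eigenvalues
    return evecs[:, 1:d + 1], W

# Illustrative data: a smooth open curve in 3-D.
t = np.linspace(0.0, 3.0, 200)
X = np.column_stack([t * np.cos(t), t * np.sin(t), 0.5 * t])
Y, W = lle(X, n_neighbors=8, d=1)
```

Each row of `W` has at most K nonzero entries, which is the sparsity that makes the method space efficient when a sparse matrix type is used instead of the dense array here.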

26

LLE Advantages

- Ability to discover nonlinear manifolds of arbitrary dimension

- Non-iterative

- Global optimality

- Few parameters: K, d

- Captures context

- $O(DN^2)$ and space efficient due to sparse matrix

27

LLE Disadvantages

- Requires smooth, non-closed, densely sampled manifold

- Must choose parameters K, d

- Quality of manifold characterization dependent on neighborhood choice

  - Fixed radius would allow K to vary locally

  - Clustering would help high, irregular curvature

- Sensitive to outliers

  - Weight less those $W_i$ for points with poor reconstructions (in least squares sense)

28

Comparisons LLE vs Eigenfaces

- PCA: find embedding coordinate vectors that minimize distance to all data points

- LLE: find embedding coordinate vectors that best fit local neighborhood relationships

- Application to Recognition and Detection (map test image from input space to manifold space)

  - Determine novel point's K neighbors

  - Compute test point's reconstruction as linear combination of training points

  - Approximate point's manifold coordinates by applying its weights to Y from training stage
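The mapping of a novel point described above can be sketched as follows, assuming NumPy. The function name `map_new_point` and the regularization term `reg` are assumptions for illustration, and the training embedding `Y_train` is a stand-in (the known curve parameter) rather than the output of an actual LLE run.

```python
import numpy as np

def map_new_point(x_new, X_train, Y_train, n_neighbors, reg=1e-3):
    """Approximate manifold coordinates of x_new from its training neighbors."""
    # Determine the novel point's K nearest training neighbors.
    dist2 = np.sum((X_train - x_new) ** 2, axis=1)
    nbrs = np.argsort(dist2)[:n_neighbors]

    # Reconstruct x_new as a linear combination of those neighbors (weights sum to 1).
    Z = X_train[nbrs] - x_new
    C = Z @ Z.T
    C += (reg * np.trace(C) + 1e-12) * np.eye(n_neighbors)
    w = np.linalg.solve(C, np.ones(n_neighbors))
    w /= w.sum()

    # Apply the same weights to the neighbors' embedding coordinates.
    return w @ Y_train[nbrs], nbrs, w

# Illustrative setup: points on an open arc, with the arc parameter t
# used as a stand-in for the 1-D embedding from the training stage.
t = np.linspace(0.0, np.pi, 100)
X_train = np.column_stack([np.cos(t), np.sin(t)])
Y_train = t[:, None]
x_new = np.array([np.cos(1.0), np.sin(1.0)])
y_new, nbrs, w = map_new_point(x_new, X_train, Y_train, n_neighbors=4)
```

Here `y_new` lands close to the true parameter value 1.0, because the new point's neighbors bracket it along the arc.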

30

Isomap

Main Idea: Use approximate geodesic distance instead of Euclidean distance

31

Isomap Algorithm

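| Step | Complexity | Name | Description |
| --- | --- | --- | --- |
| 1 | $O(DN^2)$ | Construct neighborhood graph, G | Compute matrix $D_G = \{d_X(i,j)\}$, where $d_X(i,j)$ is the Euclidean distance between neighbors |
| 2 | $O(DN^2)$ | Compute shortest paths between all pairs | Compute matrix $D_G = \{d_G(i,j)\}$, where $d_G(i,j)$ is the length of a sequence of hops, approximating the geodesic distance |
| 3 | $O(dN^2)$ | Construct d-dimensional coordinate vectors, $y_i$ | Apply MDS to $D_G$ instead of $D_X$ |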
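A minimal NumPy sketch of the three steps. For simplicity it uses a dense K-nearest-neighbor graph and the Floyd-Warshall algorithm for shortest paths, which costs O(N^3) and is a swapped-in choice rather than the method the complexity column refers to. The function name `isomap` and the test arc are illustrative.

```python
import numpy as np

def isomap(X, n_neighbors, d):
    """Isomap embedding of the N x D matrix X into d dimensions."""
    N = X.shape[0]

    # Step 1: neighborhood graph with Euclidean edge lengths (inf = no edge).
    # Assumes no duplicate points, so column 0 of the argsort is the point itself.
    DX = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    G = np.full((N, N), np.inf)
    np.fill_diagonal(G, 0.0)
    nbrs = np.argsort(DX, axis=1)[:, 1:n_neighbors + 1]
    rows = np.repeat(np.arange(N), n_neighbors)
    G[rows, nbrs.ravel()] = DX[rows, nbrs.ravel()]
    G = np.minimum(G, G.T)                        # make the graph undirected

    # Step 2: all-pairs shortest paths (Floyd-Warshall) approximate geodesics.
    for k in range(N):
        G = np.minimum(G, G[:, k:k + 1] + G[k:k + 1, :])
    if np.isinf(G).any():
        raise ValueError("neighborhood graph is disconnected; increase n_neighbors")

    # Step 3: classical MDS applied to the graph distances.
    J = np.eye(N) - np.ones((N, N)) / N
    B = -0.5 * J @ (G ** 2) @ J
    evals, evecs = np.linalg.eigh(B)
    order = np.argsort(evals)[::-1][:d]
    Y = evecs[:, order] * np.sqrt(np.clip(evals[order], 0.0, None))
    return Y, G

# Illustrative data: three quarters of a unit circle in 2-D.
t = np.linspace(0.0, 1.5 * np.pi, 100)
X = np.column_stack([np.cos(t), np.sin(t)])
Y, G = isomap(X, n_neighbors=4, d=1)
end_to_end_euclidean = np.linalg.norm(X[0] - X[-1])
end_to_end_geodesic = G[0, -1]
```

On this arc the two ends are about 1.41 apart in Euclidean distance but about 4.71 apart along the curve, and the 1-D embedding reflects the latter.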

32

Isomap Algorithm

33

Isomap Comparison Eigenfaces

- Eigenfaces w/ MDS

  - $D_X$ derived from X in Euclidean space

- Only difference with Isomap

  - $D_G$ is computed in approximate geodesic space

- Map test image to manifold

  - Compute $d_X$ to neighbors

  - Interpolate y of neighbors

34

Isomap Advantages

- Nonlinear

- Non-iterative

- Globally optimal

- Parameters K, d

35

Isomap Comparison to LLE

- Similarities

  - Begin with preprocessing step to identify neighbors

  - Preserve intrinsic geometry of data by computing local measures, after which data can be discarded

  - Overcome limitations of attempts to extend PCA

- Difference in local measure

  - Geodesic distance vs. neighborhood relationship

- Differences in application

  - Depends how well local metrics characterize manifold

  - Isomap more robust to outliers

  - Isomap preserves distance, LLE angles

  - LLE avoids complexity of pairwise distance computation

36

Choosing the Algorithm for the Application

- Smooth manifolds manifest themselves as slightly noticeable changes between an ordered set of examples

  - Video sequence

  - Data that could conceptually be organized as an aesthetically pleasing video sequence

- Presence of nonlinear structure not known a priori

  - Run both PCA and manifold learning to inspect results for discrepancy

- Preservation of geodesic distances

38

Summary

| | Eigenfaces | LLE | Isomap |
| --- | --- | --- | --- |
| Optimization Constraint | Linear combination of original coordinates that accounts for most variance | Local intrinsic geometry represented as linear combination of neighbors | Geodesic distance approximated as neighbor-to-neighbor hopping distance |
| Parameters | None | K or ε | K or ε |
| Global Optimality | Yes | Yes | Yes |
| Nonlinear Manifolds | No | Yes | Yes |
| Training Complexity | $O(N^3)$ | $O(DN^2)$ | $O(DN^2)$ |

39

Isomap Disadvantages

- Guaranteed asymptotically to recover geometric structure of nonlinear manifolds

  - As N increases, pairwise distances provide better approximations to geodesics by hugging surface more closely

  - Graph discreteness overestimates $d_M(i,j)$

- K must be small enough to avoid linear shortcuts near regions of high surface curvature

- Mapping novel test images to manifold space