TextMap: An Intelligent Question-Answering Assistant

1 / 37

Title:

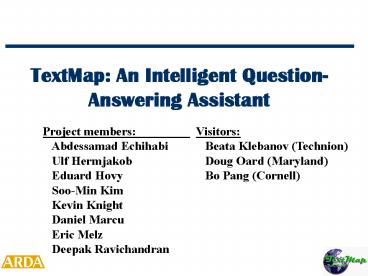

TextMap: An Intelligent QuestionAnswering Assistant

Description:

Presley died PP PP in A_DATE , and SNT . Q: When did Elvis Presley die? S: Presley died of heart disease at Graceland in 1977, and the faithful return by the ... – PowerPoint PPT presentation

Number of Views:88

Avg rating:3.0/5.0

Title: TextMap: An Intelligent QuestionAnswering Assistant

1

TextMap: An Intelligent Question-Answering Assistant

Project members: Abdessamad Echihabi, Ulf Hermjakob, Eduard Hovy, Soo-Min Kim, Kevin Knight, Daniel Marcu, Eric Melz, Deepak Ravichandran

Visitors: Beata Klebanov (Technion), Doug Oard (Maryland), Bo Pang (Cornell)

2

TextMap Dec 2001 (Webclopedia)

3

Lesson 1: IR matters

- Inquery advantages:
  - accuracy
  - speed
  - structured queries (proximity operators and weights)

[System diagram: QA Typology; Structured Query Gen.; Inquery IR]
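A toy scorer can illustrate the "structured queries (proximity operators and weights)" advantage. This Python sketch does not reproduce Inquery's query language or its inference-network scoring. The `Term` and `Window` classes, the additive score, and the weights are all assumptions. They exist only to show why a proximity operator helps: both example passages contain every query word, but only the one in which "cotton gin" appears as a close pair earns the proximity bonus.

```python
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass
class Term:
    word: str
    weight: float


@dataclass
class Window:
    words: Sequence[str]  # all of these words must co-occur...
    size: int             # ...inside some span of at most `size` tokens, in any order
    weight: float


def window_match(tokens: List[str], words: Set[str], size: int) -> bool:
    """True if every word in `words` occurs within some span of at most `size` tokens."""
    for start, tok in enumerate(tokens):
        if tok not in words:
            continue  # a minimal matching span always starts on one of the query words
        if words.issubset(tokens[start:start + size]):
            return True
    return False


def score(passage: str, terms: List[Term], windows: List[Window]) -> float:
    """Additive score: weighted single-term matches plus weighted proximity matches."""
    tokens = passage.lower().split()
    total = sum(t.weight for t in terms if t.word in tokens)
    total += sum(w.weight for w in windows if window_match(tokens, set(w.words), w.size))
    return total


terms = [Term("invented", 1.0), Term("cotton", 1.0), Term("gin", 1.0)]
windows = [Window(("cotton", "gin"), 2, 2.0)]
print(score("eli whitney invented the cotton gin in 1793", terms, windows))  # 5.0
print(score("gin made from cotton was invented", terms, windows))            # 3.0
```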

4

Lesson 2: Source size matters

[System diagram: QA Typology; Web-based Query Gen.; The Web; Inquery IR]

5

Lesson 3: Understanding what we are doing matters

- Question: Who is the leader of France?
  - Henri Hadjenberg, who is the leader of France's Jewish community, endorsed confronting the specter of the Vichy past.
    - 100% word overlap, but the sentence does not contain the answer.
  - Bush later met with French President Jacques Chirac.
    - 0% word overlap, but the sentence contains the correct answer.

One fundamental problem of QA is that of designing similarity metrics and spaces in which the distance between questions and good answers is small.
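A plain bag-of-words overlap score reproduces the failure above. In this Python sketch, the tokenizer (lowercased alphabetic runs) and the metric (the fraction of distinct question words found in the sentence) are illustrative choices, not the system's similarity function. With this metric the wrong sentence scores 1.0 and the right one scores 0.0.

```python
import re


def tokens(text: str) -> set:
    """Lowercased alphabetic tokens; "France's" yields {"france", "s"}."""
    return set(re.findall(r"[a-z]+", text.lower()))


def word_overlap(question: str, sentence: str) -> float:
    """Fraction of distinct question words that also appear in the sentence."""
    q = tokens(question)
    return len(q & tokens(sentence)) / len(q)


question = "Who is the leader of France?"
wrong = ("Henri Hadjenberg, who is the leader of France's Jewish community, "
         "endorsed confronting the specter of the Vichy past.")
right = "Bush later met with French President Jacques Chirac."
print(word_overlap(question, wrong))  # 1.0
print(word_overlap(question, right))  # 0.0
```

Note that "French" and "France" do not match, so the surface metric cannot see the link that makes the second sentence correct.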

6

An alternative view of Web-based search

7

[Diagram: Question (Q); Question paraphrasing; IR; Text]

8

Examples (Hermjakob et al., 2002)

- Who invented the cotton gin?
  - <who> invented the cotton gin
  - <who> was the inventor of the cotton gin
  - <who>'s invention of the cotton gin
  - <who> was the father of the cotton gin
  - <who> received a patent for the cotton gin
- How did Mahatma Gandhi die?
  - Mahatma Gandhi died <how>
  - Mahatma Gandhi drowned
  - Mahatma Gandhi suffocated
  - <who> killed Mahatma Gandhi
  - <who> assassinated Mahatma Gandhi
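A reformulation table of this kind can be approximated as a mapping from question templates to declarative answer templates. In this Python sketch, the regexes and the `REFORMULATIONS` table are hypothetical and were hand-written from the two examples above. They are not the actual paraphrase resource.

```python
import re
from typing import List

# Hypothetical reformulation table: question regex -> declarative templates.
# "{x}" is filled from the question; "<who>" / "<how>" mark the answer slot.
REFORMULATIONS = {
    r"^who invented (?P<x>.+?)\??$": [
        "<who> invented {x}",
        "<who> was the inventor of {x}",
        "<who>'s invention of {x}",
        "<who> was the father of {x}",
        "<who> received a patent for {x}",
    ],
    r"^how did (?P<x>.+?) die\??$": [
        "{x} died <how>",
        "{x} drowned",
        "{x} suffocated",
        "<who> killed {x}",
        "<who> assassinated {x}",
    ],
}


def reformulate(question: str) -> List[str]:
    """Return the declarative reformulations for the first matching question template."""
    for pattern, templates in REFORMULATIONS.items():
        match = re.match(pattern, question.strip(), flags=re.IGNORECASE)
        if match:
            return [t.format(x=match.group("x")) for t in templates]
    return []


for line in reformulate("Who invented the cotton gin?"):
    print(line)
```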

9

Lesson 3: Understanding what we are doing matters

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR]

10

Lesson 4: Understanding what the Russians are doing matters

- Observation: simple, pattern-based QA systems can achieve high levels of performance.
- Problem: they need a large number of patterns per QA type.
- Research direction:
  - Develop techniques for learning QA patterns automatically.
  - Develop techniques for using QA-based patterns in an end-to-end QA system.

Example: When was Mozart born?

- Mozart ( 1756 - 1792 )
- ... born in 1756 , Mozart
- Mozart was born in 1756 ,

CAN QA PATTERNS BE LEARNED AUTOMATICALLY?
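Once patterns exist, using them is mechanical. Put the question term in the <NAME> slot, match the pattern against text, and read the answer off the <ANSWER> slot. This Python sketch uses the three Mozart patterns above. The slot syntax, the four-digit-year constraint, and the majority vote over matches are assumptions made for illustration.

```python
import re
from collections import Counter
from typing import List, Optional

# The three surface patterns from the Mozart example (tokens space-separated).
PATTERNS = [
    "<NAME> ( <ANSWER> -",
    "born in <ANSWER> , <NAME>",
    "<NAME> was born in <ANSWER> ,",
]


def compile_pattern(pattern: str, name: str) -> "re.Pattern":
    """Escape the literal text and turn the <NAME>/<ANSWER> slots into regex pieces."""
    pieces = []
    for part in re.split(r"(<NAME>|<ANSWER>)", pattern):
        if part == "<NAME>":
            pieces.append(re.escape(name))
        elif part == "<ANSWER>":
            pieces.append(r"(\d{4})")  # assumption: a birth year is a 4-digit number
        else:
            pieces.append(re.escape(part))
    return re.compile("".join(pieces))


def birth_year(name: str, text: str) -> Optional[str]:
    """Apply every pattern and return the most frequently matched year, if any."""
    found: List[str] = []
    for pattern in PATTERNS:
        found += [m.group(1) for m in compile_pattern(pattern, name).finditer(text)]
    return Counter(found).most_common(1)[0][0] if found else None


text = "Mozart ( 1756 - 1792 ) was a composer . Mozart was born in 1756 , in Salzburg ."
print(birth_year("Mozart", text))  # 1756
```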

11

Learning Answer Patterns (Ravichandran and Hovy, 2002-2003)

- New bootstrapping method
  - Choose target relation
  - Provide seed words to AltaVista: Mozart, 1756
  - Use answer sentences
  - Extract surface patterns
    - Mozart ( 1756 - 1792
    - ... born in 1756 , Mozart
- Advantages
  - Learns patterns automatically with minimal human effort
  - Easy and fast to build and use
  - Approach easily goes multilingual
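The extraction step can be sketched as follows. Replace the seed terms with slots, enumerate every substring that contains both slots, and keep the substrings that recur across answer sentences. In this Python sketch, the example sentences are hard-coded stand-ins for search-engine results. A brute-force substring enumeration stands in for whatever common-substring search the original used. The later step of measuring each pattern's precision is omitted.

```python
from collections import Counter
from typing import Iterable, List, Set


def candidate_patterns(sentence: str, name: str, answer: str, max_len: int = 6) -> Set[str]:
    """All token substrings (up to max_len tokens) containing both anchors, anchors slotted."""
    tokens = sentence.split()
    slotted = ["<NAME>" if t == name else "<ANSWER>" if t == answer else t for t in tokens]
    found = set()
    for i in range(len(slotted)):
        for j in range(i + 1, min(i + max_len, len(slotted)) + 1):
            window = slotted[i:j]
            if "<NAME>" in window and "<ANSWER>" in window:
                found.add(" ".join(window))
    return found


def learn_patterns(sentences: Iterable[str], name: str, answer: str,
                   min_count: int = 2) -> List[str]:
    """Keep substrings that recur in at least `min_count` different answer sentences."""
    counts: Counter = Counter()
    for sentence in sentences:
        counts.update(candidate_patterns(sentence, name, answer))
    return [p for p, c in counts.most_common() if c >= min_count]


# Hypothetical stand-ins for sentences retrieved with the seed pair (Mozart, 1756).
sentences = [
    "the composer Mozart ( 1756 - 1792 ) wrote operas",
    "Mozart ( 1756 - 1792 ) was born in Salzburg",
    "he was born in 1756 , Mozart later said",
    "composer born in 1756 , Mozart was a prodigy",
]
for pattern in learn_patterns(sentences, "Mozart", "1756"):
    print(pattern)
```

Patterns that appear in only one sentence, such as "composer <NAME> ( <ANSWER>", are discarded. This discarding of one-off patterns is where the redundancy of a large text source helps.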

12

Surface Patterns: Disadvantages

- No use of a named-entity tagger or part-of-speech tagger, so they sometimes produce bad matches
- Patterns do not delineate exact answers
  - Question: Who are the Aborigines?
  - Pattern: <Question_Term>, an <Answer>
  - Answer: ...time helping Aborigines, an ancient tribe whose plight has been similar to American Indians.
- Patterns still require semantic QA classification
  - When was A born? -> BIRTHDATE
  - When did A become the President of B? -> DATE-OF-OFFICE
  - When did C achieve statehood? -> DATE-OF-CHANGE
- Not real regular expressions
  - Cannot handle near misses in patterns, since they are templates
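Two of these limitations are easy to demonstrate. First, a question must be mapped to a semantic class before the right pattern set can be chosen. Second, a pattern like "<Question_Term>, an <Answer>" has nothing that marks where the answer ends. In this Python sketch, the classification rules are hypothetical and mirror only the three example questions.

```python
import re
from typing import Optional

# Hypothetical rules mirroring the slide's examples; more specific rules are tried first.
QA_CLASSES = [
    (re.compile(r"^when did .+ become the president of .+\?$", re.IGNORECASE), "DATE-OF-OFFICE"),
    (re.compile(r"^when did .+ achieve statehood\?$", re.IGNORECASE), "DATE-OF-CHANGE"),
    (re.compile(r"^when was .+ born\?$", re.IGNORECASE), "BIRTHDATE"),
]


def qa_class(question: str) -> str:
    """Map a question to a semantic QA class so the right pattern set can be chosen."""
    for regex, label in QA_CLASSES:
        if regex.match(question.strip()):
            return label
    return "UNKNOWN"


def appositive_answer(term: str, text: str) -> Optional[str]:
    """Apply '<Question_Term>, an <Answer>': nothing in the pattern says where <Answer> ends."""
    match = re.search(re.escape(term) + r", an (.+)", text)
    return match.group(1) if match else None


print(qa_class("When did Hawaii achieve statehood?"))  # DATE-OF-CHANGE
print(appositive_answer(
    "Aborigines",
    "time helping Aborigines, an ancient tribe whose plight has been similar to American Indians.",
))  # ancient tribe whose plight has been similar to American Indians.
```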

13

Learning long dependency/multilevel patterns

- Current work: create a more sophisticated pattern language (word, semantic tag, part of speech, wildcard; toward a regular expression language)
  - Babe Ruth was born in Baltimore, on February 6, 1895.
  - George Herman "Babe" Ruth was born here in 1895.
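A multilevel pattern can be sketched as a sequence of elements. Each element tests one level of a token (its word, part-of-speech tag, or semantic tag), and a wildcard skips a bounded number of tokens. In this Python sketch, the `word:`/`pos:`/`sem:`/`*` notation and the hand-tagged sentences are hypothetical. One such pattern matches both Babe Ruth sentences, which the surface pattern "born in <ANSWER>" would not do for the first one.

```python
from typing import List, NamedTuple, Sequence


class Tok(NamedTuple):
    word: str
    pos: str  # part-of-speech tag
    sem: str  # semantic (named-entity) tag, "O" if none


def elem_matches(elem: str, tok: Tok) -> bool:
    """Pattern elements look like 'word:born', 'pos:IN' or 'sem:DATE'."""
    level, _, value = elem.partition(":")
    if level == "word":
        return tok.word.lower() == value.lower()
    if level == "pos":
        return tok.pos == value
    if level == "sem":
        return tok.sem == value
    raise ValueError(f"unknown pattern element: {elem}")


def match_at(pattern: Sequence[str], toks: List[Tok], i: int, max_gap: int) -> bool:
    """Match `pattern` starting at toks[i]; '*' is a wildcard skipping 0..max_gap tokens."""
    if not pattern:
        return True
    head, rest = pattern[0], pattern[1:]
    if head == "*":
        return any(match_at(rest, toks, i + k, max_gap)
                   for k in range(max_gap + 1) if i + k <= len(toks))
    return i < len(toks) and elem_matches(head, toks[i]) and match_at(rest, toks, i + 1, max_gap)


def search(pattern: Sequence[str], toks: List[Tok], max_gap: int = 3) -> bool:
    return any(match_at(pattern, toks, i, max_gap) for i in range(len(toks)))


# Hand-tagged versions of the two sentences (multi-word names kept as single tokens).
s1 = [Tok("Babe Ruth", "NNP", "PERSON"), Tok("was", "VBD", "O"), Tok("born", "VBN", "O"),
      Tok("in", "IN", "O"), Tok("Baltimore", "NNP", "LOCATION"), Tok(",", ",", "O"),
      Tok("on", "IN", "O"), Tok("February 6, 1895", "NNP", "DATE"), Tok(".", ".", "O")]
s2 = [Tok('George Herman "Babe" Ruth', "NNP", "PERSON"), Tok("was", "VBD", "O"),
      Tok("born", "VBN", "O"), Tok("here", "RB", "O"), Tok("in", "IN", "O"),
      Tok("1895", "CD", "DATE"), Tok(".", ".", "O")]

BIRTH = ["sem:PERSON", "word:was", "word:born", "*", "pos:IN", "sem:DATE"]
print(search(BIRTH, s1), search(BIRTH, s2))  # True True
```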

14

Question and Answer Patterns

- Answer Patterns (7000)
  - <ORGANIZATION_QT> and <ORGANIZATION_AT>
  - <LOCATION_AT> , <LOCATION_QT>
  - <PERSON_QT> _CC _DT <NON_AT>
  - <PERSON_QT> was born (s) in <LOCATION_AT> ,
  - <NON_QT> (g) from <NON_AT>
  - that <NON_QT> is _RB <NON_AT>
  - <LOCATION_AT> ( <LOCATION> _CC <LOCATION_QT>
  - _IN (g) <NON_AT> of <NON_QT>
- Question Patterns (221)
  - What is (g) ?
  - What is (g) of (g) ?
  - Where is (g) ?
  - What does (g) stand for ?
  - What is the population of (g) ?
  - Who was (g) of (g) ?

15

Using Patterns to Acquire Knowledge

- Can apply the pattern method offline, to first build up a large knowledge base:
  - no time limit
  - cross-verify answers from various patterns
  - answer future questions directly and quickly
- Recent work in KB building
  - used 15GB text collection from ISI (Fleischman et al., 2002)
  - built up several thousand categories of instances
  - tested on 50 random TREC "What is X?" questions

Results (chart "Exact matches"): accuracy 48 (exact), 60 (close)
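The offline approach can be sketched as follows. Harvest (term, answer, pattern) triples with no time limit, cross-verify each answer by counting how many distinct patterns support it, and reduce question time to a dictionary lookup. In this Python sketch, the triple format, the pattern names, and the "most distinct patterns wins" rule are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set, Tuple


def build_kb(extractions: Iterable[Tuple[str, str, str]]) -> Dict[str, Tuple[str, int]]:
    """extractions: (question_term, answer, pattern_id) triples harvested offline.

    Cross-verification: keep, for each term, the answer supported by the most distinct patterns.
    """
    support: Dict[str, Dict[str, Set[str]]] = defaultdict(lambda: defaultdict(set))
    for term, answer, pattern_id in extractions:
        support[term][answer].add(pattern_id)
    kb = {}
    for term, answers in support.items():
        best_answer, patterns = max(answers.items(), key=lambda kv: len(kv[1]))
        kb[term] = (best_answer, len(patterns))
    return kb


def lookup(kb: Dict[str, Tuple[str, int]], term: str) -> Optional[str]:
    """At question time, answering a "What is X?" question is a fast dictionary lookup."""
    return kb[term][0] if term in kb else None


# Hypothetical harvested triples (the pattern names are illustrative).
extractions = [
    ("Aaron Copland", "composer", "appositive"),
    ("Aaron Copland", "composer", "copula"),
    ("Aaron Copland", "witness", "appositive"),
    ("Aaron Copland", "composer", "appositive"),  # repeated hit from the same pattern
]
kb = build_kb(extractions)
print(kb["Aaron Copland"])  # ('composer', 2)
```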

16

Maximum Entropy Modeling

- C: set of all possible answer chunks
- Q: given question
- A: set of all potential answer sentences
- F: set of all features

[Pipeline diagram: Q -> IR -> A, Q -> Chunker -> A, Q, C -> Feature functions -> F -> ME Reranker -> Top Answer]
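If the reranker takes the standard conditional maximum-entropy (log-linear) form, the model over the symbols defined above can be written as below. Here $f_m \in F$ are the feature functions and $\lambda_m$ their learned weights. The exact conditioning used by the system is an assumption.

```latex
P(c \mid A, Q) = \frac{\exp\left(\sum_{m=1}^{M} \lambda_m f_m(c, A, Q)\right)}
                      {\sum_{c' \in C} \exp\left(\sum_{m=1}^{M} \lambda_m f_m(c', A, Q)\right)},
\qquad
\hat{c} = \arg\max_{c \in C} P(c \mid A, Q)
```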

17

Feature Functions

- Question Patterns
- Answer Patterns
- Expected Question Class (QTarget)
- Answer Class
- Syntactic Class
- Word Match
- Frequency

[Chart: specific features vs. general features, for the "System in which IR has at least one answer" and for the "Complete System"]
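The reranking step can be sketched in Python. Each candidate chunk gets a weighted sum of its feature values, a softmax turns the sums into probabilities, and the top candidate wins. The feature names are hypothetical encodings of the feature classes listed above. The weights are hand-set rather than trained, and the training procedure is not shown.

```python
import math
from typing import Dict, List, Tuple

Features = Dict[str, float]


def me_rerank(candidates: List[Tuple[str, Features]],
              weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank answer chunks by a softmax over weighted feature sums (log-linear model)."""
    scores = [sum(weights.get(name, 0.0) * value for name, value in feats.items())
              for _, feats in candidates]
    top = max(scores)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scores]
    z = sum(exps)
    ranked = [(chunk, e / z) for (chunk, _), e in zip(candidates, exps)]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical feature values for "When did Elvis Presley die?" and hand-set weights.
weights = {"answer_class_match": 2.0, "word_match": 1.0, "frequency": 0.3}
candidates = [
    ("Graceland", {"answer_class_match": 0.0, "word_match": 0.5, "frequency": 1.0}),
    ("1977", {"answer_class_match": 1.0, "word_match": 0.5, "frequency": 3.0}),
    ("heart disease", {"answer_class_match": 0.0, "word_match": 0.5, "frequency": 1.0}),
]
print(me_rerank(candidates, weights)[0][0])  # 1977
```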

18

Lesson 4: Understanding what the Russians are doing matters

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR; Pattern-based Answer Selection; QA Pattern Database]

19

Lesson 5: Understanding what LCC is doing matters

- Example
  - Q1570: What is the legal age to vote in Argentina?
  - Answer: Voting is mandatory for all Argentines aged over 18.
  - Lexical chains:
    - (1) legal_a1 -> GLOSS -> rule_n1 -> RGLOSS -> mandatory_a1
    - (2) age_n1 -> RGLOSS -> aged_a3
    - (3) Argentine_a1 -> GLOSS -> Argentina_n1

LCC reasoning is a method for establishing word alignments between answer and question terms: QA is like machine translation.
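Finding such a chain amounts to a shortest-path search over a graph whose edges are lexical relations such as GLOSS and RGLOSS. This Python sketch runs breadth-first search over a toy graph that contains only the three example chains. It is not real WordNet data, and node names such as legal_a1 are a hypothetical rendering of the sense-tagged words.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Toy relation graph containing only the three example chains (not real WordNet data).
EDGES: Dict[str, List[Tuple[str, str]]] = {
    "legal_a1": [("GLOSS", "rule_n1")],
    "rule_n1": [("RGLOSS", "mandatory_a1")],
    "age_n1": [("RGLOSS", "aged_a3")],
    "Argentine_a1": [("GLOSS", "Argentina_n1")],
}


def lexical_chain(source: str, target: str, max_edges: int = 4) -> Optional[List[str]]:
    """Breadth-first search for the shortest relation path from source to target."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()  # alternates concept, relation, concept, ...
        node = path[-1]
        if node == target:
            return path
        if len(path) // 2 >= max_edges:  # number of edges already on this path
            continue
        for relation, nxt in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [relation, nxt])
    return None


print(" -> ".join(lexical_chain("legal_a1", "mandatory_a1")))
# legal_a1 -> GLOSS -> rule_n1 -> RGLOSS -> mandatory_a1
```

Each chain found this way acts as an alignment link between a question term and an answer term, much like a word alignment in MT.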

20

Statistical-Based Approach to QA (Echihabi and Marcu, 2003)

- Machine Translation
  - Collect lots of translation pairs (e, f)
  - Find a generative story that explains how e can be rewritten into f
  - Train a model: $P(F \mid E)$
  - Use this model to generate translations $E_i$ for unseen sentences $F_j$: $\hat{E} = \arg\max_i P(E_i \mid F_j)$
- Question Answering
  - Collect lots of (Q, A) pairs
  - Find a generative story that explains how the parse tree of an answer sentence can be rewritten into a question
  - Train a model: $P(Q \mid \mathrm{parsetree}(S_i, A_{i,j}))$
  - Use this model for answer selection and re-ranking in order to find answers to a question: $\hat{A} = \arg\max_{i,j} P(Q \mid \mathrm{parsetree}(S_i, A_{i,j}))$
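Both argmax rules follow the usual noisy-channel reasoning, written out below. The Bayes step is standard. The QA rule matches the one above only under an added assumption: that all candidate (S_i, A_i,j) trees are equally likely a priori.

```latex
\begin{aligned}
\hat{E} &= \arg\max_{E} P(E \mid F)
         = \arg\max_{E} \frac{P(F \mid E)\,P(E)}{P(F)}
         = \arg\max_{E} P(F \mid E)\,P(E) \\
\hat{A} &= \arg\max_{i,j} P\big(\mathrm{parsetree}(S_i, A_{i,j}) \mid Q\big)
         = \arg\max_{i,j} P\big(Q \mid \mathrm{parsetree}(S_i, A_{i,j})\big)
         \quad \text{(uniform prior over candidate trees)}
\end{aligned}
```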

21

Q: When did Elvis Presley die?
S: Presley died of heart disease at Graceland in 1977, and the faithful return by the hundreds each year to mark the anniversary.

[Figure: parse tree of S, including the node NP (PERSON) "Presley", shown alongside the question "When did Elvis Presley die?"]

22

Model training

- The cuts in the answer sentence parse trees are made deterministically.
- The answer selection is also made deterministically.
- The parameters of the steps that map flattened answer parse trees into questions are estimated with an off-the-shelf statistical machine translation package, GIZA.
- The model learns, for example, that
  - $p(\text{When} \mid \text{A\_DATE}) = 0.7$
  - $p(\text{When} \mid \text{NP}) = 0.15$
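GIZA-style aligners estimate a sequence of IBM translation models. This Python sketch shows only the simplest of them: expectation-maximization for IBM Model 1, which uses a NULL source token. It learns t(question word | answer-tree token) from (flattened answer, question) pairs. It is a simplified stand-in, not the GIZA training pipeline, and the three training pairs are invented.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[List[str], List[str]]  # (flattened answer tree tokens, question tokens)


def train_model1(pairs: List[Pair], iterations: int = 10) -> Dict[Tuple[str, str], float]:
    """EM for IBM Model 1: learns t(question_word | answer_token), with a NULL source token."""
    vocab = {q for _, question in pairs for q in question}
    t: Dict[Tuple[str, str], float] = defaultdict(lambda: 1.0 / len(vocab))  # uniform start
    for _ in range(iterations):
        count: Dict[Tuple[str, str], float] = defaultdict(float)
        total: Dict[str, float] = defaultdict(float)
        for answer, question in pairs:  # E-step: expected alignment counts
            source = ["NULL"] + answer
            for q in question:
                norm = sum(t[(q, s)] for s in source)
                for s in source:
                    frac = t[(q, s)] / norm
                    count[(q, s)] += frac
                    total[s] += frac
        # M-step: renormalize the expected counts per source token
        t = defaultdict(float, {(q, s): c / total[s] for (q, s), c in count.items()})
    return t


pairs = [
    ("Presley died in A_DATE".split(), "When did Presley die".split()),
    ("Mozart was born in A_LOC".split(), "Where was Mozart born".split()),
    ("Gandhi died A_DATE".split(), "When did Gandhi die".split()),
]
t = train_model1(pairs)
print(t[("When", "A_DATE")] > t[("When", "in")])  # True
```

Even on three pairs, "When" attaches more strongly to the date slot than to the preposition. This is the kind of association behind the $p(\text{When} \mid \text{A\_DATE})$ value quoted above.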

23

Generating test cases

- We don't know where the answer is, so we generate all possible $(Q, S_i, A_{i,j})$ pairs:
  - Q: When did Elvis Presley die?
  - SA_1: Presley died A_PP PP PP , and SNT .
  - SA_i: Presley died PP PP in A_DATE , and SNT .
  - SA_j: Presley died PP PP PP , and NP return by A_NP NP .
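Selection then scores every generated candidate by how likely it is to "translate" into the question and keeps the best one. This Python sketch uses an IBM-Model-1-style score and omits Model 1's length term. Only the two "When" probabilities come from the "Model training" example. The other table entries and the smoothing floor are invented. Under these assumptions, the candidate with A_DATE wins.

```python
import math
from typing import List

FLOOR = 1e-3  # hypothetical smoothing value for unseen (question word, tree token) pairs

# Toy table t(question_word | answer_tree_token). The two "When" entries are the values
# quoted in the model-training example; the other entries are invented for illustration.
T = {
    ("When", "A_DATE"): 0.7,
    ("When", "NP"): 0.15,
    ("Presley", "Presley"): 0.9,
    ("die", "died"): 0.5,
}


def log_p_question(question: List[str], tree_tokens: List[str]) -> float:
    """IBM-Model-1-style log P(Q | flattened tree), with a NULL token and no length term."""
    source = ["NULL"] + tree_tokens
    return sum(math.log(sum(T.get((q, s), FLOOR) for s in source) / len(source))
               for q in question)


def select_answer(question: List[str], candidates: List[List[str]]) -> List[str]:
    """Noisy-channel answer selection: the candidate most likely to generate Q."""
    return max(candidates, key=lambda tree: log_p_question(question, tree))


Q = "When did Elvis Presley die ?".split()
SA_1 = "Presley died A_PP PP PP , and SNT .".split()
SA_i = "Presley died PP PP in A_DATE , and SNT .".split()
SA_j = "Presley died PP PP PP , and NP return by A_NP NP .".split()
print(" ".join(select_answer(Q, [SA_1, SA_i, SA_j])))  # Presley died PP PP in A_DATE , and SNT .
```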

24

A noisy-channel-based QA system

25

Lesson 5: QA can be thought of as a translation process

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR; Pattern-based Answer Selection; Statistics-based Answer Selection; QA Pattern Database]

26

Lesson 6: Common Sense matters

- Various answer selection systems have complementary strengths/weaknesses.
- Redundancy is good.
- Blatant errors should be avoided:
  - "It" or "They" are not good answers to any question.
  - "Thursday" is not likely to be a good answer for most "When" questions.
- Need a framework to integrate all these heuristics.
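The heuristics above can be sketched in Python. Pool the answers from several selection systems, drop blatant errors, and let redundancy vote. The pronoun and weekday lists generalize the two examples and are assumptions. Simple voting stands in for the ME-based framework the talk goes on to use.

```python
from collections import Counter
from typing import List, Optional

# Hypothetical blacklists generalizing the examples ("It"/"They", "Thursday").
PRONOUNS = {"it", "they", "he", "she", "them", "this", "that"}
WEEKDAYS = {"monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"}


def blatant_error(question: str, answer: str) -> bool:
    a = answer.strip().lower()
    if a in PRONOUNS:
        return True  # a bare pronoun never answers a question
    if question.strip().lower().startswith("when") and a in WEEKDAYS:
        return True  # a weekday alone is rarely what a "When" question wants
    return False


def combine(question: str, system_outputs: List[List[str]]) -> Optional[str]:
    """Pool answers from several selection systems, drop blatant errors, then vote."""
    votes = Counter(a for answers in system_outputs for a in answers
                    if not blatant_error(question, a))
    return votes.most_common(1)[0][0] if votes else None


print(combine("When did Elvis Presley die?",
              [["Thursday", "1977"], ["1977", "It"], ["Graceland"]]))  # 1977
```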

27

Lesson 6: Common Sense matters

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR; Pattern-based Answer Selection; Statistics-based Answer Selection; QA Pattern Database; ME-based ranking]

28

Lesson 6: Common Sense matters (Echihabi et al.)

29

Lesson 7: Engineering matters

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR; Pattern-based Answer Selection; Statistics-based Answer Selection; QA Pattern Database; ME-based ranking]

30

Lesson 8: Users matter

- Users don't want answers from TREC. They want answers. Period.
- Users want
  - Easy-to-use graphical interfaces.
  - Answers provided in context.
  - Fast systems.
  - A continuously updated list with the top-n answers.
  - Much more.

TextMap 1.0 delivered to MITRE in November 2003.

31

Responding to changes in the program

- Answering definition questions

- Answering opinion questions

- Multilingual QA (CLEF-2003)

32

Answering definition questions (Hermjakob)

- Who is Aaron Copland?
  - Good
    - Aaron Copland's death comes a definitive biography of America's most important composer
    - Copland was born in 1900, the son of Russian Jewish immigrants (original name Kaplan)
  - Bad
    - So she took me to meet Aaron Copland, who was then in his early thirties.
    - Each recipient of the honor, known as the Aaron Copland Awards, will get the run of the place with a spouse or partner, but no children or pets are allowed.

Core idea for answering definition questions: boost the scores of definition-like answers.
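"Boost the scores of definition-like answers" can be sketched as a per-sentence bonus for each definition cue word, added on top of the retrieval score. In this Python sketch, the cue set is drawn from the definition-specific terms and head nouns listed under Lesson 9. Treating them as one flat, equally weighted set, the crude plural handling, and the boost value are all assumptions.

```python
import re
from typing import List, Tuple

# Cue words from the Lesson 9 term list and head nouns; flat equal weighting is assumed.
CUES = {"nobel", "oxford", "poem", "knighted", "traveled", "studied", "edited", "painted",
        "poetry", "painter", "war", "symphony", "immigrant", "teacher", "music",
        "composer", "advocate", "son", "born"}


def cue_count(sentence: str) -> int:
    count = 0
    for tok in re.findall(r"[a-z]+", sentence.lower()):
        # crude plural handling: "immigrants" also counts as "immigrant"
        if tok in CUES or (tok.endswith("s") and tok[:-1] in CUES):
            count += 1
    return count


def rerank_definitions(scored: List[Tuple[str, float]], boost: float = 0.5) -> List[str]:
    """Add `boost` per cue word to each sentence's retrieval score, then sort best-first."""
    boosted = [(s, base + boost * cue_count(s)) for s, base in scored]
    boosted.sort(key=lambda pair: pair[1], reverse=True)  # stable: ties keep input order
    return [s for s, _ in boosted]


good_1 = "Aaron Copland's death comes a definitive biography of America's most important composer"
good_2 = "Copland was born in 1900, the son of Russian Jewish immigrants (original name Kaplan)"
bad_1 = "So she took me to meet Aaron Copland, who was then in his early thirties."
bad_2 = ("Each recipient of the honor, known as the Aaron Copland Awards, will get the run of "
         "the place with a spouse or partner, but no children or pets are allowed.")
for s in rerank_definitions([(bad_1, 1.0), (good_1, 1.0), (bad_2, 1.0), (good_2, 1.0)]):
    print(s)
```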

33

Lesson 9: Compiled knowledge matters

- 14,414 biographies from biography.com
  - Find definition-specific terms: Nobel, Oxford, poem, knighted, traveled, studied, edited, painted, poem, Poetry, Painter, War, Symphony, immigrant, teacher, Music
- 966,557 descriptors of people (Fleischman et al., 2003)
  - 10: composer, composer, composer, Aaron Copland
  - 2: witness, witness, witness, Aaron Copland
  - 1: musician, musician, Pulitzer Prize winning musician, Aaron Copland
  - 1: composer, composer, classical composer, Aaron Copland
- WordNet glosses
  - Copland, Aaron Copland: United States composer (1900-1990)
- 110 semantic relation patterns
  - The anchor is a logical subject of verbs like write, compose, teach.
  - The anchor is a logical object of verbs like bear.
  - The anchor is in a subject-copula-object relationship with a head noun like composer, advocate, son.

TREC03: 12.2 answers/question; 1,663 bytes/question; 46.1 accuracy on the NIST metric

34

Answering opinion questions (Hovy, Kim, Ravichandran)

- Initial study: What are opinions? Do people agree? What are the components of an opinion answer?
- Three anchors: Topic, Holder (person, org), Valency (pos, neutral, neg). Need two out of three in proximity.
- Trained opinion recognizer system
  - Got Wall Street Journal corpus from Columbia U (thanks!), separated into opinion/non-opinion text
  - Created several models for combining T, H, V markers
  - Counted frequencies of words in (pos/neg) sentences in various T, H contexts (word spectrum)
- Results promising (TREC 2003).
- BUT choosing all sentences as opinion is pretty good too!
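The "two out of three in proximity" rule can be sketched as a window test over marker positions. In this Python sketch, the topic, holder, and valency lexicons are hypothetical, and so is the window size. The slides do not say how proximity was measured.

```python
from typing import List, Set, Tuple


def opinion_candidate(tokens: List[str], topic: Set[str], holder: Set[str],
                      valence: Set[str], window: int = 10) -> bool:
    """True if markers of at least two of {Topic, Holder, Valency} fall within `window` tokens."""
    hits: List[Tuple[int, str]] = []
    for i, tok in enumerate(tokens):
        word = tok.lower()
        for kind, lexicon in (("T", topic), ("H", holder), ("V", valence)):
            if word in lexicon:
                hits.append((i, kind))
    for start, _ in hits:
        # any qualifying group of hits fits in the window opened at its leftmost hit
        kinds = {kind for pos, kind in hits if start <= pos < start + window}
        if len(kinds) >= 2:
            return True
    return False


topic, holder, valence = {"tax"}, {"greenspan", "analysts"}, {"reckless", "good"}
print(opinion_candidate("Greenspan said the tax cut is reckless".split(),
                        topic, holder, valence))  # True
```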

35

CLEF 2003 (Echihabi, Oard, Marcu, Hermjakob)

- Input: Spanish questions
- Translated with Systran, plus a module for automatically correcting systematic errors
  - cuanto: whichever -> how many
- Answers found using only the TextMap KR Answer Selection module.
- Official CLEF results
  - 77/200 correct answers in top 3
  - 56/200 correct answers in top 1
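A correction module for systematic MT errors can be sketched as rules that fire only when the triggering source word is present. Here, "whichever" is rewritten to "how many" only when the Spanish question contains "cuanto". This Python sketch includes only that one rule. The example question and its mistranslation are invented, and accent folding is an assumption so that "Cuántos" also triggers the rule.

```python
import re
import unicodedata

# (Spanish source cue, systematic mistranslation, correction); only the slide's example.
RULES = [("cuanto", "whichever", "how many")]


def fold(text: str) -> str:
    """Lowercase and strip accents, so "Cuántos" becomes "cuantos"."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def fix_translation(source: str, translation: str) -> str:
    """Rewrite known systematic MT errors, but only when the triggering source word is present."""
    for cue, wrong, right in RULES:
        if cue in fold(source):
            translation = re.sub(
                rf"\b{re.escape(wrong)}\b",
                lambda m, r=right: r.capitalize() if m.group(0)[0].isupper() else r,
                translation,
                flags=re.IGNORECASE,
            )
    return translation


print(fix_translation("¿Cuántos habitantes tiene Madrid?",
                      "Whichever inhabitants does Madrid have?"))
# How many inhabitants does Madrid have?
```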

36

Lesson 10: Making your funders understand how hard everyone in your team worked matters

New components shown in red

[System diagram: QA Typology; Question paraphrasing; Web-based Query Gen.; Reformulation Database; The Web; Inquery IR; Pattern-based Answer Selection; Statistics-based Answer Selection; QA Pattern Database; ME-based ranking]

- Definition questions
- Opinion questions
- Multilingual QA
- Paraphrase learning

37

Conclusions

- We learned a lot.

- We had tremendous fun.

- Thank you!