Learning Combination Features with L1 Regularization - PowerPoint PPT Presentation

1 / 1

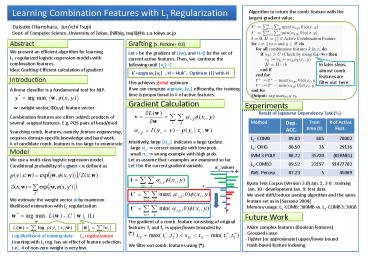

Title: Learning Combination Features with L1 Regularization

1

Learning Combination Features with L1

Regularization

Algorithm to return the comb. feature with the

largest gradient value.

Daisuke Okanohara, Junichi Tsujii Dept. of

Computer Science, University of Tokyo, hillbig,

tsujii_at_is.s.u-tokyo.ac.jp

Abstract

Grafting S. Perkins 03

We present an efficient algorithm for learning

L1-regularized logistic regression models with

combination features. Idea GraftingEfficient

calculation of gradient

Let v be the gradient of L(w), and H be the

set of current active features. Then, we

continue the following until vkltC. This

achieves global optimum. If we can compute

argmaxk vk efficiently, the training time is

proportional to of active features.

In later steps, almost comb. features are filter

out here.

kargmaxkvk , H H?k, Optimize (1) with H

Introduction

A linear classifier is a fundamental tool for

NLP.

Gradient Calculation

Experiments

w weight vector, F(x,y) feature vector

Combination features are often added products

of several original features. E.g. POS pairs of

head/mod

Result of Japanese Dependency Task ()

Searching comb. features, namely feature

engineering, requires domain-specific knowledge

and hard work. of candidate comb. features is

too large to enumerate

Intuitively, large ai,y indicates a large

update. large ai,y ? correct example with low

prob. small ai,y ? wrong example with high

prob. Let us assume that r examples are examined

so far. Let t be the current gradient variable.

The gradient of a comb. feature

consisting of original features f1 and f2 is

upper/lower bounded by () We filter out comb.

features using ().

Model

We use a multi-class logistic regression model.

Conditional probability of y given x is defined

as

ai,y values

Kyoto Text Corpus (Version 3.0) Jan. 1, 3-8

training Jan. 10 development Jan. 9 test

data We used shift/reduce parsing algorithm and

the same feature set as in Sassano 2004 Memory

usage L1-COMB 300MB vs. L2-COMB3 30GB

r

We estimate the weight vector w by maximum

likelihood estimation with L1 regularization

Future Work

- More complex features (Boolean features)

- Grouped Lasso

- Tighter (or approximate) upper/lower bound

- Hash-based feature indexing

Log-likelihood of training data

L1 regularization

Learning with L1 reg. has an effect of feature

selection. i.e., of non-zero weight is very

few.