
1

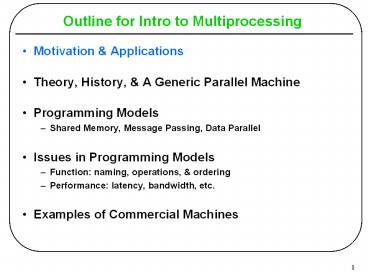

Outline for Intro to Multiprocessing

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Shared Memory, Message Passing, Data Parallel

- Issues in Programming Models

- Function: naming, operations, ordering

- Performance: latency, bandwidth, etc.

- Examples of Commercial Machines

2

Applications: Science and Engineering

- Examples

- Weather prediction

- Evolution of galaxies

- Oil reservoir simulation

- Automobile crash tests

- Drug development

- VLSI CAD

- Nuclear bomb simulation

- Typically model physical systems or phenomena

- Problems are 2D or 3D

- Usually require number crunching

- Involve true parallelism

3

So Why Is MP Research Disappearing?

- Where is MP research presented?

- ISCA: International Symposium on Computer Architecture

- ASPLOS: Architectural Support for Programming Languages and Operating Systems

- HPCA: High Performance Computer Architecture

- SPAA: Symposium on Parallel Algorithms and Architectures

- ICS: International Conference on Supercomputing

- PACT: Parallel Architectures and Compilation Techniques

- Etc.

- Why is the number of MP papers at ISCA falling?

- Read "The Rise and Fall of MP Research"

- Mark D. Hill and Ravi Rajwar

4

Outline

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Issues in Programming Models

- Examples of Commercial Machines

5

In Theory

- Sequential

- Time to sum n numbers? O(n)

- Time to sort n numbers? O(n log n)

- What model? RAM

- Parallel

- Time to sum? Tree for O(log n)

- Time to sort? Non-trivially O(log n)

- What model?

- PRAM (Fortune and Wyllie, STOC '78)

- P processors in lock-step

- One memory (e.g., CREW for concurrent read, exclusive write)
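The O(log n) tree sum can be sketched as follows, assuming an idealized PRAM where every active processor performs one addition per lock-step round. The processors are simulated sequentially here, and `tree_sum` with its round counter is an illustrative name, not something taken from the slides.

```python
def tree_sum(values):
    """Sum values by pairwise tree reduction; return (total, rounds)."""
    if not values:
        return 0, 0
    data = list(values)
    rounds = 0
    while len(data) > 1:
        # One lock-step PRAM round: one processor per pair (k, k+1) adds
        # its two elements. All pairs are independent, so on a PRAM they
        # would execute simultaneously.
        paired = [data[k] + data[k + 1] for k in range(0, len(data) - 1, 2)]
        if len(data) % 2 == 1:
            paired.append(data[-1])  # odd element passes through unchanged
        data = paired
        rounds += 1
    return data[0], rounds


total, rounds = tree_sum(range(1, 17))
print(total, rounds)  # 136 4
```

With n = 16 inputs the active length goes 16, 8, 4, 2, 1, so the sum takes log2(16) = 4 rounds instead of 15 sequential additions.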

6

But in Practice, How Do You

- Name a datum (e.g., all say a[2])?

- Communicate values?

- Coordinate and synchronize?

- Select processing node size (few-bit ALU to a PC)?

- Select number of nodes in system?

7

Historical View

| Join At | Program With |
|---|---|
| I/O (Network) | Message Passing |
| Memory | Shared Memory |
| Processor | Data Parallel, Single-Instruction Multiple-Data (SIMD), (Dataflow/Systolic) |

8

Historical View, cont.

- Machine → Programming Model

- Join at network, so program with message-passing model

- Join at memory, so program with shared-memory model

- Join at processor, so program with SIMD or data-parallel model

- Programming Model → Machine

- Message-passing programs on message-passing machine

- Shared-memory programs on shared-memory machine

- SIMD/data-parallel programs on SIMD/data-parallel machine

- But

- Isn't hardware basically the same? Processors, memory, I/O?

- Convergence! Why not have a generic parallel machine and program with the model that fits the problem?

9

A Generic Parallel Machine

- Separation of programming models from architectures

- All models require communication

- Node with processor(s), memory, communication assist

10

Outline

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Shared Memory

- Message Passing

- Data Parallel

- Issues in Programming Models

- Examples of Commercial Machines

11

Programming Model

- How does the programmer view the system?

- Shared memory: processors execute instructions and communicate by reading/writing a globally shared memory

- Message passing: processors execute instructions and communicate by explicitly sending messages

- Data parallel: processors do the same instructions at the same time, but on different data

12

Today's Parallel Computer Architecture

- Extension of traditional computer architecture to support communication and cooperation

- Communications architecture

Figure (layers, top to bottom): programming models (shared memory, message passing, data parallel, multiprogramming); libraries and compilers (user level); communication abstraction; operating system support (system level); hardware/software boundary; communication hardware; physical communication medium.

13

Simple Problem

- for i = 1 to N

- A[i] = (A[i] + B[i]) * C[i]

- sum = sum + A[i]

- How do I make this parallel?
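For reference, the loop as runnable Python, assuming the operators lost in extraction are `A[i] = (A[i] + B[i]) * C[i]` and `sum = sum + A[i]`, with 0-based indices. `simple_problem` is an illustrative name; the parallel versions on later slides should produce the same `A` and the same sum.

```python
def simple_problem(A, B, C):
    """Sequential baseline: update A in place and return the running sum."""
    total = 0.0
    for i in range(len(A)):
        A[i] = (A[i] + B[i]) * C[i]  # independent across i
        total = total + A[i]         # loop-carried dependence on total
    return total


A = [1.0, 2.0, 3.0, 4.0]
B = [1.0, 1.0, 1.0, 1.0]
C = [2.0, 2.0, 2.0, 2.0]
print(simple_problem(A, B, C), A)  # 28.0 [4.0, 6.0, 8.0, 10.0]
```

The two statements differ in kind: the update of `A[i]` touches only index i, while `total` carries a dependence from one iteration to the next, which is what makes the loop hard to parallelize as written.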

14

Simple Problem

- for i = 1 to N

- A[i] = (A[i] + B[i]) * C[i]

- sum = sum + A[i]

- Split the loops

- Independent iterations

- for i = 1 to N

- A[i] = (A[i] + B[i]) * C[i]

- for i = 1 to N

- sum = sum + A[i]
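Splitting the loops isolates the fully independent update from the reduction. A minimal sketch, assuming `concurrent.futures.ThreadPoolExecutor` stands in for the parallel processors (CPython threads will not speed up this arithmetic; the point is the structure): the first loop becomes a parallel map over blocks of indices, and the second loop stays sequential, as on the slide. `split_loops` and `nworkers` are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_loops(A, B, C, nworkers=4):
    """Loop 1 runs as a parallel map over blocks; loop 2 stays sequential."""
    n = len(A)

    def update(lo, hi):
        # Loop 1: iterations are independent, so each worker owns one block.
        for i in range(lo, hi):
            A[i] = (A[i] + B[i]) * C[i]

    chunk = max(1, (n + nworkers - 1) // nworkers)
    with ThreadPoolExecutor(max_workers=nworkers) as pool:
        futures = [pool.submit(update, lo, min(lo + chunk, n))
                   for lo in range(0, n, chunk)]
        for f in futures:
            f.result()  # wait for every block (re-raises worker errors)

    # Loop 2: the reduction, still sequential as on the slide.
    total = 0.0
    for i in range(n):
        total = total + A[i]
    return total


A = [1.0, 2.0, 3.0, 4.0]
B = [1.0, 1.0, 1.0, 1.0]
C = [2.0, 2.0, 2.0, 2.0]
print(split_loops(A, B, C, nworkers=2))  # 28.0
```

Because no two blocks write the same index, the first loop needs no synchronization beyond waiting for all blocks before the sum starts.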

15

Programming Model

- Historically, programming model = architecture

- Thread(s) of control that operate on data

- Provides a communication abstraction that is a contract between hardware and software (à la ISA)

- Current Programming Models

- Shared Memory

- Message Passing

- Data Parallel (Shared Address Space)

16

Global Shared Physical Address Space

- Communication, sharing, and synchronization with loads/stores on shared variables

- Must map virtual pages to physical page frames

- Consider OS support for good mapping

Figure: the machine physical address space has a shared portion (common physical addresses that any of P0 ... Pn can load from and store to) and a private portion for each processor (P0 private ... Pn private).

17

Page Mapping in Shared Memory MP

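Figure: four nodes joined by an interconnect, each holding a contiguous block of physical addresses: Node 0 [0, N-1], Node 1 [N, 2N-1], Node 2 [2N, 3N-1], Node 3 [3N, 4N-1].

Keep private data and frequently used shared data on same node as computation

Load by Processor 0 to address N+3 goes to Node 1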
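The home-node lookup implied by this mapping is a single division. A minimal sketch, assuming each node holds a contiguous block of N addresses starting at node × N, as in the figure; `home_node`, `is_local`, and the value `N = 1024` are hypothetical choices for illustration.

```python
N = 1024  # hypothetical number of physical addresses per node


def home_node(addr, n_per_node=N):
    """Return the node whose memory holds physical address addr."""
    return addr // n_per_node


def is_local(proc_node, addr, n_per_node=N):
    """True if a load issued on proc_node to addr is serviced locally."""
    return home_node(addr, n_per_node) == proc_node


# Processor 0 loading address N+3 must go over the interconnect to Node 1.
print(home_node(N + 3), is_local(0, N + 3))  # 1 False
```

This is why the OS page-mapping policy matters: placing a page's frame on the node that uses it turns remote accesses into local ones.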

18

Return of The Simple Problem

- private int i, my_start, my_end, mynode

- shared float A[N], B[N], C[N], sum

- for i = my_start to my_end

- A[i] = (A[i] + B[i]) * C[i]

- GLOBAL_SYNCH

- if (mynode == 0)

- for i = 1 to N

- sum = sum + A[i]

- Can run this on any shared memory machine
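One way to run the shared-memory version, assuming Python threads stand in for processors, ordinary lists play the role of the shared arrays, and `threading.Barrier` implements `GLOBAL_SYNCH`. The block partitioning of indices into `my_start`/`my_end` is an assumption; the slide does not say how the range is divided.

```python
import threading


def shared_memory_version(A, B, C, nprocs=4):
    N = len(A)
    result = {}                            # shared: node 0 writes the sum here
    barrier = threading.Barrier(nprocs)    # plays the role of GLOBAL_SYNCH

    def worker(mynode):
        # Private variables: each thread computes its own block of indices.
        my_start = mynode * N // nprocs
        my_end = (mynode + 1) * N // nprocs
        for i in range(my_start, my_end):
            A[i] = (A[i] + B[i]) * C[i]    # stores to the shared array
        barrier.wait()                     # all updates finish before summing
        if mynode == 0:
            total = 0.0
            for i in range(N):
                total = total + A[i]       # loads see other threads' stores
            result["sum"] = total

    threads = [threading.Thread(target=worker, args=(p,)) for p in range(nprocs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result["sum"]


A = [1.0, 2.0, 3.0, 4.0, 5.0]
B = [1.0] * 5
C = [2.0] * 5
print(shared_memory_version(A, B, C, nprocs=3))  # 40.0
```

Communication here is implicit: node 0 never receives anything explicitly, it simply loads values that other threads stored, and the barrier is the only synchronization needed.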

19

Message Passing Architectures

Figure: four nodes (Node 0 to Node 3) joined by an interconnect; each node has its own private address range [0, N-1].

- Cannot directly access memory on another node

- IBM SP-2, Intel Paragon

- Cluster of workstations

20

Message Passing Programming Model

- User-level send/receive abstraction

- local buffer (x,y), process (P,Q), and tag (t)

- naming and synchronization
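A toy version of the user-level send/receive abstraction, assuming one mailbox per process and matching on (source process, tag), as the slide's naming of a process and a tag suggests. `Mailbox`, `send`, and `recv` are hypothetical names; real libraries such as MPI add buffering modes, wildcards, and communicators. A `recv` blocks until a matching message arrives, so the pair provides naming (which process, which message) and synchronization (the receiver waits).

```python
import threading


class Mailbox:
    """Per-process message store; take() blocks until (src, tag) matches."""

    def __init__(self):
        self._msgs = []                      # list of (src, tag, payload)
        self._cond = threading.Condition()

    def deliver(self, src, tag, payload):
        with self._cond:
            self._msgs.append((src, tag, payload))
            self._cond.notify_all()          # wake any waiting receiver

    def take(self, src, tag):
        with self._cond:
            while True:
                for k, (s, t, payload) in enumerate(self._msgs):
                    if s == src and t == tag:
                        del self._msgs[k]    # oldest matching message first
                        return payload
                self._cond.wait()            # no match yet: block


def send(mailboxes, me, dest, tag, data):
    # Copy the local buffer x so later writes by the sender are not seen.
    mailboxes[dest].deliver(me, tag, list(data))


def recv(mailboxes, me, src, tag):
    # Returns the data that the caller stores into its local buffer y.
    return mailboxes[me].take(src, tag)


# Process P (id 0) sends buffer x to process Q (id 1) with tag t = 7.
boxes = [Mailbox(), Mailbox()]
x = [1, 2, 3]
sender = threading.Thread(target=send, args=(boxes, 0, 1, 7, x))
sender.start()
y = recv(boxes, 1, 0, 7)   # Q blocks until the matching message arrives
sender.join()
print(y)  # [1, 2, 3]
```

Copying at send time models the fact that the two processes have separate address spaces: after the send, Q holds its own copy in y.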

21

The Simple Problem Again

- int i, my_start, my_end, mynode

- float A[N/P], B[N/P], C[N/P], sum

- for i = 1 to N/P

- A[i] = (A[i] + B[i]) * C[i]

- sum = sum + A[i]

- if (mynode != 0)

- send(sum, 0)

- if (mynode == 0)

- for i = 1 to P-1

- recv(tmp, i)

- sum = sum + tmp

- Send/Recv communicates and synchronizes

- P processors
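A sketch of the message-passing version, assuming threads stand in for the P processes and one `queue.Queue` per sender serves as its channel to node 0, so `to_node0[i].get()` behaves like `recv(tmp, i)`. Each process works only on its own N/P-element slice (private memory); the only communication is the exchange of partial sums. The helper names, and the choice to hand each process a slice of the caller's lists, are illustrative.

```python
import queue
import threading


def message_passing_version(A, B, C, P=4):
    N = len(A)
    assert N % P == 0, "sketch assumes P divides N"
    chunk = N // P
    # One channel per sender into node 0; to_node0[i].get() is recv(tmp, i).
    to_node0 = [queue.Queue() for _ in range(P)]
    result = {}

    def process(mynode):
        lo = mynode * chunk
        # Private copies: a process never touches another process's data.
        a, b, c = A[lo:lo + chunk], B[lo:lo + chunk], C[lo:lo + chunk]
        total = 0.0
        for i in range(chunk):
            a[i] = (a[i] + b[i]) * c[i]
            total = total + a[i]
        if mynode != 0:
            to_node0[mynode].put(total)      # send(sum, 0)
        else:
            for i in range(1, P):
                tmp = to_node0[i].get()      # recv(tmp, i): blocks until sent
                total = total + tmp
            result["sum"] = total

    threads = [threading.Thread(target=process, args=(p,)) for p in range(P)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return result["sum"]


A = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
print(message_passing_version(A, [1.0] * 8, [2.0] * 8, P=4))  # 88.0
```

Unlike the shared-memory version, no barrier is needed: the blocking receive both delivers each partial sum and makes node 0 wait for it.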

22

Separation of Architecture from Model

- At the lowest level, shared memory sends messages

- HW is specialized to expedite read/write messages

- What programming model / abstraction is supported at user level?

- Can I have shared-memory abstraction on message-passing HW?

- Can I have message-passing abstraction on shared-memory HW?

- Some 1990s research machines integrated both

- MIT Alewife, Wisconsin Tempest/Typhoon, Stanford FLASH

23

Data Parallel

- Programming Model

- Operations are performed on each element of a large (regular) data structure in a single step

- Arithmetic, global data transfer

- Processor is logically associated with each data element

- SIMD architecture: single instruction, multiple data

- Early architectures mirrored programming model

- Many bit-serial processors

- Today we have FP units and caches on microprocessors

- Can support data parallel model on shared memory or message passing architecture

24

The Simple Problem Strikes Back

- Assuming we have N processors

- A = (A + B) * C

- sum = global_sum(A)

- Language supports array assignment

- Special HW support for global operations

- CM-2 bit-serial

- CM-5 32-bit SPARC processors

- Message Passing and Data Parallel models

- Special control network
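A plain-Python sketch of the data-parallel version, assuming an `elementwise` helper stands in for the language's array assignment (logically, each index is updated by its own processor in one step) and a hypothetical `global_sum` stands in for the hardware-supported global operation. On a machine like the CM-5 the slide notes special hardware support for global operations; here it is just the built-in `sum`.

```python
def elementwise(op, *arrays):
    """Apply op to corresponding elements: one logical processor per index."""
    return [op(*xs) for xs in zip(*arrays)]


def global_sum(arr):
    """Hypothetical global reduction; real data-parallel HW would combine
    partial results in a network, here it is Python's built-in sum."""
    return sum(arr)


A = [1.0, 2.0, 3.0, 4.0]
B = [1.0, 1.0, 1.0, 1.0]
C = [2.0, 2.0, 2.0, 2.0]
A = elementwise(lambda a, b, c: (a + b) * c, A, B, C)   # A = (A + B) * C
total = global_sum(A)                                   # sum = global_sum(A)
print(A, total)  # [4.0, 6.0, 8.0, 10.0] 28.0
```

The program has no explicit loop, thread, or message: the parallelism is implied entirely by whole-array operations.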

25

Aside - Single Program, Multiple Data

- SPMD Programming Model

- Each processor executes the same program on different data

- Many SPLASH-2 benchmarks are in SPMD model

- for each molecule at this processor

- simulate interactions with myproc+1 and myproc-1

- Not connected to SIMD architectures

- Not lockstep instructions

- Could execute different instructions on different processors

- Data dependent branches cause divergent paths
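A sketch of the SPMD style, assuming P threads each run the same `spmd_program` function and differ only in `myproc`. The molecule simulation is replaced by a hypothetical comparison with the neighbors `myproc-1` and `myproc+1` on a ring (indices taken modulo P), and a data-dependent branch shows how processors running identical code can take different paths, unlike lock-step SIMD.

```python
import threading


def spmd_program(myproc, P, values, out):
    """Same program on every processor; only myproc (and its data) differs."""
    left = values[(myproc - 1) % P]    # neighbor myproc-1 on a ring
    right = values[(myproc + 1) % P]   # neighbor myproc+1 on a ring
    avg = (left + right) / 2
    # Data-dependent branch: different processors may take different paths.
    if values[myproc] > avg:
        out[myproc] = "above neighbors"
    else:
        out[myproc] = "not above neighbors"


def run_spmd(values):
    P = len(values)
    out = [None] * P
    threads = [threading.Thread(target=spmd_program, args=(p, P, values, out))
               for p in range(P)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return out


print(run_spmd([1.0, 5.0, 2.0, 4.0]))
# ['not above neighbors', 'above neighbors', 'not above neighbors', 'above neighbors']
```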

26

Review: Separation of Model and Architecture

- Shared Memory

- Single shared address space

- Communicate, synchronize using load / store

- Can support message passing

- Message Passing

- Send / Receive

- Communication + synchronization

- Can support shared memory

- Data Parallel

- Lock-step execution on regular data structures

- Often requires global operations (sum, max, min...)

- Can support on either shared memory or message passing

27

Review: A Generic Parallel Machine

- Separation of programming models from architectures

- All models require communication

- Node with processor(s), memory, communication assist

28

Outline

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Issues in Programming Models

- Function: naming, operations, ordering

- Performance: latency, bandwidth, etc.

- Examples of Commercial Machines

29

Programming Model Design Issues

- Naming: How is communicated data and/or partner node referenced?

- Operations: What operations are allowed on named data?

- Ordering: How can producers and consumers of data coordinate their activities?

- Performance

- Latency: How long does it take to communicate in a protected fashion?

- Bandwidth: How much data can be communicated per second? How many operations per second?

30

Issue: Naming

- Single Global Linear-Address-Space (shared memory)

- Multiple Local Address/Name Spaces (message passing)

- Naming strategy affects

- Programmer / Software

- Performance

- Design complexity

31

Issue: Operations

- Uniprocessor RISC

- Ld/St and atomic operations on memory

- Arithmetic on registers

- Shared Memory Multiprocessor

- Ld/St and atomic operations on local/global memory

- Arithmetic on registers

- Message Passing Multiprocessor

- Send/receive on local memory

- Broadcast

- Data Parallel

- Ld/St

- Global operations (add, max, etc.)

32

Issue: Ordering

- Uniprocessor

- Programmer sees order as program order

- Out-of-order execution

- Write buffers

- Important to maintain dependencies

- Multiprocessor

- What is the order among several threads accessing shared data?

- What effect does this have on performance?

- What if implicit order is insufficient?

- Memory consistency model specifies rules for ordering
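To see what a consistency model rules out, here is a small sketch (not from the slides) that enumerates every sequentially consistent interleaving of the classic store-buffering test: thread 0 runs `x = 1; r1 = y`, thread 1 runs `y = 1; r2 = x`, with x and y initially 0. Under sequential consistency the outcome r1 == r2 == 0 never appears; relaxed models such as x86-TSO, which let a load bypass an earlier buffered store, do permit it unless a fence is inserted. The op encoding and the function name `sc_outcomes` are illustrative.

```python
from itertools import combinations


def sc_outcomes(thread0, thread1):
    """Return every (r1, r2) result reachable under sequential consistency.

    Ops are ("st", var, value) or ("ld", var, reg). Each interleaving keeps
    the program order of both threads; memory starts at 0 everywhere.
    """
    n0, n1 = len(thread0), len(thread1)
    outcomes = set()
    # Each choice of positions for thread 0's ops is one interleaving.
    for slots in combinations(range(n0 + n1), n0):
        mem, regs = {}, {}
        i0 = i1 = 0
        for pos in range(n0 + n1):
            if pos in slots:
                kind, var, arg = thread0[i0]
                i0 += 1
            else:
                kind, var, arg = thread1[i1]
                i1 += 1
            if kind == "st":
                mem[var] = arg
            else:
                regs[arg] = mem.get(var, 0)
        outcomes.add((regs["r1"], regs["r2"]))
    return outcomes


T0 = [("st", "x", 1), ("ld", "y", "r1")]   # x = 1; r1 = y
T1 = [("st", "y", 1), ("ld", "x", "r2")]   # y = 1; r2 = x
print(sorted(sc_outcomes(T0, T1)))  # [(0, 1), (1, 0), (1, 1)]
```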

33

Issue: Order/Synchronization

- Coordination mainly takes three forms

- Mutual exclusion (e.g., spin-locks)

- Event notification

- Point-to-point (e.g., producer-consumer)

- Global (e.g., end of phase indication, all or subset of processes)

- Global operations (e.g., sum)

- Issues

- Synchronization name space (entire address space or portion)

- Granularity (per byte, per word, ... → overhead)

- Low latency, low serialization (hot spots)

- Variety of approaches

- Test&set, compare&swap, LoadLocked-StoreConditional

- Full/Empty bits and traps

- Queue-based locks, fetch&op with combining

- Scans
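A sketch of mutual exclusion with a test-and-test-and-set spin lock, one common refinement of plain test&set. Python exposes no hardware test&set, so this assumes a hypothetical `AtomicFlag` whose `test_and_set` is made atomic by an internal `threading.Lock`, standing in for the instruction. The read-only inner spin is the usual way to avoid hammering the interconnect (a hot spot) on cache-coherent machines; the `time.sleep(0)` is a crude backoff that also lets other Python threads run.

```python
import threading
import time


class AtomicFlag:
    """Emulates a hardware test&set bit; the internal lock supplies atomicity."""

    def __init__(self):
        self._value = 0
        self._guard = threading.Lock()

    def test_and_set(self):
        with self._guard:              # atomically read the old value, set to 1
            old = self._value
            self._value = 1
            return old

    def load(self):
        return self._value

    def clear(self):
        self._value = 0                # release is a plain store


class SpinLock:
    def __init__(self):
        self._flag = AtomicFlag()

    def acquire(self):
        while True:
            while self._flag.load():   # test: spin on plain reads
                time.sleep(0)          # crude backoff / yield
            if self._flag.test_and_set() == 0:   # test&set: try to grab it
                return

    def release(self):
        self._flag.clear()


counter = 0
lock = SpinLock()


def work(n):
    global counter
    for _ in range(n):
        lock.acquire()
        counter += 1                   # critical section
        lock.release()


threads = [threading.Thread(target=work, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 4000
```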

34

Performance Issue: Latency

- Must deal with latency when using fast processors

- Options

- Reduce frequency of long latency events

- Algorithmic changes, computation and data distribution

- Reduce latency

- Cache shared data, network interface design, network design

- Tolerate latency

- Message passing overlaps computation with communication (program controlled)

- Shared memory overlaps access completion and computation using consistency model and prefetching

35

Performance Issue: Bandwidth

- Private and global bandwidth requirements

- Private bandwidth requirements can be supported by

- Distributing main memory among PEs

- Application changes, local caches, memory system design

- Global bandwidth requirements can be supported by

- Scalable interconnection network technology

- Distributed main memory and caches

- Efficient network interfaces

- Avoiding contention (hot spots) through application changes

36

Cost of Communication

- $\text{Cost} = \text{Frequency} \times \left(\text{Overhead} + \text{Latency} + \frac{\text{Xfer size}}{\text{BW}} - \text{Overlap}\right)$

- Frequency = number of communications per unit work

- Algorithm, placement, replication, bulk data transfer

- Overhead = processor cycles spent initiating or handling

- Protection checks, status, buffer mgmt, copies, events

- Latency = time to move bits from source to dest

- Communication assist, topology, routing, congestion

- Transfer time = time through bottleneck

- Comm assist, links, congestion

- Overlap = portion overlapped with useful work

- Comm assist, comm operations, processor design
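Plugging numbers into the cost formula on this slide makes the trade-offs concrete. The function below is a direct transcription of that formula; every parameter value in the example is made up for illustration (times in cycles, bandwidth in bytes per cycle, frequency as the number of messages for a fixed piece of work).

```python
def comm_cost(frequency, overhead, latency, xfer_size, bandwidth, overlap):
    """Cost = Frequency x (Overhead + Latency + Xfer size / BW - Overlap)."""
    transfer_time = xfer_size / bandwidth      # time through the bottleneck
    return frequency * (overhead + latency + transfer_time - overlap)


# Hypothetical parameters. Moving 64,000 bytes as 1000 64-byte messages...
small = comm_cost(frequency=1000, overhead=200, latency=500,
                  xfer_size=64, bandwidth=4, overlap=0)
# ...versus as 125 bulk 512-byte messages (same total data).
bulk = comm_cost(frequency=125, overhead=200, latency=500,
                 xfer_size=512, bandwidth=4, overlap=0)
print(small, bulk)  # 716000.0 103500.0
```

With these made-up numbers the fixed per-message overhead and latency dominate, so bulk data transfer (lower frequency) cuts the cost by roughly 7x even though the bytes moved are identical.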

37

Outline

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Issues in Programming Models

- Examples of Commercial Machines

38

Example: Intel Pentium Pro Quad

- All coherence and multiprocessing glue in processor module

- Highly integrated, targeted at high volume

- Low latency and bandwidth

39

Example: SUN Enterprise (pre-E10K)

- 16 cards of either type: processors + memory, or I/O

- All memory accessed over bus, so symmetric

- Higher bandwidth, higher latency bus

40

Example: Cray T3E

- Scale up to 1024 processors, 480 MB/s links

- Memory controller generates comm. request for non-local references

- No hardware mechanism for coherence (SGI Origin etc. provide this)

41

Example: Intel Paragon

Figure: Intel Paragon node with two i860 processors, each with an L1 cache, on a memory bus (64-bit, 50 MHz) shared with the memory controller, 4-way interleaved DRAM, DMA, driver, and network interface (NI). Sandia's Intel Paragon XP/S-based supercomputer uses a 2D grid network (links 8 bits wide, 175 MHz, bidirectional) with a processing node attached to every switch.

42

Example: IBM SP-2

- Made out of essentially complete RS6000 workstations

- Network interface integrated in I/O bus (bandwidth limited by I/O bus)

43

Summary

- Motivation: Applications

- Theory, History, A Generic Parallel Machine

- Programming Models

- Issues in Programming Models

- Examples of Commercial Machines