205CSC316 High Performance Computing - PowerPoint PPT Presentation

1

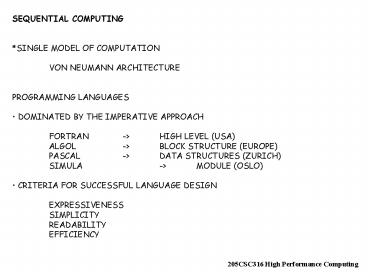

SEQUENTIAL COMPUTING

Single model of computation: the von Neumann architecture.

Programming languages dominated by the imperative approach:
- FORTRAN -> high level (USA)
- ALGOL -> block structure (Europe)
- PASCAL -> data structures (Zurich)
- SIMULA -> module (Oslo)

Criteria for successful language design: expressiveness, simplicity, readability, efficiency.

2

PARALLEL COMPUTING

No widely agreed model. More components to consider:
- architecture
- software
- algorithms

Why bother with parallelism?
- technology is available
- commercially viable
- user benefits

3

ISSUES FOR HPC SYSTEMS

1) Architecture: how to organise and implement multiple processors.
2) Software: programming approach, correctness, implementation tools.
3) Algorithms: rethink in parallel -> major performance improvements.

4

ARCHITECTURE: ARRAY PROCESSORS

A processor equivalent to the one used in a sequential computer is duplicated a number of times and forced to act in unison. Each processor is given its own local memory. The control unit broadcasts an instruction, which any combination of the processors may execute in lockstep (synchronised mode).

Single control unit -> less flexibility.
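
As an illustration of the lockstep model, the Python sketch below simulates an array processor. A single control unit broadcasts each instruction, and every enabled processing element applies it to its own local memory in the same step. The names `ProcessingElement`, `ArrayProcessor` and `broadcast`, and the use of a boolean mask to select "any combination of the processors", are hypothetical choices made for the sketch. They are not taken from a real machine. The host loop runs sequentially, so the code models lockstep execution rather than achieving it.

```python
from typing import Callable, List


class ProcessingElement:
    """One processor with its own local memory (a small dict of named registers)."""

    def __init__(self, pe_id: int, value: float) -> None:
        self.pe_id = pe_id
        self.local = {"x": value, "acc": 0.0}


class ArrayProcessor:
    """A single control unit driving many processing elements in lockstep."""

    def __init__(self, values: List[float]) -> None:
        self.pes = [ProcessingElement(i, v) for i, v in enumerate(values)]

    def broadcast(self, instruction: Callable[[dict], None], mask: List[bool]) -> None:
        # Every enabled PE executes the same instruction in the same step;
        # disabled PEs simply idle for this step.
        for pe, enabled in zip(self.pes, mask):
            if enabled:
                instruction(pe.local)

    def gather(self, name: str) -> List[float]:
        # Collect one named register from every PE's local memory.
        return [pe.local[name] for pe in self.pes]


def square_into_acc(mem: dict) -> None:
    mem["acc"] = mem["x"] * mem["x"]


def add_one_to_acc(mem: dict) -> None:
    mem["acc"] += 1


machine = ArrayProcessor([1.0, 2.0, 3.0, 4.0])
machine.broadcast(square_into_acc, mask=[True] * 4)
machine.broadcast(add_one_to_acc, mask=[True, False, True, False])
print(machine.gather("acc"))  # [2.0, 4.0, 10.0, 16.0]
```

There is only one instruction stream. A data-dependent branch therefore has to be expressed by masking some processors off while the others execute, and that is where the loss of flexibility comes from.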

5

ARCHITECTURE: VECTOR PROCESSORS

Once the operands are represented in their binary equivalents, an arithmetic operation is divided into 4 distinct stages. Each stage can be operating on a different pair of operands at the same time: this is pipelining. Each individual operation still passes through all 4 stages. Once the pipeline is full, however, one result is completed every stage time, so the number of operations completed per second is greatly increased. This principle of pipelining can be applied to all operations in the computer, with a separate unit introduced to handle each operation. In addition, several of the units can be operating in parallel.
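
The throughput gain can be made concrete by counting clock cycles. This sketch assumes that each of the 4 stages takes one cycle and that there are no stalls, both of which are simplifications. Under those assumptions, n operations take 4n cycles without pipelining but only 4 + n - 1 cycles with it, because a new operand pair enters the pipeline every cycle. The stage names are illustrative labels for a floating-point add. They are an assumption and do not come from the slide.

```python
from typing import List, Optional, Tuple

# Hypothetical 4-stage floating-point add pipeline (stage names are illustrative).
STAGES = ["compare exponents", "align mantissas", "add mantissas", "normalise"]


def pipeline_schedule(n_ops: int, n_stages: int = len(STAGES)) -> List[List[Optional[int]]]:
    """For each clock cycle, list which operation index occupies each stage (None = empty).

    Operation i enters stage s at cycle i + s, so the last operation leaves
    the pipeline after n_stages + n_ops - 1 cycles.
    """
    if n_ops <= 0:
        return []
    table = []
    for cycle in range(n_stages + n_ops - 1):
        row = []
        for stage in range(n_stages):
            op = cycle - stage
            row.append(op if 0 <= op < n_ops else None)
        table.append(row)
    return table


def cycles(n_ops: int, n_stages: int = len(STAGES)) -> Tuple[int, int]:
    """Return (unpipelined cycles, pipelined cycles) for n_ops operations."""
    unpipelined = n_stages * n_ops
    pipelined = n_stages + n_ops - 1 if n_ops > 0 else 0
    return unpipelined, pipelined


for cycle, row in enumerate(pipeline_schedule(5)):
    print(cycle, ["-" if op is None else f"op{op}" for op in row])

print(cycles(5))     # (20, 8)
print(cycles(1000))  # (4000, 1003): close to one result per cycle
```

For long vectors the pipelined time approaches one result per cycle. This is why vector processors favour long, regular loops.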

6

ARCHITECTURE: SHARED MEMORY PROCESSORS

Several processors, each with its own instruction set and capable of independent action, share a common memory. Different parts of an application can be assigned to different processors, with the result that the execution time of the complete program is reduced. Performance is affected by:
- some processors idling while waiting for others
- the requirements for a parallel algorithm
- contention for resources

Shared memory -> memory bandwidth, memory contention.
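
Here is a minimal shared-memory sketch in Python. Several threads work on different slices of one list held in common memory, and each accumulates its partial result into a shared total. The lock protecting the total is where contention for a shared resource appears. The final joins are where the program has to wait for the slowest thread. One assumption about the runtime matters here: in CPython the global interpreter lock stops these threads from doing arithmetic truly in parallel, so the sketch shows the programming model rather than the speed-up.

```python
import threading
from typing import List


def parallel_sum(data: List[int], n_workers: int) -> int:
    """Sum `data` with n_workers threads, each taking one contiguous slice of the shared list."""
    if n_workers < 1:
        raise ValueError("need at least one worker")
    total = 0                # shared result in common memory
    lock = threading.Lock()  # protects `total`; threads contend for it

    def worker(start: int, stop: int) -> None:
        nonlocal total
        # Reading the shared input needs no lock because no thread writes to it.
        partial = sum(data[start:stop])
        # Updating the shared result does: only one thread may hold the lock at a time.
        with lock:
            total += partial

    chunk = (len(data) + n_workers - 1) // n_workers
    threads = [
        threading.Thread(target=worker, args=(i * chunk, min((i + 1) * chunk, len(data))))
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    # The result is not ready until the slowest thread finishes.
    for t in threads:
        t.join()
    return total


print(parallel_sum(list(range(1, 101)), n_workers=4))  # 5050
```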

7

ARCHITECTURE: DISTRIBUTED MEMORY PROCESSORS

Processors have their own local memory and are connected through an interconnection network or switch. When two processors wish to communicate, they send messages to each other.

Distributed systems -> interconnection topology, communications overhead.
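
By contrast, a distributed-memory program has no shared variables. Each processor owns its local data, and results can only be combined by sending messages. The sketch below simulates processors connected in a simple line (chain) topology, which is an assumption chosen for illustration. It reduces their local values to a global sum by passing partial sums from neighbour to neighbour, and it counts the messages sent, which is the communications overhead mentioned above. The `Network` class and its `send`/`receive` methods are hypothetical stand-ins for a real message-passing library. The loop also runs the processors one after another, whereas on a real machine each would run concurrently and block in `receive`.

```python
from collections import deque
from typing import Deque, List, Tuple


class Network:
    """Hypothetical interconnect: the only way processors can exchange data."""

    def __init__(self, n_procs: int) -> None:
        self.inboxes: List[Deque[int]] = [deque() for _ in range(n_procs)]
        self.messages_sent = 0  # communications overhead

    def send(self, dest: int, message: int) -> None:
        self.inboxes[dest].append(message)
        self.messages_sent += 1

    def receive(self, rank: int) -> int:
        return self.inboxes[rank].popleft()


def chain_sum(local_values: List[int]) -> Tuple[int, int]:
    """Global sum of one private value per processor, processors linked in a line.

    Processor r only ever touches local_values[r] and its own inbox.
    Returns (total held by the last processor, number of messages sent).
    """
    net = Network(len(local_values))
    partial = 0
    for rank in range(len(local_values)):
        partial = local_values[rank]
        if rank > 0:
            partial += net.receive(rank)    # wait for the left neighbour's partial sum
        if rank < len(local_values) - 1:
            net.send(rank + 1, partial)     # forward to the right neighbour
    return partial, net.messages_sent


print(chain_sum([3, 1, 4, 1, 5]))  # (14, 4)
```

Combining p values this way costs p - 1 messages and p - 1 sequential hops. A tree-shaped topology would shorten the chain of hops, which shows why the choice of interconnection topology matters.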

8

ARCHITECTURE: DATA FLOW PROCESSORS

In data flow processors (as opposed to control flow), the data determines the order in which the instructions are executed. If the data is available for several instructions, they can be executed in parallel. "Data-driven" processors schedule operations for execution when their operands become available. Scalar data values flow in tokens over an interconnection network to the instructions that work upon them (hence the term "data flow"). When a token arrives, the hardware checks that all operands are present and, if they are, schedules the instruction for execution.
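
The firing rule can be sketched as a small interpreter. Each instruction has operand slots, tokens carrying values arrive at those slots, and an instruction fires as soon as all its slots are filled. Grouping the instructions that become ready at the same moment into "waves" shows the parallelism that the data flow model exposes. The graph computes (a + b) * (c - d). The node names, the graph encoding and the wave-by-wave scheduling are assumptions of this sketch and do not describe any particular data flow machine.

```python
import operator
from typing import Callable, Dict, List, Tuple

# Hypothetical data flow graph for (a + b) * (c - d).
# Each node: (operation, number of operands, list of (destination node, destination slot)).
GRAPH: Dict[str, Tuple[Callable[[float, float], float], int, List[Tuple[str, int]]]] = {
    "add": (operator.add, 2, [("mul", 0)]),
    "sub": (operator.sub, 2, [("mul", 1)]),
    "mul": (operator.mul, 2, []),
}


def run_dataflow(inputs: List[Tuple[str, int, float]]) -> Tuple[List[List[str]], Dict[str, float]]:
    """Execute GRAPH driven purely by token arrival.

    Returns the waves of instructions that fired together and each node's result.
    """
    slots: Dict[str, Dict[int, float]] = {name: {} for name in GRAPH}
    results: Dict[str, float] = {}
    waves: List[List[str]] = []
    pending = list(inputs)  # tokens in flight: (node, slot, value)

    while pending:
        # Deliver every token currently in flight to its operand slot.
        for node, slot, value in pending:
            slots[node][slot] = value
        pending = []
        # Every instruction whose operands are all present fires (these could run in parallel).
        ready = [n for n, (_, arity, _) in GRAPH.items()
                 if n not in results and len(slots[n]) == arity]
        if not ready:
            break
        waves.append(ready)
        for node in ready:
            op, arity, dests = GRAPH[node]
            results[node] = op(*(slots[node][i] for i in range(arity)))
            # The result travels on as new tokens to the instructions that consume it.
            pending.extend((dest, slot, results[node]) for dest, slot in dests)
    return waves, results


waves, results = run_dataflow([("add", 0, 2.0), ("add", 1, 3.0), ("sub", 0, 7.0), ("sub", 1, 4.0)])
print(waves)           # [['add', 'sub'], ['mul']]
print(results["mul"])  # 15.0
```

There is no program counter. The addition and the subtraction fire together because their operands arrive together, while the multiplication waits for both of their result tokens.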

9

PARALLELISM ISSUES

- concurrency
- communication
- synchronisation
- network topology
- processor scheduling
- communication/computation ratio
- load balancing
- scalability
- architecture awareness
- non-determinacy
- deadlock
- termination
- thinking parallel, etc. (an educational issue)
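
Load balancing is one of the listed issues that is easy to quantify. In the sketch below, tasks of unequal cost are assigned to 4 processors in one of two ways. The first uses fixed contiguous blocks. The second is greedy, taking the largest task first and giving it to whichever processor is currently least loaded; this is the longest-processing-time heuristic, a technique swapped in here for illustration. The parallel run time is set by the most heavily loaded processor, so better balance means less idling. The task costs are invented for the example.

```python
import heapq
from typing import List


def block_assignment(costs: List[int], n_procs: int) -> List[int]:
    """Split the task list into contiguous blocks of (almost) equal length; return per-processor load."""
    loads = [0] * n_procs
    chunk = (len(costs) + n_procs - 1) // n_procs
    for i, c in enumerate(costs):
        loads[i // chunk] += c
    return loads


def greedy_assignment(costs: List[int], n_procs: int) -> List[int]:
    """Longest-processing-time first: give each task to the least-loaded processor so far."""
    heap = [(0, p) for p in range(n_procs)]  # (current load, processor id)
    loads = [0] * n_procs
    for c in sorted(costs, reverse=True):
        load, p = heapq.heappop(heap)
        loads[p] = load + c
        heapq.heappush(heap, (loads[p], p))
    return loads


costs = [9, 8, 7, 1, 1, 1, 1, 1]  # invented task costs, expensive tasks first
for name, loads in [("block", block_assignment(costs, 4)), ("greedy", greedy_assignment(costs, 4))]:
    print(name, loads, "parallel time =", max(loads))
# block [17, 8, 2, 2] parallel time = 17
# greedy [9, 8, 7, 5] parallel time = 9
```

Both assignments do the same total work (29 units). The block split, however, leaves two processors nearly idle while one does 17 units.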

10

CURRENT SITUATION

Traditional HPC manufacturers (Cray, Fujitsu): special processor technology designed specifically for high performance computing, built from the fastest ECL and GaAs circuit technology.

Workstation vendors (IBM): commodity processors, an extension of the existing product range.

11

CURRENT SITUATION

Projects: UK, EU, US and Japanese initiatives.

ASCI (Accelerated Strategic Computing Initiative): a 10-year, $1 billion US Department of Energy programme started in 1995 to simulate nuclear devices, intended to 'promote the preservation of competitiveness in HPC through financial support for the development of advanced US technology'.

12

CURRENT SITUATION

Projects:
- ASCI Red: Intel Paragon machine, breaking the teraflop barrier in 1997.
- ASCI Blue Pacific: sustained teraflop performance on an actual application by 1998. IBM project: scaling in a distributed architecture, $93M, 512 RS/6000 processors, clusters of shared memory processors.
- ASCI Blue Mountain: CRAY/SGI Origin 2000, new microprocessor, 1998, a 3072-processor system rated at 3 teraflop/s.

13

CURRENT SITUATION

Projects:
- ASCI White: IBM, Power processor, 8192 processors, rated at 7.2 teraflop/s.
- ASCI Q: Hewlett-Packard, Alpha processor, 4096-processor system rated at 7.7 teraflop/s.

14

TOP500 SUPERCOMPUTER SITES

The TOP500 project was started in 1993 to provide a reliable basis for tracking and detecting trends in high-performance computing. Twice a year, a list of the sites operating the 500 most powerful computer systems is assembled and released. The best performance on the Linpack benchmark is used as the performance measure for ranking the computer systems.

15

TOP500 SUPERCOMPUTER SITES: THE LINPACK BENCHMARK

The LINPACK benchmark solves a dense system of linear equations. It allows the user to scale the size of the problem and to optimise the software in order to achieve the best performance for a given machine. This performance does not reflect the overall performance of a given system, as no single number ever can. It does, however, reflect the performance of a dedicated system for solving a dense system of linear equations.
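
To make the benchmark concrete, here is a small pure-Python sketch of what it measures. The code solves a dense system Ax = b by Gaussian elimination with partial pivoting and times the solve. It then converts the time into a floating-point rate using the operation count conventionally used by LINPACK, 2n^3/3 + 2n^2. It only illustrates the idea. The real benchmark uses heavily optimised code, and the rate printed here will be far below any machine's peak. The function names are hypothetical.

```python
import random
import time
from typing import List, Tuple


def solve_dense(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve Ax = b by Gaussian elimination with partial pivoting (A and b are not modified)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [A | b]
    for k in range(n):
        # Partial pivoting: bring the row with the largest |entry| in column k to row k.
        p = max(range(k, n), key=lambda r: abs(m[r][k]))
        if m[p][k] == 0.0:
            raise ValueError("matrix is singular")
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def linpack_style_rate(n: int, seed: int = 0) -> Tuple[float, float]:
    """Time one random n x n solve; return (Mflop/s, max residual |Ax - b|)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = max(time.perf_counter() - start, 1e-9)
    flops = 2.0 * n ** 3 / 3.0 + 2.0 * n ** 2  # LINPACK's conventional operation count
    residual = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return flops / elapsed / 1e6, residual


print(solve_dense([[2.0, 1.0], [1.0, 3.0]], [3.0, 5.0]))  # approximately [0.8, 1.4]
rate, res = linpack_style_rate(100)
print(f"n=100: {rate:.1f} Mflop/s, residual {res:.1e}")
```

The residual check matters. A fast answer only counts if it actually solves the system.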

16

TOP500 SUPERCOMPUTER SITES: THE LINPACK BENCHMARK

By measuring the actual performance for different problem sizes n, a user can get not only the maximal achieved performance (Rmax) and the problem size at which it is reached (Nmax), but also the problem size (N1/2) at which half of Rmax is achieved. These numbers, together with the theoretical peak performance (Rpeak), are the numbers given in the TOP500.
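
Given a set of measurements of performance against problem size, these quantities can be read off mechanically. The sketch below uses hypothetical measurements and a hypothetical Rpeak; the numbers are invented and are not taken from any TOP500 entry. It takes N1/2 to be the smallest measured size that reaches at least half of Rmax, so it is only as precise as the set of sizes actually measured.

```python
from typing import Dict, Tuple


def linpack_summary(measured: Dict[int, float]) -> Tuple[float, int, int]:
    """Return (Rmax, Nmax, N1/2) from a mapping problem size n -> achieved Gflop/s."""
    n_max, r_max = max(measured.items(), key=lambda item: item[1])
    n_half = min(n for n, perf in measured.items() if perf >= r_max / 2)
    return r_max, n_max, n_half


# Hypothetical measurements for one machine (Gflop/s at each problem size n).
measurements = {1000: 40.0, 2000: 95.0, 5000: 210.0, 10000: 300.0, 20000: 340.0, 40000: 355.0}
r_peak = 480.0  # hypothetical theoretical peak, Gflop/s

r_max, n_max, n_half = linpack_summary(measurements)
print(r_max, n_max, n_half)                             # 355.0 40000 5000
print(f"efficiency Rmax/Rpeak = {r_max / r_peak:.0%}")  # 74%
```

A small N1/2 indicates that the machine reaches a good fraction of its best performance even on modest problems.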

17

TOP500 SUPERCOMPUTER SITES: THE LINPACK BENCHMARK

- Rank: position within the TOP500 ranking
- Manufacturer: manufacturer or vendor
- Computer: type indicated by manufacturer or vendor
- Installation Site: customer
- Location: location and country
- Year: year of installation/last major update
- Installation Area: field of application
- Processors: number of processors
- Rmax: maximal LINPACK performance achieved
- Rpeak: theoretical peak performance
- Nmax: problem size for achieving Rmax
- N1/2: problem size for achieving half of Rmax
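
The fields above map naturally onto a record type. The sketch below defines one and ranks entries the way the list is ranked, by Rmax. The class and field names are our own and mirror the list above, not any official TOP500 file format. The two entries reuse the ASCI machines and figures quoted on the earlier slides. Treating the quoted "rated at" figures as Rmax is an assumption. Fields the slides do not give, such as Rpeak, Nmax and N1/2, are left as None rather than invented.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass
class Top500Entry:
    """One row of a TOP500-style list (field names are illustrative)."""
    computer: str
    manufacturer: str
    processors: int
    rmax_tflops: float                    # maximal LINPACK performance achieved
    rank: Optional[int] = None            # position within the ranking, set by rank_by_rmax
    installation_site: Optional[str] = None
    location: Optional[str] = None
    year: Optional[int] = None
    installation_area: Optional[str] = None
    rpeak_tflops: Optional[float] = None  # theoretical peak performance
    nmax: Optional[int] = None            # problem size for achieving Rmax
    n_half: Optional[int] = None          # problem size for achieving half of Rmax


def rank_by_rmax(entries: List[Top500Entry]) -> List[Top500Entry]:
    """Return new entries sorted by descending Rmax, with 1-based ranks assigned."""
    ordered = sorted(entries, key=lambda e: e.rmax_tflops, reverse=True)
    return [replace(e, rank=i) for i, e in enumerate(ordered, start=1)]


entries = [
    Top500Entry("ASCI White", "IBM", 8192, 7.2),
    Top500Entry("ASCI Q", "Hewlett-Packard", 4096, 7.7),
]
for e in rank_by_rmax(entries):
    print(e.rank, e.computer, e.manufacturer, e.processors, e.rmax_tflops)
# 1 ASCI Q Hewlett-Packard 4096 7.7
# 2 ASCI White IBM 8192 7.2
```

The ranking depends only on Rmax. A system with fewer processors can therefore rank above a larger one, as ASCI Q does here.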

18

TOP500 SUPERCOMPUTER SITES (http://www.top500.org)

November 2005
