Review of: Sizing Router Buffers - PowerPoint PPT Presentation

Title:

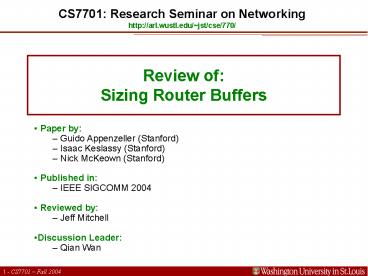

Review of: Sizing Router Buffers

Description:

Drops will occur at the same time. ... Slow-start also has no effect on our buffering needs (length only, not transmission rate) ... – PowerPoint PPT presentation

Number of Views:112

Avg rating:3.0/5.0

Title: Review of: Sizing Router Buffers

1

Review of Sizing Router Buffers

CS7701 Research Seminar on Networking, http://arl.wustl.edu/jst/cse/770/

- Paper by
  - Guido Appenzeller (Stanford)
  - Isaac Keslassy (Stanford)
  - Nick McKeown (Stanford)
- Published in
  - ACM SIGCOMM 2004
- Reviewed by
  - Jeff Mitchell
- Discussion Leader
  - Qian Wan

2

Outline

- Introduction and Motivation

- Buffering for a Single Long-Lived TCP Flow

- Buffering for Many Long-Lived TCP Flows

- Buffering for Short Flows

- Simulation Results

- Conclusion

3

Introduction and Motivation

- The Evil Rule of Thumb
  - Router buffers today are sized based on a rule-of-thumb, which was derived from experiments conducted on at most 8 TCP flows on a 40 Mb/s link.
  - States that
    - Buffer size = RTT × Capacity (on all network interfaces)
  - Unfortunately, reality is worse, because network operators often require 250 ms or more of buffering.
  - Typical case in a new high-speed 10 Gb/s router, then:
    - 250 ms × 10 Gb/s = 2.5 Gbits of buffering PER PORT
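The rule-of-thumb arithmetic above can be checked in a few lines. This is a minimal sketch that assumes units of seconds and bits per second. The function name `rule_of_thumb_buffer_bits` is hypothetical.

```python
def rule_of_thumb_buffer_bits(rtt_s: float, capacity_bps: float) -> float:
    """Rule-of-thumb buffer size B = RTT x C, returned in bits."""
    return rtt_s * capacity_bps


if __name__ == "__main__":
    # 250 ms of buffering on a 10 Gb/s port, as on the slide.
    bits = rule_of_thumb_buffer_bits(0.250, 10e9)
    print(f"{bits / 1e9:.1f} Gbit per port")
```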

4

Introduction and Motivation

- This much buffering requires us to use off-chip SDRAM. Why is this bad?
  - Price
    - A typical 512 Mb PC133 SDRAM part costs $105. For a 10-port 10 Gb/s router, the memory alone would cost about $650.
  - Latency
    - Typical DRAM has a latency of about 50 ns.
    - However, a minimum-length packet (40 bytes) can arrive every 32 ns on a 10 Gb/s link.
  - Currently
    - Lots of memory is accessed in parallel, or a cache is used.
    - Either way requires a very wide bus and a huge number of very fast data pins.
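Here is where the 32 ns comes from. A 40-byte packet is 320 bits, and at 10 Gb/s each bit takes 0.1 ns. The sketch below redoes that calculation and compares it with DRAM latency. The helper name is hypothetical, and the 50 ns latency is the slide's typical figure, not a measured value.

```python
def min_packet_interarrival_ns(packet_bytes: int, link_bps: float) -> float:
    """Time to serialize one packet at line rate, in nanoseconds."""
    return packet_bytes * 8 / link_bps * 1e9


if __name__ == "__main__":
    gap = min_packet_interarrival_ns(40, 10e9)  # 40-byte packets at 10 Gb/s
    dram_latency_ns = 50.0                      # typical figure from the slide
    print(f"packet every {gap:.0f} ns; DRAM access takes {dram_latency_ns:.0f} ns")
    # A single DRAM access per packet cannot keep up with back-to-back minimum-size packets.
    print("one DRAM access per packet keeps up:", dram_latency_ns <= gap)
```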

5

Buffering for a Single Long-Lived TCP Flow

- Where did this rule come from?
  - Router buffers are sized so that they don't underflow and lose throughput.
  - Based on the characteristics of TCP.
    - The sender's window size steadily increases until it fills the router's buffer.
    - When the buffer is full, the router must drop the latest arriving packet.
    - Just under one RTT later, the sender notices the missing ACK for the dropped packet and halves its window size.
    - (cont'd on next slide)

6

Buffering for a Single Long-Lived TCP Flow

- Now the sender has too many outstanding packets and must wait for ACKs. Meanwhile, the router is emptying its buffer, forwarding already-sent packets to the receiver.
- The buffer is sized so that it runs out of packets to send just as the sender starts sending new packets.
  - In this way, the buffer never goes empty: no underflow.
  - This size is equal to the bandwidth-delay product.
- A pictorial example is on the next slide; the math proving this is available in the paper.
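The argument on slides 5 and 6 can be reproduced with a small fluid model of a single AIMD flow. This is a simplified sketch, not the paper's derivation. It assumes:

- one round trip per loop iteration;
- a window that grows by one packet per RTT;
- exactly one drop each time the buffer overflows;
- no slow-start and no timeouts.

The name `sawtooth_utilization` is hypothetical.

```python
def sawtooth_utilization(bdp: int, buffer: int, rounds: int = 10_000) -> float:
    """Fluid sketch of one long-lived TCP (AIMD) flow through a drop-tail buffer.

    bdp    -- bandwidth-delay product 2*Tp*C, in packets
    buffer -- router buffer size B, in packets
    Each loop iteration is one round trip. The sender transmits w packets per
    round, and the round lasts max(w, bdp) packet transmission times: the link
    idles when w < bdp, and a queue of w - bdp packets builds when w > bdp.
    """
    w = bdp + buffer  # start at the largest window the pipe plus buffer can hold
    sent = 0          # packets carried by the bottleneck link
    elapsed = 0       # total time, in packet transmission times
    for _ in range(rounds):
        sent += w
        elapsed += max(w, bdp)
        if w >= bdp + buffer:
            # Pipe and buffer are full: the next increase overflows the buffer,
            # one packet is dropped, and the sender halves its window.
            w = max(1, w // 2)
        else:
            w += 1  # additive increase: one packet per RTT
    return sent / elapsed


if __name__ == "__main__":
    bdp = 100
    for label, b in [("underbuffered", 0), ("rule of thumb", bdp), ("overbuffered", 2 * bdp)]:
        print(f"{label:14s} B={b:3d}: utilization {sawtooth_utilization(bdp, b):.3f}")
```

With B equal to the bandwidth-delay product, the halved window never falls below `bdp`, so the link never idles. With no buffer, utilization drops to about 0.75. A buffer twice as large gains nothing in utilization and only adds queueing delay.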

7

Buffering for a Single Long-Lived TCP Flow

[Figure: TCP flow through an underbuffered, a rule-of-thumb buffered, and an overbuffered router. Annotations: the buffer fills and drops one packet; one RTT later the sender realizes it's missing an ACK and cuts its window size in half; the sender has too many outstanding packets and must wait for ACKs; the buffer empties its queue; packets are received from the sender again just as the buffer becomes empty.]

8

Buffering for a Single Long-Lived TCP Flow

- That's where the rule-of-thumb comes from.
- But is it realistic?
  - Do we normally have 8 or fewer flows on a backbone link?
    - No, obviously. A typical OC-48 link carries over 10,000 flows at one time.
  - Are all flows long-lived?
    - No. Many flows last only a few packets, never leave slow-start, and thus never reach their equilibrium sending rate.

9

Buffering for Many Long-Lived TCP Flows

- Synchronized flows, where the sawtooth patterns of the individual flows line up, are possible for small numbers of flows.
  - Drops will occur at the same time.
  - Therefore we still need rule-of-thumb buffering to achieve full utilization.

10

Buffering for Many Long-Lived TCP Flows

- Simulations and real-world experiments have shown that synchronization is very rare above 500 flows, and backbone routers typically carry 10,000.
- This leads to some interesting results.
  - The peaks and troughs of the various sawtooth waves tend to cancel each other out, leaving an aggregate window that varies only slightly.
  - We can model the total window size as a bounded random process made up of the sum of the independent sawtooths.
  - The central limit theorem states that the aggregate window size will converge to a Gaussian process, as shown by the figure on the next slide.
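A quick Monte Carlo check shows why many flows help: add up n independent sawtooth windows and watch the spread. The mean grows like n while the standard deviation grows only like √n, so the relative variation shrinks like 1/√n. The model assumes each flow's window, seen at a random instant, is uniform on [Wmax/2, Wmax]. That assumption and the function name are illustrative; neither comes from the paper's code.

```python
import math
import random
import statistics


def aggregate_window_samples(n_flows: int, w_max: float, samples: int, seed: int = 0) -> list[float]:
    """Sample the sum of n independent, desynchronized sawtooth windows.

    Assumption: at a random instant each flow's window is uniform on
    [w_max/2, w_max] (a linear sawtooth observed at a random phase).
    """
    rng = random.Random(seed)
    return [
        sum(rng.uniform(w_max / 2, w_max) for _ in range(n_flows))
        for _ in range(samples)
    ]


if __name__ == "__main__":
    w_max = 40.0
    for n in (1, 10, 100, 400):
        xs = aggregate_window_samples(n, w_max, samples=2000)
        mean, sd = statistics.fmean(xs), statistics.stdev(xs)
        # Standard deviation of a sum of n independent uniforms of width w_max/2.
        theory_sd = math.sqrt(n) * (w_max / 2) / math.sqrt(12)
        print(f"n={n:4d} mean={mean:9.1f} sd={sd:7.2f} (theory {theory_sd:7.2f}) sd/mean={sd / mean:.4f}")
```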

11

Buffering for Many Long-Lived TCP Flows

- Because the probability distribution of the sum of the flows' congestion windows (left, above) is a normal distribution, the number of packets in the queue itself has a normal distribution shifted by a constant, the bandwidth-delay product (right, above).
- This is useful to know, since it means we now know the probability that any chosen buffer size will underflow and lose throughput.

12

Buffering for Many Long-Lived TCP Flows

- With some more probability math (omitted here for brevity), a utilization function is derived that gives a lower bound for utilization:

  $$\mathrm{Util} \ge \operatorname{erf}\left(\frac{3\sqrt{3}}{2\sqrt{2}} \cdot \frac{B}{(2T_pC + B)/\sqrt{n}}\right)$$

- where 2TpC is the number of outstanding bytes on the link (the bandwidth-delay product), n is the number of concurrent flows passing through it at any given time, and B is the buffer size.
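Evaluating the bound numerically shows how fast it approaches 1 as B or n grows. This sketch uses the closed form reported in the paper, Util ≥ erf(3√3/(2√2) · B√n / (2TpC + B)), with B expressed as a fraction of the bandwidth-delay product. The function name is hypothetical.

```python
import math


def utilization_lower_bound(bdp: float, buffer: float, n_flows: int) -> float:
    """Util >= erf( 3*sqrt(3)/(2*sqrt(2)) * B / ((2*Tp*C + B) / sqrt(n)) ).

    bdp and buffer must use the same unit (packets or bytes); n_flows is the
    number of concurrent long-lived, desynchronized flows.
    """
    k = 3 * math.sqrt(3) / (2 * math.sqrt(2))
    return math.erf(k * buffer / ((bdp + buffer) / math.sqrt(n_flows)))


if __name__ == "__main__":
    bdp = 1.0  # express the buffer as a fraction of the bandwidth-delay product
    for frac in (0.005, 0.01, 0.02):
        u = utilization_lower_bound(bdp, frac * bdp, 10_000)
        print(f"B = {frac:.1%} of 2TpC, n = 10,000: utilization >= {u:.4%}")
```

With 10,000 flows, a buffer of 1% of the bandwidth-delay product gives a bound of roughly 99%, and 2% pushes it above 99.99%.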

13

Buffering for Many Long-Lived TCP Flows

- Numerical examples of utilization, with 10,000 concurrent (mostly long) flows:
  - Near-full utilization is seen with routers using buffers that are only 1% of the bandwidth-delay product. At 2%, near-perfect utilization is seen.
- As the number of concurrent flows increases, the utilization gets even better.

14

Buffering for Short Flows

- Many flows are not long-lived and never reach their equilibrium sending rate.
- Define a short flow as one that never leaves slow-start (typically a flow with fewer than 90 packets).
- It is well known that new short flows arrive according to a Poisson process (the time between new flow arrivals is exponentially distributed).
- Short flows will be modeled as bursts of packets (they exhibit this behavior during slow-start); the arrival of these bursts is also assumed, with some theoretical justification, to be Poisson.
- This allows us to model the router buffer as an M/G/1 queue with a FIFO service discipline.
- Note that non-TCP packets (UDP, ICMP, etc.) are modeled as 1-packet short flows.
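To make the burst model concrete: a flow that stays in slow-start sends one window per RTT, and the window doubles each time. The sketch below assumes an initial window of 2 packets, an illustrative choice the slide does not specify, and uses a hypothetical function name. A 1-packet flow, such as a lone UDP or ICMP packet, is a single burst of size 1.

```python
def slow_start_bursts(flow_len: int, initial_window: int = 2) -> list[int]:
    """Split a short flow into the bursts it sends during slow-start.

    Assumption: the window starts at `initial_window` packets and doubles every
    RTT, and the flow never leaves slow-start, so each burst is one window.
    """
    bursts = []
    remaining, window = flow_len, initial_window
    while remaining > 0:
        burst = min(window, remaining)  # the last burst may be a partial window
        bursts.append(burst)
        remaining -= burst
        window *= 2
    return bursts


if __name__ == "__main__":
    for length in (1, 14, 62):
        print(length, "packets ->", slow_start_bursts(length))
```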

15

Buffering for Short Flows

- The average number of jobs in an M/G/1 queue is well known.
- Interestingly, the queue length does not depend on the bandwidth of the link or the number of flows, only on the load of the link and the length of the flows.
- Equations and math supporting these statements are available in the extended version of the paper.
- This is good because it means we need only choose our buffer size based on the load and the length of short flows on our network, not the speed of the network, propagation delay, or the number of flows.
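The "well-known" result is the Pollaczek-Khinchine formula. Written in packets, for bursts of size X arriving as a Poisson process at load ρ, one way to express it is E[Q] = ρ/(2(1−ρ)) · E[X²]/E[X]. The sketch below evaluates that expression. The example burst sizes and the function name are illustrative assumptions. Note that link capacity, RTT and the number of flows do not appear anywhere.

```python
def mg1_mean_queue_packets(load: float, burst_sizes: list[int]) -> float:
    """Mean backlog, in packets, of an M/G/1 FIFO queue fed by Poisson bursts.

    Pollaczek-Khinchine mean waiting time, expressed in packets:
        E[Q] = load / (2 * (1 - load)) * E[X^2] / E[X]
    where X is the burst size. `burst_sizes` is treated as an empirical
    distribution (each entry equally likely).
    """
    if not 0 <= load < 1:
        raise ValueError("load must be in [0, 1)")
    ex = sum(burst_sizes) / len(burst_sizes)
    ex2 = sum(x * x for x in burst_sizes) / len(burst_sizes)
    return load / (2 * (1 - load)) * ex2 / ex


if __name__ == "__main__":
    # Hypothetical workload: every short flow is 14 packets, sent as bursts of 2, 4 and 8.
    bursts = [2, 4, 8]
    for load in (0.5, 0.8, 0.9):
        print(f"load {load:.1f}: mean queue {mg1_mean_queue_packets(load, bursts):.1f} packets")
```

This gives only the mean backlog. Sizing a buffer for a target drop rate would need the tail of the queue-length distribution.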

16

Buffering for Short Flows

- Therefore, a backbone router needs the same amount of buffering for many (say, thousands of) short-lived flows as for a few.
- Slow-start also has no effect on our buffering needs: only the burst length matters, not the transmission rate.
- It gets better.
  - Experimental evidence shows that when there is a mix of short and long flows, the number of long flows ends up dictating the buffering requirement; the short flows have little effect, and their quantity does not matter.
  - Moreover, the model explored in this paper assumes the worst case; theory and experimental evidence show that short flows may exhibit behavior that limits their impact on buffering requirements even further.

17

Simulation Results

- Over 10,000 ns2 simulations, each simulating several minutes of network traffic through a router, were run to verify the model over a range of possible settings.
- Other experiments were run on a real backbone router with real TCP sources (4 OC3 ports).
- Unfortunately, the authors have so far been unable to convince a network operator to test their results; until then, their results cannot be proven for the general Internet.

18

Simulation Results: ns2, Long Flows

- For long-lived TCP flows, results are as predicted once the number of flows is above about 250.
- The model holds over a wide range of settings, provided there are a large number of flows, little or no synchronization, and a congestion window above two (if it is smaller, flows encounter frequent timeouts and require more buffering).

19

Simulation Results: ns2, Long Flows

- If the buffer size is made very small, loss can increase.
  - Not necessarily a problem.
  - Most flows deal with loss just fine; TCP uses it as feedback, after all.
  - Applications that are sensitive to loss are usually more sensitive to queuing delay, which smaller buffers decrease.
- Goodput is not really affected.
- Fairness decreases as buffers get smaller.
  - All flows' RTTs decrease, so all flows send faster.
  - This causes relative differences of sending rates to decrease.

20

Simulation Results: ns2, Short Flows

- For the simulations, the authors used the common metric for short flows: average flow completion time (AFCT).
- They found that the M/G/1 model closely matches the simulation results (as an upper bound).
- Simulation results verify that the amount of buffering needed does not depend on the number of flows, bandwidth, or RTT, but only on the load of the link and the length of the bursts.

21

Simulation Results: ns2, Mixed Flows

- Results show that long flows dominate.
- The general result holds for different flow-length distributions if at least 10% of traffic is from long flows.
  - Measurements on commercial networks indicate that 90% of traffic is from long flows.
- At about 200 flows, synchronization has all but disappeared and we achieve high or perfect utilization.
- What's more, AFCT for short flows is better than with the rule-of-thumb.
  - Short flows complete faster because of less queuing delay.

22

Simulation Results: Router

- Short-flow results match the model remarkably well. Long flows do too: the model predicts the utilization within the measurement accuracy of about ±0.1%.

23

Conclusion

- Router buffers are much larger than they need to be. Currently they are RTT × C (C = link capacity), when they should really be RTT × C / √n (n = number of flows).
- Although this cannot be proven in a commercial setting until a network operator agrees to test it (not likely), eventually router manufacturers will be forced to abandon the rule-of-thumb and use less RAM, simply because of the cost and problems associated with putting so much RAM on a router.
- The authors hope that when this happens they will be proven correct.
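Plugging the 250 ms, 10 Gb/s example from slide 3 into both formulas shows the size of the saving. This is a sketch with a hypothetical helper name. The 10,000-flow count is borrowed from the backbone figure on slide 8 and serves as an illustrative assumption.

```python
import math


def buffer_bits(rtt_s: float, capacity_bps: float, n_flows: int = 1) -> float:
    """RTT x C / sqrt(n) in bits; n_flows=1 gives the classic rule of thumb."""
    return rtt_s * capacity_bps / math.sqrt(n_flows)


if __name__ == "__main__":
    rtt, cap = 0.250, 10e9  # 250 ms of buffering on a 10 Gb/s port
    old = buffer_bits(rtt, cap)
    new = buffer_bits(rtt, cap, n_flows=10_000)
    print(f"rule of thumb: {old / 1e6:,.0f} Mbit per port")
    print(f"RTT*C/sqrt(n): {new / 1e6:,.0f} Mbit per port")
```

With 10,000 flows the buffer shrinks by a factor of √10,000 = 100, from 2.5 Gbit to 25 Mbit per port. That is small enough to consider on-chip memory rather than banks of off-chip SDRAM.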

24

What Next?

- Now: (Questions == True) ? Ask : Discuss