Ontologies - PowerPoint PPT Presentation


1

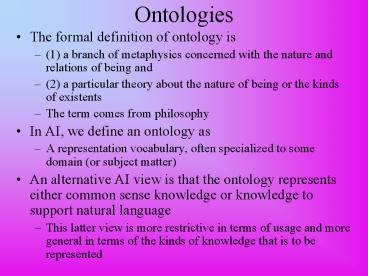

Ontologies

- The formal definition of ontology is
  - (1) a branch of metaphysics concerned with the nature and relations of being
  - (2) a particular theory about the nature of being or the kinds of existents
- The term comes from philosophy
- In AI, we define an ontology as
  - A representation vocabulary, often specialized to some domain (or subject matter)
- An alternative AI view is that the ontology represents either common sense knowledge or knowledge to support natural language
  - This latter view is more restrictive in terms of usage and more general in terms of the kinds of knowledge that are to be represented

2

Why Ontologies?

- Recall the variety of knowledge representations used in AI during the 1960s and 1970s
- Imagine that expert system 1 performs automotive engine diagnosis and expert system 2 designs new automotive engines that have superior gas mileage
  - Can the two systems communicate with each other? Can one draw upon knowledge from the other?
  - probably not, but why not?
- Given the number of expert systems, and the sheer amount of knowledge modeled, it seems ridiculous not to be able to share it across problem solvers
- The ontology is supposed to provide a mechanism for knowledge sharing
  - knowledge-based systems
  - search engines
  - Internet agents
  - natural language reasoners
  - CYC (common sense reasoner) and the applications that it supports

3

Ontology as Content Theory

- AI researchers have often viewed AI from a mechanism theory point of view
  - To get AI to work, we have to implement systems that do things so that AI should be the study of how people solve problems
- On the other hand, some view the central problem of AI in terms of representation
  - What knowledge do we need to solve the given problem?
- The ontology promotes this latter view, AI as a study of content theory
  - Content theory means that we study and theorize over the nature of the content needed for a system, that is, the nature of knowledge
- This is not to say that the knowledge representation problem is solved
  - We must still make a commitment in our ontology in terms of the form of representation, but we may select unwisely
  - In the past, the primary representations for ontologies have been
    - predicate calculus
    - frame systems
    - semantic networks

4

Types of Knowledge

- One of the problems that early expert systems had was that there was no way to distinguish what a system knew from what a system used in terms of knowledge
  - Consider the auto-mechanic diagnostic system: while we can ask it questions like "what is the cause of these symptoms?", we cannot ask it "do you know what a spark plug does?" or "tell me everything you know about car engines"
- There are different ways to define what a system knows
  - David Marr defined 3 levels of knowledge
    - what problems can be solved by the system (computational level)
    - the strategy that is used (algorithmic level)
    - how the knowledge is represented/implemented (implementation level)
  - Allen Newell instead defined 2 levels
    - what the system knows (knowledge level)
    - how the system knows it (symbol level)
- With distinctions such as these, we can differentiate between what is in an ontology and how the ontology is going to work

5

What Should an Ontology Store?

- Since an ontology is an attempt to store knowledge neutral of its use, we need to define the types of knowledge that an ontology will store
- The ontology needs to know about things in the domain
  - classes and the relationships between classes (class/subclass for instance, but also other relationships such as classes that are siblings of the same parent class), and also instances of classes
  - features/attributes, the values that can be stored in those features, the range and types for those values as well as restrictions that might be placed on those values
  - properties found within the domain (e.g., laws of the domain, relationships between classes and features, inference rules)
- Domain-specific elements may also be applied
- What we don't want to do is place only problem-specific knowledge in the ontology
  - For instance, if we are creating an ontology of medicine for diagnostic purposes, the medical knowledge should be in a neutral form so that it can also be used for reasoning over drug interactions, drug development, and medical experiments

6

Upper Ontology

- An ontology will start with the most basic concepts at the root of the tree
  - Entity or Thing is commonly used as a root node
- There is disagreement over the root node's children
  - CYC: individual object, intangible, represented
  - GUM: configuration, element, sequence
  - WordNet: living, nonliving
  - Sowa: concrete, process, object, abstract
- Given such divergent approaches for upper ontology, how can we possibly agree upon an entire domain ontology?
- There are some concepts that do seem universal
  - objects exist, objects have attributes which have values which can change over time, values have properties
  - objects have relationships with other objects, contain parts, and participate in or perform processes
  - there are states that objects can be in, and states can change over time
  - there are events that occur during time instants where events cause other events and/or cause objects to change states

7

Beyond an Upper Ontology

- We will typically want to represent a specific domain of interest, which will be the entities in the domain and how the entities relate
- Entities can be at a
  - class level (e.g., living thing, emotional state, event)
  - specific classes (e.g., human being, love, rock concert)
  - And instances (e.g., Frank Zappa, my love of Frank Zappa music, the Frank Zappa concert that I saw in 1988)
- Attributes (features) of each class (as discussed two slides back)
- The vocabulary of the domain
- Relations for how entities relate to each other
  - is-a: class/subclass relationship
  - instance: class/instance relationship (or should both class/subclass and class/instance relationships be denoted with is-a?)
  - has-a: component/part relationship (alternatively, we could use part-of or contains)
  - is-in: spatial relationship (or possibly temporal relationship)
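The relations on this slide can be sketched as a small set of triples. This is only an illustration: the triple layout, the helper names `index_by_relation` and `classes_of`, and the `has-a` entry for a band are assumptions, not part of the slides. The is-a and instance entries reuse the slide's own examples.

```python
from collections import defaultdict

# Hypothetical triple store: (subject, relation, object).
# The relation names come from the slide; the storage scheme is assumed.
TRIPLES = [
    ("human being", "is-a", "living thing"),
    ("rock concert", "is-a", "event"),
    ("Frank Zappa", "instance", "human being"),
    ("rock concert", "has-a", "band"),  # illustrative entry, not from the slides
]

def index_by_relation(triples):
    """Group (subject, object) pairs under each relation name."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[relation].append((subject, obj))
    return index

def classes_of(entity, triples):
    """Return the direct class of an instance followed by its superclasses."""
    index = index_by_relation(triples)
    parents = dict(index["is-a"])  # assumes one parent per class (no tangling)
    found = [cls for inst, cls in index["instance"] if inst == entity]
    for cls in list(found):
        while cls in parents:  # climb the is-a chain
            cls = parents[cls]
            found.append(cls)
    return found

print(classes_of("Frank Zappa", TRIPLES))
```

Keeping `instance` separate from `is-a` is one answer to the question the slide raises; merging them would make the lookup simpler but would blur classes and individuals.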

8

Example Ontology: Sports

- Question: who decides what should be represented and how?
  - here, the ontology is tangled (multiple parents)
  - we may disagree about whether there should be more than events here (players are not events, sports are not events)

9

Example Ontology: CS Dept.

- Organization
  - Department
  - School
  - University
  - Program
  - ResearchGroup
  - Institute
- Publication
  - Article
    - TechnicalReport
    - JournalArticle
    - ConferencePaper
  - UnofficialPublication
  - Book
  - Software
  - Manual
  - Specification
- Work
  - Course
  - Research
- Schedule
- Person
  - Worker
    - Faculty
      - Professor
        - AssistantProfessor
        - AssociateProfessor
        - VisitingProfessor
      - Lecturer
      - PostDoc
    - Assistant
      - ResearchAssistant
      - TeachingAssistant
    - AdministrativeStaff
      - Director
      - Chair (also a Professor)
      - Dean (also a Professor)
      - ClericalStaff
      - SystemsStaff
  - Student
    - UndergraduateStudent
    - GraduateStudent

10

Example Continued: Relationships

| Relation | Argument 1 | Argument 2 |
| --- | --- | --- |
| publicationAuthor | Publication | Person |
| publicationDate | Publication | .DATE |
| publicationResearch | Publication | Research |
| softwareVersion | Software | .STRING |
| softwareDocumentation | Software | Publication |
| teacherOf | Faculty | Course |
| teachingAssistantOf | TeachingAssistant | Course |
| takesCourse | Student | Course |
| age | Person | .NUMBER |
| emailAddress | Person | .STRING |
| head | Organization | Person |
| undergraduateDegreeFrom | Person | University |
| doctoralDegreeFrom | Person | University |
| advisor | Student | Professor |
| alumnus | Organization | Person |
| affiliateOf | Organization | Person |
| researchInterest | Person | Research |
| researchProject | ResearchGroup | Research |
| listedCourse | Schedule | Course |
| tenured | Professor | .TRUTH |

11

Example Continued: Sample Inferences

- Suborganizations are transitive
  - If subOrganization(x,y) and subOrganization(y,z), then subOrganization(x,z).
- Affiliates are invertible (the relation is symmetric)
  - If affiliatedOrganization(x,y), then affiliatedOrganization(y,x).
- Membership transfers through suborganizations
  - If member(x,m) and subOrganization(x,y), then member(y,m).
- These are domain-independent properties
  - On the previous slide, we used already defined classes to help us define the relationships: Person, .DATE, .STRING, .NUMBER, University, etc.
  - Domain-independent inferences should be available for these domain-independent properties but we have to make sure we apply them correctly
  - For instance, teacher-student relationships are not transitive, and a student being enrolled in a course may or may not represent a membership relation
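The three inference rules above can be sketched as set operations over pairs. This is a minimal illustration, not how any particular ontology system implements them. The organization and member names are hypothetical, and `subOrganization(x, y)` is stored as the pair `(x, y)`.

```python
def transitive_closure(pairs):
    """Keep adding (x, z) whenever (x, y) and (y, z) are both present."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for (x, y) in list(closure):
            for (y2, z) in list(closure):
                if y == y2 and (x, z) not in closure:
                    closure.add((x, z))
                    changed = True
    return closure

def symmetric_closure(pairs):
    """If affiliatedOrganization(x, y), then affiliatedOrganization(y, x)."""
    return set(pairs) | {(y, x) for (x, y) in pairs}

def lift_members(members, sub_org):
    """If member(x, m) and subOrganization(x, y), then member(y, m)."""
    result = set(members)
    for (x, y) in transitive_closure(sub_org):
        result |= {(y, m) for (org, m) in members if org == x}
    return result

# Hypothetical data for illustration only.
sub_org = {("AIGroup", "CSDept"), ("CSDept", "University")}
members = {("AIGroup", "alice")}

print(sorted(transitive_closure(sub_org)))
print(sorted(lift_members(members, sub_org)))
```

Each rule is only applied to the relation it is written for; nothing here would be applied to a teacher-student relation, which is the caution the slide raises.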

12

Representing Methods

- An ontology does not have to be limited to domain knowledge
  - we may also include how to perform processes in the domain
  - we often refer to such processes as methods
- Below we see several ontologies which draw upon various problem solving methods (PSMs)
  - some of the methods available are Propose and Revise (PR) and Cover and Differentiate (CD)

13

Representational Format

- In order to create a machine-usable ontology
  - we need algorithms to manipulate and infer over the data
  - algorithms require a specific form of data structure
  - what structures (representations) should we use?
- Cyc uses a first-order predicate calculus form
  - (Teacher_of CSC_625 Fox)
  - (Musician Frank_Zappa)
  - (Band_member Genesis Phil_Collins)
- OWL uses an XML approach with tags defined by the ontology author(s)
- Should inferences be captured in rule-based form or other?
  - A Cyc inference might look like this
  - (implies (hasDegreeIn ?PERSON ?DEGREE) (hasAttribute ?PERSON CollegeGraduate))
- These issues have not been resolved
  - nor do they seem likely to be resolved because people are using different representations in their various ontological research (Ontolingua, CycL, OWL, KQML, KIF, Protégé-II, etc.)
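To make the rule-based option concrete, here is a rough sketch of applying the slide's `implies` rule to a set of facts with simple pattern matching. It is not Cyc's inference engine. The tuple encoding, the function names, and the fact that Fox has a degree in ComputerScience are all assumptions made for illustration.

```python
def match(pattern, fact, bindings):
    """Unify a flat pattern, e.g. ("hasDegreeIn", "?PERSON", "?DEGREE"), with a fact."""
    if len(pattern) != len(fact):
        return None
    bindings = dict(bindings)
    for p, f in zip(pattern, fact):
        if p.startswith("?"):
            # Bind the variable, or fail if it is already bound to something else.
            if bindings.setdefault(p, f) != f:
                return None
        elif p != f:
            return None
    return bindings

def substitute(pattern, bindings):
    """Replace each variable in the pattern with its bound value."""
    return tuple(bindings.get(term, term) for term in pattern)

def apply_rule(antecedent, consequent, facts):
    """One forward-chaining step: derive the consequent for every matching fact."""
    derived = set()
    for fact in facts:
        bindings = match(antecedent, fact, {})
        if bindings is not None:
            derived.add(substitute(consequent, bindings))
    return derived

facts = {
    ("hasDegreeIn", "Fox", "ComputerScience"),  # hypothetical fact
    ("Musician", "Frank_Zappa"),
}
antecedent = ("hasDegreeIn", "?PERSON", "?DEGREE")
consequent = ("hasAttribute", "?PERSON", "CollegeGraduate")

print(apply_rule(antecedent, consequent, facts))
```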

14

Ontological Commitments

- As stated earlier, the various ontology formats make different commitments to the upper ontology
  - This means that they are already somewhat incompatible
- The question is what primitives need to be predefined for an ontology?
  - Just about everyone agrees that ontologies should support the class/subclass relationship and therefore there should be some mechanism for inheritance
  - What other primitives should our ontology have?
- OWL for instance makes a commitment to the following primitives
  - URIs, class, properties (domain, range, type), equivalences (i.e., setting two classes to be equivalent)
- Cyc has a greater set of primitives that include
  - spatial primitives, temporal primitives, language primitives (e.g., grammatical roles)

15

Are Primitives Universal?

- Early work in knowledge representation in AI already demonstrated that researchers want to use different primitives
- Semantic networks contained these primitives
  - isa (class/subclass), instance, has-part, object (meaning a physical object), agent, beneficiary, state, and properties, also mathematical relations
- Roger Schank defined primitives that were all verb-related for his Scripts/Conceptual Dependencies
  - ATRANS (transfer of abstract relationship), PTRANS (transfer of physical location), PROPEL, MOVE, GRASP, INGEST, EXPEL, MTRANS (transfer of mental information), MBUILD (build new information), SPEAK, ATTEND
- Upper ontology implies that there is a set of primitive primitives
  - That is, primitives that should underlie all knowledge and uses of knowledge

16

The Envisioned Uses of Ontologies

- Sharing common understanding of the structure of information
  - consider web sites that contain medical information and e-commerce services: computer agents could extract and aggregate information from different sites to answer user queries or to use the data as input to other applications
- Enabling reuse of domain knowledge
  - when one organization of researchers or developers creates an ontology, others can reuse it for their own domains and purposes
- Making explicit domain assumptions
  - hard-coding assumptions about the world in programming-language code makes assumptions hard to find, hard to understand, hard to change
- Separating domain knowledge from operational knowledge
- Analyzing domain knowledge

17

Using an Ontology

- How will we use our ontologies?
- To enhance search engines beyond keyword searches
  - I want to search for peer-reviewed publications and so I search for articles, but someone has defined their peer-reviewed papers as journal articles and their articles include technical reports
- To annotate multimedia data files
  - we can't currently search for the content of image or sound files
- To annotate design components
  - imagine an expert system that needs to replace component 1 with component 2 based on the component functions and sizes
- Intelligent agents
  - so that two agents can find a common vocabulary to communicate together
- To support ubiquitous computing endeavors

18

Examples: How Ontologies are Used

- Question answering
  - If coded in the ontology, simply look up and respond
    - class/subclass information (primarily through inheritance)
    - attribute value response/database extraction
  - If inferences are available then select and apply appropriate inference
    - for example, given that A isa B and B is part of a C, we might want to know if A is part of a C
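The isa/part-of question on this slide can be sketched as a lookup that first climbs the isa chain and then follows part-of links. The dictionaries and function names below are assumptions for illustration. The sketch also assumes one parent per class and no cycles.

```python
IS_A = {"A": "B"}      # A isa B (single parent assumed)
PART_OF = {"B": "C"}   # B is part of a C

def ancestors(cls):
    """Yield cls and then each superclass reached by following isa links."""
    while cls is not None:
        yield cls
        cls = IS_A.get(cls)

def is_part_of(cls, whole):
    """True if cls, or a class it inherits from, is (transitively) part of whole."""
    for c in ancestors(cls):
        container = PART_OF.get(c)
        while container is not None:
            if container == whole:
                return True
            container = PART_OF.get(container)
    return False

print(is_part_of("A", "C"))
```

Here the part-of relation is inherited downward through isa, which is the inference the slide asks about; whether that inheritance is valid in a given domain is a modelling decision.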

19

Continued

- Processing
  - We might want to transform or manipulate some set of knowledge
    - this will again require some special-purpose inference or process, for example from a biological ontology
    - if either X biosynthesis or X catabolism exists then the parent X metabolism must also exist
- Translation
  - The knowledge of one ontology might be used in conjunction with another ontology and therefore terms in one ontology might need translating into the proper format/language/terms of the other
    - special-purpose methods might be available to translate terms
    - otherwise, common terms must be identified between the ontologies so that related terms can then be recognized even if the related terms go by different names

20

Cyc: Common Sense Reasoning

- An attempt to construct a common sense knowledge-base
  - Effort of about 20 years of coding, a person-century according to the earlier article (more than that to this point)
  - 5,000,000 common sense facts and rules
- General-purpose knowledge-base to be used with other applications
  - other applications are to sit on top of CYC so that, when necessary, these systems can delve deeper into common sense/general-purpose knowledge when the special-purpose knowledge is not adequate
- CycL is the language used to encode Cyc's KB
  - Originally, it was a frame-like system (loosely organized objects), but modified to use predicate calculus
  - Rules represented in free-form ways depending on their specific intentions with uncertainty represented only through relative rankings
    - P is more likely than Q
  - Categories of facts and rules arranged hierarchically using a directed graph (instead of a tree)

21

Cyc Ontology

- Categories of categories
- Categories of individuals (tangible and intangible objects)
- Categories of scripts (typical actions and events)
  - physiological actions, problem solving actions, communications, rites of passage, work, hobby, natural phenomena
- Entities are categorized as
- Relationships (predicates)
- Attributes
- Lexical items (words, parts of speech/tense, etc.)
- Proper nouns (people, places, etc.)
- Microtheories (explained on the next slide)
- Miscellany
- Special-purpose inferences and heuristics (for efficiency)
- Temporal and spatial reasoning using qualitative or naïve physics
- Domain-specific axioms (e.g., medical diagnostic rules)
- Inferences for specific syntactic structures
- General-purpose axioms to be applied when special-purpose axioms are not available or do not work

22

Naïve Physics

- A well researched problem is how humans reason naively
  - if I toss something in the air, what happens to it? You don't apply physics, you use common sense
- Naïve Physics, also called qualitative reasoning, is something that we might want to model in an ontology
  - when I pour liquid from one cup into another half-filled cup, what is the result?
  - when I let go of an object, what happens?
  - In these cases we use naïve reasoning ideas instead of physics
- We can devise some simple rules
  - pouring from a non-empty cup into a non-empty cup results in the second cup containing more and possibly overfilling
  - unless supported, items will fall
- There are qualitative equations to model these situations that we might want to use in place of quantitative (physics) equations

23

Qualitative Reasoning Examples

- We will use qualitative state changes to describe the current state of an entity
- Physics reasoning might include such states as increase, decrease, steady, empty, between and full and use simple inference rules like
  - Empty + Empty = Empty, Empty + Full = Full
  - Empty + Between = Between, Full + Between = Overflow
  - Between + Between = Between, Full
- Temporal reasoning might include states like before, during, after, overlap, coincide, etc. with rules like
  - If X is before Y and Y is before Z then X is before Z
  - If X coincides with Y then X begins at the same time Y begins and X ends at the same time Y ends
  - If X is during Y then Y begins before X and Y ends after X
- Spatial reasoning might have states like left-of, right-of, on-top-of, underneath, obstructed-by, inside-of, etc. and rules like
  - If A is on top of B and A is heavy, then B cannot be moved
  - If A is inside of B and B is moved to position P1, then A is moved to P1
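The qualitative rules on this slide can be sketched as a lookup table plus a recursive check for the "before" rule. The reading of the fill-level rules as "state + state = possible results" is an interpretation of the slide, and treating the table as order-independent is a further assumption. The function names and the events X, Y, Z are illustrative.

```python
# Qualitative combination table: (state, state) -> set of possible results.
COMBINE = {
    ("Empty", "Empty"): {"Empty"},
    ("Empty", "Full"): {"Full"},
    ("Empty", "Between"): {"Between"},
    ("Full", "Between"): {"Overflow"},
    ("Between", "Between"): {"Between", "Full"},
}

def combine(a, b):
    """Look up the qualitative result; argument order is ignored (an assumption)."""
    result = COMBINE.get((a, b)) or COMBINE.get((b, a))
    if result is None:
        raise KeyError(f"no rule for {a} + {b}")
    return result

# Temporal facts: (earlier, later). Assumes the facts contain no cycles.
BEFORE = {("X", "Y"), ("Y", "Z")}

def is_before(a, b, facts=BEFORE):
    """If X is before Y and Y is before Z then X is before Z."""
    if (a, b) in facts:
        return True
    return any(is_before(mid, b, facts) for (start, mid) in facts if start == a)

print(combine("Between", "Full"))
print(is_before("X", "Z"))
```

Note that a qualitative rule can return more than one state, as in Between + Between: the answer is a set of possibilities, not a number.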

24

Microtheories

- Microtheories partition Cyc's knowledge base into different domains/concepts/problems and belief states
  - microtheories are also called contexts
  - Examples include medical diagnosis, manufacturing, weather during the winter, what to look for when buying a car, etc.
  - information can be lifted from one context to another
- Each microtheory has its own
  - categories (hierarchy of related items)
  - predicates (some are shared between microtheories, however one might use the same predicate as another with different numbers of parameters!)
  - axioms/inference methods
  - assumptions
- Reasoning within a microtheory might be thought of as a separate belief state although in reality a microtheory works much like a partitioned name space
- Microtheories may overlap or be independent

25

Using Contexts

- Assertions are true within a given context but not universally
  - the rules behind dining in restaurants differ from those of dining at home
- The context is specified in a statement
- A statement may be true in one context and false in another
  - for instance, an assumption that apples cost 30 cents may be false in the context of the depression-era US
- We might have to lift elements from one context into another
  - this provides a mechanism for reasoning about items in different contexts
  - when lifting items from one context to another, assumptions, vocabulary, axioms and other elements that differ must be resolved in the new context
- For example
  - a mother with a child will be expected to behave a certain way
  - but she would be expected to behave like anyone else in a grocery store
  - under exceptional situations, we lift behavior from the mother/child context to override the behavior in the grocery store
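One way to picture context-dependent truth and lifting is a store keyed by context name. This is a simplified sketch, not Cyc's microtheory mechanism. The class `KnowledgeBase`, the context names, and the assertion that apples cost 30 cents in a "ModernUS" context are assumptions made for illustration. Here lifting simply overrides the target's value, whereas the slide notes that differing assumptions and vocabulary would have to be resolved.

```python
class KnowledgeBase:
    """Assertions are stored per context; nothing is treated as universally true."""

    def __init__(self):
        self.contexts = {}

    def assert_in(self, context, statement, value=True):
        """Record that statement has the given truth value within context."""
        self.contexts.setdefault(context, {})[statement] = value

    def holds(self, context, statement):
        """Return True/False if the context says so, or None if it is silent."""
        return self.contexts.get(context, {}).get(statement)

    def lift(self, source, target, statement):
        """Copy one assertion from source into target, overriding any local value."""
        value = self.holds(source, statement)
        if value is None:
            raise KeyError(f"{statement!r} is not asserted in {source!r}")
        self.assert_in(target, statement, value)

kb = KnowledgeBase()
kb.assert_in("ModernUS", "apples cost 30 cents", True)          # hypothetical
kb.assert_in("DepressionEraUS", "apples cost 30 cents", False)

print(kb.holds("ModernUS", "apples cost 30 cents"))
print(kb.holds("DepressionEraUS", "apples cost 30 cents"))
```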

26

What Does Cyc Know?

27

Some of Cyc's Entries

Thing is the "universal collection": the collection which, by definition, contains everything there is. Every individual object, every other collection. Everything that is represented in the Knowledge Base ("KB") and everything that could be represented in the KB. Cyc is designed to support representing any imaginable concept in a form that is immediately compatible with all other representations in the KB, and directly usable by computer software.

Spatial Things have a spatial extent or location relative to some other Spatial Thing or in some embedding space. Note that to say that an entity is a member of this collection is to remain agnostic about two issues. First, a Spatial Thing may be partially tangible (e.g. Texas-State) or wholly intangible (e.g. the Arctic Circle or a line mentioned in a geometric theorem). Second, although we do insist on location relative to another spatial thing or in some embedding space, a Spatial Thing might or might not be located in the actual physical universe. It is far from clear that all Spatial Things are so located: an ideal platonic circle or a trajectory through the phase space of some physical system (e.g.) might not be.

28

An organization is a group whose group-members are intelligent agents. In each organization, certain relationships and obligations exist between the members of the organization, or between the organization and its members. Organizations include both informal and legally constituted organizations. Each organization can undertake projects, enter into agreements, own property, and do other tasks characteristic of agents; consequently, an organization is a kind of agent. Notable organizations include legal government organization, commercial organization, and geopolitical entity.

Social Behavior, in Cyc, is made up of social occurrences. A social occurrence is an action in which two or more agents take part. In many cases, social occurrences involve communication among the participating agents. Some social occurrences have very elaborate role structures (e.g. a typical lawsuit), while others have fairly simple role structures (e.g. greeting a colleague at work).

29

Part of Cyc's Ontology

30

Example Cyc Code

- Predicates
  - (objectHasColor Rover TanColor)
  - (memberStatusOfOrganization Norway Nato FoundingMember)
- Defining a predicate
  - (isa residesInDwelling BinaryPredicate)
  - (arg1Isa residesInDwelling Animal)
  - (arg2Isa residesInDwelling ShelterConstruction)
- Functions
  - (BorderBetweenFn Sweden Norway) returns T or F
  - (GroupFn Agent) returns the group storing Agent
- Using a quantifier
  - (forAll ?X (implies (owns Fred ?X) (objectFoundInLocation ?X FredsHouse)))
  - (implies (isa ?A Animal) (thereExists ?M (and (mother ?A ?M) (isa ?M FemaleAnimal))))

31

Some Examples of Cyc Inferences

- You have to be awake to eat
- You can usually see people's noses but not their hearts
- You cannot remember events that have not yet happened
- If you cut a lump of peanut butter in half, each half is also a lump of peanut butter, but if you cut a table in half, neither half is a table
- If you are carrying a container that's open on one side, you should carry it with the open end up
- Vampires don't exist (but one microtheory states that Dracula is a vampire)
- The U.S.A. is a big country
- When people die, they stay dead

32

Cyc Today and Tomorrow

- Cyc exists in two formats, Cyc as we have discussed, and OpenCyc
  - OpenCyc is a public domain portion of Cyc that has been debugged
  - It consists of about 300,000 items rather than the 5 million of Cyc
- Cyc is currently used as a platform on which larger applications are constructed, where applications can fall back on the common sense and ontological knowledge of Cyc
- This is being used in a variety of situations including
  - military software and national security (see for instance http://www.cyc.com/doc/white_papers/TKB-IA2005.pdf)
  - network security (see http://www.cyc.com/doc/white_papers/IAAI-05-CycSecure.pdf)
  - in support of the semantic web and natural language understanding (for instance, see http://www.cyc.com/doc/white_papers/FLAIRS06-ApplicationOfCycToWordSenseDisambiguation.pdf)
- Cyc contains a learning environment that allows users to help it add knowledge and refine knowledge
  - go to http://game.cyc.com/
- Beyond this, Cyc is attempting to learn new information by examining web pages and capturing the knowledge in its ontology

33

Another Ontology: WordNet

- WordNet, like Cyc, is a massive listing of terms that encode general worldly knowledge
- In the case of WordNet, all knowledge pertains to English words
  - definitions
  - synonyms
  - hypernyms and hyponyms
- The knowledge is in a natural language form rather than a machine understandable form
- But like Cyc, WordNet is intended to be used by other software (agents); however, what they can use WordNet for is limited
  - E.g., seeing how related two words are by following and counting synonym links
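Counting synonym links between two words amounts to a shortest-path search. The sketch below uses a breadth-first search over a tiny, hand-made link table. The table contents and the function name `link_distance` are hypothetical, and real WordNet data would be loaded from its own database rather than written inline.

```python
from collections import deque

# Hypothetical synonym links for illustration only.
SYNONYMS = {
    "car": {"auto", "automobile"},
    "auto": {"car"},
    "automobile": {"car", "motorcar"},
    "motorcar": {"automobile"},
    "banana": set(),
}

def link_distance(start, goal, links=SYNONYMS):
    """Breadth-first search: number of links on the shortest path, or None."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        word, dist = queue.popleft()
        if word == goal:
            return dist
        for neighbor in links.get(word, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None  # the two words are not connected

print(link_distance("auto", "motorcar"))
print(link_distance("car", "banana"))
```

A smaller distance suggests more closely related words; a result of `None` means no chain of links connects them in this table.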

34

And Another: MikroKosmos

- Processes Spanish text dealing with mergers and acquisitions of companies
- The ontology covers
  - domain knowledge
  - worldly knowledge that might be applicable to the domain
  - language-specific knowledge and NLU-related knowledge

35

OWL Ontology

- One of the leading competitors to Cyc
- OWL is an attempt at an ontology outside of the commonsense domain
  - So there are fewer upper ontology commitments
  - meaning fewer primitives than in CYC
- OWL is based on XML and RDF (Resource Description Framework) syntax
- OWL comes in three versions, OWL Full, OWL DL and OWL Lite, depending on your needs
  - We will only consider OWL Full
- The basic idea behind an OWL ontology is that you define class/subclass relationships, instances of classes, and class/instance properties
  - The code that uses the ontology will implement how to reason over the classes

36

OWL Class Definitions

- You can define a class using one of six methods
  - A new class definition with a specific URI
  - An exhaustive enumeration of specific instances that make up the class population
  - Restrictions on a previously defined class (thus, you narrow a prior class by restricting the available elements)
    - restrictions can be based on values or cardinality
  - Taking previously defined classes and forming their union or intersection
  - Taking a previously defined class and complementing it (everything not in that class is in this class)
  - for the latter five cases, the class can be an anonymous class (without name or URI)
- OWL has two predefined classes to start with, owl:Thing and owl:Nothing

37

Some Example Code

- A class that is the intersection of the two classes that contain Tosca, Salome and Turandot, Tosca (rdf:parseType="Collection")
- The class of continents defined by enumeration: rdf:about="NorthAmerica", rdf:about="SouthAmerica", rdf:about="Australia", rdf:about="Antarctica"
- The class of everything that is not meat: rdf:about="Meat"
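The set-style class constructors (enumeration, intersection, union, complement) can be pictured with ordinary Python sets. This is an analogy, not OWL semantics: OWL reasons under an open-world assumption, while the complement below is taken relative to a small, hypothetical universe of individuals. The individuals other than the opera names are assumptions.

```python
# Hypothetical closed universe of individuals, for illustration only.
UNIVERSE = frozenset({"Tosca", "Salome", "Turandot", "Beef", "Pork", "Carrot"})

# Classes defined by enumerating their instances.
operas_a = frozenset({"Tosca", "Salome"})
operas_b = frozenset({"Turandot", "Tosca"})
meat = frozenset({"Beef", "Pork"})

intersection = operas_a & operas_b   # individuals in both classes
union = operas_a | operas_b          # individuals in either class
not_meat = UNIVERSE - meat           # complement relative to UNIVERSE

print(sorted(intersection))
print(sorted(union))
print(sorted(not_meat))
```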

38

Defining Classes Using Restrictions

- allValuesFrom: like "for all" in logic, every value of the property must come from the given class
  - rdf:resource="hasParent" / rdf:resource="Human" /
  - this restriction forms the class of individuals all of whose parents are human
- someValuesFrom: to be in this class, the instance has to have at least one value of the property from the given class
- hasValue: same as someValuesFrom but the value has to be semantically equivalent
  - this might be used to define a specific instance
- maxCardinality, minCardinality: to define valid ranges for the number of values a property may have
  - rdf:resource="hasParent" / rdf:datatype="xsd:nonNegativeInteger" 2
  - this defines the class of entities that have no more than 2 parents, where the limit 2 is given as a non-negative integer data type
- cardinality is used to define a specific expected value
  - that is, both minimum and maximum
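The restriction types can be read as membership tests on an individual's property values. The sketch below checks them against a small closed set of facts, which differs from an OWL reasoner working under the open-world assumption. The function names, the individuals, and the data layout are all assumptions for illustration.

```python
def all_values_from(individual, prop, cls, facts, members):
    """Every value of prop must belong to cls (vacuously true with no values)."""
    return all(v in members[cls] for v in facts.get((individual, prop), ()))

def some_values_from(individual, prop, cls, facts, members):
    """At least one value of prop must belong to cls."""
    return any(v in members[cls] for v in facts.get((individual, prop), ()))

def max_cardinality(individual, prop, limit, facts):
    """The individual may have at most `limit` values for prop."""
    return len(facts.get((individual, prop), ())) <= limit

# Hypothetical facts: (individual, property) -> set of values.
FACTS = {
    ("Ann", "hasParent"): {"Bob", "Carol"},
    ("Rex", "hasParent"): {"Fido"},
}
MEMBERS = {"Human": {"Ann", "Bob", "Carol"}}

print(all_values_from("Ann", "hasParent", "Human", FACTS, MEMBERS))
print(all_values_from("Rex", "hasParent", "Human", FACTS, MEMBERS))
print(max_cardinality("Ann", "hasParent", 2, FACTS))
```

The vacuous case is worth noticing: an individual with no recorded parents satisfies `all_values_from` but not `some_values_from`.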

39

More Class Definitions

- Aside from defining a class, you can define classes to have certain relationships with other classes
  - subClassOf
  - equivalentClass: to equate two classes as being equivalent (although not necessarily equal)
  - disjointWith: mutual exclusiveness
- Properties (attributes) can have their own specifiers
  - ObjectProperty
  - DatatypeProperty
  - InverseFunctionalProperty
  - subPropertyOf
  - equivalentProperty
  - inverseOf
  - TransitiveProperty
    - subRegionOf (an example of a transitive property)
  - SymmetricProperty
- And instances can have their own specifiers
  - sameAs
  - differentFrom
  - AllDifferent

40

More OWL Examples

- rdf:ID="hasMother", rdf:resource="hasParent"
- rdf:resource="owl:FunctionalProperty"

41

Let's Develop an Example

- We will develop a food and wine ontology
  - based on the web site http://protege.stanford.edu/publications/ontology_development/ontology101-noy-mcguinness.html
- First, determine the domain and scope
  - types of wine, main types of food, which wines go with which foods
- Second, see if there is an ontology available so that we can reuse (some of) the knowledge
  - also see if the knowledge is available in an easy to access or use form, for instance, go to www.wines.com to get a list of wines and their properties
- Enumerate the important terms of the domain
  - for wines and food, we might start with a list like wine, grape, winery, location, (wine) color, body, flavor, sugar content, food, white meat, red meat
- Now define the classes and develop a class hierarchy from the terms
  - top-down versus bottom-up approach, or some combination
- Define class properties
  - note that some of the terms earlier are actually properties such as flavor, sugar content and location; color might be a class or a property
  - define the facets of the properties (legal values, range of values, how values can be derived/computed or found)
- Create class instances

42

Example Continued