Web Scraping Using Nutch and Solr 2/3 - PowerPoint PPT Presentation

Title:

Web Scraping Using Nutch and Solr 2/3

Description:

A short presentation (part 2 of 3) describing the use of the open source tools Nutch and Solr to crawl the web and process the data.


1

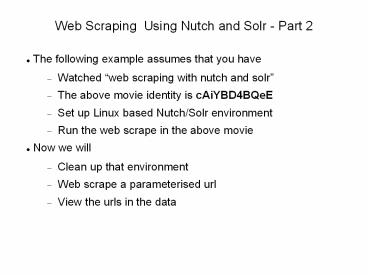

Web Scraping Using Nutch and Solr - Part 2

- The following example assumes that you have:

- Watched the video "Web scraping with Nutch and Solr"

- The ID of that video is cAiYBD4BQeE

- Set up a Linux-based Nutch/Solr environment

- Run the web scrape shown in that video

- Now we will:

- Clean up that environment

- Web scrape a parameterised URL

- View the URLs in the data

2

Empty Nutch Database

- Clean up the Nutch crawl database

- Previously we used apache-nutch-1.6/nutch_start.sh

- This passed the `-dir crawl` option

- which created the apache-nutch-1.6/crawl directory

- This directory contains our Nutch data

- Clean it as follows:

- `cd apache-nutch-1.6`

- `rm -rf crawl`

- Only do this because it contained dummy data!

- The next run of the script will create the directory again
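The clean-up step can also be scripted so that it can only ever remove the crawl directory and nothing else. This is a minimal Python sketch, assuming the apache-nutch-1.6/crawl layout described on this slide; the function `reset_crawl_dir` is a hypothetical helper, not part of Nutch.

```python
import shutil
from pathlib import Path


def reset_crawl_dir(nutch_home: str, crawl_name: str = "crawl") -> bool:
    """Delete <nutch_home>/<crawl_name>, the crawl data created by the -dir option.

    Hypothetical helper. Returns True if a directory was removed and False if
    there was nothing to remove. Only use it when the crawl data is disposable.
    """
    home = Path(nutch_home).resolve()
    crawl_dir = (home / crawl_name).resolve()
    # Refuse anything that is not a direct child of the Nutch home, so an
    # argument such as "" or ".." can never delete unrelated files.
    if crawl_dir == home or crawl_dir.parent != home:
        raise ValueError(f"refusing to delete {crawl_dir}")
    if not crawl_dir.is_dir():
        return False
    shutil.rmtree(crawl_dir)
    return True
```

Calling `reset_crawl_dir("apache-nutch-1.6")` from the directory that holds the Nutch install has the same effect as the two commands above, and returns False on a second call because the directory is already gone.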

3

Empty Solr Database

- Clean the Solr database via a URL

- Bookmark this URL

- Only use it if you need to empty your data

- Run the following (with the Solr server running):

- `curl "http://localhost:8983/solr/update?commit=true" -d '<delete><query>*:*</query></delete>'`
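The same delete-by-query request can be built with the Python standard library. This sketch assumes Solr listens on localhost:8983 with its update handler at /solr/update, as in the command on this slide; it sets a `Content-Type: text/xml` header explicitly so the body is read as an XML update message. The helper names are hypothetical.

```python
import urllib.request

# The query *:* matches every document, so this deletes the whole index.
DELETE_ALL = b"<delete><query>*:*</query></delete>"


def build_delete_all_request(solr_base: str = "http://localhost:8983/solr") -> urllib.request.Request:
    """Build (but do not send) a POST that deletes all documents and commits."""
    return urllib.request.Request(
        url=f"{solr_base.rstrip('/')}/update?commit=true",
        data=DELETE_ALL,
        headers={"Content-Type": "text/xml; charset=utf-8"},
        method="POST",
    )


def empty_solr(solr_base: str = "http://localhost:8983/solr") -> int:
    """Send the delete-all request and return the HTTP status code."""
    request = build_delete_all_request(solr_base)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

Keeping request construction separate from sending makes it easy to inspect what would be sent; call `empty_solr()` only when you really want an empty index.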

4

Set up Nutch

- Now we will do something more complex:

- Web scrape a URL that has parameters, i.e.

- `http://<site>/<function>?var1=val1&var2=val2`

- This web scrape will:

- Contain extra URL characters such as '?'

- Need a greater search depth

- Need better URL filtering

- Remember that you need to get permission to scrape a third-party website
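To see which extra characters a parameterised URL brings in, this sketch builds a URL of the form `http://<site>/<function>?var1=val1&var2=val2` with Python's `urllib.parse` and lists the characters that the skip rule in Nutch's default regex-urlfilter.txt, `-[?*!@=]`, reacts to. The site, function and parameter values are the placeholder example used in these slides, and the helper names are hypothetical.

```python
import re
from urllib.parse import parse_qs, urlencode, urlsplit

# Skip rule from Nutch's default conf/regex-urlfilter.txt: a URL containing
# any of these characters is rejected.
DEFAULT_SKIP = re.compile(r"[?*!@=]")


def build_url(site: str, function: str, params: dict) -> str:
    """Build http://<site>/<function>?var1=val1&var2=val2 with encoded values."""
    return f"http://{site}/{function}?{urlencode(params)}"


def skipped_chars(url: str) -> list:
    """Characters in url that the default skip rule would match, in order."""
    return DEFAULT_SKIP.findall(url)


url = build_url("somesite.co.nz", "Search", {"DateRange": 7, "industry": 62})
print(url)
print(skipped_chars(url))
print(parse_qs(urlsplit(url).query))
```

The '?' and '=' characters found here are exactly why a parameterised crawl needs a different URL filter from the default one.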

5

Nutch Configuration

- Change the seed file for Nutch:

- apache-nutch-1.6/urls/seed.txt

- In this instance I will use a URL of the form

- `http://somesite.co.nz/Search?DateRange=7&industry=62` (this is not a real URL, just an example)

- Change the entries in conf/regex-urlfilter.txt, i.e.

- `# skip URLs containing certain characters`

- `-[*!@]`

- `# accept anything else`

- `+^http://([a-z0-9]*\.)*somesite.co.nz\/Search`

- This will only accept somesite.co.nz Search URLs
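Nutch reads regex-urlfilter.txt from top to bottom: the first pattern that matches a URL decides (`+` accept, `-` reject), and a URL that matches no pattern is ignored. The Python sketch below mimics that behaviour so a filter can be checked against sample URLs before starting a crawl. It is an approximation using Python's `re.search`, not Nutch's own Java code, and the rules in `RULES_TEXT` are assumed stand-ins for this slide's skip and accept entries (the exact skip character list is an assumption).

```python
import re

# Assumed rules: skip awkward characters ('?' and '=' are no longer listed),
# then accept only Search pages on the example site.
RULES_TEXT = r"""
# skip URLs containing certain characters
-[*!@]
# accept anything else
+^http://([a-z0-9]*\.)*somesite.co.nz\/Search
"""


def parse_rules(text: str) -> list:
    """Turn regex-urlfilter style lines into (accept, compiled_pattern) pairs."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are ignored
        sign, pattern = line[0], line[1:]
        if sign not in "+-":
            raise ValueError(f"rule must start with '+' or '-': {line!r}")
        rules.append((sign == "+", re.compile(pattern)))
    return rules


def accepts(url: str, rules: list) -> bool:
    """The first matching rule wins; a URL matching no rule is rejected."""
    for accept, pattern in rules:
        if pattern.search(url):
            return accept
    return False


rules = parse_rules(RULES_TEXT)
for url in (
    "http://somesite.co.nz/Search?DateRange=7&industry=62",
    "http://www.somesite.co.nz/Search?industry=62",
    "http://somesite.co.nz/About",
    "http://othersite.com/Search",
):
    print(accepts(url, rules), url)
```

Swapping the skip rule back to Nutch's default `-[?*!@=]` makes the seed URL itself fail the filter, which is a quick way to see why the default configuration cannot crawl parameterised URLs.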

6

Run Nutch

- Now run Nutch using the start script:

- `cd apache-nutch-1.6`

- `./nutch_start.bash`

- Monitor for errors in the Solr admin log window

- The Nutch crawl should end with

- `crawl finished: crawl`
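If you prefer to check the end of the crawl from a script rather than by eye, a small Python wrapper can run the start script and look for the final `crawl finished: crawl` line. This is a sketch assuming the script is ./nutch_start.bash inside apache-nutch-1.6 and that Nutch prints its progress to standard output or standard error; `crawl_finished` and `run_crawl` are hypothetical helpers.

```python
import subprocess


def crawl_finished(output: str, crawl_dir: str = "crawl") -> bool:
    """True if any output line ends with 'crawl finished: <crawl_dir>'.

    Matching on the line ending tolerates a timestamp or log-level prefix.
    """
    expected = f"crawl finished: {crawl_dir}"
    return any(line.strip().endswith(expected) for line in output.splitlines())


def run_crawl(nutch_home: str = "apache-nutch-1.6", script: str = "./nutch_start.bash") -> bool:
    """Run the start script inside nutch_home and report whether the crawl finished."""
    result = subprocess.run(
        [script],
        cwd=nutch_home,  # a relative script path is resolved against cwd on POSIX
        capture_output=True,
        text=True,
    )
    # Check both streams, since log lines may go to either one.
    output = result.stdout + result.stderr
    return result.returncode == 0 and crawl_finished(output)
```

This only confirms that the crawl reached its end; errors reported by Solr while indexing still need to be checked in the Solr admin log window.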

7

Checking Data

- Data should have been indexed in Solr

- In the Solr Admin window:

- Set 'Core Selector' to collection1

- Click 'Query'

- In the Query window, set the fl field to url

- Click 'Execute Query'

- The result (next slide) shows the filtered list of URLs in Solr
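The same query can be sent over HTTP instead of through the admin window. This sketch assumes Solr's select handler for collection1 at http://localhost:8983/solr/collection1/select and Solr's JSON response format (`wt=json`, documents under `response.docs`); asking for `fl=url` corresponds to setting the fl field to url in the Query window. The helper names and the default `rows` value are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_query_url(base: str = "http://localhost:8983/solr",
                    core: str = "collection1", rows: int = 100) -> str:
    """Select URL for q=*:* that returns only the url field as JSON."""
    params = {"q": "*:*", "fl": "url", "wt": "json", "rows": rows}
    return f"{base.rstrip('/')}/{core}/select?{urlencode(params)}"


def extract_urls(response_text: str) -> list:
    """Collect the url field from a Solr JSON response, skipping docs without it."""
    docs = json.loads(response_text)["response"]["docs"]
    return [doc["url"] for doc in docs if "url" in doc]


def fetch_urls(**kwargs) -> list:
    """Run the query against a live Solr server and return the indexed URLs."""
    with urllib.request.urlopen(build_query_url(**kwargs), timeout=30) as response:
        return extract_urls(response.read().decode("utf-8"))
```

With the URL filter above in place, every URL returned by `fetch_urls()` should be a somesite.co.nz Search URL; anything else points to a filter problem.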

8

Checking Data

9

Results

- Congratulations, you have completed your second crawl:

- With parameterised URLs

- With more complex URL filtering

- With a Solr query search

10

Contact Us

- Feel free to contact us at

- www.semtech-solutions.co.nz

- info@semtech-solutions.co.nz

- We offer IT project consultancy

- We are happy to hear about your problems

- You pay only for the hours you need to solve your problems