Parallelization of Cumulative Reaction Probabilities (CRP)

Title:

Parallelization of Cumulative Reaction Probabilities (CRP)

Description:

Advanced Software for the Calculation of Thermochemistry, Kinetics, and Dynamics. Stephen Gray, Ron Shepard, Al Wagner, Mike Minkoff, Argonne National Laboratory

1

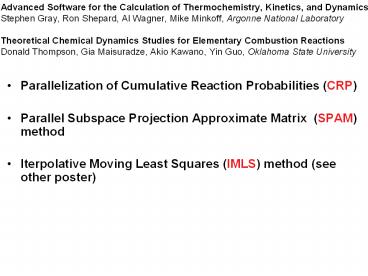

Advanced Software for the Calculation of Thermochemistry, Kinetics, and Dynamics
Stephen Gray, Ron Shepard, Al Wagner, Mike Minkoff, Argonne National Laboratory

Theoretical Chemical Dynamics Studies for Elementary Combustion Reactions
Donald Thompson, Gia Maisuradze, Akio Kawano, Yin Guo, Oklahoma State University

- Parallelization of Cumulative Reaction Probabilities (CRP)
- Parallel Subspace Projection Approximate Matrix (SPAM) method
- Interpolative Moving Least Squares (IMLS) method (see other poster)

2

- CRP (Stephen Gray, Mike Minkoff, and Al Wagner)
- computationally intensive core of reaction rate constants
- mathematical kernel (all matrices are sparse with some structure)
- - method 1: iterative eigensolve (embedded iterative linear solves)
- - clever preconditioning important
- - portability based on ANL PETSc library of kernels
- - method 2: Chebyshev propagation (=> matrix-vector multiplies)
- - novel finite difference representation (helps parallelize)
- programming issues
- - parallelization
- - exploiting data structure (i.e., preconditioning)

3

- SPAM (Ron Shepard and Mike Minkoff)
- novel iterative method to solve general matrix equations
- - eigensolve
- - linear solve
- - nonlinear solve
- applications are widespread
- - in chemistry: CRP, electronic structure (SCF, MRSDCI, ...)
- mathematical kernel
- - related to Davidson, multigrid, and conjugate gradient methods
- - subspace reduction (requiring usual matrix-vector multiplies)
- - projection operator decomposition of matrix-vector product
- - substitution of user-supplied approximate matrix in computationally intensive part of decomposition
- - sequence of approx. matrices => multilevel method
- programming issues
- - generalization of approach (only done for eigensolve)
- - incorporation into libraries (connected to TOPS project part at ANL)
- - test of efficacy in realistic applications (e.g., CRP)

4

Parallelization of Cumulative Reaction Probabilities (CRP)
Stephen Gray, Mike Minkoff, and Al Wagner (Argonne National Laboratory)

- Cumulative Reaction Probabilities (CRP)
- - computational core of reaction rate constants
- - exact computation is computationally intensive
- - approximate computation underlies all major reaction rate theories in use
- => an efficient exact CRP code will
- - give exact rates (if the computed forces are accurate)
- - calibrate ubiquitous approximate rate methods
- Two methods
- - Time Independent (Miller and Manthe, 1994, and others)
- - Time Dependent (Zhang and Light, 1996, and others)
- Highly parallel approaches to both methods being pursued

5

Parallelization of Cumulative Reaction Probabilities: Time Independent Approach

- Mike Minkoff and Al Wagner
- $N(E) = \sum_k p_k(E,J)$
- where $p_k(E,J)$ = eigenvalues of the probability operator
- $P(E) = 4\,\epsilon_r^{1/2}\,(H + i\epsilon - E)^{-1}\,\epsilon_p\,(H - i\epsilon - E)^{-1}\,\epsilon_r^{1/2}$
- where $i\epsilon_x$ = absorbing diagonal (imaginary) potentials
- $H$ = Hamiltonian (differential operator)
- => for realistic problems
- - size $10^5 \times 10^5$ or much larger
- - number of eigenvalues < 100
- iterative approach
- - macrocycle of iteration for eigenvalue
- - microcycle of iteration for action of Green's function $(H - i\epsilon - E)^{-1}$ => linear solve
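As a rough illustration of the formulas on this slide, the sketch below evaluates $P(E)$ and $N(E)$ for a tiny one-dimensional model using dense NumPy linear algebra. Everything specific here is an assumption made for the example and is not taken from the slides: the units ($\hbar = 1$, unit mass), the Eckart barrier parameters, the 3-point finite-difference kinetic energy, the quadratic absorbing ramps, and the function name `crp_1d`. Real problems are far too large for a dense inverse, which is why the slide describes iterative eigensolves with embedded linear solves.

```python
import numpy as np

def crp_1d(energy, n=241, half_width=12.0, v0=1.0, a=1.0, mass=1.0, eps_max=2.0):
    """Return N(E) and the eigenvalues p_k of P(E) for a 1D barrier (hbar = 1)."""
    x = np.linspace(-half_width, half_width, n)
    dx = x[1] - x[0]

    # Hamiltonian: 3-point finite-difference kinetic energy plus Eckart barrier.
    t = 1.0 / (2.0 * mass * dx**2)
    potential = v0 / np.cosh(x / a) ** 2
    H = np.diag(2.0 * t + potential) - t * np.eye(n, k=1) - t * np.eye(n, k=-1)

    # Quadratic absorbing ramps in the outer half of each side (assumed form).
    ramp = np.clip((np.abs(x) - 0.5 * half_width) / (0.5 * half_width), 0.0, None) ** 2
    eps_r = np.where(x < 0.0, eps_max * ramp, 0.0)  # reactant side
    eps_p = np.where(x > 0.0, eps_max * ramp, 0.0)  # product side
    eps = np.diag(eps_r + eps_p)

    identity = np.eye(n)
    g_plus = np.linalg.inv(H + 1j * eps - energy * identity)
    g_minus = np.linalg.inv(H - 1j * eps - energy * identity)

    # P(E) = 4 eps_r^{1/2} (H + i eps - E)^{-1} eps_p (H - i eps - E)^{-1} eps_r^{1/2}
    sqrt_r = np.sqrt(eps_r)
    left = sqrt_r[:, None] * g_plus
    right = (eps_p[:, None] * g_minus) * sqrt_r[None, :]
    P = 4.0 * (left @ right)
    P = 0.5 * (P + P.conj().T)  # drop the rounding-level non-Hermitian part

    p_k = np.linalg.eigvalsh(P)
    return float(p_k.sum()), p_k

n_low, _ = crp_1d(0.3)   # energy well below the barrier top (v0 = 1)
n_high, _ = crp_1d(2.0)  # energy well above the barrier top
```

In one dimension only a single eigenvalue of $P(E)$ is significant, so $N(E)$ behaves like a transmission probability: small below the barrier and close to one above it.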

6

Parallelization of Cumulative Reaction Probabilities: Time Independent Approach

Code built on PETSc (http://www.mcs.anl.gov/petsc) -> TOPS

- PETSc: data structure, GMRES linear solve, preconditioners
- USER: Lanczos method for eigensolve
- Future: user-supplied preconditioners

7

Parallelization of Cumulative Reaction Probabilities: Time Independent Approach

- Performance
- model problem
- - optional number of dimensions
- - Eckart potential along reaction coordinate
- - parabolic potential perpendicular to reaction coordinate
- - DVR representation of H
- Algorithm options
- - Diagonal preconditioner
- Computers
- - NERSC SP
- - others include SGI Power Challenge, Cray T3E

[Figure: performance vs. processors for model with increasing dimensions]

8

Parallelization of Cumulative Reaction Probabilities: Time Independent Approach

Good performance, bad scaling

- Future Preconditioners
- - Banded
- - SPAM
- - Sparse optimal similarity transforms (Poirier)
- IF Q THEN
- - find optimal Q such that $Q H Q^T$ is optimally block diagonal -> fat band preconditioner, SPAM preconditioner
- can SPAM get scalable performance?

9

Parallelization of Cumulative Reaction Probabilities: Time Dependent Approach

Dimitry Medvedev and Stephen Gray

N(E) can be found from time dependent transition state wavepackets (TSWP) (see Zhang and Light, J. Chem. Phys. 104, 6184 (1996)):

$N(E) = \sum_{i=1}^{M} N_i(E)$, where $N_i(E) \propto \mathrm{Im}\,\langle \phi_i(E) | F | \phi_i(E) \rangle$

- where $F$ = differential flux operator
- $\phi_i(E) \propto \int \exp(iEt)\,\psi_i(x,t)\,dt$
- where the TSWP $\psi_i(x,t)$ comes from $i\,\partial\psi_i(x,t)/\partial t = H\,\psi_i(x,t)$
- where $H$ is the Schrödinger equation operator

Work

- M different TSWPs (each independent of the others)
- each TSWP
- - propagated over time
- - $N_t$ time steps
- - each time step propagation dominated by the $H\psi_i$ multiply
- => CPU work ~ $M \times N_t \times$ (work of $H\psi_i$ multiply)

10

Parallelization of Cumulative Reaction Probabilities: Time Dependent Approach

- Solution Strategy
- Real Wavepackets (TS-RWP)
- (K. M. Forsythe and S. K. Gray, J. Chem. Phys. 112, 2623 (2000))
- - half the storage, twice as fast relative to complex wavepackets
- - Chebyshev iteration for propagation
- - $H\psi_i$ work -> action of second order differential operators
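The Chebyshev iteration mentioned on this slide can be sketched as a three-term recursion on real vectors, $q_{k+1} = 2 H_s q_k - q_{k-1}$, where $H_s$ is the Hamiltonian scaled so that its spectrum lies in $[-1, 1]$. The sketch below is a simplified illustration only: it assumes a purely real initial wavepacket with $q_1 = H_s q_0$, leaves out the absorbing-boundary damping a real calculation needs, and uses a random symmetric matrix as a stand-in Hamiltonian. The function names are hypothetical.

```python
import numpy as np

def scale_to_unit_interval(H):
    """Return H_s = a_s*H + b_s*I with spectrum inside [-1, 1]."""
    evals = np.linalg.eigvalsh(H)  # sketch only: large codes would estimate the bounds
    a_s = 2.0 / (evals[-1] - evals[0])
    b_s = -1.0 - a_s * evals[0]
    return a_s * H + b_s * np.eye(H.shape[0])

def chebyshev_steps(H_s, q0, n_steps):
    """Apply q_{k+1} = 2 H_s q_k - q_{k-1}, starting from q_1 = H_s q_0."""
    q_prev, q_curr = q0, H_s @ q0
    for _ in range(n_steps - 1):
        # One matrix-vector multiply per step, all in real arithmetic.
        q_prev, q_curr = q_curr, 2.0 * (H_s @ q_curr) - q_prev
    return q_curr

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 40))
H = 0.5 * (A + A.T)            # stand-in real symmetric Hamiltonian
H_s = scale_to_unit_interval(H)

# For an eigenvector with eigenvalue cos(theta), step k yields cos(k*theta) times it.
evals, evecs = np.linalg.eigh(H_s)
theta = np.arccos(np.clip(evals[20], -1.0, 1.0))
q10 = chebyshev_steps(H_s, evecs[:, 20], 10)
```

Each step costs one $H_s q$ multiply on real data, which is where the storage and speed advantage over complex wavepackets quoted on the slide comes from.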

11

Parallelization of Cumulative Reaction Probabilities: Time Dependent Approach

- Solution strategy (more)
- Dispersion Fitted Finite Difference (DFFD)
- (S. K. Gray and E. M. Goldfield, J. Chem. Phys. 115, 8331 (2001))
- - finite difference evaluation of action of differential operators
- - optimized constants to reproduce dispersion relation (dispersion relates momentum to kinetic energy)
- - different optimized constants for selected propagation error $\epsilon$
- - parallelize via decomposition of $\psi_i$ in x
- - DFFD => fewer edge effects

[Figure: error for 3D H+H2 reaction probability vs. order of finite difference]
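To make the dispersion idea concrete, the sketch below computes the effective $k^2$ that a central finite-difference stencil for $d^2/dx^2$ returns when applied to a plane wave $e^{ikx}$, and compares it with the exact $k^2$. The weights used here are the standard Taylor-series central-difference coefficients, not the optimized DFFD constants, which are not given on the slide; the names `STENCILS` and `effective_k_squared` are made up for this example.

```python
import numpy as np

# Standard Taylor-series central-difference weights for d^2/dx^2,
# listed as [center, first neighbor, second neighbor, ...].
STENCILS = {
    2: [-2.0, 1.0],
    4: [-5.0 / 2.0, 4.0 / 3.0, -1.0 / 12.0],
    6: [-49.0 / 18.0, 3.0 / 2.0, -3.0 / 20.0, 1.0 / 90.0],
}

def effective_k_squared(order, k, dx):
    """k^2 seen by the stencil: applying it to exp(ikx) gives -k_eff^2 exp(ikx)."""
    c = STENCILS[order]
    total = c[0] + 2.0 * sum(c[m] * np.cos(m * k * dx) for m in range(1, len(c)))
    return -total / dx**2

# Dispersion error at a fairly coarse sampling, k*dx = 1.
k, dx = 1.0, 1.0
dispersion_error = {
    order: abs(effective_k_squared(order, k, dx) - k**2) for order in STENCILS
}
```

A dispersion-fitted scheme would instead choose the weights to keep this error below a target over the range of momenta that matters, rather than fixing them by Taylor expansion.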

12

Parallelization of Cumulative Reaction Probabilities: Time Dependent Approach

- Solution strategy (more)
- Trivial parallelization over M wavepackets
- Nontrivial parallelization for wavepacket propagation
- - propagation requires repeated actions of Hamiltonian matrix on a vector
- - DFFD allows for facile cross-node parallelization
- - mixed OpenMP/MPI parallel program for AB+CD chemical reaction dynamics
- - wavefunction distributed over multiprocessor nodes according to the value of one of the coordinates
- - one OpenMP thread performs internode communication
- - remaining OpenMP threads do local work, which dominates
- Future directions
- - parallelize over three radial degrees of freedom
- - develop distributable version(s) of code for different parallel environments
- - investigate more general (e.g., Cartesian) representations
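The coordinate decomposition described on this slide can be mimicked in a single process: split the wavefunction into blocks along one coordinate, give each block one "ghost" value from each neighbor, and apply the finite-difference stencil locally. The sketch below is only an emulation under stated assumptions: it uses no MPI or OpenMP, a 3-point stencil, and zero values outside the grid. The function names are hypothetical.

```python
import numpy as np

def apply_stencil(padded, dx):
    """3-point second derivative on the interior points of a padded block."""
    return (padded[:-2] - 2.0 * padded[1:-1] + padded[2:]) / dx**2

def distributed_second_derivative(psi, n_blocks, dx):
    """Emulate a block decomposition with one ghost point per block edge."""
    blocks = np.array_split(psi, n_blocks)
    pieces = []
    for b, local in enumerate(blocks):
        # "Communication": fetch one edge value from each neighboring block.
        left = blocks[b - 1][-1] if b > 0 else 0.0
        right = blocks[b + 1][0] if b < n_blocks - 1 else 0.0
        padded = np.concatenate(([left], local, [right]))
        pieces.append(apply_stencil(padded, dx))  # purely local work
    return np.concatenate(pieces)

x = np.linspace(-5.0, 5.0, 64)
dx = x[1] - x[0]
psi = np.exp(-x**2)

block_result = distributed_second_derivative(psi, n_blocks=4, dx=dx)
global_result = apply_stencil(np.pad(psi, 1), dx)  # same operation, no decomposition
```

The amount of data exchanged per block edge grows with the stencil half-width, while the local work grows with the block size, which is consistent with the slide's remark that local work dominates.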

13

Parallelization of Cumulative Reaction Probabilities: Time Dependent Approach

- Results
- OH+CO reaction
- - 6 dimensions
- - zero total ang. mom.
- Wavepacket propagated reaction probability
- RWP DFFD propagation technique
- grid/basis dimensions > $10^9$
- run at NERSC

14

Advanced Software for the Calculation of Thermochemistry, Kinetics, and Dynamics

Subspace Projected Approximate Matrix: SPAM
Ron Shepard and Mike Minkoff (Argonne National Laboratory)

- New iterative method for solving general matrix equations
- - Eigenvalue
- - Linear
- - Nonlinear
- Method
- - Based on subspace reduction, projection operators, decomposition, sequence of one or more approximate matrices
- Extensions
- - Demonstrated for symmetric real eigensolves
- - Demonstrations on linear and nonlinear equations planned
- Applications
- - Applicable to problems with convergent sequences of physical or numerical approximations
- - Parallelizable, multi-level library implementations via TOPS

15

Subspace Projected Approximate Matrix: SPAM Method

- Subspace iterative solution to eigenvalue, linear, and nonlinear problems
- e.g., eigenvalue problem $(H - \lambda_j)\,v_j = 0$
- Subspace iterative solutions have the form $v_j = X_n c_j$
- - where $X_n = \{x_1, x_2, \ldots, x_n\}$
- - $c_j$ is solved in a subspace: $H_n c_j = \lambda^n_j c_j$
- - where $H_n = (W_n)^T X_n$
- - where $W_n = H X_n$ <--- for $N \gg n$, where all the work is
- New $x_{n+1}$ vector from residual $r_{n+1} = (W_n - \lambda^n_j X_n)\,c_j$
- SPAM gives a more accurate or faster converging way to get $x_{n+1}$ in 3 steps
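The subspace quantities on this slide map directly onto a few lines of dense linear algebra. The sketch below performs one such step for a real symmetric matrix with an orthonormal trial basis: it forms $W_n = H X_n$, the projected matrix $H_n = (W_n)^T X_n$, solves the small eigenproblem, and builds the residual. The random test matrix, the basis built by QR, and the function name `subspace_step` are assumptions for illustration, not part of the SPAM code.

```python
import numpy as np

def subspace_step(H, X, j=0):
    """One subspace step: returns (Ritz value, Ritz vector, residual)."""
    W = H @ X                      # N x n product: where all the work is for N >> n
    H_n = W.T @ X                  # n x n projected matrix
    H_n = 0.5 * (H_n + H_n.T)      # symmetrize away rounding noise
    ritz_values, C = np.linalg.eigh(H_n)
    lam, c_j = ritz_values[j], C[:, j]
    residual = (W - lam * X) @ c_j # r = (W_n - lambda X_n) c_j
    return lam, X @ c_j, residual

rng = np.random.default_rng(1)
N, n = 200, 5
A = rng.standard_normal((N, N))
H = 0.5 * (A + A.T)                               # stand-in symmetric matrix
X, _ = np.linalg.qr(rng.standard_normal((N, n)))  # orthonormal trial vectors

lam, v, r = subspace_step(H, X)
```

The residual is orthogonal to the current subspace by construction, so it is a natural starting point for the next trial vector; Davidson-type methods precondition it before adding it to $X_n$.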

16

Subspace Projected Approximate Matrix: SPAM Method

Step 1: Assemble and apply projection operators on current vector

- $P_n x_{n+1} = X_n X_n^T x_{n+1}$
- $Q_n x_{n+1} = x_{n+1} - P_n x_{n+1}$
- where n trial vectors are already processed, n+1 trial vector desired

Step 2: Decomposition and approximation of $H x_{n+1}$

- Decompose $H x_{n+1} = (P_n + Q_n)\,H\,(P_n + Q_n)\,x_{n+1}$
- - where $(P_n H P_n + P_n H Q_n + Q_n H P_n)\,x_{n+1}$ => cheap subspace operations
- - $(Q_n H Q_n)\,x_{n+1}$ => expensive full space operation
- Approximate $(Q_n H Q_n)\,x_{n+1}$ by $(Q_n H_1 Q_n)\,x_{n+1}$, which is cheap to do

Step 3: Solve approximate subspace problem

- solution is $x_{n+1}$
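Steps 1 and 2 can be sketched as a single approximate matrix-vector product for a real symmetric $H$. The three terms involving $P_n$ are evaluated from the already stored $W_n = H X_n$, and only the $Q_n H Q_n$ term uses the cheap matrix $H_1$. The choice of $H_1$ as the diagonal of $H$, the random test data, and the function name `spam_matvec` are assumptions made for this example; the slides leave the approximate matrix to the user.

```python
import numpy as np

def spam_matvec(H1, X, W, x):
    """Approximate H @ x from W = H @ X (already stored) and a cheap matrix H1."""
    c = X.T @ x                    # Step 1: coefficients of P x
    q = x - X @ c                  # Step 1: Q x = x - P x
    # (P H P + Q H P) x = H P x = W c, and P H Q x = X (W^T q) for symmetric H.
    subspace_part = W @ c + X @ (W.T @ q)
    # Step 2: Q H Q x is replaced by Q H1 Q x.
    h1q = H1 @ q
    approx_part = h1q - X @ (X.T @ h1q)
    return subspace_part + approx_part

rng = np.random.default_rng(3)
N, n = 120, 6
A = rng.standard_normal((N, N))
H = 0.5 * (A + A.T)                               # stand-in exact matrix
X, _ = np.linalg.qr(rng.standard_normal((N, n)))  # orthonormal trial vectors
W = H @ X
H1 = np.diag(np.diag(H))                          # assumed cheap approximation

x = rng.standard_normal(N)
y_with_exact = spam_matvec(H, X, W, x)            # H1 = H recovers H @ x

x_inside = X @ rng.standard_normal(n)             # vector inside the subspace
y_inside = spam_matvec(H1, X, W, x_inside)        # exact, whatever H1 is
```

Because the approximation only enters through the part of the vector outside the current subspace, its effect shrinks as the subspace grows, which matches the later slide's statement that projection operators give convergence from any approximate matrix.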

17

Subspace Projected Approximate Matrix: SPAM Method

- SPAM properties
- projection operators => convergence from any approx. matrix
- multi-level SPAM with dynamic tolerances
- - $Q_n H Q_n$ approximated by $Q^{(1)}_n H^{(1)} Q^{(1)}_n$
- - $Q^{(1)}_n H^{(1)} Q^{(1)}_n$ approximated by $Q^{(2)}_n H^{(2)} Q^{(2)}_n$
- - $Q^{(2)}_n H^{(2)} Q^{(2)}_n$ approximated by $Q^{(3)}_n H^{(3)} Q^{(3)}_n$
- tens of lines of code added to existing iterative subspace eigensolvers
- highly parallelizable
- applicable to any subspace problem

18

Subspace Projected Approximate Matrix: SPAM Method

- SPAM properties (broad view)
- Relation to other subspace methods (e.g., Davidson)
- - More flexible (sequence of approx. matrices; no sequence => SPAM = Davidson)
- - If iteration tolerances are correct, always no worse than Davidson
- Relation to multigrid methods
- - SPAM sequences of approx. matrices correspond to multigrid sequences of approx. grids
- - Subspace method => solution vector composed of multiple vectors
- - Multigrid methods => single solution vector that is updated
- Relation to preconditioned conjugate gradient (PCG) methods
- - SPAM has multiple vectors and approximations always improved by projection
- - PCG has a fixed single preconditioner and single vector improved by projection
- Deep injection of physical insight into numerics
- - Application experts can design physical approximation sequences
- - SPAM maps approximation sequences onto numerics sequences
- - Projection operators continually improve the approximations

19

Subspace Projected Approximate Matrix: SPAM Extensions

- Mathematical Extensions
- Eigensolves
- - Symmetric real or complex-Hermitian
- - Done with many applications (http://chemistry.anl.gov/chem-dyn/Section-C-RonShepard.htm)
- - Code available (ftp: ftp.tcg.anl.gov/pub/spam/README, spam.tar.Z)
- - Generalized symmetric planned
- - General complex non-Hermitian planned
- Linear solves planned
- Nonlinear solves planned
- Formal connection to multigrid and conjugate gradient methods in progress

20

Subspace Projected Approximate Matrix: SPAM Applications

- Broad view
- - Any iterative problem solved in a subspace with a user-supplied cheap approximate matrix (TOPS connection)
- What is a cheap approximate matrix?
- - Sparser
- - Lower-order expansion of matrix elements
- - Coarser underlying grid
- - Lower-order difference equation
- - Tensor-product approximation
- - Operator approximation
- - More highly parallelized approximation
- - Lower precision
- - Smaller underlying basis
- Terascale Optimal PDE Simulations (TOPS) connection
- - Basic parallelized multi-level code with user-supplied approx. matrix
- - Template approximate matrices stored in library

21

Subspace Projected Approximate Matrix: SPAM Applications

- Model Applications
- Perturbed Tensor Product Model (4x4 tensor products + tridiag. perturbation)
- - tensor product approximation
- - 80 to 90% savings of SPAM over Davidson
- Truncated Operator Expansion Model
- - 50 to 75% savings of SPAM over Davidson
- Two Chemistry Applications
- Rational-Function Direct-SCF
- - tensor product approximation to Hessian matrix using Fock matrix elements
- - 10 to 70% savings of SPAM over Davidson
- MRSDCI
- - $B_k$ approximation
- - 10 to 20% savings of SPAM over Davidson

[Figure legend: approx. matrix, exact matrix]

22

Subspace Projected Approximate Matrix: SPAM Applications

- Another Chemistry Application (in progress)
- Vibrational Wave Function Computation
- - Based on the TetraVib program of H-G. Yu and J. Muckerman, J. Molec. Spec. 214, 11-20 (2002)
- - Six choices of internal coordinates (3 angles and 3 radial coordinates)
- - Angular degrees of freedom expanded in parity-adapted product basis $Y_{l m}(\theta_1, \phi_1)\,Y_{l' -m}(\theta_2, \phi_2)$
- - Radial coordinates are represented on a 3D DVR grid
- - Allows arbitrary potential functions to be used (loose modes, multiple minima, etc.)

23

Subspace Projected Approximate Matrix: SPAM Applications

- Another Chemistry Application (in progress)
- Vibrational Wave Function Computation
- Approximate matrix
- - single precision version of exact double precision matrix
- - lowest eigenvalue for H2CO
- - 10 to 30% savings
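The "single precision version of the exact matrix" idea can be illustrated with a few lines of NumPy. The sketch below stores a copy of a double precision matrix in single precision and measures how far its matrix-vector product drifts from the exact one. The random symmetric matrix is only a stand-in (it is not the H2CO vibrational Hamiltonian), and the example says nothing about the actual savings reported on the slide.

```python
import numpy as np

rng = np.random.default_rng(2)
A = rng.standard_normal((200, 200))
H = 0.5 * (A + A.T)          # stand-in for the exact double precision matrix
H32 = H.astype(np.float32)   # approximate matrix: same entries, single precision

x = rng.standard_normal(200)
y_exact = H @ x
# Approximate product carried out entirely in single precision.
y_approx = (H32 @ x.astype(np.float32)).astype(np.float64)

rel_error = np.linalg.norm(y_approx - y_exact) / np.linalg.norm(y_exact)
storage_ratio = H32.nbytes / H.nbytes   # half the memory traffic per multiply
```

The approximate product carries a small relative error, far larger than double precision rounding but small enough that an outer iteration working in double precision can correct it.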

24

Subspace Projected Approximate Matrix: SPAM Applications

- Another Chemistry Application (in progress)
- Vibrational Wave Function Computation
- Approximate matrix
- - smaller basis representation of large basis exact matrix
- - simple interpolates (care with DVR weights)
- - work in progress

25

Common Component Architecture (CCA) Connection

In collaboration with CCTTSS ISIC, we have examined CCA.

CCA allows inter-operability between a collection of codes
- controls communication between programs and sub-programs
- has script that allows assembly of full program at execution
- requires up-front effort

POTLIB is a library of potential energy surfaces (PES)
- each PES "book" in library obeys an interface protocol
- translators connect codes requiring PES to any "book" in library

We intend to bring POTLIB and some codes that use POTLIB (e.g., VariFlex, Venus, the trajectory program of D. Thompson) into CCA
- How much effort is required? (being trained by CCTTSS people)
- How robust is CCA software?
- How easy is it to have a GUI to organize CCA script?

26

Future Work

CRP
- time-independent
- - improve performance with new preconditioners
- - distribute the code
- time-dependent
- - improve parallelization and distribute
- - develop Cartesian coordinate versions

SPAM
- test generic approximate matrix strategies on application problems
- extend to linear solves
- distribute via TOPS