Welcome to Principles of Computer


1

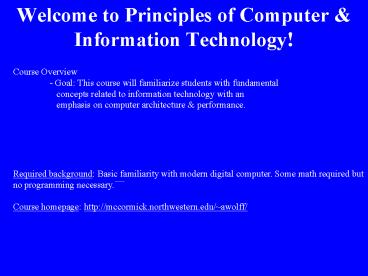

Welcome to Principles of Computer Information Technology!

- Course Overview
- Goal: This course will familiarize students with fundamental concepts related to information technology, with an emphasis on computer architecture and performance.
- Required background: Basic familiarity with modern digital computers. Some math required but no programming necessary.
- Course homepage: http://mccormick.northwestern.edu/awolff/

2

What 421 is about

- 411 deals with how data is communicated.

- 421 deals with what happens at the end points: how data is stored, displayed, and processed.

3

Grading

- Absolute Scale
- 91.50 to 100.00: A
- 90.00 to 91.49: A-
- 88.50 to 89.99: B+
- 81.50 to 88.49: B
- 80.00 to 81.49: B-
- 78.50 to 79.99: C+
- 71.50 to 78.49: C
- 70.00 to 71.49: C-
- Grade Components: 40% Midterm exam, 10% HW, 25% Final exam, 25% Project
- Homework is to be done in groups. One HW turned in per group. Discuss with group members before coming to me.
- The midterm will be held in the 6th week of class.

4

MSIT 421 Schedule

5

Other Information

- Textbooks:
- Computer Organization and Architecture, William Stallings, 7th Edition, 2006.
- How Computers Work, Ron White, Ninth Edition, 2008.
- Other Books:
- Computer Architecture: A Quantitative Approach, John L. Hennessy, David A. Patterson, 4th Edition, 2006.
- Introduction to Information Technology, Third Edition, Turban, Rainer, Potter, 2005.
- Instructor: Alan Wolff, Ph.D.
- Phone number: 847-491-4443 (W), 847-502-1680 (Cell)
- Email: a-wolff_at_northwestern.edu
- Room Number: L293 of Tech
- Email is the best way to get in touch with me and get a quick response!

6

Class 1 Intro / Computer Performance

- I. Intro to Computer Systems

- A. IT Matters

- B. Performance Basics

- C. Hierarchy, Structure and Function

- II. Technological Developments

- A. Generations

- B. Moore's Law

- C. Enhancing Performance

- IV. Performance Measures

- A. MIPS, MFLOPS

- B. Price/ Performance

- C. Speedup, Amdahl's Law

7

Does IT Matter?

- Perspectives

- "The Digital Age Storms the Corner Office," Business Week, September 6, 2001.
- Info tech is so crucial to business operations today -- and so expensive -- that CEOs have no choice but to understand it.
- "IT Doesn't Matter," Harvard Business Review, Vol. 81, No. 5, May 2003.
- This provocative Harvard Business Review excerpt suggests that IT no longer conveys competitive advantage, so invest your capital elsewhere.
- Worrying about what might go wrong may not be as glamorous a job as speculating about the future, but it is a more essential job right now.
- "The End of Corporate Computing," MIT Sloan Management Review, Spring 2005 issue.
- As with the factory-owned generators that dominated electricity production a century ago, today's private IT plants will be supplanted by large-scale, centralized utilities.

8

IT Creates and Sustains Wealth

America's Richest People (Forbes, 9/08)

- IT gives people what they want. Thus there is a lot of money in this business.

Seven of the 15 richest people in America are

directly linked to IT.

9

Performance Basics

Commercial Airplane Performance

Which Airplane has the best performance?

Computing Performance

Which data center has the best performance?

10

Computer Performance

- Response time and throughput are common ways to measure computer performance.
- For the time being we will use response time as the measure of performance:

$\text{Performance}_X = \dfrac{1}{\text{Execution Time}_X}$

11

Performance Comparison

- What does "X is n% faster than Y" mean?
- Example: If machine A runs a program in 10 seconds, and machine B runs the same program in 15 seconds, which of the following statements is true?
- A is 50% faster than B
- A is 33% faster than B

Solution: $P_A = 1/10 = 0.10$ and $P_B = 1/15 = 0.06667$, so $P_A/P_B = 0.10/0.06667 = 1.50$. Since $P_A/P_B = 1 + n/100$, $n = 50\%$.
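
The comparison above can be sketched in a few lines of Python. This is only an illustration: the function name `percent_faster` is made up here, and it assumes performance is defined as the reciprocal of execution time, as on the previous slide.

```python
def percent_faster(time_x: float, time_y: float) -> float:
    """Return n such that X is n% faster than Y, given execution times in seconds."""
    perf_x = 1.0 / time_x  # Performance = 1 / Execution Time
    perf_y = 1.0 / time_y
    return (perf_x / perf_y - 1.0) * 100.0


# Machine A: 10 seconds, machine B: 15 seconds (values from the slide).
print(f"A is {percent_faster(10.0, 15.0):.0f}% faster than B")
```

Note that the ratio of performances equals the inverse ratio of execution times (15/10), which is why the answer is 50% and not 33%.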

12

What is a Computer?

- Webster: "a programmable usually electronic device that can store, retrieve, and process data." Date: 1646
- A digital computer is a machine that can solve problems by carrying out instructions given to it.
- A sequence of instructions = a program
- The electronic circuits of each computer can recognize and directly execute a limited set of simple instructions into which all its programs must be converted before they can be executed.
- The instructions are rarely more complicated than:
- Add two numbers
- Check a number to see if it is zero
- Copy a piece of data from one part of the computer's memory to another

13

Machine Language

- A computer's primitive instructions form a language called machine language.
- What is convenient for computers and what is convenient for people are not the same.
- Two languages: the computer's instructions form language L0; instructions that are more convenient for people form language L1.
- Two approaches for executing programs written in L1 on a machine that only understands L0:
- Translation
- Interpretation

14

Translation

    program gcd(input, output);
    var i, j : integer;
    begin
      read(i, j);
      while i <> j do
        if i > j then i := i - j else j := j - i;
      writeln(i)
    end.

- Goal: Preserve original tone and meaning.
- Example: Translation of classic literature from Greek or Latin to English.

15

Interpretation

- A compiler is a program that translates high-level source programs into target programs
- Fuzzy difference
- A language is interpreted when the initial translation is simple
- A language is compiled when the translation process is complicated

16

Examples: Translation and Interpretation

- Low Level Languages
- emphasize detailed control, close to the machine
- programs are often slow to write but should run fast
- the translation (compilation) takes place before the program can be run
- programs are distributed as binaries
- used where performance and accuracy are important
- Examples: C, C++, Pascal, Fortran, Ada, Modula-2
- High Level Languages
- a.k.a. scripting languages
- emphasize broad detail and abstractions
- programs can be written quickly but it is OK for them to run slowly
- the translation (interpretation) takes place while the program is run
- programs are distributed as source code
- used for one-shot programs, text processing, extensions, internal languages, macros. Programs are often called "scripts".
- Examples: Python, Perl, Basic, Lisp, Scheme, Java, Tcl, R

17

Multilevel approach

18

Functional Perspective

- Generally, all computer functions can be classified into four categories:
- Data processing: arithmetic or logic operations (Add, True, etc.).
- Data storage: internal/external memory (RAM, HD, DVD, etc.).
- Data movement: between components or computers (ex: RAM-CPU).
- Control: orchestrates the other functions listed above.

19

Structure - Top Level

[Figure: top-level structure of a computer. The computer contains the Central Processing Unit, Main Memory, Input/Output, and the Systems Interconnection; it connects to peripherals and communication lines.]

20

Structure - The CPU

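[Figure: structure of the CPU. Within the computer, the CPU connects to Memory and I/O over the System Bus; the CPU itself contains the Arithmetic and Logic Unit, Registers, the Control Unit, and the Internal CPU Interconnection.]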

21

Computer Model: Von Neumann Model

- Stored-program concept
- Heard of punch cards?
- Main memory stores programs and data
- Control unit interprets instructions from memory and executes them
- ALU operates on binary data
- Input and output equipment transmits information
- Almost all of today's computer designs use the Von Neumann model!

22

Computer History

- Generation 0: Mechanical
- Generation 1: Vacuum Tubes
- Generation 2: Transistors
- Generation 3: Integrated Circuits
- Generation 4: Very Large Scale Integration
- Generation 5: ??

23

Generation 0: Mechanical Implementation

- The mechanical implementation of computers used gears and wheels and other mechanical movements.
- There are inherent limitations and shortcomings of mechanical computation, including:
- Complex design and construction
- Wear, breakdown, and maintenance of mechanical parts
- Limits on operating speed

24

Early Mechanical Computers

- Early pioneers

- Wilhelm Schickard (1623): mechanical (addition, subtraction, multiplication, division)
- Blaise Pascal (1642): mechanical (addition, subtraction)
- Gottfried Wilhelm von Leibniz (1670s): mechanical (also multiplication, division)
- Charles Babbage, 1820s
- Difference engine: mechanical, addition and subtraction, navigation tables
- Analytical engine: mechanical (never functional, poorly documented); first general-purpose computer, controlled by punched-card programs (world's first computer programmer: Ada Augusta Lovelace)

25

Generation 1: Vacuum Tubes

- "Necessity is the mother of invention." (Plato, in Republic)
- Colossus: 1943, GB
- First electronic digital computer
- Cracking the ENIGMA ciphers
- ENIAC: 1946, US
- Electronic computer (18,000 vacuum tubes)
- 20 registers with 10-digit decimal numbers (not binary)
- Computation of trajectory tables for heavy artillery
- 1945: Legend of the first computer bug at Harvard (Mark I)
- von Neumann machine: John von Neumann, Princeton, 1950 (the IAS computer)
- Basis of today's architectures: the stored-program concept
- Memory, ALU, control unit, input, output, binary
- 1953: first computer by IBM

26

IAS Memory/Instructions

- Address: 08D
- Contents: 010AA210AB
- In the program at address 08D, the two instructions are LOAD(0AA) and STOR(0AB).
- Note: 01 in hex is 00000001 in binary; 21 in hex is 00100001 in binary.
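
As an illustration of how the word above splits into two instructions, here is a minimal Python sketch. It assumes the usual IAS layout of a 40-bit word holding two 20-bit instructions, each an 8-bit opcode followed by a 12-bit address; the `OPCODES` table is deliberately limited to the two opcodes named on the slide, and the function name `decode_word` is made up for this example.

```python
# Only the two opcodes mentioned on the slide; the real IAS set is larger.
OPCODES = {0x01: "LOAD", 0x21: "STOR"}


def decode_word(word_hex: str) -> list[tuple[str, str]]:
    """Split a 40-bit IAS word (10 hex digits) into (mnemonic, address) pairs."""
    word = int(word_hex, 16)
    halves = [(word >> 20) & 0xFFFFF, word & 0xFFFFF]  # left, then right instruction
    decoded = []
    for half in halves:
        opcode = half >> 12      # top 8 bits of the 20-bit instruction
        address = half & 0xFFF   # low 12 bits
        mnemonic = OPCODES.get(opcode, f"OP{opcode:02X}")
        decoded.append((mnemonic, f"{address:03X}"))
    return decoded


print(decode_word("010AA210AB"))  # [('LOAD', '0AA'), ('STOR', '0AB')]
```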

27

Generation 2: Transistors

- The transistor was invented in 1948 at Bell Labs
- Made of silicon (sand) mixed with certain chemicals
- Semiconductor (Nobel Prize in Physics, 1956)
- Less heat, lower cost, more reliable than vacuum tubes
- PDP-1: Digital Equipment Corporation, 1961
- First commercial transistorized computer (mini-computer)
- Visual display with 512 x 512 pixels
- PDP-8: DEC, 1965
- Single bus connecting the components
- 50,000 units sold
- Established DEC as a major player
- The first super-computers emerged
- Control Data Corporation (CDC)
- Seymour Cray: CDC 6600, CDC 7600, Cray-1

28

Generation 3: Integrated Circuits

- The silicon integrated circuit (chip) was invented in 1958
- Dozens of transistors could be put on a single chip
- Computers became smaller, faster, cheaper
- System/360 series, IBM, 1964
- Both scientific and commercial applications
- Replaced two separate strands of system designs at IBM
- First machine that could emulate other computers by microprograms
- 32-bit computer whose memory was byte addressed
- PDP-11, DEC, end of 1960s
- Highly successful, especially at universities

29

Generation 4: Very Large Scale Integration (VLSI)

- In the 1970s and 1980s, VLSI emerged
- Tens of thousands of transistors could be put on a single chip
- Later, millions of transistors
- Computers became even faster and cheaper
- PC: the personal computer
- Originally kits without software
- Xerox PARC: graphical user interfaces, windows, mouse
- Steve Jobs, Steve Wozniak, Apple, 1977: first assembled computer
- IBM PC, 1981: IBM got into the PC business
- used Intel and a small company called Microsoft
- PC clones industry emerged
- Mid-1980s: new processor designs
- RISC architectures, super-scalar CPUs

30

Generation 5?

- What will come next?
- 3-dimensional circuit designs
- pack transistors in cubes or layers instead of flat chips
- Optical computing
- Replace wires and electronics by optics
- Molecular computing (DNA computer)
- Use chemical/biological processes for computing
- Quantum computing
- A quantum bit (qubit) held in a sub-atomic particle can be in two states simultaneously, rather than holding a single base-2 value at a time
- Special applications only, e.g. quantum cryptography

31

Technologies Performance

What if technology related to energy advanced at

the same rate??

32

Moore's Law

- Increased density of components on chip
- Gordon Moore, cofounder of Intel
- Number of transistors on a chip will double every year
- Since the 1970s development has slowed a little
- Number of transistors doubles every 18 months
- Cost of a chip has remained almost unchanged
- Higher packing density means shorter electrical paths, giving higher performance
- Smaller size gives increased flexibility
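
To get a feel for what the two doubling periods on this slide imply, a small Python sketch follows. The function name and the starting count of 1,000 transistors are hypothetical, chosen only for illustration; the 12-month and 18-month doubling periods are the ones quoted above.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 1.5) -> float:
    """Project a transistor count assuming steady exponential doubling."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: 1,000 transistors, projected 3 years ahead.
print(projected_transistors(1000, 3, doubling_period_years=1.0))  # doubling every year
print(projected_transistors(1000, 3, doubling_period_years=1.5))  # doubling every 18 months
```

Over three years the yearly doubling gives an 8x increase, while the 18-month doubling gives 4x, so the gap between the two rates widens quickly.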

33

Moore's Law

Source: http://www.intel.com

34

Main Driver: Device Sizes

35

Secondary Driver: Wafer Size

- Cost per unit area (per cm²)

- Wafer size improvements have offset the

increasing costs of wafer processing

36

From Ingot to Chips

37

Fabricating Processors Wafers Chips

$\text{Dies per wafer} = \dfrac{\pi \times (\text{wafer diameter}/2)^2}{\text{die area}} - \dfrac{\pi \times \text{wafer diameter}}{\sqrt{2 \times \text{die area}}}$

450 mm wafer. Image source: Intel Corporation, www.intel.com
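
The dies-per-wafer estimate can be sketched in Python as follows. The first term is the wafer area divided by the die area; the second term approximates the dies lost around the circular edge. The function name and the sample dimensions (a 30 cm wafer and a 2.25 cm² die) are assumptions for illustration, not values from the slide.

```python
import math


def dies_per_wafer(wafer_diameter_cm: float, die_area_cm2: float) -> float:
    """Estimate the number of whole dies that fit on a round wafer."""
    wafer_area = math.pi * (wafer_diameter_cm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_cm / math.sqrt(2 * die_area_cm2)
    return wafer_area / die_area_cm2 - edge_loss


# Hypothetical example: 30 cm wafer, dies of 1.5 cm x 1.5 cm.
print(round(dies_per_wafer(30.0, 2.25), 1))
```

The estimate ignores defects, so the number of working dies is lower once yield is taken into account.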

38

Not just the Processor!

- Processor

- Logic capacity increases about 30% per year
- Performance: 2x every 1.5 years
- Memory
- DRAM capacity increases about 60% per year
- Memory speed: 1.5x every 10 years
- Cost per bit decreases about 25% per year
- Disk
- Capacity increases about 60% per year

39

Speeding it up even more

- Pipelining

- Increasing number of bits retrieved at a time

- Increase interconnection bandwidth (bus)

- On board cache

- Branch prediction

- Data flow analysis

40

Intel Microprocessor Performance

41

Increased Cache Capacity

- Typically two or three levels of cache between processor and main memory
- Chip density increased
- More cache memory on chip
- Faster cache access

Source: Semico Research Corp. ASIC report, 2007

42

More Complex Execution Logic

- Enable parallel execution of instructions
- Pipeline works like an assembly line
- Different stages of execution of different instructions proceed at the same time along the pipeline
- Superscalar allows multiple pipelines within a single processor
- Instructions that do not depend on one another can be executed in parallel

43

Diminishing Returns

- Internal organization of processors is complex
- Can get a great deal of parallelism
- Difficult to take advantage of massive parallelism in software
- Benefits from cache reach a limit
- Interconnect (wires) speed limitation
- Increasing clock rate runs into power dissipation problem (heat)
- Some fundamental physical limits are being reached

44

New Approach: Multiple Cores

- Multiple processors on a single chip
- Large shared cache
- Within one processor, the increase in performance is proportional to the square root of the increase in complexity
- If software can use multiple processors, doubling the number of processors almost doubles performance
- So, use two simpler processors on the chip rather than one more complex processor
- With two processors, larger caches are justified
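
The trade-off described above can be put into numbers with a rough Python sketch. Both functions are simplified models written for this note: the square-root rule is taken from the slide, while the `efficiency` parameter (and its sample value of 0.95) is a hypothetical stand-in for "almost doubles".

```python
import math


def complex_core_speedup(complexity_factor: float) -> float:
    """Speedup of a single core made `complexity_factor` times more complex."""
    return math.sqrt(complexity_factor)


def multicore_speedup(num_cores: int, efficiency: float = 1.0) -> float:
    """Speedup from `num_cores` simple cores when software can use all of them."""
    return num_cores * efficiency


# Spending a doubled transistor budget two different ways:
print(complex_core_speedup(2.0))              # one core, twice as complex
print(multicore_speedup(2, efficiency=0.95))  # two simple cores, assumed 95% efficient
```

Under these assumptions the doubled budget yields roughly 1.4x from one complex core versus about 1.9x from two simple cores, provided the software is parallel.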

45

CPU Performance Factors

- CPU Execution Time = CPU clock cycles × Clock cycle time
- OR
- CPU Execution Time = (CPU clock cycles) / (Clock rate)
- EX: Computer A has a clock rate of 4 GHz, so its clock cycle time is $1/(4 \times 10^{9})$ seconds $= 2.5 \times 10^{-10}$ seconds $= 250$ picoseconds (ps). If it takes 40 billion CPU cycles to complete a program, what is the CPU execution time?
- CPU Execution Time $= (40 \times 10^{9}$ cycles$) \times (2.5 \times 10^{-10}$ seconds/cycle$) = 10$ seconds
- OR
- CPU Execution Time $= (40 \times 10^{9}$ cycles$) / (4 \times 10^{9}$ cycles/second$) = 10$ seconds
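
The two equivalent forms of the execution-time formula can be checked with a short Python sketch; the function names are made up for this example and the numbers are the ones from the slide.

```python
def clock_cycle_time(clock_rate_hz: float) -> float:
    """Clock cycle time in seconds is the reciprocal of the clock rate."""
    return 1.0 / clock_rate_hz


def cpu_execution_time(clock_cycles: float, clock_rate_hz: float) -> float:
    """CPU execution time in seconds = clock cycles / clock rate."""
    return clock_cycles / clock_rate_hz


cycles = 40e9  # 40 billion cycles
rate = 4e9     # 4 GHz

print(clock_cycle_time(rate))                # cycle time in seconds (250 ps)
print(cpu_execution_time(cycles, rate))      # cycles / rate
print(cycles * clock_cycle_time(rate))       # cycles x cycle time, same result
```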

46

Cycles Per Instruction (CPI)

- CPI describes one aspect of a processor's performance: the average number of clock cycles needed to execute an instruction. All programs consist of many instructions. Low CPI is good.
- CPU Clock Cycles = (Instructions for Program) × CPI
- EX: Computer A is 4 GHz with a CPI of 2.0 for a program, and Computer B is 2 GHz with a CPI of 1.2. Which computer is faster and by how much?
- Answer: Let's say the number of instructions is $I$ for both computers.
- (CPU Clock Cycles)$_A$ $= I \times 2.0$
- (CPU Clock Cycles)$_B$ $= I \times 1.2$, then
- CPU Time$_A$ = (CPU clock cycles)$_A$ × (Clock cycle time)$_A$ $= (I \times 2.0)$ cycles $\times$ 250 ps/cycle $= 500 \times I$ ps
- CPU Time$_B$ $= (I \times 1.2)$ cycles $\times$ 500 ps/cycle $= 600 \times I$ ps
- Computer A is faster by a factor of $600/500 = 1.2$ (it saves $100 \times I$ ps). The higher clock rate more than compensates for B's better CPI.
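
The comparison of Computers A and B can be reproduced with a short Python sketch. The instruction count is a hypothetical placeholder, since it cancels out of the ratio; the function name is also made up for this example.

```python
def cpu_time(instruction_count: float, cpi: float, clock_rate_hz: float) -> float:
    """CPU time in seconds = instructions x CPI / clock rate."""
    return instruction_count * cpi / clock_rate_hz


instructions = 1_000_000  # hypothetical; any positive value gives the same ratio

time_a = cpu_time(instructions, cpi=2.0, clock_rate_hz=4e9)  # Computer A
time_b = cpu_time(instructions, cpi=1.2, clock_rate_hz=2e9)  # Computer B

print(f"A is {time_b / time_a:.2f}x faster than B")
```

Because the same program runs on both machines, only the product of CPI and clock cycle time matters for the comparison.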

47

Example

- Consider a computer whose instruction set has three classes of instructions, taking 1, 2, and 3 cycles per instruction respectively (the instruction-set table is not reproduced here).
- Which program will run faster if each is comprised of the instruction counts used in the answer (the table is not reproduced here)?
- Answer:
- Program 1: (1 cycle/instr)(2000 instr) + (2 cycles/instr)(1000 instr) + (3 cycles/instr)(2000 instr) = 10,000 cycles
- Program 2: (1 cycle/instr)(4000 instr) + (2 cycles/instr)(1000 instr) + (3 cycles/instr)(1000 instr) = 9,000 cycles
- Program 2 is faster
- Notes:
- MIPS means millions of instructions per second = (instructions per second) / $10^6$
- MFLOPS means millions of floating point operations per second = FLOPS / $10^6$
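
The cycle counts in the example can be recomputed with a small Python sketch. The class labels "A", "B", and "C" are hypothetical names for the three instruction classes; the per-class cycle costs and instruction counts are the ones that appear in the answer.

```python
# Cycles per instruction for each (hypothetically named) instruction class.
CYCLES_PER_INSTRUCTION = {"A": 1, "B": 2, "C": 3}


def total_cycles(instruction_mix: dict[str, int]) -> int:
    """Sum cycles over all instruction classes in a program."""
    return sum(CYCLES_PER_INSTRUCTION[cls] * count
               for cls, count in instruction_mix.items())


def average_cpi(instruction_mix: dict[str, int]) -> float:
    """Average cycles per instruction for the whole program."""
    return total_cycles(instruction_mix) / sum(instruction_mix.values())


program_1 = {"A": 2000, "B": 1000, "C": 2000}
program_2 = {"A": 4000, "B": 1000, "C": 1000}

print(total_cycles(program_1), average_cpi(program_1))  # 10000 2.0
print(total_cycles(program_2), average_cpi(program_2))  # 9000 1.5
```

Program 2 executes more instructions (6,000 versus 5,000) yet needs fewer cycles, which is one reason an instruction count or MIPS figure alone can mislead.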

48

Measure of CPU Performance: FLOPS

- FLOPS: floating point operations per second.
- Floating point is a particular kind of non-integer mathematical instruction.
- Performance above is measured in GFLOPS: billions of floating point operations per second

Source: http://www.top500.org/list/2008/06/100

49

World's Fastest Computer System

50

CPU Performance

- The real measure of performance is the time it takes for a task to run. So in many ways it depends on the application.
- In the industry, different benchmarks are used for performance. A good example is that of the Standard Performance Evaluation Corporation (SPEC). These benchmarks are real programs.

51

Performance of Servers

Performance based on TPC-C Spec, September 2008

Spec includes simulation of typical server transactions in a database: Payment, Order-Status, Delivery, Stock-Level

http://www.tpc.org/

52

Price/Performance of Servers

Price/Performance based on TPC-C Spec/Cost, September 2008

Spec includes simulation of typical server transactions in a database: Payment, Order-Status, Delivery, Stock-Level

http://www.tpc.org/

53

Computer Market

4th quarter 2007

All 2007

54

Problems with MIPS, MFLOPS

- MIPS is sometimes a useless measure
- Different chips use different instruction sets
- Not all instructions are the same within an instruction set
- Performance also depends on register size, cache memory performance, and memory bandwidth, among other things!
- MFLOPS is a little less useless because it covers a more specific type of instruction, but it also depends on the factors listed above.
- These measures give a rough idea of computer performance

55

Designing for Performance

- Principle
- Make the common case fast.
- A computing process consists of different events and uses different resources. To improve the performance of the whole computer system, the most frequent events and the most often used resources should be sped up with the highest priority.
- Example
- Optimizing a rarely used instruction does little to improve performance.

56

Amdahl's Law

- The performance improvement to be gained from using some faster mode of execution is limited by the fraction of the time the faster mode can be used.
- Amdahl's law defines the speedup that can be gained by using a particular feature.
- For example, to improve the computer's performance, there are many options: CPU speed, memory access time, hard disk rotation speed and access time, I/O access time, data bus width, and so on.
- How important is each one of them?

57

Speedup Definition

$\text{Speedup} = \dfrac{\text{Execution time without using the enhancement}}{\text{Execution time after using the enhancement}}$

Speedup tells how much faster a task will run by using the enhancement.

Suppose that we are considering an enhancement that runs 10 times faster than the original machine but is only usable 40% of the time. What is the overall speedup gained by incorporating the enhancement?
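
One way to work this question is with the standard form of Amdahl's law, which is not written out on the slide: overall speedup = 1 / ((1 − f) + f / s), where f is the fraction of the original execution time that can use the enhancement and s is the speedup of the enhanced part. A minimal Python sketch, with a made-up function name:

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup when only part of the execution time benefits."""
    unenhanced_time = 1.0 - fraction_enhanced              # runs at the old speed
    enhanced_time = fraction_enhanced / speedup_enhanced   # runs faster
    return 1.0 / (unenhanced_time + enhanced_time)


# Enhancement is 10x faster but usable only 40% of the time.
print(f"Overall speedup: {amdahl_speedup(0.4, 10):.4f}")
```

With these numbers the new execution time is 0.6 + 0.04 = 0.64 of the original, so the overall speedup is about 1.56, far below the 10x of the enhanced part alone.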

58

Types of Systems (1)

- Desktops and laptops: the largest market
- Price-performance is most important
- Servers: availability, reliability, scalability, throughput

59

Component Costs of PCs

60

Cost vs. Price

61

Types of Systems (2)

- Embedded computers: the fastest growing segment
- Includes palms, cell phones, video games, microwaves, printers, networking switches, automobiles, etc.
- Price, real-time performance, low memory, low power
- Summary