Nonlinear Programming Review - PowerPoint PPT Presentation


1

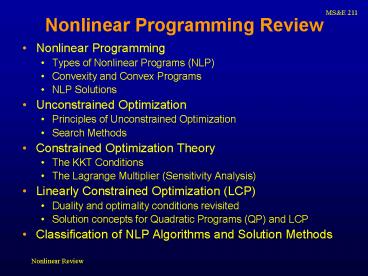

Nonlinear Programming Review

- Nonlinear Programming

- Types of Nonlinear Programs (NLP)

- Convexity and Convex Programs

- NLP Solutions

- Unconstrained Optimization

- Principles of Unconstrained Optimization

- Search Methods

- Constrained Optimization Theory

- The KKT Conditions

- The Lagrange Multiplier (Sensitivity Analysis)

- Linearly Constrained Optimization (LCP)

- Duality and optimality conditions revisited

- Solution concepts for Quadratic Programs (QP) and LCP

- Classification of NLP Algorithms and Solution Methods

2

Nonlinear Optimization Model

3

Types of Nonlinear Programs

- Unconstrained optimization

- Linearly constrained optimization

- Quadratic Programming

- Convex optimization

- Objective function is a convex function in minimization or a concave function in maximization (over the feasible set)

- Feasible set is a convex set

- Nonconvex optimization

- Geometric programming

- Fractional programming

4

Gradient Vector and Hessian Matrix

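- The gradient vector of f at x: $\nabla f(x) = \left(\frac{\partial f(x)}{\partial x_1}, \ldots, \frac{\partial f(x)}{\partial x_n}\right)^T$

- The Hessian matrix of f at x: the $n \times n$ matrix $\nabla^2 f(x)$ with entries $[\nabla^2 f(x)]_{ij} = \frac{\partial^2 f(x)}{\partial x_i \partial x_j}$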
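As a numerical companion to these definitions, the sketch below approximates $\nabla f(x)$ and $\nabla^2 f(x)$ by central differences. The function names and the step size `h` are illustrative choices, not part of the slides. Finite differences are only approximations, and their accuracy depends on `h`.

```python
import numpy as np

def numerical_gradient(f, x, h=1e-5):
    """Central-difference approximation of the gradient vector of f at x."""
    x = np.asarray(x, dtype=float)
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2 * h)
    return g

def numerical_hessian(f, x, h=1e-4):
    """Central-difference approximation of the Hessian matrix of f at x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    H = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n); ei[i] = h
            ej = np.zeros(n); ej[j] = h
            H[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                       - f(x - ei + ej) + f(x - ei - ej)) / (4 * h * h)
    return H

# Example: f(x) = x[0]^2 + 3 x[0] x[1]
# gradient = (2 x[0] + 3 x[1], 3 x[0]), Hessian = [[2, 3], [3, 0]]
f = lambda x: x[0] ** 2 + 3 * x[0] * x[1]
print(numerical_gradient(f, [1.0, 2.0]))   # approximately [8, 3]
print(numerical_hessian(f, [1.0, 2.0]))    # approximately [[2, 3], [3, 0]]
```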

5

Convex and Concave Functions

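[Figure: two panels plotting f(x) against x; labels mark $x_1$, $x_2$, $\lambda x_1 + (1-\lambda) x_2$, $f(x_1)$, $f(x_2)$, $f(\lambda x_1 + (1-\lambda) x_2)$ and $\lambda f(x_1) + (1-\lambda) f(x_2)$]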

f(x) is a convex function if and only if, for any two points $x_1$ and $x_2$ in the function domain and for any constant $0 \le \lambda \le 1$,

$f(\lambda x_1 + (1-\lambda) x_2) \le \lambda f(x_1) + (1-\lambda) f(x_2)$
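This definition can be probed numerically. The sketch below uses a hypothetical helper, not something from the slides. It samples random pairs $x_1, x_2$ and weights $\lambda$ for a function of one variable and reports any triple that violates the inequality. Finding a violation proves that f is not convex on the interval. Finding none is only evidence of convexity, not a proof.

```python
import random

def find_convexity_violation(f, lo, hi, trials=10_000, seed=0, tol=1e-9):
    """Search [lo, hi] for (x1, x2, lam) with
    f(lam*x1 + (1-lam)*x2) > lam*f(x1) + (1-lam)*f(x2)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x1, x2 = rng.uniform(lo, hi), rng.uniform(lo, hi)
        lam = rng.random()                      # 0 <= lam < 1
        lhs = f(lam * x1 + (1 - lam) * x2)
        rhs = lam * f(x1) + (1 - lam) * f(x2)
        if lhs > rhs + tol:                     # tolerance absorbs rounding error
            return x1, x2, lam
    return None

print(find_convexity_violation(lambda x: x * x, -5, 5))    # None: x^2 is convex
print(find_convexity_violation(lambda x: -x * x, -5, 5))   # a violating triple
```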

6

Properties of Convex Function

[Figure: f(x) plotted against x, with the level b marked on the f(x)-axis]

If f(x) is a convex function, then the lower level set $\{x : f(x) \le b\}$ is a convex set for any constant b.

The graph of a (differentiable) convex function lies above its tangent planes: $f(y) \ge f(x) + \nabla f(x)^T (y - x)$ for all x and y. The Hessian matrix of a twice-differentiable convex function is positive semidefinite.

7

Convex Quadratic Function

$f(x) = x^T Q x + c^T x$ (with Q symmetric) is a convex function if and only if Q is positive semidefinite. $f(x) = x^T Q x + c^T x$ is a strictly convex function if and only if Q is positive definite. If Q is positive definite, $Q^{-1}$ exists.
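To make this concrete, the sketch below tests positive definiteness with a Cholesky factorization. NumPy's `numpy.linalg.cholesky` raises `LinAlgError` when the matrix is not positive definite. When the factorization succeeds, the sketch returns the unique minimizer. Setting $\nabla f(x) = 2Qx + c = 0$ gives $x^* = -\tfrac{1}{2} Q^{-1} c$. The helper name and the assumption that Q is symmetric are ours.

```python
import numpy as np

def convex_quadratic_minimizer(Q, c):
    """Minimize f(x) = x^T Q x + c^T x for a symmetric matrix Q.

    Returns the unique minimizer if Q is positive definite, otherwise None.
    """
    Q = np.asarray(Q, dtype=float)
    c = np.asarray(c, dtype=float)
    try:
        np.linalg.cholesky(Q)        # raises LinAlgError unless Q is positive definite
    except np.linalg.LinAlgError:
        return None                  # not strictly convex: no guaranteed unique minimizer
    # Stationarity: grad f(x) = 2 Q x + c = 0  =>  x* = -(1/2) Q^{-1} c
    return -0.5 * np.linalg.solve(Q, c)

print(convex_quadratic_minimizer([[2, 0], [0, 1]], [-4, -2]))   # [1. 1.]
print(convex_quadratic_minimizer([[1, 0], [0, -1]], [0, 0]))    # None
```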

8

Convex Sets

- A set is convex if every line segment connecting any two points in the set is contained entirely within the set

- Example: polyhedron

- Example: ball

- An extreme point of a convex set is any point that is not on any line segment connecting any other two points of the set

- The intersection of convex sets is a convex set

9

Why do we care so much about convexity?

- Because, in a convex program, it guarantees that any local optimum is a global optimum

- This is significant because all of our basic optimization algorithms search for local optima

- Those that try harder to find global optima generally just run the underlying algorithms several times, starting from different initial solutions

10

Possible Optimal Solutions to Convex NLPs (not occurring at corner points)

11

Local vs. Global Optimal Solutions for Nonconvex NLPs

12

Unconstrained Optimization

13

Common Assumptions

- We generally assume f(x) is continuous and differentiable over the feasible region

- If it is not, we can still apply solution techniques, but they often become more complicated (e.g., we must also examine the points of discontinuity)

14

Principles of Optimization: Unconstrained

- Problem

- Minimize f(x), where x is a vector that can take any values, positive or negative

- First Order Necessary Condition (FONC)

- $\nabla f(x) = 0$ ($\partial f / \partial x_i = 0$ for all i) is the first order necessary condition for optimality

- Second Order Necessary Condition (SONC)

- $\nabla^2 f(x)$ is positive semidefinite (PSD)

- $d^T \nabla^2 f(x)\, d \ge 0$ for all vectors d

- Second Order Sufficient Condition (SOSC)

- (Given FONC satisfied)

- $\nabla^2 f(x)$ is positive definite (PD)

- $d^T \nabla^2 f(x)\, d > 0$ for all vectors $d \ne 0$; then x is a strict local minimum
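These conditions translate directly into a check at a candidate point. The sketch below, with a hypothetical helper name and tolerance, uses the eigenvalues of the symmetric Hessian. If all eigenvalues are positive, the Hessian is PD. If all are nonnegative, it is PSD.

```python
import numpy as np

def classify_candidate(grad, hess, tol=1e-8):
    """Apply FONC, SONC and SOSC at a point, given its gradient and symmetric Hessian."""
    if np.linalg.norm(grad) > tol:
        return "FONC fails: not a local minimum"
    eigenvalues = np.linalg.eigvalsh(np.asarray(hess, dtype=float))
    if np.all(eigenvalues > tol):
        return "SOSC holds: strict local minimum"
    if np.all(eigenvalues >= -tol):
        return "SONC holds, SOSC inconclusive"
    return "SONC fails: not a local minimum"

# At x = 0 for:  x1^2 + x2^2,  x1^2 - x2^2,  x1^4 + x2^2
print(classify_candidate([0, 0], [[2, 0], [0, 2]]))
print(classify_candidate([0, 0], [[2, 0], [0, -2]]))
print(classify_candidate([0, 0], [[0, 0], [0, 2]]))
```

The third case shows why SONC alone is not sufficient. The Hessian of $x_1^4 + x_2^2$ at the origin is only PSD, so the test cannot decide, even though the origin is in fact the minimum.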

15

Principles of Optimization: Unconstrained, Except Non-Negativity Condition

- Problem

- Minimize f(x), where x is a vector, $x \ge 0$

- First Order Necessary Condition

- $\partial f / \partial x_i = 0$ if $x_i > 0$

- $\partial f / \partial x_i \ge 0$ if $x_i = 0$

- Thus $(\partial f / \partial x_i)\, x_i = 0$ for all i, or

- $\nabla f(x)^T x = 0$ (together with $\nabla f(x) \ge 0$ and $x \ge 0$)

- If x is an interior point ($x > 0$), then $\nabla f(x) = 0$

- Nothing changes if the constraint is not binding

[Figure: f plotted against $x_i$, with slope labels $\partial f / \partial x_i < 0$ and $\partial f / \partial x_i = 0$]
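The one-variable worked example below is our own, not from the slides. It shows both cases of the condition:

$$
\begin{aligned}
&\min_{x \ge 0}\,(x+1)^2: && f'(x) = 2(x+1) > 0 \text{ on } x \ge 0 \;\Rightarrow\; x^* = 0,\quad f'(0) = 2 \ge 0,\quad f'(x^*)\,x^* = 0,\\
&\min_{x \ge 0}\,(x-1)^2: && f'(x) = 2(x-1) = 0 \;\Rightarrow\; x^* = 1 > 0,\quad f'(x^*) = 0,\quad f'(x^*)\,x^* = 0.
\end{aligned}
$$

In the first problem the bound is binding, so the derivative only has to be nonnegative. In the second problem the bound is not binding, and the unconstrained condition $f'(x^*) = 0$ is recovered.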

16

Search Methods

- The primary algorithmic method of finding local optima (which are global for convex functions in minimization and concave functions in maximization) for unconstrained optimization problems

- Also commonly used as a subroutine in more complex problems

17

Illustration of the Bisection Method

[Figure: plot against x showing the bracket endpoints $x_l$ and $x_r$ and the optimum $x^*$]

- Key Points

- Global convergence

- Converges at a fixed, slow rate

18

The Bisection Method

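- How do we find $x^*$ (within an error tolerance $\epsilon$)? Assume f is convex and differentiable, with $f'(x_l) < 0 < f'(x_r)$

- Choose $x = (x_l + x_r)/2$

- Evaluate $f'(x)$

- If $f'(x) < 0$, set $x_l = x$. If $f'(x) > 0$, set $x_r = x$. If $f'(x) = 0$, stop: x is optimal.

- If $x_r - x_l < 2\epsilon$, stop and set $x^* = (x_l + x_r)/2$. Otherwise, return to the first step.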
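The steps above can be sketched in Python as follows. The sketch assumes f is convex and differentiable, and that the caller supplies the derivative `df` and a bracket with $f'(x_l) < 0 < f'(x_r)$. These names are illustrative. The function returns the midpoint of the final interval, which lies within $\epsilon$ of $x^*$.

```python
def bisection_minimize(df, xl, xr, eps=1e-8):
    """Minimize a convex differentiable f on [xl, xr] by bisection on f'."""
    if not (df(xl) < 0 < df(xr)):
        raise ValueError("need f'(xl) < 0 < f'(xr)")
    while xr - xl >= 2 * eps:
        x = (xl + xr) / 2
        slope = df(x)
        if slope < 0:          # f still decreasing: the optimum lies to the right
            xl = x
        elif slope > 0:        # f increasing: the optimum lies to the left
            xr = x
        else:                  # f'(x) = 0: x is optimal
            return x
    return (xl + xr) / 2

# f(x) = (x - 3)^2, so f'(x) = 2 (x - 3) and x* = 3
print(bisection_minimize(lambda x: 2 * (x - 3), 0.0, 10.0))
```

Each pass halves the bracket. This is the fixed, slow (linear) rate noted in the key points, and it does not depend on the shape of f.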

19

Illustration of Newtons Method

[Figure: plot against x showing successive Newton iterates $x_i$ and $x_{i+1}$ approaching $x^*$]

- Key Points

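- Fast (locally quadratic) convergence if close enough to the optimum

- For convex functions, pure Newton steps can still diverge from a poor starting point; convergence from an arbitrary start generally requires a safeguard such as a line search

- Not guaranteed to converge for general functions

- (Avoid points x where $f''(x) = 0$)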

20

Newtons Method (in one variable)

1. Choose an initial point $x_0$ and some tolerance $\epsilon$. Set $i = 0$.

2. Compute $f'(x_i)$ and $f''(x_i)$.

3. Generate the new point $x_{i+1} = x_i - f'(x_i)/f''(x_i)$.

4. Set $i = i + 1$. If $|f'(x_i)| < \epsilon$, stop. Otherwise, go to 2.
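Below is a direct transcription of the four steps above, as a sketch. The caller supplies the derivative functions `df` and `d2f`. The iteration cap is our own safeguard, because convergence is not guaranteed.

```python
import math

def newton_1d(df, d2f, x0, eps=1e-10, max_iter=50):
    """Newton's method for minimizing f in one variable."""
    x = x0                                  # step 1: initial point
    for _ in range(max_iter):
        curvature = d2f(x)                  # step 2: f'(x_i) and f''(x_i)
        if curvature == 0:
            raise ZeroDivisionError("f''(x) = 0: Newton step undefined")
        x = x - df(x) / curvature           # step 3: x_{i+1} = x_i - f'(x_i)/f''(x_i)
        if abs(df(x)) < eps:                # step 4: stop when |f'(x_i)| < eps
            return x
    raise RuntimeError("no convergence within max_iter iterations")

# f(x) = e^x - 2x: f'(x) = e^x - 2, f''(x) = e^x, minimizer x* = ln 2
x_star = newton_1d(lambda x: math.exp(x) - 2, math.exp, 0.0)
print(x_star, math.log(2))
```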

21

The Gradient Search Method

The following algorithm assumes we are minimizing a convex function.

1. Take an initial point $x_0$. Set $i = 0$.

2. Evaluate $\nabla f(x_i)$. If $\|\nabla f(x_i)\| \le \epsilon$, where $\epsilon$ is a given tolerance, stop.

3. Express $\phi(t) = f(x_i - t \nabla f(x_i))$.

4. Use the critical point condition or bisection search to find the $t^* \ge 0$ that minimizes $\phi(t)$.

5. Set $x_{i+1} = x_i - t^* \nabla f(x_i)$ and $i = i + 1$.

6. Go to 2.
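The sketch below follows these steps for a caller-supplied gradient `grad`. Step 4 is implemented as bisection on $\phi'(t) = -\nabla f(x_i - t\nabla f(x_i))^T \nabla f(x_i)$. The doubling used to bracket $t^*$ assumes that $\phi$ eventually increases along the ray. This holds, for example, when f is convex and its level sets are bounded. Names and tolerances are illustrative.

```python
import numpy as np

def gradient_search(grad, x0, eps=1e-6, max_iter=10_000):
    """Steepest descent with an (approximately) exact line search, for convex f."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) <= eps:           # step 2: stopping test
            return x

        def dphi(t):                           # phi'(t) for phi(t) = f(x - t g)
            return -grad(x - t * g) @ g

        # Step 4: phi'(0) = -||g||^2 < 0; double t_hi until phi'(t_hi) >= 0 ...
        t_lo, t_hi = 0.0, 1.0
        while dphi(t_hi) < 0:
            t_lo, t_hi = t_hi, 2 * t_hi
        # ... then bisect on phi'(t) = 0 inside [t_lo, t_hi]
        for _ in range(60):
            t_mid = (t_lo + t_hi) / 2
            if dphi(t_mid) < 0:
                t_lo = t_mid
            else:
                t_hi = t_mid
        x = x - (t_lo + t_hi) / 2 * g          # step 5: x_{i+1} = x_i - t* grad f(x_i)
    return x

# f(x) = (x[0] - 1)^2 + 10 (x[1] + 2)^2 has its minimum at (1, -2)
grad = lambda x: np.array([2 * (x[0] - 1), 20 * (x[1] + 2)])
print(gradient_search(grad, [0.0, 0.0]))
```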

22

Illustration of the Gradient Search Method

[Figure: in the $(x_1, x_2)$ plane, the contour $f(x) = f(x_0)$ and a step from $x_0$ along $-\nabla f(x_0)$ to $x_1 = x_0 - t^* \nabla f(x_0)$]

- Key Points

- Global convergence

- Converges at a fixed, slow rate

23

Newtons Method (in multiple variables)

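1. Choose an initial point $x_0$ and some tolerance $\epsilon$. Set $i = 0$.

2. Compute $\nabla f(x_i)$ and $\nabla^2 f(x_i)$.

3. Generate the new point $x_{i+1} = x_i - [\nabla^2 f(x_i)]^{-1} \nabla f(x_i)$.

4. Set $i = i + 1$. If $\|\nabla f(x_i)\| < \epsilon$, stop. Otherwise, go to 2.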
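Below is a sketch of the multivariable iteration. The caller supplies `grad` and `hess`, and the names and tolerances are illustrative. Rather than computing $[\nabla^2 f(x_i)]^{-1}$ explicitly, the sketch solves the linear system $\nabla^2 f(x_i)\, p = \nabla f(x_i)$. This gives the same step and is the usual numerically preferable choice.

```python
import numpy as np

def newton_nd(grad, hess, x0, eps=1e-10, max_iter=50):
    """Newton's method for minimizing f in several variables."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        # Step 3 without forming the inverse: solve hess(x) p = grad(x), then x_{i+1} = x_i - p.
        # numpy.linalg.solve raises LinAlgError if the Hessian is singular.
        p = np.linalg.solve(hess(x), grad(x))
        x = x - p
        if np.linalg.norm(grad(x)) < eps:      # step 4: stopping test
            return x
    raise RuntimeError("no convergence within max_iter iterations")

# f(x) = e^{x[0]} - 2 x[0] + (x[1] - 3)^2, minimized at (ln 2, 3)
grad = lambda x: np.array([np.exp(x[0]) - 2, 2 * (x[1] - 3)])
hess = lambda x: np.array([[np.exp(x[0]), 0.0], [0.0, 2.0]])
print(newton_nd(grad, hess, [0.0, 0.0]))
```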

24

Illustration of the Newton Search Method

[Figure: in the $(x_1, x_2)$ plane, the contour $f(x) = f(x_0)$ and a Newton step from $x_0$ to $x_1 = x_0 - t(\nabla^2 f(x_0))^{-1} \nabla f(x_0)$]

- Key Points

- As in the one-dimensional case

- (Avoid points x where $\nabla^2 f(x)$ is singular, i.e., where $[\nabla^2 f(x)]^{-1}$ does not exist)

25

Theory of Constrained Optimization

26

Optimality Conditions

- Problem

- Minimize f(x), where x is a vector

- such that $c_i(x) \ge 0$ for $i = 1, 2, \ldots, m$

- KKT Conditions (Necessary Conditions, under a suitable constraint qualification)

- $\nabla f(x) = \sum_{i=1}^{m} \lambda_i \nabla c_i(x)$

- $c_i(x) \ge 0$, for $i = 1, 2, \ldots, m$

- $\lambda_i \ge 0$, for $i = 1, 2, \ldots, m$

- $\lambda_i c_i(x) = 0$, for $i = 1, 2, \ldots, m$

- Furthermore, these conditions are sufficient if (as we have assumed here) we are dealing with a convex programming problem
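The four conditions can be checked mechanically at a candidate pair $(x, \lambda)$. The sketch below assumes constraints in the form $c_i(x) \ge 0$, each supplied as a pair of callables for $c_i$ and $\nabla c_i$. The example problem is hypothetical. It is chosen so that the constraint is active at the optimum $x = (1.5, 0.5)$, with $\lambda_1 = 1$.

```python
import numpy as np

def is_kkt_point(grad_f, constraints, x, lam, tol=1e-8):
    """Check the KKT conditions for: minimize f(x) s.t. c_i(x) >= 0.

    constraints: list of (c_i, grad_c_i) callables; lam: multipliers lambda_i.
    """
    x = np.asarray(x, dtype=float)
    lam = np.asarray(lam, dtype=float)
    c_vals = np.array([c(x) for c, _ in constraints])
    c_grads = np.array([gc(x) for _, gc in constraints])        # shape (m, n)
    # grad f(x) = sum_i lambda_i grad c_i(x)
    stationarity = np.linalg.norm(grad_f(x) - lam @ c_grads) <= tol
    primal = np.all(c_vals >= -tol)                             # c_i(x) >= 0
    dual = np.all(lam >= -tol)                                  # lambda_i >= 0
    complementary = np.all(np.abs(lam * c_vals) <= tol)         # lambda_i c_i(x) = 0
    return bool(stationarity and primal and dual and complementary)

# Example: minimize (x1 - 2)^2 + (x2 - 1)^2  subject to  c(x) = 2 - x1 - x2 >= 0
grad_f = lambda x: np.array([2 * (x[0] - 2), 2 * (x[1] - 1)])
cons = [(lambda x: 2 - x[0] - x[1], lambda x: np.array([-1.0, -1.0]))]
print(is_kkt_point(grad_f, cons, [1.5, 0.5], [1.0]))   # True
print(is_kkt_point(grad_f, cons, [1.5, 0.5], [0.0]))   # False: stationarity fails
```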

27

Optimality Conditions (continued)

- If f(x) and $-c_i(x)$, $i = 1, \ldots, m$, are all convex functions, then the KKT conditions are also sufficient, that is, the KKT point is a global minimizer of f on the feasible set.

- Otherwise, the KKT conditions are analogous to the First Order Necessary Conditions for the unconstrained case

- They will find a local optimum if the program is locally convex

28

Optimality Conditions (equality case)

- Problem

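- Minimize f(x), where x is a vector

- Such that $h_i(x) = 0$ for $i = 1, 2, \ldots, m$

- KKT Conditions (Necessary Conditions)

- $\nabla f(x) = \sum_{i=1}^{m} \lambda_i \nabla h_i(x)$

- $h_i(x) = 0$ for $i = 1, 2, \ldots, m$

- Rewrite

- Minimize f(x)

- Such that $h_i(x) \ge 0$ for $i = 1, 2, \ldots, m$

- $h_i(x) \le 0$ (i.e., $-h_i(x) \ge 0$) for $i = 1, 2, \ldots, m$

- Each equality then contributes two multipliers $\lambda_i^+, \lambda_i^- \ge 0$, so $\lambda_i = \lambda_i^+ - \lambda_i^-$ is unrestricted in sign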
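For a quadratic objective with linear equality constraints, the conditions above are linear in $(x, \lambda)$ and can be solved in one step. The sketch assumes $f(x) = x^T Q x + c^T x$ and $h(x) = Ax - b$, so that $\nabla f(x) = 2Qx + c = A^T \lambda$ and $Ax = b$. The function name and the example are hypothetical.

```python
import numpy as np

def solve_equality_qp(Q, c, A, b):
    """Solve the KKT system of: minimize x^T Q x + c^T x subject to A x = b.

    Stationarity 2 Q x + c = A^T lam and feasibility A x = b, stacked as
        [ 2Q  -A^T ] [ x   ]   [ -c ]
        [ A    0   ] [ lam ] = [  b ]
    """
    Q = np.asarray(Q, dtype=float)
    c = np.asarray(c, dtype=float)
    A = np.atleast_2d(np.asarray(A, dtype=float))
    b = np.atleast_1d(np.asarray(b, dtype=float))
    n, m = Q.shape[0], A.shape[0]
    K = np.block([[2 * Q, -A.T], [A, np.zeros((m, m))]])
    sol = np.linalg.solve(K, np.concatenate([-c, b]))
    return sol[:n], sol[n:]

# minimize x1^2 + x2^2 subject to x1 + x2 = 1
x, lam = solve_equality_qp(np.eye(2), np.zeros(2), [[1.0, 1.0]], [1.0])
print(x, lam)   # x = (0.5, 0.5), lambda = 1
```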

29

KKT Conditions: Final Notes

- KKT conditions may not lead directly to a very efficient algorithm for solving NLPs. However, they do have a number of benefits:

- They give insight into what optimal solutions to NLPs look like

- They provide a way to set up and solve small problems

- They provide a method to check solutions to large problems

- The Lagrange multiplier values can be interpreted as shadow prices of the constraints
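The small worked example below is our own. It illustrates the shadow-price interpretation by perturbing the right-hand side b of an equality constraint and comparing the change in the optimal value with the multiplier. It uses the convention $\nabla f(x) = \sum_i \lambda_i \nabla h_i(x)$.

$$
\begin{aligned}
&\min\; x_1^2 + x_2^2 \quad \text{s.t.} \quad h(x) = x_1 + x_2 - b = 0,\\
&\nabla f(x) = \lambda \nabla h(x) \;\Rightarrow\; 2x_1 = 2x_2 = \lambda \;\Rightarrow\; x_1^* = x_2^* = \tfrac{b}{2},\quad \lambda^* = b,\\
&f^*(b) = \tfrac{b^2}{4} + \tfrac{b^2}{4} = \tfrac{b^2}{2} \;\Rightarrow\; \frac{d f^*}{d b} = b = \lambda^*.
\end{aligned}
$$

At $b = 1$, for example, relaxing the constraint by a small amount $\delta$ changes the optimal value by approximately $\lambda^* \delta = \delta$.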

30

Linearly Constrained Optimization Model

31

Optimality Conditions

- If f(x) is a convex function on the feasible set, then the KKT conditions are sufficient, that is, the KKT point is a global minimizer of f on the feasible set.

- The dual problem for LCP can be written as

32

Optimality Conditions

- If f(x) is homogeneous of degree 1, then

- The dual problem for LCP can be written as

33

Optimality Conditions

- Primal Feasibility

- Dual Feasibility

- Complementary Slackness

34

Quadratic Optimization Problem

The objective function is convex if and only if

matrix Q is positive semi-definite.

35

Quadratic Optimization

- Primal Feasibility

- Dual Feasibility

- Complementary Slackness

36

Algorithms for Linearly Constrained Convex

Programs

- Other algorithms for Quadratic Programs include

- Simplex-type methods

- Interior Point Methods for QP: such methods follow along the lines of Interior Point Methods for LPs to find polynomial-time algorithms for QPs.

- Other algorithms for Linearly Constrained Convex Programs include

- Interior Point Methods

- Frank-Wolfe, which approximates the objective function with a linear function

- SQP (Sequential Quadratic Programming), which approximates the objective function with a quadratic function
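The sketch below illustrates the Frank-Wolfe idea of minimizing a linear approximation of the objective at each step. For illustration we choose a simple linearly constrained feasible set, the probability simplex $\{x \ge 0, \sum_i x_i = 1\}$. On the simplex, the linear subproblem is solved by the vertex with the smallest gradient component. The step size $2/(k+2)$ is one standard choice, and the names are hypothetical.

```python
import numpy as np

def frank_wolfe_simplex(grad, x0, iters=2000):
    """Frank-Wolfe for minimizing a convex f over the probability simplex."""
    x = np.asarray(x0, dtype=float)
    for k in range(iters):
        g = grad(x)
        # Linearized subproblem: minimize g^T s over the simplex -> best vertex e_j
        s = np.zeros_like(x)
        s[np.argmin(g)] = 1.0
        gamma = 2.0 / (k + 2)                  # step size; keeps x a convex combination
        x = (1 - gamma) * x + gamma * s
    return x

# minimize ||x - p||^2 over the simplex, where p already lies in the simplex
p = np.array([0.2, 0.3, 0.5])
x = frank_wolfe_simplex(lambda z: 2 * (z - p), [1.0, 0.0, 0.0])
print(x)   # close to p
```

Every iterate stays feasible because it is a convex combination of feasible points. No projection is needed, which is the practical appeal of this approach on polyhedral sets.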

37

Sequential Unconstrained Algorithms

- Convert the original problem to a sequence of unconstrained problems

- It can be shown that, under suitable conditions, the solutions of these problems converge to a local optimum of the original problem

- Examples include penalty methods and barrier methods
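The one-variable worked example below is ours. It uses a quadratic penalty, which is one common penalty choice; the slides do not specify the penalty form. For $\min x$ subject to $x \ge 1$, penalize violation with weight $\mu > 0$:

$$
P(x;\mu) = x + \mu\,[\max(0,\,1-x)]^2, \qquad
\frac{dP}{dx} = 1 - 2\mu(1-x) = 0 \ \text{ for } x < 1
\;\Rightarrow\; x(\mu) = 1 - \frac{1}{2\mu}.
$$

For $x \ge 1$, $dP/dx = 1 > 0$, so the unconstrained minimizer is $x(\mu)$. It is slightly infeasible, and $x(\mu) \to x^* = 1$ as $\mu \to \infty$, so the sequence approaches the optimum from outside the feasible set.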

38

What to know about Interior-Point Methods

- Polynomial-time algorithms for Linear Programming (LP) and Quadratic Programming (QP)

- The best solve LPs or QPs in the order of

- $O(n^3 L)$ arithmetic operations, where n is the number of variables and L is the bit length of the input data

- Many are Sequential Unconstrained Algorithms (e.g., employing barrier methods)

39

Sequential Unconstrained Minimization Technique (SUMT)

- Minimize

- $P(x; r) = f(x) + r B(x)$

- where B(x) is a barrier function:

- B(x) is small when x is far from the boundary of the feasible set (FS)

- B(x) is large when x is close to the boundary of FS

- $B(x) \to \infty$ as x approaches the boundary of FS

- $r > 0$ is called the barrier parameter

- Solve the unconstrained problem for the given r

- Reduce r and repeat the above step.
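The loop above can be sketched in Python for a small hypothetical problem: minimize $(x_1-2)^2 + (x_2-1)^2$ subject to $c(x) = 2 - x_1 - x_2 \ge 0$, whose optimum is $(1.5, 0.5)$. The sketch uses the logarithmic barrier $B(x) = -\ln c(x)$. The inner unconstrained problem is solved by a damped Newton method, which halves the step until the trial point stays strictly feasible and P does not increase. The shrink factor 0.1 and the stopping value of r are our own choices.

```python
import numpy as np

# Hypothetical problem: minimize (x1-2)^2 + (x2-1)^2  s.t.  c(x) = 2 - x1 - x2 >= 0
GRAD_C = np.array([-1.0, -1.0])            # gradient of the linear constraint c

def c(x):
    return 2.0 - x[0] - x[1]

def P(x, r):
    """Barrier function P(x; r) = f(x) + r B(x) with B(x) = -ln c(x)."""
    return (x[0] - 2) ** 2 + (x[1] - 1) ** 2 - r * np.log(c(x))

def grad_P(x, r):
    grad_f = np.array([2 * (x[0] - 2), 2 * (x[1] - 1)])
    return grad_f - r * GRAD_C / c(x)

def hess_P(x, r):
    # c is linear, so the barrier contributes r * grad_c grad_c^T / c(x)^2
    return 2 * np.eye(2) + r * np.outer(GRAD_C, GRAD_C) / c(x) ** 2

def minimize_P(x, r, tol=1e-8, max_iter=100):
    """Damped Newton method for the unconstrained problem at a fixed r."""
    for _ in range(max_iter):
        g = grad_P(x, r)
        if np.linalg.norm(g) < tol:
            break
        step = np.linalg.solve(hess_P(x, r), g)
        t = 1.0
        # Halve t until the trial point is strictly feasible and P does not increase
        while c(x - t * step) <= 0 or P(x - t * step, r) > P(x, r):
            t *= 0.5
        x = x - t * step
    return x

def sumt(x0, r=1.0, shrink=0.1, r_min=1e-6):
    x = np.asarray(x0, dtype=float)        # x0 must satisfy c(x0) > 0
    while r > r_min:
        x = minimize_P(x, r)               # solve the unconstrained problem for this r
        r *= shrink                        # reduce r and repeat
    return x

print(sumt([0.0, 0.0]))   # approaches (1.5, 0.5) from inside the feasible set
```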

40

The Barrier Functions

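- Logarithmic Barrier (for constraints $c_i(x) \ge 0$): $B(x) = -\sum_{i=1}^{m} \ln c_i(x)$

- Reciprocal Barrier: $B(x) = \sum_{i=1}^{m} \frac{1}{c_i(x)}$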
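The small illustration of both barriers below is our own example, not necessarily the one on the original example slide. Consider $\min x$ subject to $c(x) = x - 1 \ge 0$:

$$
\begin{aligned}
\text{Logarithmic:}\quad & P(x;r) = x - r\ln(x-1), & P'(x) &= 1 - \frac{r}{x-1} = 0 &&\Rightarrow\; x(r) = 1 + r,\\
\text{Reciprocal:}\quad & P(x;r) = x + \frac{r}{x-1}, & P'(x) &= 1 - \frac{r}{(x-1)^2} = 0 &&\Rightarrow\; x(r) = 1 + \sqrt{r}.
\end{aligned}
$$

In both cases every $x(r)$ is strictly feasible, and $x(r) \to x^* = 1$ as $r \to 0$. The minimizers approach the optimum from inside the feasible set.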

41

Barrier Method Example

42

Comments About NLP Algorithms

- All of the algorithms we have discussed can terminate at any local optimum.

- Thus, at termination they are only guaranteed to have found an optimal solution in the case of convex programming.

- So how do we find a better solution in the case of nonconvex programming?

- Generally, we simply rerun the algorithm from a number of different starting points.
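Below is a minimal multistart sketch. It assumes a hypothetical nonconvex test function $f(x) = x^4 - 3x^2 + x$, which has two local minima: one near $x \approx 1.13$ and the global one near $x \approx -1.30$. Plain gradient descent with a fixed step serves as the local method, purely for brevity. Any of the local methods above could take its place.

```python
def local_descent(df, x, step=0.01, tol=1e-10, max_iter=100_000):
    """Fixed-step gradient descent: converges to a nearby local minimum."""
    for _ in range(max_iter):
        slope = df(x)
        if abs(slope) < tol:
            break
        x -= step * slope
    return x

def multistart(f, df, starts):
    """Run the local method from every starting point and keep the best result."""
    candidates = [local_descent(df, x0) for x0 in starts]
    return min(candidates, key=f)

f = lambda x: x ** 4 - 3 * x ** 2 + x
df = lambda x: 4 * x ** 3 - 6 * x + 1
starts = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
best = multistart(f, df, starts)
print(best, f(best))   # the global minimizer near x = -1.30
```

From any start in $[0.5, 2]$, the local method alone ends at the inferior minimum near 1.13. Comparing the candidates is what recovers the global one.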

43

Comments About Starting Points

- The starting point influences the local optimal solution obtained.

- The null (all-zero) starting point should be avoided.

- When possible, it is best to use starting values of approximately the same magnitude as the expected optimal values.

44

1. Parimutuel Market Microstructure

Optimality Condition?

45

2. Fisher Equilibrium

46

Individual Maximization

47

Aggregate Social Maximization