Demand Paging - PowerPoint PPT Presentation

1

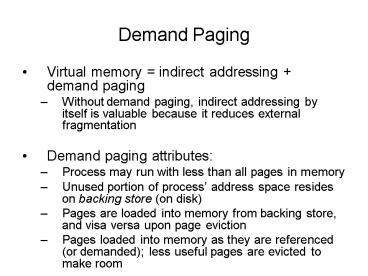

Demand Paging

- Virtual memory = indirect addressing + demand paging
  - Without demand paging, indirect addressing by itself is valuable because it reduces external fragmentation
- Demand paging attributes
  - Process may run with less than all pages in memory
  - Unused portion of process address space resides on backing store (on disk)
  - Pages are loaded into memory from backing store, and vice versa upon page eviction
  - Pages are loaded into memory as they are referenced (or demanded); less useful pages are evicted to make room

2

Demand Paging Solves

- Insufficient memory for a single process
- Insufficient memory for several processes during multiprogramming
- Relocation during execution via paging
- Efficient use of memory: only the active subset of process pages is in memory (unavoidable waste is internal to pages)
- Ease of programming: no need to be concerned with partition starting address and limits
- Protection: separate address spaces enforced by translation hardware
- Sharing: map the same physical page into more than one address space
- Less disk activity than with swapping alone
- Fast process start-up: a process can run with as little as one page
- Higher CPU utilization, since many partially loaded processes can be in memory simultaneously

3

Data Structures for Demand Paging

- Page tables: location of pages that are in memory
- Backing store map: location of pages that are not in memory
- Physical memory map (frame map)
  - Fast physical-to-virtual mapping (to invalidate translations for a page that is no longer in memory)
  - Allocation/use of page frames: in use or free
  - Number of page frames allotted to each process
- Cache of page tables: Translation Lookaside Buffer (TLB)
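
A toy Python model can make the division of labor concrete. In this sketch all names are hypothetical: `page_table` holds resident pages, `backing_store_map` holds the disk location of every valid page, and `frame_map` provides the physical-to-virtual lookup used to invalidate a victim's translation. FIFO stands in for whatever replacement policy is actually used; the TLB and dirty-page writeback are left out.

```python
from collections import deque


class DemandPager:
    """Toy model of the demand-paging data structures (illustrative only)."""

    def __init__(self, n_frames, backing_store):
        self.page_table = {}                          # vpn -> frame, resident pages only
        self.backing_store_map = dict(backing_store)  # vpn -> disk block, every valid page
        self.frame_map = [None] * n_frames            # frame -> vpn (physical-to-virtual)
        self.free_frames = deque(range(n_frames))     # frames not in use
        self.load_order = deque()                     # frames in load order (FIFO stand-in)
        self.faults = 0

    def access(self, vpn):
        """Translate vpn to a frame, loading the page on demand."""
        if vpn in self.page_table:
            return self.page_table[vpn]               # resident: no fault
        if vpn not in self.backing_store_map:
            raise KeyError(f"invalid virtual page {vpn}")
        self.faults += 1                              # page fault
        if self.free_frames:
            frame = self.free_frames.popleft()
        else:
            frame = self.load_order.popleft()         # evict the oldest frame
            victim = self.frame_map[frame]            # physical-to-virtual lookup
            del self.page_table[victim]               # invalidate the victim's translation
        # A real kernel would now read backing_store_map[vpn] from disk into frame.
        self.frame_map[frame] = vpn
        self.page_table[vpn] = frame
        self.load_order.append(frame)
        return frame


pager = DemandPager(2, {0: 100, 1: 101, 2: 102})
for vpn in [0, 1, 0, 2]:
    pager.access(vpn)
```

The last access faults on page 2 with no free frame left; the frame map identifies page 0 as the occupant of the victim frame, so only that translation is removed.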

4

Hardware Influence on Demand Paging

- TLB is special hardware
- Base/limit registers describe the kernel's and the current process's page table areas
- Size of PTE and meaning of certain bits in the PTE (present/absent, protection, referenced, modified bits)
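
The bits listed above are typically packed into one integer per PTE. The Python sketch below uses a hypothetical 32-bit layout chosen only for illustration (bit 0 present, bits 1-2 protection, bit 3 referenced, bit 4 modified, frame number from bit 12 up); real architectures each define their own layout.

```python
# Hypothetical PTE layout (illustrative assumption, not a real architecture):
#   bit 0      present/absent
#   bits 1-2   protection code
#   bit 3      referenced (R)
#   bit 4      modified (M)
#   bits 12+   page frame number
PRESENT = 1 << 0
PROT_SHIFT, PROT_MASK = 1, 0b11
REFERENCED = 1 << 3
MODIFIED = 1 << 4
FRAME_SHIFT = 12


def make_pte(frame, present=True, prot=0, referenced=False, modified=False):
    """Pack the fields into one integer."""
    pte = frame << FRAME_SHIFT
    if present:
        pte |= PRESENT
    pte |= (prot & PROT_MASK) << PROT_SHIFT
    if referenced:
        pte |= REFERENCED
    if modified:
        pte |= MODIFIED
    return pte


def decode_pte(pte):
    """Unpack the fields again."""
    return {
        "frame": pte >> FRAME_SHIFT,
        "present": bool(pte & PRESENT),
        "prot": (pte >> PROT_SHIFT) & PROT_MASK,
        "referenced": bool(pte & REFERENCED),
        "modified": bool(pte & MODIFIED),
    }


def clear_referenced(pte):
    """What the OS does when it periodically resets R."""
    return pte & ~REFERENCED


pte = make_pte(0x1234, prot=2, referenced=True, modified=True)
print(hex(pte))                                         # 0x123401d
print(decode_pte(clear_referenced(pte))["referenced"])  # False
```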

5

Virtual Memory Policies

- Fetch policy: which pages to load, and when?
- Page replacement policy: which pages to remove/overwrite, and when, in order to free up memory space?

6

Fetch Policy

- Demand paging: pages are loaded on demand, not in advance
  - Process starts up with none of its pages loaded in memory; page faults load pages into memory
  - Initially many page faults
  - Context switching may clear pages and will cause faults when the process runs again

7

Fetch Policy

- Pre-paging: load pages before the process runs
  - Needs a working set of pages to load on context switches
- Few systems use pure demand paging; most use pre-paging instead
- Thrashing: occurs when a program causes a page fault every few instructions
  - What can be done to alleviate the problem?

8

Page Replacement Policy

- Question of which page to evict when memory is full and a new page is demanded
- Goal: reduce the number of page faults
  - Why? A page fault is very expensive: fetching a page from disk takes 10 msec., and on a 100 MIPS machine 10 msec. is equal to 1 million instructions
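
Spelled out, the estimate on this slide is:

```latex
\[
\left(100 \times 10^{6}\ \frac{\text{instructions}}{\text{s}}\right)
\times \left(10 \times 10^{-3}\ \text{s}\right)
= 10^{6}\ \text{instructions}
\]
```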

9

Page Replacement Policy

- Demand paging is likely to cause a large number of page faults, especially when a process starts up
- Locality of reference saves demand paging
  - Locality of reference: the next reference is more likely to be near the last one
  - Reasons
    - Next instruction in the stream is close to the last
    - Small loops
    - Common subroutines
    - Locality in data layout (arrays, matrices of data, fields of a structure are contiguous)
    - Sequential processing of files and data
  - With locality of reference, a page that is brought in by one instruction is likely to contain the data required by the next instruction
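
A small Python experiment illustrates why locality matters: it counts LRU page faults for a sequential walk over an array and for the same number of randomly scattered references. The 4096-byte page size, 4-byte elements, 4 frames and the choice of LRU are all assumptions made for the illustration.

```python
import random
from collections import OrderedDict

PAGE_SIZE = 4096      # assumed page size in bytes
ELEMENT_SIZE = 4      # assumed array element size in bytes
N_FRAMES = 4          # assumed number of page frames


def lru_faults(addresses, n_frames=N_FRAMES):
    """Count page faults for a byte-address trace under LRU replacement."""
    frames = OrderedDict()                  # resident pages, least recently used first
    faults = 0
    for addr in addresses:
        page = addr // PAGE_SIZE
        if page in frames:
            frames.move_to_end(page)        # mark as most recently used
        else:
            faults += 1
            if len(frames) == n_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None
    return faults


array_bytes = 16 * PAGE_SIZE
sequential = list(range(0, array_bytes, ELEMENT_SIZE))   # 16384 references, 16 pages
rng = random.Random(42)
scattered = [rng.randrange(0, 4 * array_bytes) for _ in sequential]  # spread over 64 pages

seq_faults = lru_faults(sequential)    # one fault per page touched: 16
rand_faults = lru_faults(scattered)    # most references fault
```

The sequential walk faults once per page and then hits for the remaining 1023 references to that page, which is exactly the effect described in the last bullet.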

10

Page Replacement Policy

- Policy can be global (inter-process) or local (per-process)
- Policies
  - Optimal: replace the page that will be used farthest in the future (or never used again)
  - FIFO variants: might throw out important pages
    - Second chance, clock
  - LRU variants: difficult to implement exactly
    - NFU: crude approximation to LRU
    - Aging: efficient and good approximation to LRU
  - NRU: crude, simplistic version of LRU
  - Working set: expensive to implement
  - Working set clock: efficient and most widely used in practice
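
Because the optimal policy needs the future reference string, it can only be run offline, typically as a yardstick for the other policies. A minimal Python sketch, assuming the whole reference string is known in advance; the sample string is a common textbook example, not taken from these slides.

```python
def optimal_faults(refs, n_frames):
    """Count faults when the page evicted is the one used farthest in the future."""
    refs = list(refs)
    frames = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == n_frames:
            def next_use(p):
                # Index of p's next reference, or infinity if never used again.
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")
            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(optimal_faults(refs, 3))  # 9
```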

11

Page Replacement Policy NRU

- Not recently used: a poor man's LRU
- Uses 2 bits (can be implemented in HW)
  - R: page has been referenced
  - M: page has been modified
- OS clears the R bit periodically
  - R = 0 means pages are cold
  - R = 1 means pages are hot, or recently referenced
- When free pages are needed, sweep through all of memory and reclaim pages based on R and M classes
  - 00: not referenced, not modified
  - 01: not referenced, modified
  - 10: referenced, not modified
  - 11: referenced and modified
- Pages should be removed in what order?
- How can class 01 exist? If the page was modified, shouldn't it have been referenced?
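
A common textbook formulation of NRU treats the two bits as a class number 2R + M and removes a page chosen at random from the lowest-numbered nonempty class. The Python sketch below follows that formulation; the dict-of-tuples representation and the helper names are hypothetical. The `clear_r_bits` step shows how a page that was referenced and modified can drop into class 01.

```python
import random


def nru_class(r, m):
    """Class number 0-3 from the R and M bits: 00 -> 0, 01 -> 1, 10 -> 2, 11 -> 3."""
    return 2 * r + m


def clear_r_bits(pages):
    """Periodic OS action: reset every R bit, keep M."""
    return {page: (0, m) for page, (r, m) in pages.items()}


def nru_victim(pages, rng=random):
    """Pick a page at random from the lowest-numbered nonempty class.

    pages maps page -> (R, M).
    """
    lowest = min(nru_class(r, m) for r, m in pages.values())
    candidates = [p for p, (r, m) in pages.items() if nru_class(r, m) == lowest]
    return rng.choice(candidates)


pages = {"a": (1, 1), "b": (0, 1), "c": (1, 0)}
print(nru_victim(pages))                # b (class 01 is the lowest class present)
print(nru_victim(clear_r_bits(pages)))  # c (it falls into class 00 once R is cleared)
```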

12

Page Replacement Policy FIFO

- First-in, first-out
- Easy to implement, but does not consider page usage: pages that are frequently referenced might be removed
- Keep a linked list representing the order in which pages have been brought into memory
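
A minimal Python sketch of FIFO, with a `deque` playing the role of the linked list and a set for fast membership tests. On a reference string used in many textbooks (not from these slides) it incurs 15 faults with 3 frames.

```python
from collections import deque


def fifo_faults(refs, n_frames):
    """Count page faults under FIFO replacement."""
    queue = deque()      # load order, oldest page on the left
    resident = set()     # fast membership test
    faults = 0
    for page in refs:
        if page in resident:
            continue     # hit: FIFO does not record usage
        faults += 1
        if len(queue) == n_frames:
            resident.remove(queue.popleft())  # evict the oldest page
        queue.append(page)
        resident.add(page)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(fifo_faults(refs, 3))  # 15
```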

13

Page Replacement Policy Second Chance

- Variant of FIFO
- When free page frames are needed, examine pages in FIFO order starting from the beginning
  - If R = 0, reclaim the page
  - If R = 1, set R = 0 and place the page at the end of the FIFO list (hence, the second chance)
  - If not enough pages are reclaimed on the first pass, revert to pure FIFO on the second pass
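
A Python sketch of second chance, using a `deque` of `[page, R]` pairs as the FIFO list. Setting R = 1 when a page is first loaded is an assumption (the load counts as a reference). On the short sample string, page 2 is referenced again after the first sweep cleared its R bit, so it survives the eviction that plain FIFO would have made on the fault for page 5.

```python
from collections import deque


def second_chance_faults(refs, n_frames):
    """Count faults under second-chance replacement; also return resident pages."""
    queue = deque()   # entries [page, R], oldest on the left
    faults = 0
    for page in refs:
        for entry in queue:
            if entry[0] == page:
                entry[1] = 1          # hit: hardware would set R
                break
        else:
            faults += 1
            if len(queue) == n_frames:
                # Oldest page with R = 1 gets R cleared and moves to the tail.
                # After at most one full pass every R is 0, so this is pure FIFO.
                while queue[0][1] == 1:
                    entry = queue.popleft()
                    entry[1] = 0
                    queue.append(entry)
                queue.popleft()       # reclaim the first page found with R = 0
            queue.append([page, 1])   # assumption: the load counts as a reference
    return faults, [p for p, _ in queue]


print(second_chance_faults([1, 2, 3, 4, 2, 5], 3))  # (5, [4, 2, 5])
```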

14

Page Replacement Policy Clock

- Variant of FIFO; a better implementation of Second Chance
- Pages are arranged in a circular list; pages are never moved around the list
- When free page frames are needed, examine pages in clockwise order from the current position
  - If R = 0, reclaim the page
  - If R = 1, set R = 0 and advance the hand
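
The same behavior with a fixed circular array and a moving hand, as a Python sketch (again assuming a newly loaded page starts with R = 1). Nothing in the list moves; only `hand` changes, which is what makes the clock cheaper than shuffling list entries.

```python
def clock_faults(refs, n_frames):
    """Count faults under one-handed clock replacement; also return the frames."""
    frames = [None] * n_frames   # circular list of frames; pages never move
    r_bits = [0] * n_frames
    hand = 0
    faults = 0
    for page in refs:
        if page in frames:
            r_bits[frames.index(page)] = 1   # hit: hardware would set R
            continue
        faults += 1
        while r_bits[hand] == 1:             # R = 1: clear it and advance the hand
            r_bits[hand] = 0
            hand = (hand + 1) % n_frames
        frames[hand] = page                  # R = 0 (or empty frame): reclaim it
        r_bits[hand] = 1                     # assumption: loading counts as a reference
        hand = (hand + 1) % n_frames
    return faults, frames


print(clock_faults([1, 2, 3, 4, 2, 5], 3))  # (5, [4, 2, 5])
```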

15

Page Replacement Policy Clock

- 2-hand clock variant
  - One hand changes R from 1 to 0
  - Second hand reclaims pages
- How is this different from the 1-hand clock?
  - With 1 hand, the time between cycles of the hand is proportional to memory size
  - With 2 hands, the time between changing R and reclaiming pages can be varied dynamically, depending on the momentary need to reclaim page frames
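
A Python sketch of one sweep of a two-handed clock over a circular list of R bits. The parameter names (`front`, `spread`, `needed`) are hypothetical; references that arrive between the two hands are not simulated here, so a caller would set entries of `r_bits` between sweeps. A wide `spread` gives pages more time to be referenced again before the back hand arrives; a narrow one reclaims more aggressively.

```python
def two_hand_sweep(r_bits, front, spread, needed):
    """Advance both hands until `needed` frames are reclaimed or one revolution ends.

    The front hand clears R; the back hand trails it by `spread` frames and
    reclaims any frame whose R is still 0. r_bits is modified in place.
    Returns (reclaimed frame indices, new front position).
    """
    n = len(r_bits)
    reclaimed = []
    for _ in range(n):
        r_bits[front] = 0                    # front hand: change R from 1 to 0
        back = (front - spread) % n
        if r_bits[back] == 0:                # back hand: not referenced since cleared
            reclaimed.append(back)
        front = (front + 1) % n
        if len(reclaimed) == needed:
            break
    return reclaimed, front


r = [1] * 6
print(two_hand_sweep(r, front=0, spread=2, needed=3))  # ([0, 1, 2], 5)
```

With `spread=0` the back hand sits on the front hand, so every page it touches is reclaimed immediately; that is the degenerate end of the tuning range.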

16

Page Replacement Policy LRU

- Keep a linked list representing the order in which pages have been used
  - On potentially every reference, find the referenced page in the list and bring it to the front: very expensive!
- Can do LRU faster with special hardware
  - On each reference, store the time (or a counter) in the PTE; find the page with the oldest time (or lowest counter) to evict
  - NxN matrix algorithm
    - Initially set all NxN bits to 0
    - When page frame K is referenced:
      - Set all bits of row K to 1
      - Set all bits of column K to 0
    - The row with the lowest binary value is the LRU page
- But, such hardware is often not available!
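
The matrix algorithm can be simulated in software to see why it works: row i has a 1 in column j exactly when frame i was referenced more recently than frame j, so the least recently used frame has the smallest row value. A Python sketch; the class name and list-of-lists representation are illustrative.

```python
class MatrixLRU:
    """NxN bit-matrix LRU, simulated in software (the slide assumes hardware)."""

    def __init__(self, n):
        self.n = n
        self.bits = [[0] * n for _ in range(n)]   # initially all 0

    def reference(self, k):
        self.bits[k] = [1] * self.n               # set row k to all 1s
        for row in self.bits:
            row[k] = 0                            # then clear column k

    def row_value(self, i):
        value = 0
        for bit in self.bits[i]:                  # column 0 is the most significant bit
            value = (value << 1) | bit
        return value

    def lru_frame(self):
        return min(range(self.n), key=self.row_value)


lru = MatrixLRU(4)
for frame in [0, 1, 2, 3, 2, 1, 0, 3, 2, 3]:
    lru.reference(frame)
print(lru.lru_frame())  # 1
```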

17

Page Replacement Policy NFU and Aging

- Simulating LRU in software
- NFU
  - At each clock interrupt, add the R bit (0 or 1) to the counter associated with the page
  - Reclaim the page with the lowest counter
  - Disadvantage: no aging of pages
- Aging
  - Shift the counter to the right, then add R at the left: the most recent R bit is added as the most significant bit, thereby dominating the counter value
  - Reclaim the page with the lowest counter
  - Acceptable disadvantages
    - Cannot distinguish references early in a clock interval from later references, since the shift and add is done to all counters at once
    - With an 8-bit counter, there is memory of only 8 clock ticks back
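
A Python sketch contrasting the two counters on a hypothetical trace of R bits: page A was referenced only at the first tick, page B only at the last. NFU gives both the same count; aging ranks B as more recent. The 8-bit width matches the slide; the dict-based bookkeeping is an assumption.

```python
def nfu_tick(counters, r_bits):
    """NFU: add each page's R bit (0 or 1) to its counter."""
    for page, r in r_bits.items():
        counters[page] += r


def aging_tick(counters, r_bits, width=8):
    """Aging: shift right, then insert R as the most significant bit."""
    for page, r in r_bits.items():
        counters[page] = (counters[page] >> 1) | (r << (width - 1))


def victim(counters):
    return min(counters, key=counters.get)   # lowest counter is reclaimed


# R bits observed at three clock ticks (assumed reset after each tick).
ticks = [{"A": 1, "B": 0}, {"A": 0, "B": 0}, {"A": 0, "B": 1}]
nfu = {"A": 0, "B": 0}
aging = {"A": 0, "B": 0}
for r_bits in ticks:
    nfu_tick(nfu, r_bits)
    aging_tick(aging, r_bits)

print(nfu)            # {'A': 1, 'B': 1}
print(aging)          # {'A': 32, 'B': 128}
print(victim(aging))  # A
```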

18

Page Replacement Policy Working Set

- Working set: the set of pages a process is currently using
  - As a function of the k most recent memory references, w(k,t) is the working set at any time t
  - As a function of the past e msec. of execution time, w(e,t) is the set of pages the process referenced in the last e msec. of process execution time
- Replacement policy: find a page not in the working set and evict it
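
Both definitions translate directly into Python. In this sketch the trace formats are assumptions: `refs` is a list of page numbers indexed by reference number, and `trace` is a list of `(execution_time_msec, page)` pairs. Whether the window's lower edge is inclusive is also a choice made here for illustration.

```python
def working_set_by_count(refs, k, t):
    """w(k, t): distinct pages among the k most recent references up to index t."""
    return set(refs[max(0, t - k + 1): t + 1])


def working_set_by_time(trace, e, t):
    """w(e, t): pages referenced in the last e msec of execution time up to t.

    trace is a list of (execution_time_msec, page) pairs.
    """
    return {page for when, page in trace if t - e < when <= t}


refs = [1, 2, 1, 3, 4, 1]
print(working_set_by_count(refs, 3, 5))     # {1, 3, 4}
trace = [(0, "x"), (5, "y"), (12, "z"), (18, "y")]
print(working_set_by_time(trace, 10, 20))   # contains 'y' and 'z' (set order may vary)
```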

19

Page Replacement Policy Working Set Implementation

- If R = 0, the page is a candidate for removal
  - Calculate age = current time - time of last use
  - If age > threshold, the page is reclaimed
  - If age ≤ threshold, the page is still in the working set, but may be removed if it is the oldest page in the working set
- If R = 1, set time of last use = current time
  - The page was recently referenced, so it is in the working set
- If no page has R = 0, choose a random page for removal (one that requires no writeback)
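
A Python sketch of this scan. The `PageEntry` dataclass, the threshold name `tau`, and returning as soon as a page older than the threshold is found are illustrative choices; a fuller implementation might keep scanning so that the time of last use is updated for the remaining entries too.

```python
import random
from dataclasses import dataclass


@dataclass
class PageEntry:
    page: int
    r: bool          # referenced bit
    m: bool          # modified bit
    last_use: int    # time of last use


def ws_victim(entries, now, tau, rng=random):
    """Choose a page to evict; tau is the working-set threshold."""
    oldest = None
    for entry in entries:
        if entry.r:
            entry.last_use = now                 # recently referenced: in working set
            continue
        age = now - entry.last_use               # R = 0: candidate for removal
        if age > tau:
            return entry                         # outside the working set: reclaim
        if oldest is None or entry.last_use < oldest.last_use:
            oldest = entry                       # in working set; remember the oldest
    if oldest is not None:
        return oldest
    clean = [entry for entry in entries if not entry.m]   # every page has R = 1
    return rng.choice(clean or entries)          # prefer one that needs no writeback


pages = [PageEntry(0, True, False, 0), PageEntry(1, False, True, 10), PageEntry(2, False, False, 50)]
print(ws_victim(pages, now=100, tau=60).page)  # 1
```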

20

Page Replacement Policy Working Set Clock

- Use a circular list of page frames
- If R = 0 and age > threshold:
  - Page is clean (M = 0): reclaim it
  - Page is dirty (M = 1): schedule a write, but advance the hand to check other pages
- If R = 1, set R = 0 and advance the hand
- At the end of the 1st pass, if no page has been reclaimed:
  - If a write has been scheduled, keep moving the hand until the write is done and the page is clean; evict the 1st clean page
  - If no writes are scheduled, claim any clean page, even though it is in the working set
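
A Python sketch of one WSClock victim search. The `Frame` dataclass is hypothetical, and recording the time of last use when R = 1 mirrors the working-set scan. Real disk writes are asynchronous; here the scheduled writes are simply assumed to have completed by the end of the first pass.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    r: bool         # referenced bit
    m: bool         # modified (dirty) bit
    last_use: int   # time of last use


def wsclock_victim(frames, hand, now, tau):
    """Return (victim index, new hand position) for one WSClock search."""
    n = len(frames)
    scheduled = []                        # old dirty frames with a write scheduled
    for _ in range(n):                    # first pass
        f = frames[hand]
        if f.r:
            f.r = False                   # R = 1: set R = 0 and advance
            f.last_use = now              # also record the time of use
        elif now - f.last_use > tau:
            if not f.m:
                return hand, (hand + 1) % n   # old and clean: reclaim
            scheduled.append(f)           # old and dirty: schedule write, keep going
        hand = (hand + 1) % n
    for f in scheduled:
        f.m = False                       # stand-in: assume the writes have completed
    for _ in range(n):                    # evict the first clean page found,
        if not frames[hand].m:            # even if it is in the working set
            return hand, (hand + 1) % n
        hand = (hand + 1) % n
    return hand, (hand + 1) % n           # all dirty, none old: caller must write it first


frames = [Frame(True, False, 0), Frame(False, True, 0), Frame(False, False, 0)]
print(wsclock_victim(frames, hand=0, now=100, tau=50))  # (2, 0)
```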

21

Page Replacement Policy Summary

- FIFO: easy to implement, but does not account for page usage
- Because of locality of reference, LRU is a good approximation to optimal
- Naïve LRU has high overhead: some data structure must be updated on every reference!
- Practical algorithms
  - Approximate LRU
  - Maintain a list of free page frames for quick allocation
  - When the number of page frames in the free list falls below a low water mark (threshold), invoke the page replacement policy until the number of free page frames goes above the high water mark
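
The free-list policy reduces to a small loop. In this Python sketch, `evict` is a hypothetical placeholder for whichever replacement policy is in use (here simply "take the first in-use frame"); the watermark values are arbitrary.

```python
def replenish_free_list(free_list, in_use, low, high, evict):
    """Run the replacement policy when the free list drops below `low`.

    evict(in_use) must remove and return one frame from in_use.
    Returns the number of frames reclaimed.
    """
    if len(free_list) >= low:
        return 0                          # enough free frames: do nothing
    reclaimed = 0
    while len(free_list) <= high and in_use:
        free_list.append(evict(in_use))   # reclaim until above the high water mark
        reclaimed += 1
    return reclaimed


free = [0]
in_use = list(range(1, 10))
n = replenish_free_list(free, in_use, low=2, high=4, evict=lambda frames: frames.pop(0))
print(n, free)   # 4 [0, 1, 2, 3, 4]
```

The gap between the two marks keeps the policy from running on every allocation: once triggered, it reclaims a batch of frames and then stays idle until the free list drains below the low mark again.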

22

Page Replacement Policy Evaluation Metrics

- Results: page fault rate over some workload
- Speed: work that must be done on each reference
- Speed: work that must be done when a page is reclaimed
- Overhead: storage required by the algorithm's bookkeeping, and special hardware required