Review last week - PowerPoint PPT Presentation


1

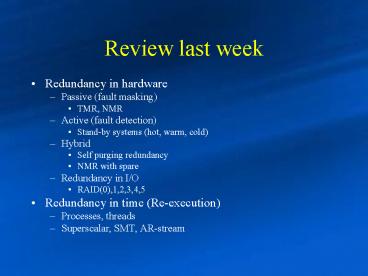

Review last week

- Redundancy in hardware
  - Passive (fault masking)
    - TMR, NMR
  - Active (fault detection)
    - Stand-by systems (hot, warm, cold)
  - Hybrid
    - Self-purging redundancy
    - NMR with spare
- Redundancy in I/O
  - RAID (0), 1, 2, 3, 4, 5
- Redundancy in time (re-execution)
  - Processes, threads
  - Superscalar, SMT, AR-stream

2

Today

- Reliability of networks

- Software reliability

- Redundancy in software

3

Reliability of Networks

- Based on graph theory: nodes represent computers, branches represent communication links
- The simplest model assumes that nodes do not fail but links do
- A path is a collection of branches that provides communication between a specific pair of nodes
- In general we are interested in knowing
  - $R_{all} = P(\text{all nodes are connected})$
  - $R_{st} = P(\text{nodes } s \text{ and } t \text{ are connected})$
  - $R_k = P(k \text{ nodes are connected})$

4

Reliability of Networks

Simple state space enumeration

[Figure: example network with nodes a, b, c, d connected by links 1 to 6]

If all links are identical, each link is up with probability $p$ and down with probability $q = 1 - p$
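State space enumeration can be sketched in a few lines. The idea is to list all $2^m$ up/down combinations of the $m$ links, keep the states in which the two terminals are still connected, and add up their probabilities $p^k q^{m-k}$, where $k$ is the number of links that are up. This is a minimal Python sketch that assumes independent, undirected links sharing one $p$. The topology in the figure is not recoverable here, so the usage line uses a hypothetical graph instead: two disjoint two-link paths from Alice to Bob, with an assumed $p = 0.9$. Each path then has reliability $0.9 \times 0.9 = .81$, the per-path value used in the next example.

```python
from itertools import product


def connected(up_links, s, t):
    """Depth-first search over the links that are up: is t reachable from s?"""
    adj = {}
    for u, v in up_links:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    seen, stack = {s}, [s]
    while stack:
        node = stack.pop()
        if node == t:
            return True
        for nxt in adj.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return False


def two_terminal_reliability(links, s, t, p):
    """R_st by enumerating all 2**m up/down states of the m links."""
    q = 1 - p
    m = len(links)
    r_st = 0.0
    for state in product((True, False), repeat=m):
        up = [link for link, is_up in zip(links, state) if is_up]
        if connected(up, s, t):
            # Probability of this exact state: p per up link, q per down link
            r_st += p ** len(up) * q ** (m - len(up))
    return r_st


# Hypothetical graph: two disjoint two-link paths from Alice to Bob
links = [("Alice", "X"), ("X", "Bob"), ("Alice", "Y"), ("Y", "Bob")]
print(round(two_terminal_reliability(links, "Alice", "Bob", 0.9), 4))  # 0.9639
```

Enumeration needs $2^m$ connectivity checks. That growth is the reason the graph reductions and factoring methods are worth using on larger networks.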

5

An example

For this graph it is easier to calculate the probability that all paths fail than to list the state space. Given a path set defined by reachability:

$$Rel_{Alice,Bob} = P(\text{any path from Alice to Bob works}) = 1 - P(\text{all paths failed}) = 1 - (1 - .81)(1 - .81) = .9639$$

6

Primary Graph Reductions

- We can perform graph reductions to simplify the calculation
  - Irrelevant: edges that do not contribute to any operational state are removed
  - Series: a sequence of edges that are all required simultaneously is combined with the product rule for independent events, $P(A \cap B) = P(A)P(B)$
  - Parallel: the network is operational if any of these edges is operational; combine with the addition rule, $P(A \cup B) = P(A) + P(B) - P(A \cap B)$

[Figure: sequential reduction followed by parallel reduction of the example graph]

$P(A \cup B) = .81 + .81 - (.81 \times .81) = .9639$
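The two reductions above translate into two small helpers. This is a sketch that assumes independent branches. The parallel helper uses the complement form $1 - \prod(1 - p_i)$, which for two branches is the same as $P(A) + P(B) - P(A)P(B)$. The second usage line assumes each .81 branch is two links with $p = 0.9$ in series. The slide does not say that; it is only for illustration.

```python
def series(*probs):
    """All branches must work (independent): P(A and B) = P(A) P(B)."""
    r = 1.0
    for p in probs:
        r *= p
    return r


def parallel(*probs):
    """At least one branch must work (independent): 1 - P(all fail).

    For two branches this equals P(A) + P(B) - P(A) P(B)."""
    all_fail = 1.0
    for p in probs:
        all_fail *= 1 - p
    return 1 - all_fail


# The slide's parallel step: two .81 branches
print(round(parallel(0.81, 0.81), 4))  # 0.9639
# Assumed: each .81 branch is two p = 0.9 links in series
print(round(parallel(series(0.9, 0.9), series(0.9, 0.9)), 4))  # 0.9639
```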

7

Reliability of Networks

- To improve network reliability we can increase p or add more branches to the network
- There are other, more efficient methods to compute network reliability
  - Factoring: aggregate nodes to reduce the size of the graph

[Figure: example network with nodes a, b, c, d connected by links 1 to 6]
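Factoring is commonly stated as the factoring (pivotal decomposition) theorem. Pick a link $e$. Then $R(G) = p\,R(G \text{ with } e \text{ contracted}) + q\,R(G \text{ with } e \text{ deleted})$. Contracting a link merges its two end nodes, which is the "aggregate nodes" step. The following is a minimal recursive Python sketch for two-terminal reliability, assuming independent links with a common $p$. The example graph is the same hypothetical Alice/Bob graph with $p = 0.9$, and the function names are illustrative.

```python
def reachable(links, s, t):
    """Is t reachable from s using the given (undirected) links?"""
    adj = {}
    for u, v in links:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    seen, stack = {s}, [s]
    while stack:
        node = stack.pop()
        if node == t:
            return True
        for nxt in adj.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return False


def factoring_reliability(links, s, t, p):
    """R_st = p * R(link contracted) + (1 - p) * R(link deleted), recursively."""
    if s == t:
        return 1.0  # terminals already merged: connected
    if not reachable(links, s, t):
        return 0.0  # no path left: disconnected
    (u, v), rest = links[0], links[1:]

    def merge(node):
        # Contracting link (u, v) aggregates node v into node u
        return u if node == v else node

    contracted = [(merge(a), merge(b)) for a, b in rest]
    contracted = [(a, b) for a, b in contracted if a != b]  # drop self-loops
    r_up = factoring_reliability(contracted, merge(s), merge(t), p)
    r_down = factoring_reliability(rest, s, t, p)
    return p * r_up + (1 - p) * r_down


links = [("Alice", "X"), ("X", "Bob"), ("Alice", "Y"), ("Y", "Bob")]
print(round(factoring_reliability(links, "Alice", "Bob", 0.9), 4))  # 0.9639
```

Each recursion removes one link, and the base cases stop early when the terminals merge or become disconnected. Practical tools also apply series/parallel reductions between factoring steps; this sketch leaves that out.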

8

SW and Reliability

- If software, hardware and human failures are independent, the reliability of a whole computer system can be described as $R_{system} = R_{hw} \cdot R_{sw} \cdot R_{operator}$

- Often this is not the case

9

SW and Reliability

- Is the nature of software probabilistic or deterministic?
  - If it were possible to test exhaustively on all possible inputs, software could be considered deterministic in nature
  - Since exhaustive testing is impossible, the probability (> 0) that a designer has not included some combination of inputs during testing makes the nature of software probabilistic
  - There is still controversy about this issue
- Software reliability: the probability of failure-free operation over a given time interval

10

Software Engineering

- Software can be designed in many different ways
  - Constraints are few compared to designing hardware (area, power, technology, performance)
- Systematic ways of developing software have been proposed
  - Software Engineering: a systematic approach to the analysis, design, implementation and maintenance of software

11

SW Development cycle

- Software development is a lengthy, complex process
- The software development process consists of
  - Requirements
  - Specifications
  - Design
  - Coding
  - Prototypes
  - Testing

12

SW Development cycle

- Models of software development
  - Waterfall
  - Spiral

13

Software Engineering/Errors

- Usually, in software engineering: software problem = software error = bug
- Software is normally developed by teams
  - New bugs may be produced when integrating the parts
- Coding is about 20% of the total development effort; testing may be 40%
- Software errors can occur at the
  - Specification and requirements stage
  - Design stage
  - Program logic
- Software does not wear out as hardware does, but it may become obsolete

14

Regression testing

- Regression testing
  - is any type of software testing that seeks to uncover regression bugs
  - Regression bugs occur whenever software functionality that previously worked as desired stops working, or no longer works in the way that was previously planned
  - Typically, regression bugs occur as an unintended consequence of program changes
  - Common methods of regression testing include re-running previously run tests and checking whether previously fixed faults have re-emerged
- Standard practice in SW development
  - Automated tools are available (e.g. JUnit)
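A regression test pins down behaviour that once broke, so the fault cannot silently re-emerge after later changes. The slide names JUnit. The following is a minimal sketch of the same idea with Python's standard `unittest` module. The `median` function and its imagined earlier bug (wrong result for even-length input) are hypothetical.

```python
import unittest


def median(values):
    """Hypothetical function under test (the fixed version)."""
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


class MedianRegressionTests(unittest.TestCase):
    def test_odd_length(self):
        self.assertEqual(median([3, 1, 2]), 2)

    def test_even_length_previously_fixed_bug(self):
        # Re-run on every change: guards the (hypothetical) earlier fix
        self.assertEqual(median([4, 1, 3, 2]), 2.5)


if __name__ == "__main__":
    unittest.main(argv=["regression_tests"], exit=False)
```

In practice the whole suite is re-run automatically on each change, for example from a build or CI job.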

15

Error Removal models

- t is the number of months of development time. At t = 0 the software contains $E_T$ errors; as testing progresses, $E_c(t)$ errors are corrected
  - $E_r(t) = E_T - E_c(t)$, with no new error generation

16

Error Removal models

- Constant error-removal rate of $\rho_0$ errors/month: $E_r(t) = E_T - \rho_0 t$

[Figure: errors remaining $E_r(t)$ and errors corrected $E_c(t)$ versus t, starting from $E_T$, for a constant error-removal rate $\rho_0$]

17

Error Removal models

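- Linearly decreasing error-removal rate

$$\frac{dE_r(t)}{dt} = -(K_1 - K_2 t)$$

Since $dE_r(t)/dt \to 0$ at $t = t_0$, $K_2 = K_1/t_0$, and we get

$$\frac{dE_r(t)}{dt} = -K_1\left(1 - \frac{t}{t_0}\right)$$

Integrating (with $K_1 = K$) gives $E_r(t) = C - Kt\left(1 - \frac{t}{2t_0}\right)$. At $t = 0$, $E_r(0) = E_T = C$; therefore

$$E_r(t) = E_T - Kt\left(1 - \frac{t}{2t_0}\right)$$

[Figure: errors remaining $E_r(t)$ and errors corrected $E_c(t)$ versus t, starting from $E_T$, for a linearly decreasing removal rate]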

18

Error Removal models

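- Exponentially decreasing error-removal rate. Predicts that finding errors becomes harder as the program is perfected

Assuming the error-detection rate is proportional to the number of remaining errors,

$$\frac{dE_d(t)}{dt} = \alpha E_r(t)$$

With $E_d(t) = E_c(t)$ and $E_r(t) = E_T - E_c(t)$ we get

$$\frac{dE_c(t)}{dt} = \alpha\left[E_T - E_c(t)\right]$$

Solving the differential equation gives $E_c(t) = Ae^{-\alpha t} + B$. The initial condition $E_c(0) = 0$ gives $A = -B$, and since $E_c \to E_T$ as $t \to \infty$, $B = E_T$, so $E_c(t) = E_T(1 - e^{-\alpha t})$. Substituting in $E_r(t) = E_T - E_c(t)$ gives

$$E_r(t) = E_T e^{-\alpha t}$$

[Figure: errors remaining $E_r(t)$ and errors corrected $E_c(t)$ versus t, starting from $E_T$, for an exponentially decreasing removal rate]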
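To compare the three error-removal models side by side, each closed form can be written as a small function. All parameter values below are hypothetical choices for illustration. $E_T = 130$ throughout. The constant model ($\rho_0 = 16.25$) and the linear model ($K = 32.5$, $t_0 = 8$) are tuned to reach zero errors at 8 months. $\alpha = 0.3206$ is the value from the exponential example later in the deck. The linear model is held flat after $t_0$. That clamp is a choice made for this sketch, because the formula alone would start adding errors back after $t_0$.

```python
import math


def remaining_constant(t, e_total, rho0):
    """Constant removal rate: E_r(t) = E_T - rho0 * t, floored at zero."""
    return max(e_total - rho0 * t, 0.0)


def remaining_linear(t, e_total, k, t0):
    """Linearly decreasing rate: E_r(t) = E_T - K t (1 - t / (2 t0)).

    The removal rate reaches zero at t0, so E_r is held constant after t0."""
    t = min(t, t0)
    return e_total - k * t * (1 - t / (2 * t0))


def remaining_exponential(t, e_total, alpha):
    """Exponentially decreasing rate: E_r(t) = E_T * exp(-alpha * t)."""
    return e_total * math.exp(-alpha * t)


# Hypothetical parameters (see lead-in); columns: month, constant, linear, exponential
for month in range(0, 11, 2):
    print(month,
          round(remaining_constant(month, 130, 16.25), 1),
          round(remaining_linear(month, 130, 32.5, 8), 1),
          round(remaining_exponential(month, 130, 0.3206), 1))
```

The exponential model never reaches zero, which matches its prediction that the last errors are the hardest to find.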

19

Software Reliability models

- A software reliability model should be used to answer questions such as
  - When should we stop testing?
  - Will the software work well and be considered reliable?
- These are issues that software management should address

20

Reliability models

- The constant error-removal rate is the simplest model. It assumes the failure rate is $z(t) = kE_r(t)$ with $E_r(t) = E_T - \rho_0 t$ (constant error removal), so the hazard function (failure rate) is $z(t) = k(E_T - \rho_0 t)$

[Figure: R(t) for fixed values of debugging time: $t_0$ (least debugging), $t_1 > t_0$ (medium debugging), $t_2 > t_1$ (most debugging)]

MTTF approaches infinity as the errors ($\beta$) approach 0! The model does not reflect intuition. How do we obtain $E_T$, $k$ and $\rho_0$?
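The blow-up of MTTF can be made concrete with a short sketch. To keep the two clocks apart, it writes debugging time as tau (months) and operating time as t (hours). During operation it assumes the standard constant-hazard relations $R(t) = e^{-zt}$ and $\text{MTTF} = 1/z$. The tau/t split, these relations, and all numeric values are assumptions of the sketch. The numbers are hypothetical: $E_T = 100$, $\rho_0 = 10$ errors/month, $k = 10^{-4}$ per error-hour.

```python
import math


def hazard(k, e_total, rho0, tau):
    """z = k * E_r(tau), with E_r(tau) = E_T - rho0 * tau (tau = debugging time)."""
    return k * max(e_total - rho0 * tau, 0.0)


def reliability(t, z):
    """Constant hazard z during operation: R(t) = exp(-z * t)."""
    return math.exp(-z * t)


def mttf(z):
    """MTTF = 1 / z for a constant hazard; unbounded once no errors remain."""
    return math.inf if z == 0 else 1.0 / z


# Hypothetical values: E_T = 100, rho0 = 10 errors/month, k = 1e-4
for tau in (0, 5, 9, 10):
    z = hazard(1e-4, 100, 10, tau)
    print(f"tau={tau:2d} months  R(100 h)={reliability(100, z):.3f}  MTTF={mttf(z):.0f} h")
```

At tau = 10 months the model says every error has been removed, so MTTF becomes infinite. This is the counter-intuitive result the slide points out.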

21

Reliability models

- Exponential error-removal rate (fixed values of debugging time)

[Figure: R(t) versus t after 6, 8 and 8.5 months of debugging, with R = .673 marked at t = 300 hrs]

Example: start with $E_T = 130$ errors at $t = 0$, decreasing to 10 errors at $t = 8$ months: $E_r(8) = 10 = 130e^{-8\alpha}$, so $\alpha = 0.3206$. If we require $R(t) = .673$ at $t = 300$ hrs after 8 months of debugging, then $R(300) = e^{-k \cdot 10 \cdot 300} = .673$ gives $k = .000132$

This is a better model since it agrees with intuition
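The arithmetic of the example can be checked directly. This sketch assumes the model $R(t) = e^{-kE_r(\tau)t}$ with $E_r(\tau) = E_T e^{-\alpha\tau}$, which is the form that yields the quoted $k$. The numbers 130, 10, 8 months, 300 hours and .673 come from the example. The loop then evaluates $R(300)$ for the three debugging times shown in the figure.

```python
import math

E_T = 130     # errors at t = 0
E_R_8 = 10    # errors remaining after 8 months of debugging

alpha = math.log(E_T / E_R_8) / 8        # from 10 = 130 e^(-8 alpha)
k = -math.log(0.673) / (E_R_8 * 300)     # from R(300) = e^(-k * 10 * 300) = .673


def reliability(t_hours, debug_months):
    """R(t) = exp(-k * E_r(tau) * t) with E_r(tau) = E_T * exp(-alpha * tau)."""
    e_r = E_T * math.exp(-alpha * debug_months)
    return math.exp(-k * e_r * t_hours)


print(round(alpha, 4))  # 0.3206
print(f"{k:.3g}")       # 0.000132
for months in (6, 8, 8.5):
    print(months, round(reliability(300, months), 3))
```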

22

Reliability models

- Constants used in a software reliability model (e.g. $E_T$, $k$, $\alpha$) can be estimated empirically by
  - Handbook data (history of bugs in a company)
  - Statistical estimation: plotting the error-removal rate versus t to see which model best fits the data
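For the exponential model, the statistical estimation reduces to a straight-line fit. The number of errors removed in month $i$ is $E_T e^{-\alpha i}(1 - e^{-\alpha})$, so the log of the monthly removal count is linear in $i$ with slope $-\alpha$. The following is a minimal least-squares sketch. The "observations" are synthetic and noise-free, generated from $E_T = 130$ and $\alpha = 0.3206$, only to show that the fit recovers them. Real bug counts would be noisy. A fit of the raw rate against t would correspond to the linear-rate model, and comparing the residuals of the candidate fits is one way to pick the better model.

```python
import math


def least_squares(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_exponential_model(monthly_removed):
    """monthly_removed[i] = errors corrected during month i (all counts > 0)."""
    months = list(range(len(monthly_removed)))
    slope, intercept = least_squares(months, [math.log(c) for c in monthly_removed])
    alpha = -slope
    e_total = math.exp(intercept) / (1 - math.exp(-alpha))
    return alpha, e_total


# Synthetic, noise-free data from E_T = 130, alpha = 0.3206 (illustration only)
true_et, true_alpha = 130, 0.3206
data = [true_et * (math.exp(-true_alpha * i) - math.exp(-true_alpha * (i + 1)))
        for i in range(8)]
alpha_hat, et_hat = fit_exponential_model(data)
print(round(alpha_hat, 4), round(et_hat, 1))
```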

23

Reliability models

- Other models have been proposed
  - Schneidewind
  - Generalized exponential model
  - Musa/Okumoto model
  - Littlewood/Verrall model

24

Reliability models

- Reliability models are understood/used in only a small sector of the software industry
- Criticisms
  - Some models assume the waterfall software development model (testing is done only in the final stages)
  - They do not take the complexity of a software system into account as a parameter in the reliability function
  - Every application and test case is treated uniformly

25

TMR and software

- If a TMR system is implemented using the same software in each component, we'll have $R_{sys} = R_{TMR} \cdot R_{software}$, assuming independence of HW/SW errors
- The system will be very dependent on software reliability; we need independent versions of the software to provide better reliability
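A quick calculation shows why identical software limits a TMR system. It uses the usual TMR expression for three independent modules with a perfect voter, $R_{TMR} = 3R^2 - 2R^3$. It then multiplies in $R_{software}$ once, because one software fault hits all three channels. The reliability values are hypothetical.

```python
def r_tmr(r):
    """TMR with a perfect voter: at least 2 of 3 independent modules must work."""
    return 3 * r**2 - 2 * r**3


def r_system(r_hw, r_sw):
    """Identical software on every channel (independent HW/SW errors assumed):
    R_sys = R_TMR(r_hw) * R_sw."""
    return r_tmr(r_hw) * r_sw


print(round(r_tmr(0.9), 3))            # 0.972
print(round(r_system(0.9, 0.95), 4))   # 0.9234
print(r_system(1.0, 0.95))             # 0.95
```

Even with perfect hardware, the system can never be more reliable than its single software version.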

26

Software analogies to TMR

- N-version programming
  - Idea: the same specification is implemented in a number of different versions by different teams. All versions compute simultaneously, and the majority output is selected using a voting system
  - Airbus commercial aircraft use this technique
- Recovery blocks
  - A number of explicitly different versions of the same specification are written and executed in sequence
  - An acceptance test is used to select the output to be transmitted

27

N-version programming

Assuming version independence
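The formula for this slide did not survive extraction. Under version independence, a common form is the majority ($m$-of-$N$) expression. Here $N$ versions are each correct with probability $R$, the voter is perfect, and the system succeeds when at least $\lfloor N/2 \rfloor + 1$ versions are correct. This sketch uses that assumed form. For $N = 3$ it reduces to the TMR expression $3R^2 - 2R^3$.

```python
from math import comb


def r_nversion(r, n):
    """Probability that a strict majority of n independent versions is correct,
    each correct with probability r; the voter is assumed perfect."""
    majority = n // 2 + 1
    return sum(comb(n, i) * r**i * (1 - r)**(n - i) for i in range(majority, n + 1))


for n in (1, 3, 5):
    print(n, round(r_nversion(0.9, n), 4))
```

More versions help only when each version is better than a coin flip. With $R < 0.5$, majority voting makes the system worse than a single version.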

28

Output comparison

- As in hardware systems, ideally the output comparator is a simple piece of software that uses a voting mechanism to select the output
- Note: in real-time systems, there may be a requirement that the results from the different versions are all produced within a certain time frame
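A simple majority voter can be written with `collections.Counter`. This sketch returns the output produced by a strict majority of versions, or `None` when there is none. The three "versions" are hypothetical stand-ins, one of them deliberately faulty. The sketch does not model the real-time deadline mentioned above.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the output produced by a strict majority of versions, or None."""
    if not outputs:
        return None
    value, count = Counter(outputs).most_common(1)[0]
    return value if count > len(outputs) // 2 else None


# Three hypothetical, independently written versions of "integer average"
def version_a(xs):
    return sum(xs) // len(xs)


def version_b(xs):
    return int(sum(xs) / len(xs))


def version_c(xs):
    return sorted(xs)[len(xs) // 2]  # faulty version: returns the median


xs = [1, 2, 9]
print(majority_vote([v(xs) for v in (version_a, version_b, version_c)]))  # 4
```

Exact-match voting only works for outputs that compare exactly, such as integers or strings. Floating-point results from diverse algorithms usually need inexact (tolerance-based) voting.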

29

N-version programming

- Different system versions are designed and implemented by different teams
- Assumes a low probability that the teams make the same mistakes

30

Design diversity

- Some problems with design diversity
  - Empirical evidence suggests that teams that are not culturally diverse tend to tackle problems in the same way
  - Different teams make the same mistakes. Some parts of an implementation are more difficult than others, so all teams tend to make mistakes in the same place
  - Specification errors
    - Errors will be reflected in all implementations
    - This can be addressed to some extent by using multiple specification representations

31

N-version programming

Considering common-mode dependencies in requirements and programming:

- $P_i = 1 -$ prob(independent-mode software fault)
- $P_{cmr} = 1 -$ prob(common-mode requirements fault): the effect of identical misinterpretation of the requirements
- $P_{cms} = 1 -$ prob(common-mode software fault): the effect of identical or equivalent incorrect designs for different portions of the problem

[Figure: model combining $p_{cmr}$, $p_{cms}$ and $p_i$]

32

NMR HW/SW

- Space shuttle computer system

[Figure: the input goes to four primary computers (Hardware A, B, C and D), all running Software A, whose outputs go to a voter that produces the output; a backup computer (Hardware E) runs a different program, Software B]

33

Fault Tolerant Techniques in Software

- Checkpoints and rollbacks
  - The application's state is saved at a checkpoint; rollback restarts execution from a previous checkpoint
- Recovery blocks
  - Alternates: secondary modules that perform the same function as a primary module; they are executed when the primary fails to pass an acceptance test

34

Fault Tolerant Techniques in Software

- Checkpoints and rollbacks
  - Reboot/restart (human): simplest but weakest, since information may be lost
  - Recovery (reboot initiated automatically by the system)
  - Journaling (stores all transactions)
  - Retry (immediately after error detection)
  - Checkpoints (state is saved only at specific points)
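Checkpointing combined with retry can be sketched as follows. State is saved only after each step succeeds. When a step raises an error, the partial update is rolled back to the last checkpoint and the step is retried a bounded number of times. The class and function names are hypothetical. Deep copies in memory stand in for saving state to stable storage, which is an assumption of the sketch.

```python
import copy


class CheckpointedState:
    """Hypothetical wrapper: live state plus the last saved checkpoint."""

    def __init__(self, state):
        self.state = state
        self._saved = copy.deepcopy(state)

    def checkpoint(self):
        # Save state only at specific points
        self._saved = copy.deepcopy(self.state)

    def rollback(self):
        # Discard everything done since the last checkpoint
        self.state = copy.deepcopy(self._saved)


def run_with_retry(app, steps, max_retries=2):
    """Run steps in order; on an error, roll back and retry that step."""
    for step in steps:
        for attempt in range(max_retries + 1):
            try:
                step(app.state)
                app.checkpoint()
                break
            except Exception:
                app.rollback()
                if attempt == max_retries:
                    raise  # permanent fault: give up after the last retry
    return app.state


calls = {"n": 0}


def flaky_add(state):
    state["total"] += 5  # partial update made before the fault
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("transient fault")


def double(state):
    state["total"] *= 2


print(run_with_retry(CheckpointedState({"total": 1}), [flaky_add, double]))  # {'total': 12}
```

Without the rollback, the first failed attempt would leave `total` at 6, and the retry would produce 11 before doubling.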

35

Recovery blocks

36

Recovery blocks

- Force different algorithms to be used in each version
  - The idea is to reduce the probability of common errors
- Designing the acceptance test is difficult, as it must be independent of the computation used
- This approach may not be applicable in real-time systems, because the redundant versions execute sequentially
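A recovery block can be sketched as a loop over the primary and its alternates. Each is run in sequence, and the first result that passes the acceptance test is returned. A crash counts as a failed version. The example is square root, with deliberately different algorithms: the library call, Newton's method, and bisection. The primary has an injected fault, and all function names are hypothetical. The acceptance test checks the result ($y^2 \approx x$), not how it was computed. Because these versions are pure functions, no state rollback is needed between tries. A real recovery block would restore the entry checkpoint before each alternate.

```python
import math


def recovery_block(x, versions, acceptance_test):
    """Run primary then alternates in sequence; return the first accepted result."""
    for version in versions:
        try:
            y = version(x)
        except Exception:
            continue  # a crash counts as a failed version
        if acceptance_test(x, y):
            return y
    raise RuntimeError("all versions failed the acceptance test")


def sqrt_primary(x):
    # Hypothetical primary with an injected fault for x >= 100
    return x / 10 if x >= 100 else math.sqrt(x)


def sqrt_newton(x):
    y = x if x > 1 else 1.0
    for _ in range(60):
        y = 0.5 * (y + x / y)
    return y


def sqrt_bisection(x):
    lo, hi = 0.0, max(1.0, x)
    for _ in range(200):
        mid = (lo + hi) / 2
        if mid * mid < x:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def accept(x, y):
    # Independent of the algorithm: only checks that y*y is close to x
    return y >= 0 and abs(y * y - x) <= 1e-9 * max(1.0, x)


versions = [sqrt_primary, sqrt_newton, sqrt_bisection]
print(recovery_block(49, versions, accept))   # 7.0 (primary passes)
print(recovery_block(400, versions, accept))  # primary fails the test; Newton's result is used
```

The sequential loop also shows the real-time concern above. In the worst case the caller waits for every version to run.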