Iterative Solution Methods - PowerPoint PPT Presentation

1

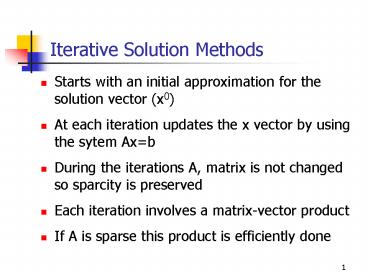

Iterative Solution Methods

- Starts with an initial approximation for the solution vector ($x^{(0)}$)

- At each iteration, updates the x vector by using the system $Ax = b$

- During the iterations the matrix A is not changed, so sparsity is preserved

- Each iteration involves a matrix-vector product

- If A is sparse, this product is done efficiently

2

Iterative solution procedure

- Write the system $Ax = b$ in an equivalent form $x = Ex + f$ (like $x = g(x)$ for fixed-point iteration)

- Starting with $x^{(0)}$, generate a sequence of approximations $x^{(k)}$ iteratively by $x^{(k+1)} = Ex^{(k)} + f$

- The representation of E and f depends on the type of method used

- For every method, E and f are obtained from A and b, but in a different way
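
As a minimal sketch of the generic update described on this slide, the loop below applies $x^{(k+1)} = Ex^{(k)} + f$ a fixed number of times. The function name `fixed_point_iterate` and the 2x2 values of `E` and `f` are hypothetical, chosen only so that the iteration contracts; they do not come from the slides.

```python
def fixed_point_iterate(E, f, x0, num_iters):
    """Apply x_(k+1) = E x_k + f for a fixed number of iterations."""
    n = len(f)
    x = list(x0)
    for _ in range(num_iters):
        # One matrix-vector product plus a vector addition per iteration.
        x = [sum(E[i][j] * x[j] for j in range(n)) + f[i] for i in range(n)]
    return x


# Hypothetical example: the entries of E are small, so the sequence converges
# to the fixed point of x = E x + f, which here is (12/7, 10/7).
E = [[0.0, 0.5],
     [0.25, 0.0]]
f = [1.0, 1.0]
x = fixed_point_iterate(E, f, [0.0, 0.0], 50)
```

Each method in the rest of the deck amounts to a different choice of E and f plugged into this same loop.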

3

Convergence

- As $k \to \infty$, the sequence $x^{(k)}$ converges to the solution vector under some conditions on the matrix E

- This imposes different conditions on the matrix A for different methods

- For the same matrix A, one method may converge while another diverges

- Therefore, for each method, the relation between A and E should be found to decide on convergence

4

Different Iterative methods

- Jacobi iteration

- Gauss-Seidel iteration

- Successive Over Relaxation (SOR)

- SOR is a method used to accelerate convergence

- Gauss-Seidel iteration is a special case of the SOR method

5

Jacobi iteration

6

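$x^{(k+1)} = Ex^{(k)} + f$ iteration for Jacobi method

- A can be written as $A = L + D + U$, where L, D, and U are the strictly lower triangular, diagonal, and strictly upper triangular parts of A (a splitting, not a decomposition)

$Ax = b \Rightarrow (L + D + U)x = b$

$Dx^{(k+1)} = -(L + U)x^{(k)} + b$

$x^{(k+1)} = -D^{-1}(L + U)x^{(k)} + D^{-1}b$, so $E = -D^{-1}(L + U)$ and $f = D^{-1}b$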
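
The Jacobi update above can be sketched component by component, without forming $D^{-1}$ or E explicitly. This is an illustrative sketch in plain Python lists; the function name `jacobi` and the 3x3 test system (built to have solution [1, 2, 3]) are assumptions, not the example from the slides.

```python
def jacobi(A, b, x0, num_iters):
    """Jacobi iteration: every component is computed from the previous iterate."""
    n = len(b)
    x = list(x0)
    for _ in range(num_iters):
        x_new = [0.0] * n
        for i in range(n):
            # (L + U) applied to the old iterate x^(k): all off-diagonal terms.
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            # Dividing by a_ii is the D^{-1} in the matrix form.
            x_new[i] = (b[i] - off_diag) / A[i][i]
        x = x_new
    return x


# Hypothetical diagonally dominant system whose exact solution is [1, 2, 3].
A = [[4.0, 1.0, 1.0],
     [1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [9.0, 17.0, 23.0]
x = jacobi(A, b, [0.0, 0.0, 0.0], 100)
```

Note that `x_new` is kept separate from `x`, so no component sees a value updated in the same sweep.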

7

Gauss-Seidel (GS) iteration

8

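$x^{(k+1)} = Ex^{(k)} + f$ iteration for Gauss-Seidel

$Ax = b \Rightarrow (L + D + U)x = b$

$(D + L)x^{(k+1)} = -Ux^{(k)} + b$

$x^{(k+1)} = -(D + L)^{-1}Ux^{(k)} + (D + L)^{-1}b$, so $E = -(D + L)^{-1}U$ and $f = (D + L)^{-1}b$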
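
In component form, solving with $(D + L)$ amounts to overwriting each entry of x as soon as it is computed, so later rows in the same sweep already use the new values. The sketch below illustrates this; the function name `gauss_seidel` and the test system are hypothetical, not taken from the slides.

```python
def gauss_seidel(A, b, x0, num_iters):
    """Gauss-Seidel iteration: components are updated in place within a sweep."""
    n = len(b)
    x = list(x0)
    for _ in range(num_iters):
        for i in range(n):
            # For j < i, x[j] already holds x^(k+1); for j > i it still holds x^(k).
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - off_diag) / A[i][i]
    return x


# Hypothetical diagonally dominant system whose exact solution is [1, 2, 3].
A = [[4.0, 1.0, 1.0],
     [1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [9.0, 17.0, 23.0]
x = gauss_seidel(A, b, [0.0, 0.0, 0.0], 50)
```

The only difference from a Jacobi sweep is the in-place write to `x[i]`.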

9

Comparison

- Gauss-Seidel iteration generally converges more rapidly than the Jacobi iteration, since it uses the latest updates

- But there are some cases where Jacobi iteration converges and Gauss-Seidel does not

- To accelerate the Gauss-Seidel method even further, the successive over relaxation method can be used

10

Successive Over Relaxation Method

- GS iteration can also be written as follows

Faster convergence

11

SOR

$1 < \omega < 2$: over relaxation (faster convergence)

$0 < \omega < 1$: under relaxation (slower convergence)

There is an optimum value for $\omega$. Find it by trial and error (often around 1.6, though the optimum depends on the problem)

12

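$x^{(k+1)} = Ex^{(k)} + f$ iteration for SOR

$Dx^{(k+1)} = (1 - \omega)Dx^{(k)} + \omega b - \omega Lx^{(k+1)} - \omega Ux^{(k)}$

$(D + \omega L)x^{(k+1)} = [(1 - \omega)D - \omega U]x^{(k)} + \omega b$

$E = (D + \omega L)^{-1}[(1 - \omega)D - \omega U]$ and $f = \omega (D + \omega L)^{-1}b$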
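
Read row by row, the SOR equation above blends the old value of each component with its Gauss-Seidel value, weighted by the relaxation factor. A minimal sketch follows, assuming the relaxation factor is passed as `omega`; the function name `sor`, the test system, and the choice `omega = 1.1` are illustrative assumptions.

```python
def sor(A, b, x0, omega, num_iters):
    """SOR iteration: relaxed Gauss-Seidel update with relaxation factor omega."""
    n = len(b)
    x = list(x0)
    for _ in range(num_iters):
        for i in range(n):
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            gs_value = (b[i] - off_diag) / A[i][i]  # plain Gauss-Seidel value
            # Weighted combination of the old value and the Gauss-Seidel value.
            x[i] = (1.0 - omega) * x[i] + omega * gs_value
    return x


# Hypothetical symmetric, diagonally dominant system with solution [1, 2, 3].
A = [[4.0, 1.0, 1.0],
     [1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [9.0, 17.0, 23.0]
x_gs = sor(A, b, [0.0, 0.0, 0.0], 1.0, 100)   # omega = 1 reduces to Gauss-Seidel
x_sor = sor(A, b, [0.0, 0.0, 0.0], 1.1, 100)  # mild over relaxation
```

Setting `omega = 1.0` makes the old value drop out, which is why Gauss-Seidel is a special case of SOR.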

13

The Conjugate Gradient Method

- Converges if A is a symmetric positive definite matrix

- Convergence is typically faster than with the Jacobi, Gauss-Seidel, and SOR iterations
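
The slide names the method without giving its steps. For orientation, here is a sketch of the standard textbook conjugate gradient algorithm, which is an assumption about what the deck intends rather than a transcription of it. The function name, the tolerance, the iteration cap, and the test system are all hypothetical.

```python
def conjugate_gradient(A, b, x0, tol=1e-10, max_iters=None):
    """Textbook conjugate gradient for a symmetric positive definite matrix A."""
    n = len(b)
    if max_iters is None:
        max_iters = 10 * n  # generous cap; exact arithmetic needs at most n steps

    def matvec(M, v):
        return [sum(M[i][j] * v[j] for j in range(n)) for i in range(n)]

    def dot(u, v):
        return sum(ui * vi for ui, vi in zip(u, v))

    x = list(x0)
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]  # residual b - A x
    p = list(r)                                         # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iters):
        if rs_old ** 0.5 < tol:  # stop when the residual 2-norm is small
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                     # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        # New direction: residual plus a multiple of the previous direction.
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


# Hypothetical symmetric positive definite system with solution [1, 2, 3].
A = [[4.0, 1.0, 1.0],
     [1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [9.0, 17.0, 23.0]
x = conjugate_gradient(A, b, [0.0, 0.0, 0.0])
```

Like the other methods in the deck, each iteration costs one matrix-vector product with A.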

14

Convergence of Iterative Methods

Define the solution vector as

Define an error vector as

15

Convergence of Iterative Methods

The iterative method will converge for any

initial iteration vector if the following

condition is satisfied

Convergence condition
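
The equations on these two slides did not survive extraction. As a hedged reconstruction, the standard argument runs as follows, where $x^*$ (the exact solution) and $e^{(k)}$ (the error at iteration k) are assumed notation rather than the slides' own symbols:

```latex
\begin{aligned}
x^* &= E x^* + f, \qquad e^{(k)} = x^{(k)} - x^* \\
e^{(k+1)} &= x^{(k+1)} - x^* = E\,\bigl(x^{(k)} - x^*\bigr) = E\, e^{(k)} \\
e^{(k)} &= E^{k} e^{(0)} \\
\lim_{k\to\infty} e^{(k)} = 0 \ \text{for every } e^{(0)}
  &\iff \lim_{k\to\infty} E^{k} = 0
\end{aligned}
```

The error after k iterations is therefore the k-th power of E applied to the initial error, which is why convergence depends only on E and not on the starting vector.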

16

Norm of a vector

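A vector norm should satisfy these conditions:

- $\|x\| \ge 0$, with $\|x\| = 0$ only if $x = 0$

- $\|\alpha x\| = |\alpha| \, \|x\|$ for any scalar $\alpha$

- $\|x + y\| \le \|x\| + \|y\|$

Vector norms can be defined in different forms as long as the norm definition satisfies these conditions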

17

Commonly used vector norms

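Sum norm or $l_1$ norm: $\|x\|_1 = \sum_{i=1}^{n} |x_i|$

Euclidean norm or $l_2$ norm: $\|x\|_2 = \sqrt{\sum_{i=1}^{n} x_i^2}$

Maximum norm or $l_\infty$ norm: $\|x\|_\infty = \max_i |x_i|$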
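
The three vector norms named on this slide can be sketched in a few lines. The function names and the sample vector are hypothetical.

```python
import math


def norm_1(x):
    """Sum norm: sum of absolute values."""
    return sum(abs(xi) for xi in x)


def norm_2(x):
    """Euclidean norm: square root of the sum of squares."""
    return math.sqrt(sum(xi * xi for xi in x))


def norm_inf(x):
    """Maximum norm: largest absolute value."""
    return max(abs(xi) for xi in x)


x = [3.0, -4.0, 0.0]  # hypothetical sample vector
n1, n2, ninf = norm_1(x), norm_2(x), norm_inf(x)
```

For this vector the values are 7, 5, and 4 respectively.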

18

Norm of a matrix

A matrix norm should satisfy these conditions

Important identity

19

Commonly used matrix norms

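Maximum column-sum norm or $l_1$ norm: $\|A\|_1 = \max_j \sum_{i} |a_{ij}|$

Spectral norm or $l_2$ norm: $\|A\|_2 = \sqrt{\lambda_{\max}(A^T A)}$

Maximum row-sum norm or $l_\infty$ norm: $\|A\|_\infty = \max_i \sum_{j} |a_{ij}|$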

20

Example

- Compute the $l_1$ and $l_\infty$ norms of the matrix
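
The matrix used on this slide is not recoverable from the transcript, so the sketch below uses a hypothetical 3x3 matrix to show how the maximum column-sum and maximum row-sum norms are computed. The function names are assumptions.

```python
def matrix_norm_1(A):
    """Maximum column-sum norm: largest sum of absolute values down a column."""
    rows, cols = len(A), len(A[0])
    return max(sum(abs(A[i][j]) for i in range(rows)) for j in range(cols))


def matrix_norm_inf(A):
    """Maximum row-sum norm: largest sum of absolute values along a row."""
    return max(sum(abs(a) for a in row) for row in A)


# Hypothetical matrix (not the one from the slide).
A = [[1.0, -2.0, 3.0],
     [4.0, 5.0, -6.0],
     [-7.0, 8.0, 9.0]]
norm1 = matrix_norm_1(A)      # column sums are 12, 15, 18
norminf = matrix_norm_inf(A)  # row sums are 6, 15, 24
```

Both norms need only additions and comparisons, which is why they are cheaper to evaluate than eigenvalues.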

21

Convergence condition

Express E in terms of the modal matrix P and $\Lambda$: $E = P \Lambda P^{-1}$

$\Lambda$: diagonal matrix with the eigenvalues of E on the diagonal

22

Sufficient condition for convergence

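If the magnitude of every eigenvalue of the iteration matrix E is less than 1, then the iteration is convergent

It is easier to compute the norm of a matrix than to compute its eigenvalues. Since $\max_i |\lambda_i| \le \|E\|$ for any induced matrix norm, $\|E\| < 1$ is a sufficient condition for convergence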

23

Convergence of Jacobi iteration

$E = -D^{-1}(L + U)$

24

Convergence of Jacobi iteration

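Evaluate the infinity (maximum row-sum) norm of E: $\|E\|_\infty = \max_i \sum_{j \ne i} \frac{|a_{ij}|}{|a_{ii}|}$

Diagonally dominant matrix: $|a_{ii}| > \sum_{j \ne i} |a_{ij}|$ for every row i, which gives $\|E\|_\infty < 1$

If A is a diagonally dominant matrix, then Jacobi iteration converges for any initial vector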
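
As an illustrative sketch of this check, the code below tests strict row diagonal dominance and evaluates the maximum row-sum norm of the Jacobi iteration matrix directly from the entries of A. The function names and both test matrices are hypothetical.

```python
def is_strictly_diagonally_dominant(A):
    """True if |a_ii| exceeds the sum of |a_ij| over j != i in every row."""
    n = len(A)
    return all(
        abs(A[i][i]) > sum(abs(A[i][j]) for j in range(n) if j != i)
        for i in range(n)
    )


def jacobi_norm_inf(A):
    """Infinity norm of E = -D^{-1}(L + U), computed without forming E."""
    n = len(A)
    return max(
        sum(abs(A[i][j]) for j in range(n) if j != i) / abs(A[i][i])
        for i in range(n)
    )


# Hypothetical examples: one dominant matrix and one that is not.
A_dominant = [[4.0, 1.0, 1.0],
              [1.0, 5.0, 2.0],
              [1.0, 2.0, 6.0]]
A_not_dominant = [[1.0, 2.0],
                  [3.0, 1.0]]
dominant_ok = is_strictly_diagonally_dominant(A_dominant)
dominant_norm = jacobi_norm_inf(A_dominant)          # max(2/4, 3/5, 3/6)
not_dominant_ok = is_strictly_diagonally_dominant(A_not_dominant)
not_dominant_norm = jacobi_norm_inf(A_not_dominant)  # max(2/1, 3/1)
```

A norm of at least 1 does not by itself prove divergence, since the norm test is only a sufficient condition.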

25

Stopping Criteria

- $Ax = b$

- At any iteration k, the residual term is $r^{(k)} = b - Ax^{(k)}$

- Check the norm of the residual term, $\|b - Ax^{(k)}\|$

- If it is less than a threshold value, stop
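
The stopping test can be sketched by wrapping a Jacobi sweep in a loop that checks the residual norm before each update. The choice of the maximum norm, the threshold `tol`, the cap `max_iters`, and the test system are assumptions made for illustration.

```python
def residual_norm_inf(A, b, x):
    """Maximum norm of the residual r = b - A x."""
    n = len(b)
    return max(abs(b[i] - sum(A[i][j] * x[j] for j in range(n))) for i in range(n))


def jacobi_until_converged(A, b, x0, tol=1e-8, max_iters=1000):
    """Run Jacobi sweeps until the residual norm drops below tol.

    Returns the iterate and the number of sweeps performed. If the cap
    max_iters is reached, the last iterate is returned without a final check.
    """
    n = len(b)
    x = list(x0)
    for k in range(max_iters):
        if residual_norm_inf(A, b, x) < tol:
            return x, k
        x = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
    return x, max_iters


# Hypothetical diagonally dominant system with solution [1, 2, 3].
A = [[4.0, 1.0, 1.0],
     [1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [9.0, 17.0, 23.0]
x, sweeps = jacobi_until_converged(A, b, [0.0, 0.0, 0.0])
```

The iteration cap guards against the diverging case, where the residual norm grows and the threshold is never reached.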

26

Example 1 (Jacobi Iteration)

Diagonally dominant matrix

27

Example 1 continued...

Matrix is diagonally dominant, Jacobi iterations

are converging

28

Example 2

The matrix is not diagonally dominant

29

Example 2 continued...

The residual term is increasing at each

iteration, so the iterations are diverging. Note

that the matrix is not diagonally dominant

30

Convergence of Gauss-Seidel iteration

- GS iteration converges for any initial vector if A is a diagonally dominant matrix

- GS iteration converges for any initial vector if A is a symmetric and positive definite matrix

- Matrix A is positive definite if $x^T A x > 0$ for every nonzero vector x

31

Positive Definite Matrices

- A symmetric matrix is positive definite if and only if all its eigenvalues are positive

- A symmetric diagonally dominant matrix with positive diagonal entries is positive definite

- If a symmetric matrix is positive definite:

- All the diagonal entries are positive

- The largest (in magnitude) element of the whole matrix must lie on the diagonal

32

Positive Definiteness Check

Positive definite: symmetric, diagonally dominant, and all diagonal entries are positive

33

Positive Definiteness Check

A decision cannot be made just by inspecting the matrix. The matrix is diagonally dominant and all diagonal entries are positive, but it is not symmetric. To decide, check whether $x^T A x > 0$ for every nonzero x, for example by checking that all eigenvalues of the symmetric part $(A + A^T)/2$ are positive
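
One way to automate such a check without an eigenvalue solver is to attempt a Cholesky factorization of the symmetric part $(A + A^T)/2$, which succeeds exactly when $x^T A x > 0$ for every nonzero x. This is a swapped-in technique, not the eigenvalue check the slide describes, and the function name and test matrices are hypothetical.

```python
import math


def is_positive_definite(A):
    """Check x^T A x > 0 for all nonzero x via Cholesky on (A + A^T) / 2."""
    n = len(A)
    # x^T A x depends only on the symmetric part of A.
    S = [[0.5 * (A[i][j] + A[j][i]) for j in range(n)] for i in range(n)]
    C = [[0.0] * n for _ in range(n)]  # lower triangular Cholesky factor
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(C[i][k] * C[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False  # a non-positive pivot means not positive definite
                C[i][i] = math.sqrt(s)
            else:
                C[i][j] = s / C[j][j]
    return True


# Hypothetical test matrices.
spd = [[4.0, 1.0, 1.0],
       [1.0, 5.0, 2.0],
       [1.0, 2.0, 6.0]]        # symmetric, diagonally dominant, positive diagonal
indefinite = [[1.0, 2.0],
              [2.0, 1.0]]      # symmetric with eigenvalues 3 and -1
nonsymmetric = [[1.0, 10.0],
                [0.0, 1.0]]    # eigenvalues 1 and 1, yet x = (1, -1) gives x^T A x = -8
results = [is_positive_definite(M) for M in (spd, indefinite, nonsymmetric)]
```

The third matrix shows why the eigenvalues of a nonsymmetric matrix alone do not settle the question.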

34

Example (Gauss-Seidel Iteration)

Diagonally dominant matrix

35

Example 1 continued...

When both Jacobi and Gauss-Seidel iterations converge, Gauss-Seidel typically converges faster

36

Convergence of SOR method

- If $0 < \omega < 2$, the SOR method converges for any initial vector if the matrix A is symmetric and positive definite

- If $\omega > 2$, the SOR method diverges

- If $0 < \omega < 1$, the SOR method converges, but the convergence rate is slower (deceleration) than that of the Gauss-Seidel method

37

Operation count

- The operation count for Gaussian elimination or LU decomposition was $O(n^3)$, order of $n^3$

- For iterative methods, the number of scalar multiplications is $O(n^2)$ at each iteration

- If the total number of iterations required for convergence is much less than n, then iterative methods are more efficient than direct methods

- Iterative methods are also well suited for sparse matrices
