Estimation of regression model

1

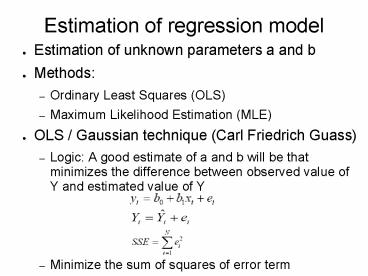

Estimation of regression model

- Estimation of unknown parameters a and b

- Methods

- Ordinary Least Squares (OLS)

- Maximum Likelihood Estimation (MLE)

- OLS / Gaussian technique (Carl Friedrich Gauss)

- Logic: a good estimate of a and b is one that minimizes the difference between the observed value of Y and the estimated value of Y

- Minimize the sum of squares of the error term

2

Error estimation

3

Why squared error?

- Because

- (1) the sum of the errors expressed as deviations would be zero, since positive and negative errors cancel, as with deviations from a mean, and

- (2) some feel that big errors should be more influential than small errors.

- Therefore, we wish to find the values of a and b that minimize the sum of squared errors.

- To do this, we use the calculus minimization technique.

- Take the first derivative and equate it to zero.

- Solve for the unknown. Ensure the second derivative is positive.

- In this case we must take partial derivatives, since we have two parameters (a and b) to worry about.
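
The minimization steps above can be sketched in a few lines. Setting both partial derivatives of the sum of squared errors to zero gives the closed-form solutions b = Σ(Xi − X̄)(Yi − Ȳ) / Σ(Xi − X̄)² and a = Ȳ − b·X̄. The function names `ols_fit` and `sse` and the sample data are illustrative assumptions, not part of the original slides.

```python
def ols_fit(x, y):
    """Return (a, b) that minimize sum((y_i - a - b*x_i)**2)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Cross-deviation and squared-deviation sums from the normal equations.
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx          # slope
    a = y_bar - b * x_bar  # intercept
    return a, b


def sse(a, b, x, y):
    """Sum of squared errors for a candidate line Y = a + b*X."""
    return sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))


# Hypothetical sample data, chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

a, b = ols_fit(x, y)
print("a =", a, "b =", b, "SSE =", sse(a, b, x, y))
```

Moving a or b slightly away from the fitted values and calling `sse` again should always give a larger sum, which is the second-derivative condition seen numerically.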

6

Properties of OLS estimators

- Point estimators

- SRF passes through sample means of Y and X

- Mean values of estimated and actual Y are equal

- Mean value of residuals is zero

- In deviation form (deviation from mean) SRF is written without intercept

- Residuals are uncorrelated with predicted Y

- Residuals are uncorrelated with X
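
These properties can be checked numerically on any fitted line. The sketch below uses hypothetical data and the closed-form two-variable OLS solution. The variable names and the tolerance `TOL` are assumptions made for illustration.

```python
# Hypothetical sample data, chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n = len(x)
TOL = 1e-9  # tolerance for floating-point comparisons

x_bar = sum(x) / n
y_bar = sum(y) / n
b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
    (xi - x_bar) ** 2 for xi in x
)
a = y_bar - b * x_bar

y_hat = [a + b * xi for xi in x]               # predicted Y
resid = [yi - fi for yi, fi in zip(y, y_hat)]  # residuals

checks = {
    "SRF passes through (X mean, Y mean)": abs((a + b * x_bar) - y_bar) < TOL,
    "mean of predicted Y equals mean of Y": abs(sum(y_hat) / n - y_bar) < TOL,
    "mean of residuals is zero": abs(sum(resid) / n) < TOL,
    "residuals uncorrelated with predicted Y": abs(
        sum(e * f for e, f in zip(resid, y_hat))
    ) < TOL,
    "residuals uncorrelated with X": abs(
        sum(e * xi for e, xi in zip(resid, x))
    ) < TOL,
}

for name, ok in checks.items():
    print(name, "->", ok)
```

Because the residuals have mean zero, a zero sum of cross-products is equivalent to zero sample covariance, which is why the last two checks test the raw sums.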

7

10 Assumptions of OLS method

- In order to make inferences from the estimates, we must make some assumptions about the way $Y_i$ was generated

- $Y_i = a + bX_i + u_i$, so assumptions regarding X and u are critical in the interpretation of estimates

- Regression model is linear in parameters

- X variable is nonstochastic

- Mean value of disturbance is zero: $E(u_i \mid X_i) = 0$

- Homoscedasticity of u: $\mathrm{var}(u_i \mid X_i) = \sigma^2$

- No autocorrelation between error terms: $\mathrm{Cov}(u_i, u_j \mid X_i, X_j) = 0$

- Zero covariance between X and u: $\mathrm{Cov}(u_i, X_i) = 0$

8

Assumptions continued..

- No. of observations N must be greater than the number of parameters to be estimated

- Variability essential in X values: $\mathrm{Var}(X) > 0$

- Regression model is correctly specified

- There is no multicollinearity (not applicable in a two variable model)

9

Precision of the OLS estimates

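- The standard error is an indicator of precision and is critical for inference

- Given the assumptions, the SEs of B1 and B2 are $se(B_1) = \sigma \sqrt{\sum X_i^2 / (n \sum x_i^2)}$ and $se(B_2) = \sigma / \sqrt{\sum x_i^2}$, where $x_i = X_i - \bar{X}$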
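
A minimal sketch of how these standard errors are usually computed in the two-variable model, assuming B1 is the intercept and B2 the slope (the common textbook convention, not confirmed by the slides). The unknown error variance σ² is replaced by its usual estimate RSS / (n − 2). The data and variable names are hypothetical.

```python
import math

# Hypothetical sample data, chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n = len(x)

x_bar = sum(x) / n
y_bar = sum(y) / n
sxx = sum((xi - x_bar) ** 2 for xi in x)  # sum of squared deviations of X
b2 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx  # slope
b1 = y_bar - b2 * x_bar                                              # intercept

# Residual sum of squares and the estimated error variance (n - 2 d.f.).
rss = sum((yi - b1 - b2 * xi) ** 2 for xi, yi in zip(x, y))
sigma2_hat = rss / (n - 2)

# Standard errors of the slope and the intercept.
se_b2 = math.sqrt(sigma2_hat / sxx)
se_b1 = math.sqrt(sigma2_hat * sum(xi ** 2 for xi in x) / (n * sxx))

print("se(B1) =", se_b1, "se(B2) =", se_b2)
```

Both standard errors shrink as the spread of X grows, which is one reason variability in X is listed among the assumptions.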

10

Gauss Markov theorem

- Given the assumptions of the CLRM, the least squares estimators, in the class of unbiased linear estimators, have the minimum variance, i.e., they are BLUE (best linear unbiased estimators)

- It is linear

- It is unbiased: the average or expected value of the estimator of B2 is equal to the true value of B2

- It has minimum variance and is thus an efficient estimator.
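
The theorem can be illustrated, though not proved, with a small simulation. The sketch below compares the OLS slope with another linear unbiased estimator, the slope through the first and last observations. The true parameters, the error distribution, the number of replications, and all names are assumptions chosen for illustration.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

TRUE_A, TRUE_B, SIGMA = 1.0, 2.0, 1.0      # hypothetical true model
x = [float(i) for i in range(1, 11)]       # fixed (nonstochastic) X values
x_bar = sum(x) / len(x)
sxx = sum((xi - x_bar) ** 2 for xi in x)


def ols_slope(y):
    """Least squares slope for the fixed X values."""
    y_bar = sum(y) / len(y)
    return sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx


def endpoint_slope(y):
    """Alternative linear unbiased estimator: line through the two end points."""
    return (y[-1] - y[0]) / (x[-1] - x[0])


ols_draws, end_draws = [], []
for _ in range(2000):
    # Draw a fresh sample of Y with zero-mean, constant-variance errors.
    y = [TRUE_A + TRUE_B * xi + random.gauss(0.0, SIGMA) for xi in x]
    ols_draws.append(ols_slope(y))
    end_draws.append(endpoint_slope(y))

print("OLS:      mean", statistics.mean(ols_draws),
      "variance", statistics.variance(ols_draws))
print("Endpoint: mean", statistics.mean(end_draws),
      "variance", statistics.variance(end_draws))
```

Both estimators should average close to the true slope, while the OLS draws should show the smaller variance, in line with the "best" in BLUE.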

11

Measure of Goodness of Fit

- Since we are interested in how well the model performs at reducing error, we need to develop a means of assessing that error reduction.

- Total SS = Explained SS + Residual SS

12

Coefficient of Determination ($r^2$)

- The $r^2$ (or R-square) is also called the coefficient of determination.

- It tells us what proportion of the variation in Y is explained by the model.

- $r^2$ lies between 0 and 1

- The closer to 1, the better the fit

- An $r^2$ of .95 means that 95% of the variation in Y is explained by the variation in X

- $r^2 = \mathrm{ESS}/\mathrm{TSS}$

- $r^2 = 1 - (\mathrm{RSS}/\mathrm{TSS})$

- The correlation coefficient r is the square root of $r^2$, taking the sign of the slope, and ranges between -1.0 and 1.0
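
A short sketch of the sums-of-squares decomposition and the two equivalent ways of computing the coefficient of determination. Here ESS means explained and RSS means residual sum of squares. The data and variable names are hypothetical.

```python
import math

# Hypothetical sample data, chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n = len(x)

x_bar = sum(x) / n
y_bar = sum(y) / n
b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
    (xi - x_bar) ** 2 for xi in x
)
a = y_bar - b * x_bar
y_hat = [a + b * xi for xi in x]

tss = sum((yi - y_bar) ** 2 for yi in y)               # total SS
ess = sum((fi - y_bar) ** 2 for fi in y_hat)           # explained SS
rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual SS

r2 = ess / tss
r2_alt = 1 - rss / tss

# The correlation coefficient takes the sign of the slope.
r = math.copysign(math.sqrt(r2), b)

print("TSS =", tss, "ESS =", ess, "RSS =", rss)
print("r2 =", r2, "r2 (alt) =", r2_alt, "r =", r)
```

The two expressions for the coefficient agree only because TSS = ESS + RSS, which holds for OLS with an intercept.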

13

Sums of Squares Confusion

- Note: Occasionally you will run across ESS and RSS, which generate confusion because the abbreviations are used inconsistently. ESS can mean error sum of squares, or estimated or explained sum of squares (SSQ). Likewise, RSS can mean residual SSQ or regression SSQ. Hence the use of USS for unexplained SSQ in this treatment.