17th October - PowerPoint PPT Presentation


1

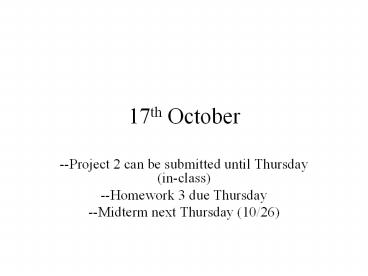

17th October

- Project 2 can be submitted until Thursday (in-class)
- Homework 3 due Thursday
- Midterm next Thursday (10/26)

2

What happens if there are multiple symptoms?

Conditional independence to the rescue! Suppose $P(TA, Catch \mid Cavity) = P(TA \mid Cavity)\, P(Catch \mid Cavity)$.

- A patient walked in and complained of a toothache.
- You assess $P(Cavity \mid Toothache)$.
- Now you probe the patient's mouth with that steel thingie, and it catches. How do we update our belief in Cavity?
- $P(Cavity \mid TA, Catch) = \frac{P(TA, Catch \mid Cavity)\, P(Cavity)}{P(TA, Catch)} = \alpha\, P(TA, Catch \mid Cavity)\, P(Cavity) = \alpha\, P(TA \mid Cavity)\, P(Catch \mid Cavity)\, P(Cavity)$
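The update above can be sketched numerically. This is a minimal Python sketch. The CPT numbers (P(Cavity) = 0.2, P(TA | Cavity) = 0.6 / 0.1, P(Catch | Cavity) = 0.9 / 0.2) are illustrative assumptions and do not come from the slides. Under conditional independence, each observed symptom contributes one factor, and alpha normalizes the result.

```python
from typing import Optional

# Illustrative CPT numbers (an assumption, not taken from the slides).
P_CAVITY = 0.2
P_TA_GIVEN_CAVITY = {True: 0.6, False: 0.1}     # P(TA = true | Cavity)
P_CATCH_GIVEN_CAVITY = {True: 0.9, False: 0.2}  # P(Catch = true | Cavity)


def likelihood(p_true: float, observed: Optional[bool]) -> float:
    """Factor contributed by one symptom; an unobserved symptom contributes 1."""
    if observed is None:
        return 1.0
    return p_true if observed else 1.0 - p_true


def posterior_cavity(ta: Optional[bool], catch: Optional[bool] = None) -> float:
    """P(Cavity = true | evidence), assuming TA and Catch are
    conditionally independent given Cavity."""
    scores = {}
    for cavity in (True, False):
        prior = P_CAVITY if cavity else 1.0 - P_CAVITY
        # P(TA | Cavity) * P(Catch | Cavity) * P(Cavity)
        scores[cavity] = (likelihood(P_TA_GIVEN_CAVITY[cavity], ta)
                          * likelihood(P_CATCH_GIVEN_CAVITY[cavity], catch)
                          * prior)
    alpha = 1.0 / sum(scores.values())  # alpha = 1 / P(evidence)
    return alpha * scores[True]


print(round(posterior_cavity(True), 3))        # toothache only: 0.6
print(round(posterior_cavity(True, True), 3))  # toothache, then the probe catches: 0.871
```

With these assumed numbers, the catch raises the belief in Cavity from 0.6 to about 0.871. The update never needs the joint $P(TA, Catch \mid Cavity)$ as a separate table.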

3

(No Transcript)

4

(No Transcript)

5

(No Transcript)

6

(No Transcript)

7

Conditional Independence Assertions

- We write $X \perp Y \mid Z$ to say that the set of variables X is conditionally independent of the set of variables Y given evidence on the set of variables Z (where X, Y, Z are subsets of the set of all random variables in the domain model).
- We saw that Bayes rule computations can exploit conditional independence assertions. Specifically, $X \perp Y \mid Z$ implies
  - $P(X, Y \mid Z) = P(X \mid Z)\, P(Y \mid Z)$
  - $P(X \mid Y, Z) = P(X \mid Z)$
  - $P(Y \mid X, Z) = P(Y \mid Z)$
- Idea: Why not write down all conditional independence assertions that hold in a domain?
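Given a full joint table, an assertion $X \perp Y \mid Z$ can be checked mechanically. This is a minimal Python sketch for Boolean variables. It tests the cross-multiplied form $P(x,y,z)P(z) = P(x,z)P(y,z)$, which is equivalent to $P(x, y \mid z) = P(x \mid z) P(y \mid z)$ whenever $P(z) > 0$. The joint used is the familiar textbook dentist table, included here only as an assumed example.

```python
from itertools import product

NAMES = ["Toothache", "Catch", "Cavity"]
# Full joint over (Toothache, Catch, Cavity); an assumed example table.
JOINT = {
    (True, True, True): 0.108, (True, False, True): 0.012,
    (False, True, True): 0.072, (False, False, True): 0.008,
    (True, True, False): 0.016, (True, False, False): 0.064,
    (False, True, False): 0.144, (False, False, False): 0.576,
}


def marginal(joint, names, keep):
    """Sum the joint over every variable not listed in `keep`."""
    idx = [names.index(v) for v in keep]
    out = {}
    for world, p in joint.items():
        key = tuple(world[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out


def is_cond_indep(joint, names, X, Y, Z, tol=1e-9):
    """Test X ⊥ Y | Z by checking P(x,y,z) P(z) == P(x,z) P(y,z)
    for every Boolean assignment to X, Y and Z."""
    pxyz = marginal(joint, names, X + Y + Z)
    pxz = marginal(joint, names, X + Z)
    pyz = marginal(joint, names, Y + Z)
    pz = marginal(joint, names, Z)
    for key in product((True, False), repeat=len(X) + len(Y) + len(Z)):
        x, y, z = key[:len(X)], key[len(X):len(X) + len(Y)], key[len(X) + len(Y):]
        lhs = pxyz.get(key, 0.0) * pz.get(z, 0.0)
        rhs = pxz.get(x + z, 0.0) * pyz.get(y + z, 0.0)
        if abs(lhs - rhs) > tol:
            return False
    return True


print(is_cond_indep(JOINT, NAMES, ["Toothache"], ["Catch"], ["Cavity"]))  # True
print(is_cond_indep(JOINT, NAMES, ["Toothache"], ["Catch"], []))          # False
```

The second call shows that Toothache and Catch are *not* marginally independent, even though they are independent given Cavity.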

8

Cond. Indep. Assertions (Contd)

- Idea: Why not write down all conditional independence assertions (CIA) $X \perp Y \mid Z$ that hold in a domain?
- Problem: There can be exponentially many conditional independence assertions that hold in a domain (recall that X, Y and Z are all subsets of the domain variables).
- Brilliant idea: Maybe we should implicitly specify the CIA by writing down the dependencies between variables using a graphical model.
  - A Bayes network is a way of doing just this.
  - The Bayes net is a directed acyclic graph whose nodes are random variables, and the immediate dependencies between variables are represented by directed arcs.
  - The topology of a Bayes network shows the inter-variable dependencies. Given the topology, there is a way of checking whether any conditional independence assertion holds in the network (the Bayes Ball algorithm and the D-Sep idea).
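To get a feel for "exponentially many", one can count the *candidate* statements $X \perp Y \mid Z$ over n variables. Each variable goes into X, Y, Z, or none of them. This minimal sketch counts ordered triples of pairwise disjoint sets with X and Y nonempty, and checks a closed form derived by inclusion-exclusion against brute force. It counts candidates, not the assertions that actually hold in a given domain.

```python
from itertools import product


def count_by_brute_force(n: int) -> int:
    """Assign each of n variables to X, Y, Z or '-' (unused) and keep
    the assignments where X and Y are both nonempty."""
    return sum(1 for labels in product("XYZ-", repeat=n)
               if "X" in labels and "Y" in labels)


def count_closed_form(n: int) -> int:
    # 4^n assignments, minus those with X empty or Y empty (inclusion-exclusion).
    return 4 ** n - 2 * 3 ** n + 2 ** n


for n in (3, 5, 10):
    print(n, count_closed_form(n))
```

The count grows roughly like $4^n$, which is why listing assertions explicitly does not scale.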

9

Cond. Indep. Assertions (contd)

- We said that a Bayes net implicitly represents a bunch of CIA.
- Qn: If I tell you exactly which CIA hold in a domain, can you give me a Bayes net that exactly models those and only those CIA?
  - Unfortunately, NO. (See the X, Y, Z, W blog example.)
  - This is why there is another type of graphical model, called undirected graphical models.
- In an undirected graphical model, also called a Markov random field, nodes correspond to random variables, and the immediate dependencies between variables are represented by undirected edges.
  - The CIA modeled by an undirected graphical model are different: $X \perp Y \mid Z$ in an undirected graph if every path from a node in X to a node in Y must pass through a node in Z (so if we remove the nodes in Z, then X and Y will be disconnected).
- Undirected models are good at representing soft constraints between random variables (e.g. the correlation between different pixels in an image), while directed models are good at representing causal influences between variables.
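The separation rule for undirected graphs amounts to a reachability test: delete the nodes in Z and check whether X can still reach Y. This is a minimal breadth-first-search sketch. It assumes X, Y and Z are disjoint. The 2x2 "image" graph and its pixel names are hypothetical.

```python
from collections import deque


def build_adjacency(edges):
    """Undirected adjacency sets from a list of (u, v) edges."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def separated(adj, X, Y, Z):
    """True if every path from X to Y passes through Z, i.e. X and Y are
    disconnected once the nodes in Z are removed (X, Y, Z assumed disjoint)."""
    removed = set(Z)
    frontier = deque(x for x in X if x not in removed)
    seen = set(frontier)
    while frontier:
        node = frontier.popleft()
        if node in Y:
            return False
        for nbr in adj.get(node, ()):
            if nbr not in seen and nbr not in removed:
                seen.add(nbr)
                frontier.append(nbr)
    return True


# Hypothetical 2x2 "image": each pixel is linked to its horizontal/vertical neighbours.
adj = build_adjacency([("p00", "p01"), ("p00", "p10"), ("p01", "p11"), ("p10", "p11")])
print(separated(adj, {"p00"}, {"p11"}, {"p01", "p10"}))  # True
print(separated(adj, {"p00"}, {"p11"}, {"p01"}))         # False (path via p10)
```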

10

CIA implicit in Bayes Nets

- So, what conditional independence assumptions are implicit in Bayes nets?
- Local Markov Assumption
  - A node N is independent of its non-descendants (including ancestors) given its immediate parents. (So if P are the immediate parents of N, and A is the set of ancestors, then $N \perp A \mid P$.)
  - (Equivalently) A node N is independent of all other nodes given its Markov blanket (parents, children, children's parents).
- Given this assumption, many other conditional independencies follow. For a full answer, we need to appeal to the D-Sep condition and/or Bayes Ball reachability.
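Both sets used by the local Markov assumption can be read off the topology. This is a minimal sketch with the DAG stored as a `parents` dict. The node names follow the Pearl burglary/earthquake alarm network, which is assumed here as the example. For instance, the local Markov assumption then says JohnCalls is independent of {Burglary, Earthquake, MaryCalls} given Alarm.

```python
# Parents of each node in the Pearl burglary/earthquake alarm network.
PARENTS = {
    "Burglary": [], "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"],
}


def children_of(parents):
    """Invert the parent lists into child sets."""
    kids = {v: set() for v in parents}
    for v, ps in parents.items():
        for p in ps:
            kids[p].add(v)
    return kids


def descendants(parents, node):
    """All nodes reachable from `node` by following arcs forward."""
    kids = children_of(parents)
    stack, seen = [node], set()
    while stack:
        for c in kids[stack.pop()]:
            if c not in seen:
                seen.add(c)
                stack.append(c)
    return seen


def non_descendants(parents, node):
    """Everything except the node itself and its descendants."""
    return set(parents) - descendants(parents, node) - {node}


def markov_blanket(parents, node):
    """Parents, children, and the children's other parents."""
    kids = children_of(parents)[node]
    blanket = set(parents[node]) | kids
    for c in kids:
        blanket |= set(parents[c])
    blanket.discard(node)
    return blanket


print(sorted(markov_blanket(PARENTS, "Burglary")))    # ['Alarm', 'Earthquake']
print(sorted(non_descendants(PARENTS, "JohnCalls")))  # ['Alarm', 'Burglary', 'Earthquake', 'MaryCalls']
```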

11

Topological Semantics

Independence from every node holds given the Markov blanket.

Independence from non-descendants holds given just the parents.

These two conditions are equivalent. Many other conditional independence assertions follow from these.

12

Directly using Joint Distribution
- Takes $O(2^n)$ for most natural queries of type $P(D \mid Evidence)$.
- NEEDS $O(2^n)$ probabilities as input.
- Probabilities are of type $P(w_k)$, where $w_k$ is a world.

Directly using Bayes rule
- Can take much less than $O(2^n)$ time for most natural queries of type $P(D \mid Evidence)$.
- STILL NEEDS $O(2^n)$ probabilities as input.
- Probabilities are of type $P(X_1..X_n \mid Y)$.

Using Bayes rule with Bayes nets
- Can take much less than $O(2^n)$ time for most natural queries of type $P(D \mid Evidence)$.
- Can get by with anywhere between $O(n)$ and $O(2^n)$ probabilities, depending on the conditional independences that hold.
- Probabilities are of type $P(X_1..X_n \mid Y)$.
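The first column's cost comes from summing over worlds. This minimal sketch answers $P(D \mid Evidence)$ directly from a full joint table, visiting every one of the $2^n$ entries. The three-variable dentist table is an assumed example, not data from the slides.

```python
NAMES = ["Toothache", "Catch", "Cavity"]
# Full joint P(w_k) over (Toothache, Catch, Cavity); an assumed example table.
JOINT = {
    (True, True, True): 0.108, (True, False, True): 0.012,
    (False, True, True): 0.072, (False, False, True): 0.008,
    (True, True, False): 0.016, (True, False, False): 0.064,
    (False, True, False): 0.144, (False, False, False): 0.576,
}


def query(joint, names, target, evidence):
    """P(target = true | evidence) straight from the full joint.
    Every one of the 2^n worlds is visited, hence O(2^n) time."""
    t = names.index(target)
    ev = [(names.index(v), val) for v, val in evidence.items()]
    num = den = 0.0
    for world, p in joint.items():
        if all(world[i] == val for i, val in ev):
            den += p          # P(evidence)
            if world[t]:
                num += p      # P(target, evidence)
    return num / den


print(round(query(JOINT, NAMES, "Cavity", {"Toothache": True}), 3))                # 0.6
print(round(query(JOINT, NAMES, "Cavity", {"Toothache": True, "Catch": True}), 3))  # 0.871
```

Both the table size and the loop are exponential in the number of variables. That is the cost the Bayes-net representation tries to avoid.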

13

Review

14

Review

15

Bayes Ball Alg
- Shade the evidence nodes.
- Put one ball each at the X nodes.
- See if any of them can find their way to any of the Y nodes.
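The ball-passing idea can be sketched as a reachability search over (node, direction) pairs. A ball arrives at a node either "up" (from a child) or "down" (from a parent). It passes through unshaded chains and forks. It bounces back up at a collider only when that collider is shaded or has a shaded descendant. This minimal sketch follows one common formulation of that search and is not necessarily the exact rule set presented in class. The Pearl alarm network structure is assumed as the example.

```python
# Pearl alarm network: parents of each node (assumed example structure).
PARENTS = {
    "Burglary": [], "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"],
}


def reachable(parents, sources, shaded):
    """Nodes a ball started at `sources` can reach when `shaded` is observed."""
    kids = {v: [] for v in parents}
    for v, ps in parents.items():
        for p in ps:
            kids[p].append(v)
    # Shaded nodes plus their ancestors: a collider passes the ball iff it is in here.
    anc = set()
    stack = list(shaded)
    while stack:
        v = stack.pop()
        if v not in anc:
            anc.add(v)
            stack.extend(parents[v])
    to_visit = [(s, "up") for s in sources]
    visited, reached = set(), set()
    while to_visit:
        node, direction = to_visit.pop()
        if (node, direction) in visited:
            continue
        visited.add((node, direction))
        if node not in shaded:
            reached.add(node)
        if direction == "up" and node not in shaded:
            # Unshaded node entered from a child: pass to parents and children.
            to_visit.extend((p, "up") for p in parents[node])
            to_visit.extend((c, "down") for c in kids[node])
        elif direction == "down":
            if node not in shaded:
                # Chain through an unshaded node keeps going down.
                to_visit.extend((c, "down") for c in kids[node])
            if node in anc:
                # Collider that is shaded or has a shaded descendant: bounce up.
                to_visit.extend((p, "up") for p in parents[node])
    return reached


def d_separated(parents, X, Y, Z):
    """X ⊥ Y | Z iff no ball started at X reaches any node of Y."""
    return not (reachable(parents, X, set(Z)) & set(Y))


print(d_separated(PARENTS, {"Burglary"}, {"Earthquake"}, set()))      # True
print(d_separated(PARENTS, {"Burglary"}, {"Earthquake"}, {"Alarm"}))  # False (explaining away)
```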

16

(No Transcript)

17

(No Transcript)

18

(No Transcript)

19

(No Transcript)

20

(No Transcript)

21

(No Transcript)

22

10/19

23

Happy Deepavali!

10/19

4th Nov, 2002.

24

Blog Questions

- You have been given the topology of a Bayes network, but haven't yet gotten the conditional probability tables (to be concrete, you may think of the Pearl alarm-earthquake scenario Bayes net). Your friend shows up and says he has the joint distribution all ready for you. You don't quite trust your friend and think he is making these numbers up. Is there any way you can prove that your friend's joint distribution is not correct?
- Answer
  - Check to see if the joint distribution given by your friend satisfies all the conditional independence assumptions.
  - For example, in the Pearl network, compute $P(J \mid A, M, B)$ and $P(J \mid A)$. These two numbers should come out the same!
  - Notice that your friend could pass all the conditional independence assertions and still be cheating regarding the probabilities.
    - For example, he filled up the CPTs of the network with made-up numbers (e.g. $P(B) = 0.9$, $P(E) = 0.7$, etc.) and computed the joint probability by multiplying the CPTs. This will satisfy all the conditional independence assertions!
  - The main point to understand here is that the network topology does put restrictions on the joint distribution.
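The check in the answer can be run directly on a table. This minimal sketch builds an "honest" joint by multiplying made-up CPTs. $P(B) = 0.9$ and $P(E) = 0.7$ are taken from the answer's example, and every other entry is an arbitrary assumption. It also builds a "cheating" joint by shifting a little probability mass between two worlds that differ only in J. Both tables sum to 1, but only the first passes $P(J \mid A, M, B) = P(J \mid A)$.

```python
from itertools import product

NAMES = ("B", "E", "A", "J", "M")

# Made-up CPTs: P(B) and P(E) follow the slide's example; the rest are assumptions.
P_B, P_E = 0.9, 0.7
P_A = {(True, True): 0.95, (True, False): 0.94, (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.90, False: 0.05}   # P(J = true | A)
P_M = {True: 0.70, False: 0.01}   # P(M = true | A)


def bern(p_true, value):
    return p_true if value else 1.0 - p_true


def joint_from_cpts():
    """Joint obtained by multiplying the CPTs of the alarm network."""
    return {(b, e, a, j, m): bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
            * bern(P_J[a], j) * bern(P_M[a], m)
            for b, e, a, j, m in product((True, False), repeat=5)}


def cond(joint, query, given):
    """P(query | given), where both are dicts mapping variable names to values."""
    def mass(assignment):
        return sum(p for world, p in joint.items()
                   if all(world[NAMES.index(k)] == v for k, v in assignment.items()))
    return mass({**given, **query}) / mass(given)


def passes_check(joint, tol=1e-9):
    """One local-Markov test: P(J | A, M, B) must equal P(J | A)."""
    lhs = cond(joint, {"J": True}, {"A": True, "M": True, "B": True})
    rhs = cond(joint, {"J": True}, {"A": True})
    return abs(lhs - rhs) < tol


honest = joint_from_cpts()
# "Cheating" table: move 0.01 of mass between two worlds that differ only in J.
cheat = dict(honest)
cheat[(True, True, True, False, True)] -= 0.01
cheat[(True, True, True, True, True)] += 0.01

print(passes_check(honest), passes_check(cheat))  # True False
```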

25

Blog Questions (2)

- Continuing bad friends: in the question above, suppose a second friend comes along and says that he can give you the conditional probabilities that you want to complete the specification of your Bayes net. You ask him for a CPT entry, and pat comes a response: some number between 0 and 1. This friend is well-meaning, but you are worried that the numbers he is giving may lead to some sort of inconsistent joint probability distribution. Is your worry justified (i.e., can your friend give you numbers that lead to an inconsistency)? (To understand "inconsistency", consider someone who insists on giving you $P(A)$, $P(B)$, $P(A \wedge B)$ as well as $P(A \vee B)$, and they wind up not satisfying $P(A \vee B) = P(A) + P(B) - P(A \wedge B)$; or alternately, they insist on giving you $P(A \mid B)$, $P(B \mid A)$, $P(A)$ and $P(B)$, and the four numbers don't satisfy Bayes rule.)
- Answer: No. As long as we only ask the friend to fill up the CPTs in the Bayes network, there is no way the numbers won't make up a consistent joint probability distribution.
  - This should be seen as a feature.
- Also, we had a digression about personal probabilities.
  - John may be an optimist and believe that $P(burglary) = 0.01$, and Tom may be a pessimist and believe that $P(burglary) = 0.99$.
  - Bayesians consider both John and Tom to be fine (they don't insist on an objective frequentist interpretation for probabilities).
  - However, Bayesians do think that John and Tom should act consistently with their own beliefs.
  - For example, it makes no sense for John to go about installing tons of burglar alarms given his belief, just as it makes no sense for Tom to put all his valuables on his lawn.
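The "feature" in the answer can be checked empirically. This minimal sketch fills every CPT row with an arbitrary number in [0, 1) and confirms that the product of CPTs always sums to 1 over all worlds. A directly specified set of numbers, by contrast, can violate a rule such as $P(A \vee B) = P(A) + P(B) - P(A \wedge B)$. The alarm-network structure and the sample numbers in the last line are assumptions for illustration.

```python
import random
from itertools import product

# Pearl alarm network: parents of each node (assumed example structure).
PARENTS = {
    "Burglary": [], "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"],
}


def random_cpts(parents, rng):
    """Arbitrary P(node = true | parent assignment) for every CPT row."""
    return {v: {row: rng.random() for row in product((True, False), repeat=len(ps))}
            for v, ps in parents.items()}


def total_probability(parents, cpts):
    """Sum over all worlds of the product of the matching CPT entries."""
    names = list(parents)
    total = 0.0
    for world in product((True, False), repeat=len(names)):
        value = dict(zip(names, world))
        p = 1.0
        for v, ps in parents.items():
            p_true = cpts[v][tuple(value[q] for q in ps)]
            p *= p_true if value[v] else 1.0 - p_true
        total += p
    return total


def union_rule_holds(p_a, p_b, p_a_and_b, p_a_or_b, tol=1e-9):
    """A consistency check that directly specified numbers can fail."""
    return abs(p_a_or_b - (p_a + p_b - p_a_and_b)) < tol


rng = random.Random(0)
for _ in range(5):
    print(round(total_probability(PARENTS, random_cpts(PARENTS, rng)), 12))  # 1.0 each time
print(union_rule_holds(0.5, 0.4, 0.2, 0.7), union_rule_holds(0.5, 0.4, 0.2, 0.9))  # True False
```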

26

Blog Questions (3)

- Your friend heard your claims that Bayes nets can represent any possible set of conditional independence assertions exactly. He comes to you and says he has four random variables, X, Y, W and Z, and only TWO conditional independence assertions: $X \perp Y \mid \{W, Z\}$ and $W \perp Z \mid \{X, Y\}$. He dares you to give him a Bayes network topology on these four nodes that exactly represents these and only these conditional independencies. Can you? (Note that you only need to look at 4-vertex directed graphs.)
- Answer: No, this is not possible.
- Here are two wrong answers:
  - Consider a disconnected graph where X, Y, W, Z are all unconnected. In this graph, the two CIAs hold. However, unfortunately so do many other CIAs.
  - Consider a graph where W and Z are both immediate parents of X and Y. In this case, clearly, $X \perp Y \mid \{W, Z\}$. However, W and Z are definitely dependent given X and Y (explaining away).
- Undirected models can capture these CIA exactly. Consider a graph where X is connected to W and Z, and Y is connected to W and Z (sort of a diamond).
  - In undirected models, CIA is defined in terms of graph separability.
  - Since X and Y separate W and Z (i.e., every path between W and Z must pass through X or Y), $W \perp Z \mid \{X, Y\}$. Similarly for the other CIA.
- Undirected graphs will be unable to model some scenarios that directed ones can, so you need both.
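The diamond's claims can be spot-checked numerically. This minimal sketch builds a pairwise Markov random field on the edges X-W, X-Z, Y-W, Y-Z. It uses a hypothetical potential that gives weight 2 to agreeing neighbours and 1 otherwise. It then tests independence on the normalized joint. It only spot-checks three statements; it does not prove that the diamond encodes *only* these two CIA.

```python
from itertools import product

NAMES = ["X", "Y", "W", "Z"]
EDGES = [("X", "W"), ("X", "Z"), ("Y", "W"), ("Y", "Z")]  # the diamond


def phi(a, b):
    """Hypothetical attractive pairwise potential: agreeing neighbours weigh 2."""
    return 2.0 if a == b else 1.0


def mrf_joint():
    """Normalized product of edge potentials over all 2^4 worlds."""
    scores = {}
    for world in product((True, False), repeat=len(NAMES)):
        val = dict(zip(NAMES, world))
        s = 1.0
        for u, v in EDGES:
            s *= phi(val[u], val[v])
        scores[world] = s
    total = sum(scores.values())
    return {w: s / total for w, s in scores.items()}


def marginal(joint, keep):
    idx = [NAMES.index(v) for v in keep]
    out = {}
    for world, p in joint.items():
        key = tuple(world[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out


def is_cond_indep(joint, X, Y, Z, tol=1e-9):
    """X ⊥ Y | Z iff P(x,y,z) P(z) = P(x,z) P(y,z) for all assignments."""
    pxyz, pxz = marginal(joint, X + Y + Z), marginal(joint, X + Z)
    pyz, pz = marginal(joint, Y + Z), marginal(joint, Z)
    for key in product((True, False), repeat=len(X + Y + Z)):
        x, y, z = key[:len(X)], key[len(X):len(X) + len(Y)], key[len(X) + len(Y):]
        if abs(pxyz.get(key, 0.0) * pz.get(z, 0.0)
               - pxz.get(x + z, 0.0) * pyz.get(y + z, 0.0)) > tol:
            return False
    return True


joint = mrf_joint()
print(is_cond_indep(joint, ["X"], ["Y"], ["W", "Z"]))  # True
print(is_cond_indep(joint, ["W"], ["Z"], ["X", "Y"]))  # True
print(is_cond_indep(joint, ["X"], ["Y"], []))          # False
```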

27

Blog Comments..

arc said... I'd just like to say that this project is awesome. And not solely because it doesn't involve LISP, either!

Can't leave well enough alone rejoinder

31

(No Transcript)

32

D-sep (direction-dependent separation) (for those who don't like Bayes Balls)

- $X \perp Y \mid E$ if every undirected path from X to Y is blocked by E.
- A path is blocked if there is a node Z on the path such that:
  - Z is in E and Z has one arrow coming in and another going out, or
  - Z is in E and Z has both arrows going out, or
  - Neither Z nor any of its descendants are in E, and both path arrows lead to Z.
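The three blocking rules can be applied literally by brute force: enumerate every undirected simple path and test each interior node. This minimal sketch is exponential in the worst case but fine for small networks. The Pearl alarm network structure is assumed as the example.

```python
# Pearl alarm network: parents of each node (assumed example structure).
PARENTS = {
    "Burglary": [], "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"],
}


def children_of(parents):
    kids = {v: set() for v in parents}
    for v, ps in parents.items():
        for p in ps:
            kids[p].add(v)
    return kids


def descendants(kids, node):
    stack, seen = [node], set()
    while stack:
        for c in kids[stack.pop()]:
            if c not in seen:
                seen.add(c)
                stack.append(c)
    return seen


def undirected_paths(parents, kids, start, goal, path=None):
    """Yield every simple path from start to goal, ignoring arc direction."""
    path = path or [start]
    node = path[-1]
    if node == goal:
        yield list(path)
        return
    for nbr in set(parents[node]) | kids[node]:
        if nbr not in path:
            path.append(nbr)
            yield from undirected_paths(parents, kids, start, goal, path)
            path.pop()


def path_blocked(path, parents, kids, evidence):
    """Apply the three blocking rules to each interior node Z of the path."""
    for prev, z, nxt in zip(path, path[1:], path[2:]):
        collider = prev in parents[z] and nxt in parents[z]  # both arrows lead to Z
        if collider:
            if z not in evidence and not (descendants(kids, z) & evidence):
                return True
        elif z in evidence:  # one in/one out, or both out
            return True
    return False


def d_separated(parents, X, Y, E):
    """X ⊥ Y | E iff every undirected path from X to Y is blocked by E."""
    kids = children_of(parents)
    E = set(E)
    return all(path_blocked(p, parents, kids, E)
               for x in X for y in Y
               for p in undirected_paths(parents, kids, x, y))


print(d_separated(PARENTS, {"JohnCalls"}, {"MaryCalls"}, {"Alarm"}))  # True
print(d_separated(PARENTS, {"Burglary"}, {"Earthquake"}, {"JohnCalls"}))  # False
```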

33

(No Transcript)

34

(No Transcript)

35

$P(A \mid J, M) = P(A)$?

How many probabilities are needed?

13 for the new, 10 for the old. Is this the worst?
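The 10-versus-13 count can be reproduced by summing $2^{|parents|}$ over the Boolean nodes. This minimal sketch uses an assumed "new" structure: the one obtained by adding variables in the order MaryCalls, JohnCalls, Alarm, Burglary, Earthquake, as in the usual textbook version of this example. It also tries a fully connected ordering as a rough reading of "Is this the worst?". That ordering needs $2^5 - 1 = 31$ numbers, as many as the full joint.

```python
def num_params(parents):
    """Independent numbers needed for Boolean CPTs: one P(node = true | row)
    per assignment of the node's parents."""
    return sum(2 ** len(ps) for ps in parents.values())


causal = {"Burglary": [], "Earthquake": [], "Alarm": ["Burglary", "Earthquake"],
          "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"]}
# Assumed structure from the ordering MaryCalls, JohnCalls, Alarm, Burglary, Earthquake.
non_causal = {"MaryCalls": [], "JohnCalls": ["MaryCalls"],
              "Alarm": ["MaryCalls", "JohnCalls"], "Burglary": ["Alarm"],
              "Earthquake": ["Alarm", "Burglary"]}
# Every variable depends on all earlier ones (fully connected DAG).
order = ["Burglary", "Earthquake", "Alarm", "JohnCalls", "MaryCalls"]
fully_connected = {v: order[:i] for i, v in enumerate(order)}

print(num_params(causal), num_params(non_causal), num_params(fully_connected))  # 10 13 31
```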

36

Making the network sparse by introducing intermediate variables

- Consider a network of Boolean variables where n parent nodes are connected to m child nodes (with each parent influencing each child).
  - You will need $n + m \cdot 2^n$ conditional probabilities.
- Suppose you realize that what is really influencing the child nodes is some single aggregate function of the parents' values (e.g. the sum of the parents).
  - We can introduce a single intermediate node called "sum", which has links from all the n parent nodes and separately influences each of the m child nodes.
  - You will wind up needing only $n + 2^n + 2m$ conditional probabilities to specify this new network!
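The two counts are simple to tabulate. This minimal sketch follows the slide's accounting: n parent priors, $2^n$ rows for the node with n parents, and two numbers per single-parent child. That accounting treats the intermediate node as if it had two values. A genuinely $(n+1)$-valued sum node would need larger child CPTs.

```python
def params_direct(n: int, m: int) -> int:
    """n parent priors plus a 2^n-row CPT for each of the m children."""
    return n + m * 2 ** n


def params_with_sum_node(n: int, m: int) -> int:
    """n parent priors, a 2^n-row CPT for the intermediate node,
    and 2 numbers for each child (the slide's count)."""
    return n + 2 ** n + 2 * m


for n, m in [(5, 5), (10, 10), (10, 100)]:
    print(n, m, params_direct(n, m), params_with_sum_node(n, m))
```

With 10 parents and 100 children, the count drops from 102410 to 1234. The $2^n$ term remains, but it is paid once instead of once per child.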

37

(No Transcript)

38

(No Transcript)

39

(No Transcript)

40

We only consider the failure-to-cause probabilities of the causes that hold:

$\prod_{j=1}^{k} r_{i_j}$

Prob that X holds even though the i-th parent doesn't

How about Noisy-AND? (hint: $A \wedge B \equiv \neg(\neg A \vee \neg B)$)
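A noisy-OR CPT can be generated from one number per parent instead of $2^k$ rows. This minimal sketch assumes the usual noisy-OR reading: r[i] is the probability that X fails to hold even though parent i holds. Only the parents that hold contribute a factor. There is no leak term, so X is false when no parent holds. The three r values are hypothetical. `math.prod` requires Python 3.8 or later.

```python
from itertools import product
from math import prod


def noisy_or_cpt(r):
    """P(X = true | parents) for every parent assignment, given
    r[i] = P(X fails to hold | only parent i holds)."""
    cpt = {}
    for assignment in product((False, True), repeat=len(r)):
        # Multiply the failure-to-cause probabilities of the parents that hold.
        p_not_x = prod(ri for ri, holds in zip(r, assignment) if holds)
        cpt[assignment] = 1.0 - p_not_x
    return cpt


# Hypothetical failure-to-cause probabilities for three causes.
cpt = noisy_or_cpt([0.6, 0.2, 0.1])
for assignment, p in sorted(cpt.items()):
    print(assignment, round(p, 3))
```

With all three causes present, X fails only if every cause fails: $0.6 \times 0.2 \times 0.1 = 0.012$, so $P(X) = 0.988$.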

41

(No Transcript)

42

(No Transcript)

43

(No Transcript)

44

(No Transcript)

45

(No Transcript)

46

(No Transcript)

47

(No Transcript)

48

Constructing Belief Networks: Summary

- Decide on what sorts of queries you are interested in answering.
  - This in turn dictates what factors to model in the network.
- Decide on a vocabulary of the variables and their domains for the problem.
  - Introduce hidden variables into the network as needed to make the network sparse.
- Decide on an order of introduction of variables into the network.
  - Introducing variables in the causal direction leads to fewer connections (sparse structure) AND makes it easier to assess probabilities.
- Try to use canonical distributions to specify the CPTs.
  - Noisy-OR
  - Parameterized discrete/continuous distributions, such as Poisson, Normal (Gaussian), etc.

49

Case Study: Pathfinder System

- Domain: lymph node diseases
  - Deals with 60 diseases and 100 disease findings
- Versions:
  - Pathfinder I: a rule-based system with logical reasoning
  - Pathfinder II: tried a variety of approaches for uncertainty; simple Bayes reasoning outperformed
  - Pathfinder III: simple Bayes reasoning, but reassessed probabilities
  - Pathfinder IV: a Bayesian network was used to handle a variety of conditional dependencies
    - Deciding vocabulary: 8 hours
    - Devising the topology of the network: 35 hours
    - Assessing the (14,000) probabilities: 40 hours
    - Physician experts liked assessing causal probabilities
- Evaluation: 53 referral cases
  - Pathfinder III: 7.9/10
  - Pathfinder IV: 8.9/10. Saves one additional life in every 1000 cases!
  - A more recent comparison shows that Pathfinder now outperforms the experts who helped design it!!
