Why parallel computing matters and what makes it hard - PowerPoint PPT Presentation

Title:

Why parallel computing matters and what makes it hard

Description:

e.g., billiard balls, semiconductor device simulation, galaxies ... Only simulate up to (and including) the minimum time stamp of inputs ... – PowerPoint PPT presentation

Number of Views:75

Avg rating:3.0/5.0

Title: Why parallel computing matters and what makes it hard

1

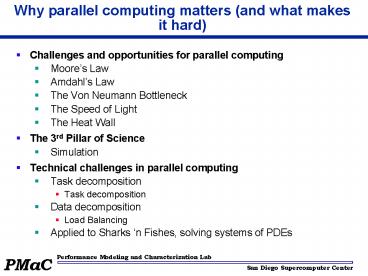

Why parallel computing matters (and what makes it

hard)

- Challenges and opportunities for parallel

computing - Moores Law

- Amdahls Law

- The Von Neumann Bottleneck

- The Speed of Light

- The Heat Wall

- The 3rd Pillar of Science

- Simulation

- Technical challenges in parallel computing

- Task decomposition

- Task decomposition

- Data decomposition

- Load Balancing

- Applied to Sharks n Fishes, solving systems of

PDEs

2

Moores Law

Moores Law the number of transistors per

processor chip by doubles every 18 months.

3

Moores Law

- Gordon Moore (co-founder of Intel) predicted in

1965 that the transistor density of semiconductor

chips would double roughly every 18 months. - Moores law has had a decidedly mixed impact,

creating new opportunities to tap into

exponentially increasing computing power while

raising fundamental challenges as to how to

harness it effectively. - The Red Queen Syndrome

- It takes all the running you can do, to keep in

the same place. If you want to get somewhere

else, you must run at least twice as fast as

that!' - Things Moore never said

- computers double in speed every 18 months ?

- cost of computing is halved every 18 months ?

- cpu utilization is halved every 18 months ?

4

Snavelys Top500 Laptop?

- Among other startling implications is the fact

that the peak performance of the typical laptop

would have placed it as one of the 500 fastest

computers in the world as recently as 1995. - Shouldnt we all just go find another job now?

- No because Moores Law has several more subtle

implications and these have raised a series of

challenges to utilizing the apparently

ever-increasing availability of compute power

these implications must be understood to see

where we are today in HPC.

5

Real Work (the fly in the ointment)

- Scientific calculations involve operations upon

large amounts of data, and it is in moving data

around within the computer that the trouble

begins. As a very simple pedagogical example

consider the expression. - A B C

- This generic expression takes two values

(arguments), and performs an arithmetic operation

to produce a third. Implicit in such a

computation is that A and B must to be loaded

from where they are stored and the value C stored

after it has been calculated. In this example,

there are three memory operations and one

floating-point operation (Flop).

6

Real Work cont.

- Obviously, if the rate at which the CPU can

perform the one Flop in this example increases,

the time required to load and store the three

values in memory had better decrease by the same

proportion if the overall rate of the computation

is to continue to track Moores Law. As has been

noted, the rates of improvement in memory

bandwidth have not in fact been keeping up with

Moores Law for some time FoSC. - Amdahls Law

- Speedup(P) Time(1)/Time(P) lt 1/(s (1-s)/P) lt

1/s, - where P be a number of processors and s is the

fraction of work done sequentially.

7

Amdahls Law

- The law of diminishing returns

- When a task has multiple parts, after you speed

up one part a lot, the other parts come to

dominate the total time - An example from cycling

- On a hilly closed-loop course you cannot ever

average more than 2x your uphill speed even if

you go downhill at the speed of light! - For supercomputers this means even though

processors get faster the overall time to

solution is limited by memory and interconnect

speeds (moving the data around)

8

The Problem of Latency

- Since memory bandwidth is also increasing faster

than is memory latency, there a slower, but

important overall trend towards an increase in

the number of words of memory bandwidth that

equate to memory latency. For a processor to

avoid stalling and to realize peak bandwidths

under these conditions, it must be designed to

operate on multiple outstanding memory requests.

The number of outstanding requests that need to

be processed in order to saturate memory

bandwidth is now approaching 100 to 1000 FoSC. - Einsteins Law

- It is clear that latency tolerance mechanisms are

an essential component of future system design.

9

Hierarchical systems

- Modern supercomputers are composed of a hierarchy

of subsystems - CPU and registers

- Cache (multiple levels)

- Main (local) memory

- System interconnect to other CPUs and memory

- Disk

- Archival

- Latencies span 11 orders of magnitude!

Nanoseconds to Minutes. This is a solar-system

scale. It is 794 million miles to Saturn. - Bandwidths taper

- Cache bandwidths generally are at least four

times larger than local memory bandwidth, and

this in turn exceeds interconnect bandwidth by a

comparable amount, and so on outward to the disk

and archive system.

10

Red Shift

- While the absolute speed of all computer

subcomponents have been changing rapidly, they

have not all been changing at the same rate. For

example, the analysis in FoSC indicates that,

while peak processor speeds have been increasing

at 59 between 1988 and 2004, memory speeds of

commodity processors have been increasing at only

23 per year since 1995, and DRAM latency has

only been improving at a pitiful rate of 5.5 per

year. This red shift in the latency distance

between various components has produced a crisis

of sorts for computer architects, and a variety

of complex latency-hiding mechanisms currently

drive the design of computers as a result.

11

3 ways of science

- Experiment

- Theory

- Simulation

12

Parallel Models and Machines

- Steps in creating a parallel program

- decomposition

- assignment

- orchestration/coordination

- mapping

- Performance in parallel programs

- try to minimize performance loss from

- load imbalance

- communication

- synchronization

- extra work

13

Some examples

- Simulation models

- A model problem sharks and fish

- Discrete event systems

- Particle systems

- Lumped systems (Ordinary Differential Equations,

ODEs) - (Next time Partial Different Equations, PDEs)

14

Sources of Parallelism and Locality in Simulation

- Real world problems have parallelism and locality

- Many objects do not depend on other objects

- Objects often depend more on nearby than distant

objects - Dependence on distant objects often simplifies

- Scientific models may introduce more parallelism

- When continuous problem is discretized, may limit

effects to timesteps - Far-field effects may be ignored or approximated,

if they have little effect - Many problems exhibit parallelism at multiple

levels - e.g., circuits can be simulated at many levels

and within each there may be parallelism within

and between subcircuits

15

Basic Kinds of Simulation

- Discrete event systems

- e.g.,Game of Life, timing level simulation for

circuits - Particle systems

- e.g., billiard balls, semiconductor device

simulation, galaxies - Lumped variables depending on continuous

parameters - ODEs, e.g., circuit simulation (Spice),

structural mechanics, chemical kinetics - Continuous variables depending on continuous

parameters - PDEs, e.g., heat, elasticity, electrostatics

- A given phenomenon can be modeled at multiple

levels - Many simulations combine these modeling techniques

16

A Model Problem Sharks and Fish

- Illustration of parallel programming

- Original version (discrete event only) proposed

by Geoffrey Fox - Called WATOR

- Basic idea sharks and fish living in an ocean

- rules for movement (discrete and continuous)

- breeding, eating, and death

- forces in the ocean

- forces between sea creatures

- 6 problems (SF1 - SF6)

- Different sets of rule, to illustrate different

phenomena

17

- http//www.cs.berkeley.edu/demmel/cs267/Sharks_an

d_Fish/

- Sharks and Fish 1. Fish alone move continuously

subject to an external current and Newton's laws.

- Sharks and Fish 2. Fish alone move continuously

subject to gravitational attraction and Newton's

laws. - Sharks and Fish 3. Fish alone play the "Game of

Life" on a square grid. - Sharks and Fish 4. Fish alone move randomly on a

square grid, with at most one fish per grid

point. - Sharks and Fish 5. Sharks and Fish both move

randomly on a square grid, with at most one fish

or shark per grid point, including rules for fish

attracting sharks, eating, breeding and dying. - Sharks and Fish 6. Like Sharks and Fish 5, but

continuous, subject to Newton's laws.

18

Discrete Event Systems

19

Discrete Event Systems

- Systems are represented as

- finite set of variables

- each variable can take on a finite number of

values - the set of all variable values at a given time is

called the state - each variable is updated by computing a

transition function depending on the other

variables - System may be

- synchronous at each discrete timestep evaluate

all transition functions also called a finite

state machine - asynchronous transition functions are evaluated

only if the inputs change, based on an event

from another part of the system also called

event driven simulation - E.g., functional level circuit simulation

20

Sharks and Fish as Discrete Event System

- Ocean modeled as a 2D toroidal grid

- Each cell occupied by at most one sea creature

21

The Game of Life (Sharks and Fish 3)

- Fish only, no sharks

- An new fish is born if

- a cell is empty

- exactly 3 (of 8) neighbors contain fish

- A fish dies (of overcrowding) if

- cell contains a fish

- 4 or more neighboring cells are full

- A fish dies (of loneliness) if

- cell contains a fish

- less than 2 neighboring cells are full

- Other configurations are stable

22

Parallelism in Sharks and Fishes

- The simulation is synchronous

- use two copies of the grid (old and new)

- the value of each new grid cell depends only on 9

cells (itself plus 8 neighbors) in old grid - simulation proceeds in timesteps, where each cell

is updated at every timestep - Easy to parallelize using domain decomposition

- Locality is achieved by using large patches of

the ocean - boundary values from neighboring patches are

needed - Load balancing is more difficult. The activities

in this system are discrete events

P4

Repeat compute locally to update local

system barrier() exchange state info

with neighbors until done simulating

P1

P2

P3

P5

P6

P7

P8

P9

23

Parallelism in Circuit Simulation

- Circuit is a graph made up of subcircuits

connected by wires - component simulations need to interact if they

share a wire - data structure is irregular (graph)

- parallel algorithm is synchronous

- compute subcircuit outputs

- propagate outputs to other circuits

- Graph partitioning assigns subgraphs to

processors - Determines parallelism and locality

- Want even distribution of nodes (load balance)

- With minimum edge crossing (minimize

communication) - Nodes and edges may both be weighted by cost

- NP-complete to partition optimally, but many good

heuristics (later lectures)

24

Parallelism in Asynchronous Circuit Simulation

- Synchronous simulations may waste time

- simulate even when the inputs do not change,

little internal activity - activity varies across circuit

- Asynchronous simulations update only when an

event arrives from another component - no global timesteps, but events contain time

stamp - Ex Circuit simulation with delays (events are

gates changing) - Ex Traffic simulation (events are cars changing

lanes, etc.)

25

Scheduling Asynch. Circuit Simulation

- Conservative

- Only simulate up to (and including) the minimum

time stamp of inputs - May need deadlock detection if there are cycles

in graph, or else null messages - Speculative

- Assume no new inputs will arrive and keep

simulating, instead of waiting - May need to backup if assumption wrong

- Ex Parswec circuit simulator of Yelick/Wen

- Ex Standard technique for CPUs to execute

instructions - Optimizing load balance and locality is difficult

- Locality means putting tightly coupled subcircuit

on one processor since active part of circuit

likely to be in a tightly coupled subcircuit,

this may be bad for load balance

26

Particle Systems

27

Particle Systems

- A particle system has

- a finite number of particles

- moving in space according to Newtons Laws (i.e.

F ma) - time is continuous

- Examples

- stars in space with laws of gravity

- electron beam semiconductor manufacturing

- atoms in a molecule with electrostatic forces

- neutrons in a fission reactor

- cars on a freeway with Newtons laws plus model

of driver and engine - Reminder many simulations combine techniques

such as particle simulations with some discrete

events (Ex Sharks and Fish)

28

Forces in Particle Systems

- Force on each particle can be subdivided

force external_force nearby_force

far_field_force

- External force

- ocean current to sharks and fish world (SF 1)

- externally imposed electric field in electron

beam - Nearby force

- sharks attracted to eat nearby fish (SF 5)

- balls on a billiard table bounce off of each

other - Van der Wals forces in fluid (1/r6)

- Far-field force

- fish attract other fish by gravity-like (1/r2 )

force (SF 2) - gravity, electrostatics, radiosity

- forces governed by elliptic PDE

29

Parallelism in External Forces

- These are the simplest

- The force on each particle is independent

- Called embarrassingly parallel

- Evenly distribute particles on processors

- Any distribution works

- Locality is not an issue, no communication

- For each particle on processor, apply the

external force

30

Parallelism in Nearby Forces

- Nearby forces require interaction gt

communication - Force may depend on other nearby particles

- Ex collisions

- simplest algorithm is O(n2) look at all pairs

to see if they collide - Usual parallel model is domain decomposition of

physical domain - Challenge 1 interactions of particles near

processor boundary - need to communicate particles near boundary to

neighboring processors - surface to volume effect means low communication

- Which communicates less squares (as below) or

slabs? - Challenge 2 load imbalance, if particles cluster

- galaxies, electrons hitting a device wall

31

Load balance via Tree Decomposition

- To reduce load imbalance, divide space unevenly

- Each region contains roughly equal number of

particles - Quad tree in 2D, Oct-tree in 3D

32

Parallelism in Far-Field Forces

- Far-field forces involve all-to-all interaction

gt communication - Force depends on all other particles

- Ex gravity

- Simplest algorithm is O(n2) as in SF 2, 4, 5

- Just decomposing space does not help since every

particle apparently needs to visit every other

particle - Use more clever algorithms to beat O(n2)

33

Far-field forces Particle-Mesh Methods

- Superimpose a regular mesh

- Move particles to nearest grid point

- Exploit fact that far-field satisfies a PDE that

is easy to solve on a regular mesh - FFT, Multigrid

- Wait for next lecture

- Accuracy depends on how fine the grid is and

uniformity of particles

34

Far-field forces Tree Decomposition

- Based on approximation

- O(n log n) or O(n) instead of O(n2)

- Forces from group of far-away particles

simplifies - They resemble a single larger particle

- Use tree each node contains an approximation of

descendents - Several Algorithms

- Barnes-Hut

- Fast Multipole Method (FMM) of Greengard/Rohklin

- Anderson

- Later lectures

35

Lumped SystemsODEs

36

System of Lumped Variables

- Many systems approximated by

- System of lumped variables

- Each depends on continuous parameter (usually

time) - Example circuit

- approximate as graph

- wires are edges

- nodes are connections between 2 or more wires

- each edge has resistor, capacitor, inductor or

voltage source - system is lumped because we are not computing

the voltage/current at every point in space along

a wire - Variables related by Ohms Law, Kirchoffs Laws,

etc. - Form a system of Ordinary Differential Equations,

ODEs - We will refresh more on ODEs and PDEs

- See http//www.sosmath.com/diffeq/system/introduct

ion/intro.html

37

Circuit physics

- The sum of all currents is 0 (Kirchoff Current

Law) - The sum of all voltages around the loop is 0

- V IR (Ohms Law)

38

Circuit Example

- State of the system is represented by

- v_n(t) node voltages

- i_b(t) branch currents all at time t

- v_b(t) branch voltages

- Eqns. Include

- Kirchoffs current

- Kirchoffs voltage

- Ohms law

- Capacitance

- Inductance

- Write as single large system of ODEs (possibly

with constraints)

0 A 0 v_n 0 A 0 -I i_b

S 0 R -I v_b 0 0 -I Cd/dt 0 0 Ld/dt I

0

39

Systems of Lumped Variables

- Another example is structural analysis in Civil

Eng. - Variables are displacement of points in a

building - Newtons and Hooks (spring) laws apply

- Static modeling exert force and determine

displacement - Dynamic modeling apply continuous force

(earthquake) - The system in these case (and many) will be

sparse - i.e., most array elements are 0

- neither store nor compute on these 0s

40

Solving ODEs

- Explicit methods to compute solution(t)

- Ex Eulers method

- Simple algorithm sparse matrix vector multiply

- May need to take very small timesteps, especially

if system is stiff (i.e. can change rapidly) - Implicit methods to compute solution(t)

- Ex Backward Eulers Method

- Larger timesteps, especially for stiff problems

- More difficult algorithm solve a sparse linear

system - Computing modes of vibration

- Finding eigenvalues and eigenvectors

- Ex do resonant modes of building match

earthquakes? - All these reduce to sparse matrix problems

- Explicit sparse matrix-vector multiplication

- Implicit solve a sparse linear system

- direct solvers (Gaussian elimination)

- iterative solvers (use sparse matrix-vector

multiplication) - Eigenvalue/vector algorithms may also be explicit

or implicit

41

Parallelism in Sparse Matrix-vector multiplication

- y Ax, where A is sparse and n x n

- Questions

- which processors store

- yi, xi, and Ai,j

- which processors compute

- yi sum from 1 to n of Ai,j xj

- Graph partitioning

- Partition index set 1,,n N1 u N2 u u Np

- for all i in Nk, store yi, xi, and row i of A

on processor k - Processor k computes its own yi

- Constraints

- balance load

- balance storage

- minimize communication

42

Graph Partitioning and Sparse Matrices

- Relationship between matrix and graph

1 2 3 4 5 6

1 1 1 1 2 1 1

1 1 3

1 1 1 4 1 1

1 1 5 1 1 1

1 6 1 1 1 1

- A good partition of the graph has

- equal number of (weighted) nodes in each part

(load balance) - minimum number of edges crossing between

- Can reorder the rows/columns of the matrix by

putting all the nodes in one partition together

43

More on Matrix Reordering via Graph Partitioning

- Goal is to reorder rows and columns to

- improve load balance

- decrease communication

- Ideal matrix structure for parallelism

(nearly) block diagonal - p (number of processors) blocks

- few non-zeros outside these blocks, since these

require communication

P0 P1 P2 P3 P4

44

Summary

- Red Shift means the promise implies by Moores

Law is largely unrealized for scientific

simulation that by necessity operates on large

data - Consider The Butterfly Effect

- Computer Architecture is a hot field again

- Large centralized, specialized compute engines

are vital - Grids, utility programing, SETI_at_home etc. do not

meet all the needs of largescale scientific

simulation for reason that should now be obvious - Consider a galactic scale