Software Exploits for ILP


1

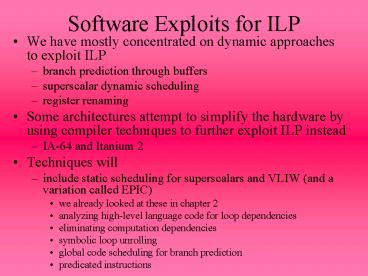

Software Exploits for ILP

- We have mostly concentrated on dynamic approaches to exploit ILP
  - branch prediction through buffers
  - superscalar dynamic scheduling
  - register renaming

- Some architectures attempt to simplify the hardware by using compiler techniques to further exploit ILP instead
  - IA-64 and Itanium 2

- Techniques will include
  - static scheduling for superscalars and VLIW (and a variation called EPIC); we already looked at these in chapter 2
  - analyzing high-level language code for loop dependencies
  - eliminating computation dependencies
  - symbolic loop unrolling
  - global code scheduling for branch prediction
  - predicated instructions

2

Loop Dependencies

- In order to achieve greater ILP, we need to promote LLP (loop-level parallelism)
  - we already used loop unrolling to do so, but to unroll we must ensure that there are no loop dependencies
  - a loop dependence arises if one loop iteration depends on another

- Consider
  - for (i=1; i<=100; i++) x[i] = x[i] + s;
  - there is a data dependence between x[i] on the lhs and on the rhs, but it only exists within an iteration, not across iterations (as it would in x[i+1] = x[i] + s)
  - for (i=1; i<=100; i++) x[i] = x[i-1] + s;
  - here, the data dependence on x is loop carried

- A compiler may not be able to unroll a loop with a loop-carried dependence
  - question: how far is the distance of the dependence?
  - if far enough, unrolling may still succeed (consider x[i] = x[i-5] + s)

- There are three forms of dependencies: true (data), anti and output
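A small C sketch of why the distinction matters (the function names and the array size N are illustrative, not from the slides): each loop is run once with its iterations in order and once in reverse. Reversing iterations is only safe when no dependence is loop carried, so the first loop gives the same result both ways and the second does not.

```c
#include <assert.h>
#include <string.h>

#define N 8

/* x[i] = x[i] + s: the dependence on x[i] stays inside one iteration. */
void independent(double x[N], double s, int reverse) {
    for (int k = 1; k < N; k++) {
        int i = reverse ? N - k : k;   /* visit i = 1..N-1 forward or backward */
        x[i] = x[i] + s;
    }
}

/* x[i] = x[i-1] + s: iteration i reads the value written by iteration i-1. */
void carried(double x[N], double s, int reverse) {
    for (int k = 1; k < N; k++) {
        int i = reverse ? N - k : k;
        x[i] = x[i - 1] + s;
    }
}

/* Returns 1 if running the loop forward and backward gives identical arrays. */
int order_independent(void (*loop)(double *, double, int)) {
    double fwd[N], bwd[N];
    for (int i = 0; i < N; i++)
        fwd[i] = bwd[i] = (double)(i * i);   /* non-linear start values */
    loop(fwd, 1.0, 0);
    loop(bwd, 1.0, 1);
    return memcmp(fwd, bwd, sizeof fwd) == 0;
}
```

order_independent(independent) returns 1, while order_independent(carried) returns 0.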

3

Example

- Identify any dependencies and note which are loop carried; can we parallelize the loop?
  - note: a loop is parallel if it has no loop-carried dependencies or if it can be written without a cycle of dependencies

    for (i=1; i<=100; i++) {
        A[i] = A[i] + B[i];       /* S1 */
        B[i+1] = C[i] + D[i];     /* S2 */
    }

  - data dependence on A in S1 (not loop carried)
  - data dependence on B from S2 to S1 (loop carried)
  - the loop-carried dependence implies no LLP as written, but the dependence is not circular, so this loop can be parallelized

- Convert this loop into

    A[1] = A[1] + B[1];
    for (i=1; i<=99; i++) {
        B[i+1] = C[i] + D[i];       /* S1 */
        A[i+1] = A[i+1] + B[i+1];   /* S2 */
    }
    B[101] = C[100] + D[100];

  - now the loop is parallel: the dependencies are not loop carried
    - B[i+1] from S1 to S2
    - A[i+1] to A[i+1] in S2
  - notice the reversed order between the two statements
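The transformation can be checked with a short C sketch (the function names are hypothetical; the arrays are sized N + 2 so that indices 1 through 101 are valid): both versions compute the same A and B, but only the second has no dependence crossing an iteration boundary.

```c
#include <assert.h>

#define N 100

/* Original loop: S2 of iteration i feeds S1 of iteration i+1. */
void original(double A[N + 2], double B[N + 2],
              const double C[N + 2], const double D[N + 2]) {
    for (int i = 1; i <= N; i++) {
        A[i] = A[i] + B[i];       /* S1 */
        B[i + 1] = C[i] + D[i];   /* S2 */
    }
}

/* Transformed loop: every dependence stays inside one iteration. */
void transformed(double A[N + 2], double B[N + 2],
                 const double C[N + 2], const double D[N + 2]) {
    A[1] = A[1] + B[1];
    for (int i = 1; i <= N - 1; i++) {
        B[i + 1] = C[i] + D[i];
        A[i + 1] = A[i + 1] + B[i + 1];
    }
    B[N + 1] = C[N] + D[N];
}
```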

4

Identifying Dependencies

- Detecting data dependencies is done by matching symbolic names, so there are situations where the compiler may not be able to help us
  - variables may be aliased or pointed to
  - variables may use indirect referencing through arrays
  - consider R1 = 1000 and R2 = 1004: a dependence exists between 4(R1) and 0(R2) (both address 1004), yet it may not be identified by the compiler

- Other forms of dependencies might be easier to identify (particularly in the high-level code), where a loop-based dependence is usually in the form of a recurrence
  - for (i=2; i<=100; i++) y[i] = y[i-2] + y[i];
  - here, y[i] depends on a value that was computed 2 iterations ago
  - this recurrence distance is called the dependence distance
  - for (i=6; i<=100; i++) y[i] = y[i-5] + y[i];
  - here, the dependence distance is 5
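One way to see what the dependence distance buys, sketched in C under the assumption that y has at least n + 1 elements (function names are illustrative): with distance 5, any 5 consecutive iterations read only values produced before their block started, so they can run in any order or in parallel, which is what lets the compiler unroll by up to 5.

```c
#include <assert.h>

#define DIST 5   /* dependence distance of y[i] = y[i-5] + y[i] */

/* Sequential recurrence for i = 6..n. */
void recurrence_seq(double *y, int n) {
    for (int i = 6; i <= n; i++)
        y[i] = y[i - 5] + y[i];
}

/* Same recurrence in blocks of DIST iterations. Inside a block, iteration i
   reads y[i-5], which belongs to an earlier block, so the inner loop may be
   run in any order; here it is deliberately run backwards. */
void recurrence_blocked(double *y, int n) {
    for (int start = 6; start <= n; start += DIST) {
        int end = start + DIST - 1 < n ? start + DIST - 1 : n;
        for (int i = end; i >= start; i--)
            y[i] = y[i - 5] + y[i];
    }
}
```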

5

Algorithm for Identifying Recurrence

- Array accesses are affine if their indices follow a pattern like a*i + b
  - a and b are constants, i is the loop index

- Almost all loop-carried dependence algorithms rely on the array accesses being affine

- The GCD test: given two accesses of the same array, to elements a*i + b and c*i + d (for example, a store to x[a*i + b] and a load from x[c*i + d])
  - if d - b is not divisible by GCD(a, c), then there are no loop-carried dependencies
  - note that if d - b is divisible by GCD(a, c), then there is no conclusion (there might or might not be loop-carried dependencies)
  - additional tests might be applied in this case, although some of these tests are NP-complete, so we might use approximation methods instead

- Does the following loop have loop-carried dependences?
  - for (i=1; i<=100; i=i+1) x[2*i+3] = x[2*i] * 5;
  - a = 2, b = 3, c = 2, d = 0
  - GCD(a, c) = 2, d - b = -3
  - 2 does not divide -3 (-3/2 has a remainder), so the loop has no loop-carried dependencies
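The GCD test itself is only a few lines. This C sketch (the function name gcd_test_may_depend is hypothetical) takes the coefficients of a store to x[a*i + b] and a load from x[c*i + d] and ignores the loop bounds, which is why a result of 1 only means a dependence may exist.

```c
#include <assert.h>
#include <stdlib.h>

/* Greatest common divisor of |a| and |b| (Euclid's algorithm). */
static long gcd(long a, long b) {
    a = labs(a);
    b = labs(b);
    while (b != 0) {
        long t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* Returns 0 if the GCD test proves no dependence between x[a*i + b] and
   x[c*i + d]; returns 1 if a dependence MAY exist (inconclusive). */
int gcd_test_may_depend(long a, long b, long c, long d) {
    long g = gcd(a, c);
    if (g == 0)              /* a == c == 0: both indices are constants */
        return b == d;
    return (d - b) % g == 0;
}
```

gcd_test_may_depend(2, 3, 2, 0) returns 0 for the loop above; the coefficients of x[i] = x[i-1] + s, that is (1, 0, 1, -1), return 1.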

6

Removing Dependencies

- Consider the following example
  - what are the types of dependencies?
  - how can we rewrite the code to remove dependencies and make the loop parallelizable?

    for (i=1; i<=100; i=i+1) {
        y[i] = x[i] / c;   /* S1 */
        x[i] = x[i] + c;   /* S2 */
        z[i] = y[i] + c;   /* S3 */
        y[i] = c - y[i];   /* S4 */
    }

- true dependences from S1 to S3 and from S1 to S4 (y[i])
- antidependences from S1 to S2 (x[i]) and from S3 to S4 (y[i])
- output dependence from S1 to S4 (y[i])
- y[i] is a destination in S1 and S4 and a source in S3 and S4, so we rename the value written by S1 to t[i]
- x[i] is a source in S1 and S2 and a destination in S2, so we rename the destination in S2 to x1[i]
- the renamed loop:

    for (i=1; i<=100; i=i+1) {
        t[i] = x[i] / c;
        x1[i] = x[i] + c;
        z[i] = t[i] + c;
        y[i] = c - t[i];
    }
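A C sketch that checks the renaming preserves the results (the function names are illustrative, and the arrays are sized N + 1 for indices 1..100). One consequence of the rewrite: code that follows the loop must read x1 wherever it used to read the updated x.

```c
#include <assert.h>

#define N 100

/* Original loop: true, anti and output dependences on x and y. */
void original(double X[N + 1], double Y[N + 1], double Z[N + 1], double c) {
    for (int i = 1; i <= N; i++) {
        Y[i] = X[i] / c;   /* S1 */
        X[i] = X[i] + c;   /* S2 */
        Z[i] = Y[i] + c;   /* S3 */
        Y[i] = c - Y[i];   /* S4 */
    }
}

/* Renamed loop: T replaces the first y value, X1 receives the new x values,
   leaving only the true dependences S1->S3 and S1->S4 through T. */
void renamed(const double X[N + 1], double X1[N + 1], double Y[N + 1],
             double Z[N + 1], double T[N + 1], double c) {
    for (int i = 1; i <= N; i++) {
        T[i] = X[i] / c;
        X1[i] = X[i] + c;
        Z[i] = T[i] + c;
        Y[i] = c - T[i];
    }
}
```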

7

Dealing with Pointers

- Determining whether two pointers point at the same memory location (aliases) is nearly impossible statically
  - however, there are some things we can determine
  - do two pointers point into the same data structure (list or tree)?
  - do two pointers point at the same type of data?
  - does a pointer that is used to pass an address as a parameter point at an object being referenced in the function?
  - of two pointers, does one point at a local object and the other at a global object?

- So, while there is no general solution to identifying aliases, we can use an analysis (called points-to analysis) to rule out aliases in specific situations
  - in those situations, we can guarantee that two pointers are not aliases
  - that is, if points-to analysis claims two pointers are not aliases, we can trust that result; if points-to analysis fails, we cannot conclude anything and should be more cautious about loop unrolling
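Why aliasing blocks reordering, as a C sketch (add_s and aliased_orders_differ are hypothetical names): add_s looks like the independent loop x[i] = x[i] + s, but when it is called with dst = p + 1 and src = p it becomes p[i+1] = p[i] + s, a loop-carried dependence that cannot be seen from the function body alone.

```c
#include <assert.h>

/* dst[i] = src[i] + s for i = 0..n-1, visited forward or backward.
   If dst and src never overlap, the visiting order cannot matter. */
void add_s(double *dst, const double *src, int n, double s, int reverse) {
    for (int k = 0; k < n; k++) {
        int i = reverse ? n - 1 - k : k;
        dst[i] = src[i] + s;
    }
}

/* Returns 1 if forward and backward execution disagree when dst = p + 1, src = p. */
int aliased_orders_differ(void) {
    double fwd[6] = {0}, bwd[6] = {0};
    add_s(fwd + 1, fwd, 5, 1.0, 0);   /* fwd becomes 0 1 2 3 4 5 */
    add_s(bwd + 1, bwd, 5, 1.0, 1);   /* bwd becomes 0 1 1 1 1 1 */
    return fwd[5] != bwd[5];
}
```

With disjoint arrays the two visiting orders agree; with the aliased call they do not, so a compiler that cannot rule out such a call must keep the original order.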

8

Back Substitution

- Within a basic block of code, we can eliminate operations that copy values using copy propagation
  - as a simple example, we can reduce the two statements

        DADDUI R1, R2, 4
        DADDUI R1, R1, 4

    to the single statement

        DADDUI R1, R2, 8

  - we have already done this type of optimization in loop unrolling by combining array index adjustments

- Another example is to take advantage of algebraic associativity and rearrange the order of computations in an expression, so that

        DADD R1, R2, R3
        DADD R4, R1, R6
        DADD R8, R4, R7

  becomes

        DADD R1, R2, R3
        DADD R4, R6, R7
        DADD R8, R1, R4

  - in the first block of code, there are data dependencies between the first and second and between the second and third instructions, which might result in an extra stall that does not appear in the second block of code
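The effect of the reassociation can be measured as the length of the longest chain of true dependences. This C sketch (the Insn type and dependence_height are hypothetical; an immediate operand is modeled by naming R0 as the second source, and anti and output dependences are ignored) gives 3 for the first block and 2 for the second, assuming one cycle per instruction.

```c
#include <assert.h>

/* A three-operand instruction "op rd, rs, rt" reduced to its register numbers. */
typedef struct { int rd, rs, rt; } Insn;

/* Length of the longest chain of true (RAW) dependences in a basic block,
   assuming each instruction takes one cycle once its operands are ready. */
int dependence_height(const Insn *code, int n) {
    int ready[32] = {0};   /* cycle at which each register's value is available */
    int height = 0;
    for (int k = 0; k < n; k++) {
        assert(code[k].rd >= 0 && code[k].rd < 32 && code[k].rs >= 0 &&
               code[k].rs < 32 && code[k].rt >= 0 && code[k].rt < 32);
        int a = ready[code[k].rs], b = ready[code[k].rt];
        int done = (a > b ? a : b) + 1;
        ready[code[k].rd] = done;
        if (done > height)
            height = done;
    }
    return height;
}
```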

9

Software Pipelining

- Compiler-based loop unrolling adds instructions to the program and uses more registers

- Another idea is to symbolically unroll the loop by arranging the loop components in their opposite order
  - the new loop interleaves the execution of several iterations of the original loop
  - consider a loop that has three parts A, B, C; then, in iteration i, the new loop contains part A of iteration i+2, part B of iteration i+1 and part C of iteration i
  - this requires manipulating the loop maintenance mechanisms and adding pre- and post-loop instructions
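The interleaving can be tabulated with a short C sketch (pipeline_schedule and STAGES are illustrative names, assuming three stages A, B and C that each take one step): part p of iteration j runs at step j + p, so every full kernel step runs A of iteration i+2, B of iteration i+1 and C of iteration i, while the partial steps at either end are the pre- and post-loop code.

```c
#include <assert.h>

#define STAGES 3   /* A = 0, B = 1, C = 2 */

/* Fill sched[t][p] with the iteration whose part p runs at step t, or -1 if
   none; returns the number of steps. Requires room for n_iters + 2 rows. */
int pipeline_schedule(int n_iters, int sched[][STAGES]) {
    int steps = n_iters + STAGES - 1;
    for (int t = 0; t < steps; t++)
        for (int p = 0; p < STAGES; p++) {
            int j = t - p;   /* part p of iteration j runs at step j + p */
            sched[t][p] = (j >= 0 && j < n_iters) ? j : -1;
        }
    return steps;
}
```

For 5 iterations this yields 7 steps: steps 0 and 1 are the prologue, steps 2 through 4 the kernel and steps 5 and 6 the epilogue.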

10

Example

- The original loop:

    Loop: L.D   F0,0(R1)
          ADD.D F4,F0,F2
          S.D   F4,0(R1)
          DSUBI R1,R1,8
          BNE   R1,R2,Loop

- Symbolically unrolled, each of iterations i, i+1 and i+2 executes L.D F0,0(R1); ADD.D F4,F0,F2; S.D F4,0(R1); the new loop takes the S.D from iteration i, the ADD.D from iteration i+1 and the L.D from iteration i+2

- The compiler selects the appropriate instruction from three iterations of the loop and builds a new loop out of them, adding the proper pre- and post-code

          L.D   F6,16(R1)     // pre-code
          L.D   F0,8(R1)
          ADD.D F4,F6,F2
    Loop: S.D   F4,16(R1)
          ADD.D F4,F0,F2
          L.D   F0,0(R1)
          DSUBI R1,R1,8
          BNE   R1,R2,Loop
          ADD.D F8,F0,F2      // post-code
          S.D   F4,16(R1)
          S.D   F8,8(R1)
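The same structure in C, as a sketch (function names are hypothetical; k stands for R1 measured in elements, so 16(R1) becomes x[k+2], and s stands for F2). Unlike the BNE at the bottom of the MIPS loop, the kernel here tests before each pass, so arrays of only two elements are still handled.

```c
#include <assert.h>

/* Reference: the original loop, x[i] = x[i] + s for i = hi down to lo. */
void add_scalar_plain(double *x, int lo, int hi, double s) {
    for (int i = hi; i >= lo; i--)
        x[i] = x[i] + s;
}

/* Software-pipelined version. Invariant at the top of the kernel:
   f4 = x[k+2] + s (ready to store) and f0 = x[k+1] (ready to add). */
void add_scalar_pipelined(double *x, int lo, int hi, double s) {
    assert(hi - lo >= 1);             /* needs at least two elements */
    int k = hi - 2;
    double f6 = x[k + 2];             /* L.D   F6,16(R1)   pre-code */
    double f0 = x[k + 1];             /* L.D   F0,8(R1) */
    double f4 = f6 + s;               /* ADD.D F4,F6,F2 */
    while (k >= lo) {                 /* kernel */
        x[k + 2] = f4;                /* S.D   F4,16(R1) */
        f4 = f0 + s;                  /* ADD.D F4,F0,F2 */
        f0 = x[k];                    /* L.D   F0,0(R1) */
        k--;                          /* DSUBI R1,R1,8 */
    }
    double f8 = f0 + s;               /* ADD.D F8,F0,F2    post-code */
    x[k + 2] = f4;                    /* S.D   F4,16(R1) */
    x[k + 1] = f8;                    /* S.D   F8,8(R1) */
}
```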

11

Another Example

- This loop has 4 operations to unroll

- We precede this loop with three L.Ds, two ADD.Ds and one MUL.D, and follow the loop with one ADD.D, two MUL.Ds and three S.Ds

    Loop: L.D   F0, 0(R1)
          ADD.D F2, F1, F0
          MUL.D F4, F2, F3
          S.D   F4, 0(R1)
          DSUBI R1, R1, 8
          BNEZ  R1, Loop

  becomes

    Loop: S.D   F4, 24(R1)    // S.D from iteration i+3
          MUL.D F4, F2, F3    // MUL.D from iteration i+2
          ADD.D F2, F1, F0    // ADD.D from iteration i+1
          L.D   F0, 0(R1)     // L.D from iteration i
          DSUBI R1, R1, 8
          BNEZ  R1, Loop

12

Global Code Scheduling

- Here, the compiler attempts to select a path through selection statements based on branch predictions
  - some code might be moved prior to a branch to make it more efficient
  - or, we might combine this with loop unrolling so that the compiler is able to perform the operation without having to know whether a condition will be true or not

- The compiler generates a straight line of code without a branch

- We must preserve data and control dependencies, which makes this tricky
  - since this relies on branch predictions, the straight line of code may violate data dependencies if the prediction is inaccurate, so the approach must include mechanisms for failed predictions, such as canceling instructions or not allowing the instructions to write to registers/memory
  - in addition, we have to ensure that if a moved instruction raises an exception, we only handle the exception if the instruction would have been executed anyway (that is, if we predicted correctly)

13

Example

- Consider the following code

    a[i] = a[i] + b[i];
    if (a[i] == 0) b[i] = ...;
    else c[i] = ...;

  - if we have knowledge that says the condition (a[i] == 0) is mostly true
  - then we can move the assignment to b[i] before the comparison
  - removing one of the branches
  - we would still have one branch to branch around the else clause

- Another option is to move c[i] = z before the if-statement or into the branch delay slot

- In moving b[i], we must ensure that we have not violated other dependencies
  - for instance, if the condition were a[i] == b[i], then we couldn't move the assignment statement

14

Trace Scheduling

- Trace scheduling is a form of global scheduling where we rearrange code to assume one branch will be taken

- Consider the code
  - if (x > y) x++; else y--;
  - the MIPS code is given below, assuming that x is stored in R1 and y in R2

          SGT   R3, R1, R2
          BEQZ  R3, else
          DADDI R1, R1, 1
          J     next
    else: DSUBI R2, R2, 1
    next:

- Assume that x > y is true 90% of the time; we can then revise our code as follows

          SGT   R3, R1, R2
          DADDI R1, R1, 1
          BNEZ  R3, next
          DSUBI R1, R1, 1     // if we are wrong about the prediction, we have to reset R1
          DSUBI R2, R2, 1
    next:

  - original code: if true, 4 instructions; if false, 3 instructions
  - new code: if true, 3 instructions; if false, 5 instructions

- Assuming each instruction takes 1 cycle with no stalls, we have a speedup of
  - (0.9 * 4 + 0.1 * 3) / (0.9 * 3 + 0.1 * 5) = 3.9 / 3.2 ≈ 1.22, or a 22% speedup
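The arithmetic generalizes to a helper that weights each path by its probability. A C sketch, assuming one cycle per instruction and no stalls (expected_cycles and speedup are hypothetical names):

```c
#include <assert.h>

/* Average cycles of code whose likely path (likely_path instructions)
   runs with probability p and whose other path runs otherwise. */
double expected_cycles(double p, double likely_path, double other_path) {
    assert(p >= 0.0 && p <= 1.0);
    return p * likely_path + (1.0 - p) * other_path;
}

/* Speedup of the rescheduled code over the original code. */
double speedup(double p, double orig_likely, double orig_other,
               double new_likely, double new_other) {
    return expected_cycles(p, orig_likely, orig_other) /
           expected_cycles(p, new_likely, new_other);
}
```

speedup(0.9, 4, 3, 3, 5) gives 3.9 / 3.2 = 1.21875; the same helper reproduces the later speculation examples, e.g. speedup(0.9, 5, 4, 4, 5) ≈ 1.195 and speedup(0.95, 5, 8, 4, 9) ≈ 1.212.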

15

Conditional Instructions

- A conditional instruction is an instruction that combines a comparison and an ALU operation
  - although we don't want to combine conditions and branches, we can combine conditions and simple ALU operations, such as a register move or a data load
  - if the condition is false, we just cancel the operation before the datum is placed into the destination register

- The advantage: an if statement (without an else clause) needs an explicit branch, but with a conditional instruction, the branch is eliminated

- In MIPS, we have conditional move operations
  - MOVZ R1, R2, R3 (R1 ← R2 if R3 == 0) and MOVN (move if not zero: R1 ← R2 if R3 != 0)
  - although MIPS does not have a conditional load, we can envision one
  - LWC R2, 0(R3), R1 (load 0(R3) into R2 if R1 != 0)
  - these operations will start performing the move or load operation but only store the result in the WB stage if the condition evaluates to true
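The semantics of MOVZ and MOVN can be modeled without a branch by building an all-ones or all-zeros mask from the condition. A C sketch (movz and movn are illustrative names; each returns the new value of the destination register):

```c
#include <assert.h>
#include <stdint.h>

/* rd <- rs if rt == 0, otherwise rd is unchanged (MOVZ rd, rs, rt). */
int64_t movz(int64_t rd, int64_t rs, int64_t rt) {
    int64_t mask = -(int64_t)(rt == 0);   /* all ones when the move happens */
    return (rs & mask) | (rd & ~mask);
}

/* rd <- rs if rt != 0, otherwise rd is unchanged (MOVN rd, rs, rt). */
int64_t movn(int64_t rd, int64_t rs, int64_t rt) {
    int64_t mask = -(int64_t)(rt != 0);
    return (rs & mask) | (rd & ~mask);
}
```

For example, movz(7, 9, 0) returns 9 and movz(7, 9, 3) returns 7.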

16

Examples

- Consider
  - if (a == 0) s = t;
  - R1 stores a
  - R2 stores s
  - R3 stores t

- We can replace

          BNEZ  R1, L
          DADDI R2, R3, 0
    L:

  - with CMOVZ R2, R3, R1

- The CMOV instruction has no branch penalty
  - 1 cycle instead of potentially 3 (including the branch delay)

- Consider a superscalar that can issue a load together with an ALU operation, but not a branch together with an ALU operation
  - this code will incur a stall between the two LW instructions if the branch is not taken
  - if we assume the branch is most often not taken, we can change LW R8, 20(R10) into LWC R8, 20(R10), R10 (LWC is a conditional load) and move it before the BEQZ into the vacant instruction slot

17

Handling Exceptions

- The conditional instruction should not cause an exception during the move or load if its condition is false (in that case, the operation would never have taken place)

- In the previous example, consider
  - LWC R8, 20(R10), R10
  - if R10 is 0, the address is 20 + 0 = 20, which is most likely part of the OS, so starting the load would raise an exception even though the condition is false and the load should never have happened

- Two approaches to handling this problem
  - hardware-software cooperation: when an exception arises, the hardware alerts the OS whether the exception was raised by an ordinary or a speculated instruction
  - poison bits: add a bit to each register and to each instruction, and set the bit for any speculated instruction
    - set a register's poison bit if the instruction writing it is speculated, or if the register was assigned a value computed from a register whose poison bit is set
    - if an exception arises from a correctly speculated instruction, then all registers with set poison bits will have to be reinitialized

18

Hardware for Compiler Speculation

- We can go further with compiler-based speculation by adding hardware to support what the compiler speculates

- Consider the following if-else statement
  - if (a == 0) a = b; else a = a + 4;
  - where a is stored at 0(R3) and b at 0(R2); assume that the condition is true 90% of the time; then the original code

          LW   R1, 0(R3)
          BNEZ R1, L1
          LW   R1, 0(R2)
          J    L2
    L1:   ADDI R1, R1, 4
    L2:   SW   R1, 0(R3)

    can become the following code by adding an extra register (R14)

          LW   R1, 0(R3)
          LW   R14, 0(R2)
          BEQZ R1, L3
          ADDI R14, R1, 4
    L3:   SW   R14, 0(R3)

  - here we use an additional register combined with trace scheduling to make the code execute more efficiently

- Discounting stalls, the original code takes 0.9 * 5 + 0.1 * 4 = 4.9 cycles and the new code takes 0.9 * 4 + 0.1 * 5 = 4.1 cycles, a speedup of 4.9 / 4.1 ≈ 1.195, or almost 20%
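At the source level, the transformation amounts to loading b into a temporary before the test. A C sketch, with hypothetical function names and int standing in for the word in memory:

```c
#include <assert.h>

/* Original: if (a == 0) a = b; else a = a + 4; returns the new a. */
int original(int a, int b) {
    if (a == 0)
        a = b;
    else
        a = a + 4;
    return a;
}

/* Speculated form: b is read into a temporary (R14) before the test,
   and only the rarely taken path overwrites it. */
int speculated(int a, int b) {
    int t = b;          /* LW   R14, 0(R2), hoisted above the branch */
    if (a != 0)         /* BEQZ R1, L3 skips this in the common case */
        t = a + 4;      /* ADDI R14, R1, 4 */
    return t;           /* SW   R14, 0(R3) */
}
```

The hoisted read is harmless here because it cannot change the result; the next slides deal with the case where the early load itself could fault.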

19

Speculative Load and Check

- We can also add two (or more) speculative instructions to the hardware that preserve exception-handling behavior

- From our previous example, where we speculated that the if clause was going to be executed, we can add a speculative load (sLD) and a speculative check (SPECCK) as follows

          LD     R1, 0(R3)
          sLD    R14, 0(R2)   // speculatively load b such that it cannot cause a terminating exception
          BNEZ   R1, L1
          SPECCK 0(R2)        // check the speculation here, at SPECCK
          J      L2
    L1:   DADDI  R14, R1, 4
    L2:   SD     R14, 0(R3)

20

Limitations on Speculated Instructions

- Instructions that are annulled (turned into no-ops) still take execution time

- Conditional instructions are most useful when the condition can be evaluated early
  - such as during the ID stage of our pipeline

- Speculated instructions may cause a slowdown compared to unconditional instructions, requiring either a slower clock rate or a greater number of cycles

- The use of conditional instructions can be limited when the control flow involves more than a simple alternative sequence
  - for example, moving an instruction across multiple branches requires making it conditional on both branches, which requires two conditions to be specified or additional instructions to compute the controlling predicate
  - if such capabilities are not present, the overhead of if-conversion will be larger, reducing its advantage

21

The Intel IA-64

- We wrap up our examination of software support for ILP by examining a processor that relies heavily on it
  - RISC-style, load-store instruction set
  - compiler-speculated instructions, an approach known as EPIC (explicitly parallel instruction computing)
  - a variation on VLIW where the compiler explicitly denotes where instruction parallelism stops due to dependencies
  - 128 integer and 128 FP registers
  - integer registers are 65 bits, to hold a poison bit
  - 64 1-bit predicate registers to store the condition of a predicated instruction (which way was predicted)
  - 8 64-bit branch registers (for indirect branches)
  - register windows, although in this case stored on a stack, for quick parameter passing

22

Bundles

- The compiler builds instruction groups out of individual instructions
  - take the next X instructions and place parallelizable ones together
  - stops are inserted after each group to indicate a limitation in parallelization

- Next, the compiler takes groups and makes VLIW instruction bundles out of them
  - a bundle consists of 3 instructions (128 bits), possibly including no-ops to fill in slots that can't be used because of limitations in ILP
  - if a bundle contains a stop, then the stop is added to the VLIW to indicate that a stall might be necessary

- Instructions are issued to one of 5 units
  - M: memory
  - I: integer
  - F: FP
  - B: branch
  - L+X: extended instructions, which take up 2 slots in a VLIW bundle
  - the hardware can handle up to 2 M or 2 I in one bundle

- These categories help identify legal types of bundles
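The 128-bit bundle format can be sketched in C. The layout used here (a 5-bit template field in bits 0 through 4, which encodes the unit types of the slots and the stop positions, followed by three 41-bit instruction slots) is the IA-64 bundle format; the Bundle type and the helper names are hypothetical, and the code moves one bit at a time for clarity rather than speed.

```c
#include <assert.h>
#include <stdint.h>

/* A 128-bit bundle held as two halves: lo = bits 0..63, hi = bits 64..127. */
typedef struct { uint64_t lo, hi; } Bundle;

#define SLOT_BITS 41
#define SLOT_MASK ((UINT64_C(1) << SLOT_BITS) - 1)

/* Set bits [pos, pos + width) of the bundle to value. */
static void put_bits(Bundle *b, int pos, int width, uint64_t value) {
    for (int k = 0; k < width; k++) {
        int bit = pos + k;
        uint64_t v = (value >> k) & 1;
        uint64_t *half = bit < 64 ? &b->lo : &b->hi;
        int off = bit % 64;
        *half = (*half & ~(UINT64_C(1) << off)) | (v << off);
    }
}

/* Read bits [pos, pos + width) of the bundle. */
static uint64_t get_bits(const Bundle *b, int pos, int width) {
    uint64_t value = 0;
    for (int k = 0; k < width; k++) {
        int bit = pos + k;
        uint64_t half = bit < 64 ? b->lo : b->hi;
        value |= ((half >> (bit % 64)) & 1) << k;
    }
    return value;
}

/* Pack a 5-bit template and three 41-bit slots: 5 + 3 * 41 = 128 bits. */
Bundle make_bundle(unsigned tmpl, uint64_t s0, uint64_t s1, uint64_t s2) {
    Bundle b = {0, 0};
    put_bits(&b, 0, 5, tmpl & 0x1F);
    put_bits(&b, 5, SLOT_BITS, s0 & SLOT_MASK);
    put_bits(&b, 5 + SLOT_BITS, SLOT_BITS, s1 & SLOT_MASK);
    put_bits(&b, 5 + 2 * SLOT_BITS, SLOT_BITS, s2 & SLOT_MASK);
    return b;
}

/* Extract instruction slot 0, 1 or 2. */
uint64_t bundle_slot(const Bundle *b, int slot) {
    assert(slot >= 0 && slot < 3);
    return get_bits(b, 5 + slot * SLOT_BITS, SLOT_BITS);
}
```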

23

Partial Listing of IA-64 Bundles

(Table: partial listing of IA-64 bundle types; see page G-36, figure G.7, for the complete table)

- Heavy lines indicate stops, that is, locations where a stall is required or where the instructions that follow are not parallel

- Some bundles have multiple stops (see 3)

24

Example

- Unroll the x[i] = x[i] + s loop seven times and schedule it for the IA-64 to minimize the number of cycles

First, we must determine the instruction groups; what follows is the unrolled but unscheduled code

    Loop: L.D   F0, 0(R1)
          L.D   F6, -8(R1)
          L.D   F10, -16(R1)
          L.D   F14, -24(R1)
          L.D   F18, -32(R1)
          L.D   F22, -40(R1)
          L.D   F26, -48(R1)
          ADD.D F4, F0, F2
          ADD.D F8, F6, F2
          ADD.D F12, F10, F2
          ADD.D F16, F14, F2
          ADD.D F20, F18, F2
          ADD.D F24, F22, F2
          ADD.D F28, F26, F2
          S.D   F4, 0(R1)
          S.D   F8, -8(R1)
          S.D   F12, -16(R1)
          S.D   F16, -24(R1)
          S.D   F20, -32(R1)
          S.D   F24, -40(R1)
          S.D   F28, -48(R1)
          DADDI R1, R1, -56
          BNE   R1, R2, Loop

Note that the final stop is not necessarily going to be needed, because the IA-64 uses speculation and will branch if the branch target buffer indicates to branch

25

Solution

Most of the stops will not cause stalls because there is adequate distance; however, the final ADD.D is too close to the final S.D, so a stall is required, and the execution cycle jumps from 9 to 11, for a total execution time of 12 cycles

26

Sample Problem 1

- Determine all dependencies (true, output, anti) in the loop below and determine whether the loop is parallelizable

    for (i=0; i<=100; i++) {
        a[i-1] = b[i] + a[i];       //S1
        b[i] = c[i-1] + c[i+1];     //S2
        c[i]++;                     //S3
        a[i] = c[i] + s;            //S4
    }

Output dependence: loop carried on a, between S1 and S4 (S4 writes a[i] in iteration i, and S1 writes the same element, a[(i+1)-1], in iteration i+1)

Antidependences: non-loop-carried on b between S1 and S2, non-loop-carried on a between S1 and S4, and loop carried on a from S1 to S1

True dependences: non-loop-carried on c from S3 to S3, and loop carried on c from S3 to S2 (S2 reads c[i-1], which S3 wrote in the previous iteration)

The loop-carried true dependence makes this loop non-parallelizable

27

Sample Problem 2

- Use the GCD test to determine whether there is a dependency
  - for (i=2; i<=100; i+=2) a[i] = a[50*i+1];
  - first normalize the code, by dividing the for-loop values by 2 and multiplying the array index by 2
  - for (i=1; i<=50; i++) a[2*i] = a[100*i+1];
  - this gives us a = 2, b = 0, c = 100, d = 1
  - GCD(a, c) = 2
  - d - b = 1
  - since 2 does not divide 1, there are no loop-carried dependencies in this loop

- Repeat the same problem with this new loop
  - for (i=2; i<=100; i+=2) a[i] = a[i-1];
  - first normalize the loop
  - for (i=1; i<=50; i++) a[2*i] = a[2*i-1];
  - a = 2, b = 0, c = 2, d = -1
  - GCD(a, c) = 2
  - d - b = -1
  - 2 does not divide -1, so again this loop has no loop-carried dependencies

28

Sample Problem 3

- Consider the following payroll code

    if (hours <= 40) pay = wages * hours;
    else pay = wages * 40 + wages * 1.5 * (hours - 40);

  - first, show how this code would appear in MIPS without speculation; assume F0 is 40.0, F2 is 1.5, F4 is hours and F6 is wages
  - next, assume that the then clause is taken most of the time and rewrite the MIPS with this speculation, using extra registers as needed
  - assume each instruction takes 1 cycle to execute and there are no stalls; if the speculation is correct 95% of the time, how much faster is the speculated code than the original?

29

Solution

Non-speculative code:

          C.LE.D F4, F0
          BC1F   else
          MUL.D  F8, F4, F6
          J      out
    else: MUL.D  F8, F6, F0
          SUB.D  F10, F4, F0
          MUL.D  F10, F10, F6
          MUL.D  F10, F10, F2
          ADD.D  F8, F8, F10
    out:  S.D    F8,

Speculative code:

          C.LE.D F4, F0
          MUL.D  F8, F4, F6
          BC1T   out
    else: MUL.D  F8, F6, F0
          SUB.D  F10, F4, F0
          MUL.D  F10, F10, F6
          MUL.D  F10, F10, F2
          ADD.D  F8, F8, F10
    out:  S.D    F8,

The non-speculative code takes 5 instructions if the then clause is executed and 8 instructions if the else clause is executed, whereas the speculative code takes 4 instructions if the then clause is executed and 9 instructions if the else clause is executed. If the then clause is taken 95% of the time, we have

- speculative: 0.95 * 4 + 0.05 * 9 = 4.25
- non-speculative: 0.95 * 5 + 0.05 * 8 = 5.15
- speedup: 5.15 / 4.25 ≈ 1.212, or a 21% speedup!

30

Sample Problem 4

    if (x != 0) y--; else y++;

- For the if-else statement above
  - write the MIPS code without any speculation
  - write the MIPS code with predicated instructions so that there are no branches
  - write the MIPS code to speculate that the ELSE clause is taken most often
  - which code executes fastest?
  - assume R1 and R2 store x and y

Without speculation:

          BEQZ  R1, else
          DSUBI R2, R2, 1
          J     cont
    else: DADDI R2, R2, 1
    cont:

With predicated instructions:

          DADDI R5, R2, 1      // R5 = y + 1
          DSUBI R6, R2, 1      // R6 = y - 1
          SEQ   R4, R1, R0     // R4 = 1 if x == 0
          CMOVZ R2, R6, R4     // y = y - 1 if x != 0
          CMOVZ R2, R5, R1     // y = y + 1 if x == 0

Speculating the else clause:

          DADDI R2, R2, 1
          BEQZ  R1, cont
          DSUBI R2, R2, 2
    cont:

Non-speculation and speculating the else clause execute the same number of instructions (assuming no stalls): 2 when the else clause is taken and 3 when the then clause is taken, but speculation is superior when the then clause is taken because it avoids the unconditional jump; the predicated instructions take 5 cycles no matter what

31

Sample Problem 4

- Assume that the MUL.D instruction takes 7 cycles to execute

- Perform symbolic loop unrolling and scheduling on the following loop so that no stalls are required to execute the code
  - how much performance increase is there over the code as given below (assume forwarding is available and branches are handled by predict-not-taken), not counting the startup and cleanup code?

    Loop: L.D   F0, 0(R1)
          L.D   F1, 0(R2)
          MUL.D F2, F0, F1
          S.D   F2, 0(R3)
          DSUBI R1, R1, 8
          DSUBI R2, R2, 8
          DSUBI R3, R3, 8
          BNEZ  R3, Loop

32

Solution

          // startup code will require 2 L.D and 1 MUL.D
    Loop: S.D   F2, 16(R3)
          MUL.D F2, F0, F1
          L.D   F0, 0(R1)
          L.D   F1, 0(R2)
          DSUBI R1, R1, 8
          DSUBI R2, R2, 8
          DSUBI R3, R3, 8
          BNEZ  R3, Loop
          // cleanup code will require 1 MUL.D and 2 S.D

The original code would contain 1 stall after the second L.D, 6 stalls after the MUL.D, 1 stall after the third DSUBI, and the branch delay slot, so 9 stalls per iteration. This code has no stalls (outside of the startup and cleanup code), so the loop takes 8 cycles versus 17 cycles (8 instructions plus 9 stalls), a speedup of 17 / 8 ≈ 2.125 (over 100%!)

33

Sample Problem 5

- Rewrite the given code without branches by using predicated instructions (conditional moves and conditional loads)

    if (a > b) x = 1;
    else if (c < d) x = 2;
    else x = 3;

  - assume a, b, c, d and x are stored in R1, R2, R3, R4 and R5, and R11, R12, R13 store 1, 2 and 3

Consider that CMOVZ and CMOVN don't tell us whether a previous condition was true or false, so we have to organize our code cleverly: set x to the final alternative first and let the higher-priority conditions overwrite it, the highest priority last

Solution:

          DADD  R5, R0, R13    // initialize x to 3
          SLT   R20, R3, R4    // R20 = 1 if c < d, else 0
          SLT   R21, R2, R1    // R21 = 1 if b < a (that is, a > b), else 0
          CMOVN R5, R12, R20   // reset x to 2 if c < d
          CMOVN R5, R11, R21   // reset x to 1 if a > b (overrides the previous move)
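The solution's logic can be checked exhaustively over small inputs. This C sketch (select_x and cmovn are hypothetical names; cmovn models the CMOVN semantics and may itself compile to a branch) follows the five instructions one for one:

```c
#include <assert.h>
#include <stdint.h>

/* rd <- rs if rt != 0, otherwise rd is unchanged (models CMOVN rd, rs, rt). */
static int64_t cmovn(int64_t rd, int64_t rs, int64_t rt) {
    return rt != 0 ? rs : rd;
}

/* Branch-free form of: if (a > b) x = 1; else if (c < d) x = 2; else x = 3; */
int64_t select_x(int64_t a, int64_t b, int64_t c, int64_t d) {
    int64_t x = 3;                  /* DADD  R5, R0, R13 */
    int64_t c_lt_d = c < d;         /* SLT   R20, R3, R4 */
    int64_t a_gt_b = b < a;         /* SLT   R21, R2, R1 */
    x = cmovn(x, 2, c_lt_d);        /* CMOVN R5, R12, R20 */
    x = cmovn(x, 1, a_gt_b);        /* CMOVN R5, R11, R21: highest priority last */
    return x;
}
```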

34

Sample Problem 6

- Unroll and schedule the bundles for the IA-64 for the loop that performs a[i] = b[i] + c[i], such that the code executes in as few cycles as possible