A note about gradient descent

1

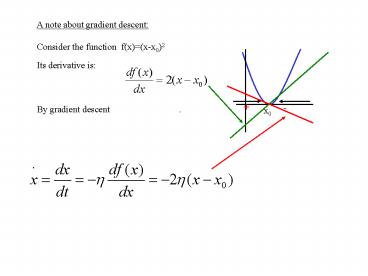

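A note about gradient descent

Consider the function $f(x) = (x - x_0)^2$. Its derivative is $f'(x) = 2(x - x_0)$.

By gradient descent, with learning rate $\eta$: $\frac{dx}{dt} = -\eta \frac{df}{dx} = -2\eta\,(x - x_0)$.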

2

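Solving the differential equation $\frac{dx}{dt} = -2\eta\,(x - x_0)$, with learning rate $\eta$,

or in the general form $\frac{dx}{dt} = -a x + b$.

What is the solution of this type of equation?

Try $x(t) = A e^{-a t} + B$. Substituting gives $B = b/a = x_0$, so $x$ relaxes exponentially to the minimum $x_0$.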
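The relaxation toward $x_0$ can be checked numerically. The following is a minimal Python sketch, not part of the original slides: it iterates the discrete gradient descent update for $f(x) = (x - x_0)^2$ and compares the result with the exponential solution of the continuous equation. The learning rate `eta`, the starting point and the function names are illustrative assumptions.

```python
import math

def gradient_descent(x_start, x0, eta, steps):
    """Iterate x <- x - eta * f'(x) for f(x) = (x - x0)**2."""
    x = x_start
    trajectory = [x]
    for _ in range(steps):
        grad = 2.0 * (x - x0)   # derivative of (x - x0)**2
        x = x - eta * grad      # step against the gradient
        trajectory.append(x)
    return trajectory

def continuous_solution(x_start, x0, eta, t):
    """Solution of dx/dt = -2*eta*(x - x0): exponential relaxation to x0."""
    return x0 + (x_start - x0) * math.exp(-2.0 * eta * t)

# Assumed example values: start at x = 5, minimum at x0 = 1.
traj = gradient_descent(x_start=5.0, x0=1.0, eta=0.01, steps=500)
print("discrete  :", traj[-1])
print("continuous:", continuous_solution(5.0, 1.0, 0.01, 500))
```

For a small learning rate the two values agree closely, because each discrete step multiplies the distance to $x_0$ by $(1 - 2\eta)$, which approximates $e^{-2\eta}$.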

3

THE PERCEPTRON (Classification)

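Threshold unit: $o^\mu = \Theta\left(\sum_i w_i x_i^\mu\right)$,

where $o^\mu$ is the output for input pattern $x^\mu$, $w_i$ are the synaptic weights and $y^\mu$ is the desired output.

AND

[Figure: threshold unit with input weights w1 to w5]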

4

AND

Linearly separable

5

OR

Linearly separable

6

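Perceptron learning rule: $\Delta w_i = \eta\,(y^\mu - o^\mu)\,x_i^\mu$, where $\eta$ is the learning rate, $y^\mu$ is the desired output and $o^\mu$ is the actual output for input pattern $x^\mu$.

Convergence proof: Hertz, Krogh, Palmer (HKP). Handout 1, read at home.

Assignment 3a: program a perceptron in MATLAB with the perceptron learning rule and solve the OR, AND and XOR problems. (Due before Feb 2; send it to me by email at harel.shouval_at_uth.tmc.edu.)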
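The perceptron learning rule can be sketched in a few lines. This is a minimal illustration in Python rather than the MATLAB the assignment asks for, and it is not the author's code: the bias handled as an extra weight on a constant input of 1, the learning rate of 1, the zero initial weights and the epoch limit are all assumptions made for the example.

```python
def step(h):
    """Threshold function: 1 if the summed input is positive, else 0."""
    return 1 if h > 0 else 0

def predict(w, x):
    xb = list(x) + [1]   # constant input 1 carries the bias weight
    return step(sum(wi * xi for wi, xi in zip(w, xb)))

def train_perceptron(patterns, targets, eta=1.0, max_epochs=100):
    """Return (weights, converged) after applying the perceptron rule."""
    w = [0.0] * (len(patterns[0]) + 1)
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(patterns, targets):
            o = predict(w, x)
            if o != y:
                errors += 1
                xb = list(x) + [1]
                # delta w_i = eta * (desired - actual) * input_i
                w = [wi + eta * (y - o) * xi for wi, xi in zip(w, xb)]
        if errors == 0:   # a full pass with no mistakes: done
            return w, True
    return w, False

X = [(0, 0), (0, 1), (1, 0), (1, 1)]
for name, targets in [("AND", [0, 0, 0, 1]),
                      ("OR", [0, 1, 1, 1]),
                      ("XOR", [0, 1, 1, 0])]:
    w, converged = train_perceptron(X, targets)
    print(name, "converged:", converged)
```

AND and OR are linearly separable, so the rule converges. XOR is not, so no weight vector classifies all four patterns and the loop stops only at the epoch limit.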

7

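Linear single layer network (approximation, curve fitting)

Linear unit: $o^\mu = \sum_i w_i x_i^\mu$, or $o^\mu = \mathbf{w} \cdot \mathbf{x}^\mu$,

where $o^\mu$ is the output for input pattern $x^\mu$, $w_i$ are the synaptic weights and $y^\mu$ is the desired output.

Minimize mean square error: $E = \frac{1}{2}\sum_\mu (y^\mu - o^\mu)^2$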

9

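The best solution is obtained when $E$ is minimal. For linear neurons there is an exact solution, called the pseudo-inverse (see HKP).

Looking for a solution by gradient descent: $\Delta w_i = -\eta \frac{\partial E}{\partial w_i}$

[Figure: $E$ plotted against $w$, with an arrow along the negative gradient]

Chain rule: $\frac{\partial E}{\partial w_i} = \sum_\mu \frac{\partial E}{\partial o^\mu} \frac{\partial o^\mu}{\partial w_i}$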

10

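With $E = \frac{1}{2}\sum_\mu (y^\mu - o^\mu)^2$: $\frac{\partial E}{\partial o^\mu} = -(y^\mu - o^\mu)$ and $\frac{\partial o^\mu}{\partial w_i} = x_i^\mu$, since $o^\mu = \sum_i w_i x_i^\mu$. Here $(y^\mu - o^\mu)$ is the error.

Therefore $\Delta w_i = \eta \sum_\mu (y^\mu - o^\mu)\, x_i^\mu$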
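The resulting update can be sketched as batch gradient descent on a linear unit. This Python example is an illustration only, not the author's code: the target line $y = 2x + 1$, the bias term `b`, the learning rate and the division by the number of patterns (which only rescales $\eta$) are assumptions.

```python
def train_linear_unit(xs, ys, eta=0.5, epochs=1000):
    """Fit o = w*x + b by gradient descent on the squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        dw = db = 0.0
        for x, y in zip(xs, ys):
            err = y - (w * x + b)   # error: desired minus actual output
            dw += err * x           # error times input
            db += err               # bias sees a constant input of 1
        w += eta * dw / n
        b += eta * db / n
    return w, b

# Assumed example data: 11 points on the line y = 2x + 1 over [0, 1].
xs = [i / 10 for i in range(11)]
ys = [2 * x + 1 for x in xs]
w_fit, b_fit = train_linear_unit(xs, ys)
print("w =", w_fit, "b =", b_fit)
```

Because the error surface of a linear unit is quadratic in the weights, gradient descent with a small enough learning rate reaches the same solution the pseudo-inverse would give.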

Which types of problems can a linear network

solve?

11

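Sigmoidal neurons: $o^\mu = \sigma\left(\sum_i w_i x_i^\mu\right)$,

for example $\sigma(h) = \frac{1}{1 + e^{-h}}$.

Which types of problems can a sigmoidal network solve?

Assignment 3b: implement a one-layer linear network and a one-layer sigmoidal network. Fit a 1D linear function, a sigmoid and a quadratic function with both networks.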
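For a sigmoidal unit the gradient picks up an extra factor $\sigma'(h)$ from the chain rule. The sketch below is a hedged Python illustration, not the assignment solution: it assumes the logistic function, for which $\sigma'(h) = o(1 - o)$, and fits a single unit to samples of an assumed target $\sigma(3x - 1)$. The bias `b`, learning rate and epoch count are also assumptions.

```python
import math

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def mean_error(w, b, xs, ys):
    """Mean of the squared error 0.5 * (y - o)**2 over all patterns."""
    return sum(0.5 * (y - sigmoid(w * x + b)) ** 2
               for x, y in zip(xs, ys)) / len(xs)

def train_sigmoid_unit(xs, ys, eta=0.5, epochs=5000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        dw = db = 0.0
        for x, y in zip(xs, ys):
            o = sigmoid(w * x + b)
            delta = (y - o) * o * (1.0 - o)   # error times sigma'(h)
            dw += delta * x
            db += delta
        w += eta * dw / n
        b += eta * db / n
    return w, b

xs = [i / 5.0 - 2.0 for i in range(21)]     # 21 points on [-2, 2]
ys = [sigmoid(3.0 * x - 1.0) for x in xs]   # assumed target function
w_fit, b_fit = train_sigmoid_unit(xs, ys)
print("error before:", mean_error(0.0, 0.0, xs, ys))
print("error after :", mean_error(w_fit, b_fit, xs, ys))
```

The same unit cannot fit a quadratic target well, since a single sigmoid is monotonic in its input.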

12

Multi-layer networks

- Can solve non-linearly-separable classification problems.
- Can approximate any arbitrary function, given enough units in the hidden layer.

[Figure: network with an input layer, a hidden layer and an output layer]

13

Note: the weights $w_{jk}$ form a matrix, not a vector.

14

Solving linearly inseparable problems

XOR

Hint: XOR = OR and not AND
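The hint translates directly into a two-layer network of threshold units: one hidden unit computes OR, another computes AND, and the output fires when OR is on and AND is off. The Python sketch below is illustrative only; its weights and thresholds are hand-picked assumptions and are not necessarily those used in the slides.

```python
def theta(h):
    """Threshold function: 1 if the summed input is positive, else 0."""
    return 1 if h > 0 else 0

def xor_net(x1, x2):
    h_or = theta(x1 + x2 - 0.5)        # hidden unit 1: OR
    h_and = theta(x1 + x2 - 1.5)       # hidden unit 2: AND
    return theta(h_or - h_and - 0.5)   # output: OR and not AND

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", xor_net(x1, x2))
```

Each hidden unit solves a linearly separable problem on its own; the output unit then separates the hidden-layer patterns, which the input patterns did not allow.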

15

XOR

[Figure: two-layer threshold network that solves XOR, with its weights and thresholds labeled]

How do we learn a multi-layer network? The credit assignment problem!

16

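Gradient descent / back-propagation: the solution to the credit assignment problem

$E = \frac{1}{2}\sum_\mu (y^\mu - o^\mu)^2$

Where $o^\mu = \sigma(h^\mu)$ with $h^\mu = \sum_j W_j V_j^\mu$ is the network output, and $V_j^\mu = \sigma(h_j^\mu)$ with $h_j^\mu = \sum_k w_{jk} x_k^\mu$ is the activity of hidden unit $j$.

For hidden layer to output weights: $\Delta W_j = -\eta \frac{\partial E}{\partial W_j} = \eta \sum_\mu \delta^\mu V_j^\mu$, with $\delta^\mu = (y^\mu - o^\mu)\,\sigma'(h^\mu)$

17

For input to hidden layer: $\Delta w_{jk} = -\eta \frac{\partial E}{\partial w_{jk}} = \eta \sum_\mu \delta_j^\mu x_k^\mu$

Where $\delta_j^\mu = \delta^\mu\, W_j\, \sigma'(h_j^\mu)$

and $\delta^\mu = (y^\mu - o^\mu)\,\sigma'(h^\mu)$

and $h_j^\mu = \sum_k w_{jk} x_k^\mu$

18

For input to hidden layer: $\Delta w_{jk} = \eta \sum_\mu (y^\mu - o^\mu)\,\sigma'(h^\mu)\, W_j\, \sigma'(h_j^\mu)\, x_k^\mu$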

Assignment 3c: program a 2-layer network in MATLAB and solve the XOR problem. Fit the curve $x(x-1)$ between 0 and 1. How many hidden units did you need?
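Back-propagation for a network with one hidden layer can be sketched as follows. This is a minimal Python illustration rather than the MATLAB solution the assignment asks for, and several choices are assumptions: logistic units in both layers, biases handled by appending a constant 1 to the inputs and to the hidden activities, batch updates, and the names `w` (input to hidden) and `W` (hidden to output).

```python
import math
import random

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def forward(w, W, x):
    """Return inputs with bias, hidden activities with bias, and the output."""
    xb = list(x) + [1.0]
    V = [sigmoid(sum(wjk * xk for wjk, xk in zip(row, xb))) for row in w]
    Vb = V + [1.0]
    o = sigmoid(sum(Wj * Vj for Wj, Vj in zip(W, Vb)))
    return xb, Vb, o

def error(w, W, data):
    return 0.5 * sum((y - forward(w, W, x)[2]) ** 2 for x, y in data)

def gradients(w, W, data):
    """Back-propagation: dE/dw and dE/dW, summed over all patterns."""
    gw = [[0.0] * len(row) for row in w]
    gW = [0.0] * len(W)
    for x, y in data:
        xb, Vb, o = forward(w, W, x)
        delta = (y - o) * o * (1.0 - o)      # output delta
        for j in range(len(W)):
            gW[j] -= delta * Vb[j]
        for j, row in enumerate(w):
            # hidden delta: output delta passed back through W[j]
            delta_j = delta * W[j] * Vb[j] * (1.0 - Vb[j])
            for k in range(len(row)):
                gw[j][k] -= delta_j * xb[k]
    return gw, gW

def train(data, n_hidden=2, eta=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    W = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    for _ in range(epochs):
        gw, gW = gradients(w, W, data)
        w = [[a - eta * g for a, g in zip(row, grow)] for row, grow in zip(w, gw)]
        W = [a - eta * g for a, g in zip(W, gW)]
    return w, W

xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
w, W = train(xor_data)
print("final error:", error(w, W, xor_data))
```

Whether training reaches a good XOR solution depends on the random initial weights and the number of hidden units, which is part of what the assignment asks you to explore.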

19

- Formal neural networks can accomplish many tasks, for example:
- Perform complex classification
- Learn arbitrary functions
- Account for associative memory
- Some applications: robotics, character recognition, speech recognition, medical diagnostics.
- This is not neuroscience, but it is motivated loosely by neuroscience and carries important information for neuroscience as well.
- For example, memory, learning and some aspects of development are assumed to be based on synaptic plasticity.

20

What did we learn today?

Is BackProp biologically realistic?